1 Introduction to Speech Synthesis

advertisement

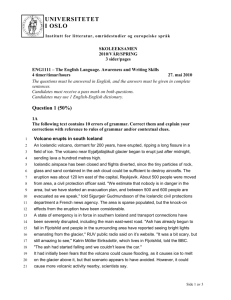

GSLT: Speech Technology 1 Fall semester 2001 Teacher: Björn Granström Speech Synthesis for Icelandic Auður Þórunn Rögnvaldsdóttir Table of Contents Table of Contents ........................................................................................................... 3 1 Introduction to Speech Synthesis ........................................................................... 4 1.1 What is Speech Synthesis .............................................................................. 4 1.2 The History of Speech Synthesis ................................................................... 4 1.3 Prosody Control ............................................................................................. 4 1.4 Different Parts of Speech Synthesizers .......................................................... 4 1.4.1 Deriving Pronunciation From Text ........................................................ 5 1.4.2 The Speech Signal Generation ............................................................... 5 2 Speech Synthesizers for Icelandic ......................................................................... 6 2.1 Introduction .................................................................................................... 6 2.2 The synthesizer program ................................................................................ 6 2.2.1 Older version .......................................................................................... 6 2.2.2 Newer version ........................................................................................ 6 2.3 Current Usability ............................................................................................ 7 2.4 Future Usability ............................................................................................. 7 2.5 Accent (Dialect) ............................................................................................. 7 2.6 History of the Icelandic Speech Synthesizer ................................................. 7 2.7 Experiment ..................................................................................................... 8 2.8 Evaluation ...................................................................................................... 8 2.8.1 Prominence ............................................................................................ 9 2.8.2 Structure ................................................................................................. 9 2.8.3 Tune ....................................................................................................... 9 2.8.4 Language Accent ................................................................................. 10 2.8.5 Vowels ................................................................................................. 10 2.8.6 Fricatives and Plosives ......................................................................... 10 2.8.7 Concatenated Words ............................................................................ 11 2.8.8 Length .................................................................................................. 11 2.8.9 Sound ................................................................................................... 11 2.8.10 Extra Noise........................................................................................... 12 2.8.11 Other Aspects ....................................................................................... 12 3 Conclusions .......................................................................................................... 12 4 Appendix A .......................................................................................................... 13 5 References ............................................................................................................ 15 12.2.2016 3 Speech Syntesis for Icelandic 1 Introduction to Speech Synthesis 1.1 What is Speech Synthesis Speech synthesis is about converting written text to speech. That is, producing computer and electronic software that can analyse text, produce a phonetic transcription and from that produce a speech output. 1.2 The History of Speech Synthesis The first speech synthesizers were made for English in the 1970s. These were not very useful for other languages because it was not possible to control all phonetic aspects. In 1983 a speech synthesizer was marketed, that was developed with the aim of being used for other languages than English. The synthesizer was marketed by Infovox, but made by Björn Granström and Rolf Carlson at KTH in Stockholm and therefore Swedish was its first language. It has since been “taught” various other languages, such as French, German, Spanish, Norwegian and Danish. The Organization of Disabled in Iceland saw this as an opportunity for blind and disabled people and in June 1989 work started on adapting the Swedish synthesizer to Icelandic. 1.3 Prosody Control It has been found that a very important aspect of speech synthesizers is how they handle things like audible change in pitch, loudness, syllable length, rhythm, tempo, accent, intonation and stress. This is called prosody control. Prosody changes the way we read sentences and can change the meaning of the sentence. In so-called tone languages, such as Chinese and most African languages, the pitch level of a word is an essential feature in the meaning of the word. Punctuation does give some hint as to the prosody of a sentence, but it isn’t nearly enough. The focus in the sentence, its main verb and a shared knowledge of the world also play a part in generating the prosody of a sentence. 1.4 Different Parts of Speech Synthesizers Text-to-Speech synthesis consists of two major parts: Converting normal text into phonetic transcription and converting this phonetic transcription into parameters that can drive the actual synthesis of speech. Figure 1: A general diagram of a text-to-speech synthesizer 12.2.2016 4 Speech Syntesis for Icelandic 1.4.1 Deriving Pronunciation From Text Converting text to phonemes is not an easy task, as the pronunciation of words generally differs from their spelling. For example, the pronunciation of the same character differs depending on the environment it appears in. Different characters or character sequences can also have the same pronunciation, like the sh in dish and the t in action. Moreover the pronunciation of words in a sentence differs from the pronunciation of single word utterances. There are two basic strategies of converting text into phonetic transcription. Dictionary-based Solutions Dictionary-based solutions consist of storing a maximum of phonological knowledge in a lexicon. To keep the size reasonably small, entries generally consist of morphemes and the pronunciation of surface forms is accounted for by inflectional, derivational and compounding rules. Words that are not found in the lexicon are transcribed by rule. Rule-based Solutions Rule-based solutions on the other hand consist mainly of letter-to-sound (graphemeto-phoneme) rules. Words with pronunciations that don’t fit these rules are then stored in an exception dictionary. 1.4.2 The Speech Signal Generation After the text has been converted to phonetic transcription, this transcription is used to produce a signal mimicking that of human speech. There are three different approaches to speech synthesis: Articulatory Synthesizers Articulatory synthesizers are physical models of the human speech production system. This was the earliest method that was tried for speech synthesis, but has not been much used, since it proved hard and expensive to make. Rule-based Synthesizers (Formant Synthesizers) In a preliminary phase to rule-based synthesis a large number of speech data is collected and analysed. Then rules are made from the speech data deciding the values of the speech parameters, such as duration and formant frequency values. Speech generation is then done by using these rules to model the main acoustic properties of the speech signal. Rule base synthesizers are always formant synthesizers. These synthesizers describe speech using mostly parameters related to formant and antiformant frequencies and bandwidths, as well as glottal waveforms. Concatenation-based Synthesizers Preliminary stages to concatenation-based synthesis include selecting segments to use and then completing a list of words including at least one occurrence of each of these segments. The speech data is recorded and the segments identified. The segment waveforms and the corresponding segmental information are then stored. 12.2.2016 5 Speech Syntesis for Icelandic To generate speech signals the stored segments are recalled and arranged in order to generate the sound sequences needed for the speech. The prosody of the segments is then matched to the required prosody of the generated speech. The segments should be easily connectible, they should account for as many coarticulatory effects as possible and their number and length should be as small as possible. The most widely used segments are diphones. Diphones are units that begin in the middle of the stable state of a phone and end in the middle of the following one. Other segments are half-syllables and triphones, which are units that begin in the middle of a phone, include completely the next phone and end in the middle of the third one. 2 Speech Synthesizers for Icelandic 2.1 Introduction As already mentioned, the first speech synthesizer for Icelandic was made in 1989-90 and was intended for the handicapped. It was of the formant-based type. It has served its purpose well since, but cannot be said to be usable for other purposes. Recently a new version of the speech synthesizer has been released. This version uses diphone analysis. I very recently managed to get a copy of it to try it out. It is very interesting to look at the two models in parallel. The most interesting of course is to hear if the new model sounds more natural than the older model. Another speculation that has been brought up is that in the formant-based version it is possible to make it read faster or slower depending on the needs of the listener. This is important for the handicapped that use the speech synthesizer for what others call fast reading or scanning. People have speculated if this is possible with the diphone-based version. It would be very interesting to try this. 2.2 The synthesizer program A demo version of both synthesizers can be downloaded at http://www.infovox.se/tdemo.htm. The speech synthesizer for Icelandic uses a rule-based solution to derive phonetical transcription from normal text. 2.2.1 Older version The speech synthesizer is formant based. There are 5 different types of speakers: Icelandic Child, Icelandic Female, Icelandic Male, Icelandic Giant and Icelandic Zombie. The giant and the zombie are both missing a part of the voice and sound as if something is missing in the voice. The child voice does not sound very well and the female voice sounds as if the frequency of the male voice has been turned up, which is probably the case. My conclusion is therefore that the Icelandic Male voice is the best to listen to. This seems also to be the case for most people who use the system, they mostly use this voice. 2.2.2 Newer version This version of the speech synthesizer is diphone based. There exists one male speaker, called Snorri. 12.2.2016 6 Speech Syntesis for Icelandic 2.3 Current Usability The synthesizer has been used by blind and disabled people in Iceland and has helped them a lot. They can surf the net, use computers without a special screen for the blind, which is expensive and ties them to a specific workstation. They can also use it to verify the text they type, and read books and other texts without having to wait for them to be published as audio books or blind transcript books. They also use it to skim through text in a similar way to the seeing by making it read the text at high speed. A special computer screen for the blind is very expensive and so the speech synthesizer can save a lot of money. 2.4 Future Usability By improving the synthesizer it would be of even more use to the blind and disabled. It could also be used as a teaching aid for children with reading and learning disabilities. They would then be able to practice on their own with the machine, without needing to have a person assisting them at all times. It could be used in distance learning and in IT teaching material as well as for teaching in general. A speech synthesizer that sounded natural enough and that could produce different voices could be used for dubbing of movies and television programs. This would be extremely useful especially for children and people with poor vision as they are not able to read the subtitles. 2.5 Accent (Dialect) One can hardly say that there exist dialects in Iceland. However, there is a definite difference in pronunciation between the north and the south. In the north consonants are voiced in many circumstances whereas they are mostly unvoiced in the south. An interesting question when designing speech synthesizers is whether one should stick to one dialect, and then which, or whether the dialects can be mixed. In the original Icelandic version from 1989 the northern dialect was used because of the formant-based synthesizer’s inability to produce an unvoiced n. In this version the decision was also made not to mix different dialects. The diphone-based synthesizer is however able to produce an unvoiced n and therefore could be made to speak with a southern dialect, should that be desired. 2.6 History of the Icelandic Speech Synthesizer As already mentioned The Organization of Disabled in Iceland saw opportunity in the Swedish synthesizer in 1989 and started work on an Icelandic version. Prior to that there had however been some research on speech synthesis for Icelandic. In 1985 Kjartan R. Guðmundsson wrote his final thesis in computer technology at the University of Iceland (HÍ) on possibilities for making a speech synthesizer for Icelandic. In continuation of this thesis, work was started to build such a system. In June 1986 the work was abandoned, as people found out that it was not possible to adapt the American speech synthesizer they had looked at to Icelandic. At this time the Swedish synthesizer was mostly unknown to people in Iceland. 12.2.2016 7 Speech Syntesis for Icelandic In June 1989 work started in adapting the Swedish synthesizer to Icelandic. In charge of the project were Guðrún Hannesdóttir, Höskuldur Þráinsson and Páll Jensson, who was the project leader. A student, Pétur Helgason was employed to do the work, and he was assisted by Kjartan R. Guðmundsson. The Icelandic speech synthesizer uses a rule-based method to convert the text into phonetic descriptions. The speech synthesis itself was formant-based in this earlier version of the synthesizer. It is worth mentioning that the synthesizer itself is not based on studies of Icelandic, but is common for all languages. A specific problem with Icelandic is that some numerals (1, 2, 3 and 4) change their gender and cases depending on the word they refer to. The speech synthesizer cannot decide the gender and case of these numerals and therefore all numerals except years are read as masculine. Inflectional endings and concatenations were one of the larger concerns in the making of the Icelandic synthesizer. The pronunciation of character sequences differs depending on if they contain a morpheme boundary or not. The stress is also different in concatenated words. Icelandic is an inflectional language and therefore this is a bigger problem than for many other languages. The solution thought of for this problem, but not included in the system at that time, was to include a filter that divides the concatenated words into their individual word parts. The correct pronunciation for each word part could then be found and applied individually for each part. At first a lot of abbreviations were included. As the system was used it was however discovered that there were too many of them, as the synthesizer would read too many things as abbreviations. Kr would be read “krónur”, which was not good if the text was talking about the sports club KR. It would read “mín” as mínútur, so “Búkolla mín” (My Búkolla) in the following text would become “Búkolla mínútur” (Búkolla minutes). This lead to many of the abbreviations being removed from the system three years ago. 2.7 Experiment The text used to try the synthesizer is an old Icelandic fairy tale called Búkolla. It is about a farmer’s boy who is searching for his parent’s cow who they love more than their son. The text can be found in appendix A. 2.8 Evaluation The most obvious shortcoming of the synthesizer as of now is that it sounds computerized and its speech therefore does not sound normal. This is however the problem with all synthesizers and not unique for the Icelandic synthesizer. Its speech does sound a little more unclear than English speech synthesizers I have heard. The reason could be that it is harder to produce the speech sounds of Icelandic. Another reason is perhaps also that the Icelandic speaking community is much smaller than the English speaking one and therefore there are fewer resources available to improve a speech synthesizer for Icelandic than an English one. In the following I listened to the Icelandic speech synthesizers with the attitude of a normal Icelandic speaker. The aspects I noted are how the generated speech sounds to the general Icelandic speaker and what could be improved in that connection. I also 12.2.2016 8 Speech Syntesis for Icelandic compared the two versions of the synthesizer, the formant-based version and the diphone-based version. I use the International Phonetic Alphabet (IPA) phonetic transcription to transcribe the pronunciations in the following examples. 2.8.1 Prominence Prominence is a broad term used to cover stress and accent. In a natural sentence in Icelandic, certain syllables are more prominent than others. These syllables are said to be accented. These accented syllables may be prominent because they are louder, longer, associated with a change in the fundamental frequency, or a combination of these factors. A stress pattern or rhythm is the series of stressed and unstressed syllables that make up a sentence. As the system doesn’t understand the text it is reading, it is difficult for it to decide on the rhythm of the sentences. The only things it can count on are punctuation marks such as points, commas, question marks, etc. This makes it hard for the system to compute the correct rhythm for the text it is reading. The rhythm of the sentences is often unnatural. Small words as “í, á, um…” are handled as if they were unaccented, which is more often the case than not. However, when they are spoken without stress where they should be with accented, the sentence sounds strange. 2.8.2 Structure Some words in a sentence seem to group naturally together, whereas others seem to have a noticeable break between them. This is called the prosodic structure of the sentence. It is well known that it is hard to get a natural sounding flow of sentences with a speech synthesizer. This is also apparent in the Icelandic version. In the formant-based version there is a pause between “Búkolla” and “mín” in paragraph 5, where there shouldn't be a pause. This pause has disappeared in the diphone-based model. An interesting point is also that in Icelandic the use of punctuation marks has decreased over the past years, which makes it harder for the system to decide upon the right rhythm. When there is a comma in the text, it is read as if it were a list, which is often a good choice. However, where there is a comma and it isn’t a list this makes the sentence sound strange. An example from the text is the sentence “Hann gekk lengi, lengi, þangað til…” 2.8.3 Tune The meaning of two utterances is different if they have different tunes. Tune refers to the intonational melody of an utterance. The most important part of intonational tunes is the change in fundamental frequency. In the sentence “Þá segir hann: "Hvað eigum við nú að gera, Búkolla mín?"” the fundamental frequency rises a lot at the “mín” in the end of the sentence. This happens in both the formant-based and the diphone-based version and sounds really unnatural. 12.2.2016 9 Speech Syntesis for Icelandic 2.8.4 Language Accent Icelandic dialects In the word “stelpa”, the l is voiced in the formant-based version [], this version of the synthesizer talks with a northern dialect. In the diphone-based version the word has an unvoiced l [], and so now has a southern dialect. However, the formant-based version sounded more like a northern dialect than the diphonebased version sounds like a southern dialect. I cannot quite place my finger on it, but it has something to do with the sound of the e. Foreign accents Sometimes one could imagine that the synthesizer talks with a Swedish accent. I think it is more the flow and stress in the sentences as a whole and not in each word that gives this feeling. Two sentences I noticed that gave me this feeling were: “Skömmu seinna kom hún út aftur til að vitja um kúna.” This sentence sounds similar in both versions of the synthesizer. “Þegar hann er kominn nokkuð á veg,” This sentence sounds more Icelandic in the diphone-based version. 2.8.5 Vowels I noticed a difference in the two versions’ pronunciation of the following vowels and diphthongs. The y in “stygg” [] sounds better in the diphone-based version. In the formantbased version it sounds a bit too Swedish. The ei in “lengi” [] sounds better in the formant-based model, but the ei in “gengur” [] sounds better in the diphone-based model. This seems a bit strange and I cannot explain why this seems to be the case. 2.8.6 Fricatives and Plosives I tried making the system spell out single letters and came to the conclusion that most of the sounds were similar in both versions of the synthesizer except for the letters n, r and t. I found that these letters sound better in the formant-based system. They sound more “Swedish” in the diphone-based system, especially the n and the r, the t sounds strange but I’m not familiar enough with Swedish to be able to assert that it sounds Swedish. Most Icelandic speakers pronounce the word “bálki” with an unvoiced l []. The diphone-based version of the synthesizers did this as well. The formant-based version however, pronounced it as “banki” with a voiced n []. I noticed two cases with plosives that did not sound correctly. The first is the word “ekki” [], which in the format-based version sounded more like “ehhi” []. In the diphone-based version however, it sounds more normal. The second case was the word “tröllskessa” which I expected to be read as “trödlskessa” [] with the plosive d followed by an unvoiced l. Both versions of the synthesizer however read “tröllskessa” [], with a 12.2.2016 10 Speech Syntesis for Icelandic long voiced l. In this particular word this pronunciation is not the correct one, but ll is pronounced as a long l in many foreign words, such as ball, gella and bolla, as well as in pet names, such as Palli, Kalli and Alla. 2.8.7 Concatenated Words Concatenated words are a known problem in speech synthesis, as they don’t follow the same pronunciation rules as other words. Their pronunciation is closer to that of each part on its own than of the two (or more) parts concatenated. Therefore, these words and their special pronunciational rules have to be specifically listed in a lexicon in the system. The word “jafnnær” is pronounced “jabbær” [] instead of “jabnær” [] by both the versions of the synthesizer. The word “jafnvel” [] is however pronounced correctly in both versions. At first I thought this was because “jafn-nær” is a concatenated word and did not exist in the system's lexicon, leading to the rule stating that fn is to be pronounced pn [] being used and the second n ignored. I do not however believe that this is the case, because even if this rule was used, there should still be an n in the pronunciation. 2.8.8 Length The lenght of vowels and consonants in accented syllables has been studied and is included in the systems. However, unaccented syllables have not been studied thoroughly and therefore the model for length used in the systems is somewhat lacking. It would also be helpful to study pauses in Icelandic, their length and their placement in utterances. 2.8.9 Sound As we know speech synthesizers do not sound quite like a human voice. One can always hear the difference. In general a formant synthesizer sounds a bit “mechanical”, whereas a diphone synthesizer sounds more natural. In both cases the flow of the speech is however a bit unnatural. What is perhaps most striking in both systems is that the utterances sound like a row of independent words rather than whole sentences. They also have a relative amount of wrong syllable length and pause length. The prosodic structure of the utterances will have to be reasonably better for the “mechanical” sound of the systems to be acceptably small. Another aspect that adds to the “mechanical” feeling of the sounds has to do with the fact that the systems produce a “neutral declarative” speech. This is to say that the output is monotonic, and lacks the relevant intonational tunes. This is done on purpose, since without real-world information and semantic knowledge of the text it is difficult to know which tune to apply. The “mechanical sound” of the formant-based system becomes quite annoying after a while of listening to it. This is a bit better in the diphone-based version. Here the system sounds more like a human voice. However, the unnatural flow of the voice is still a bit irritating in the long run. 12.2.2016 11 Speech Syntesis for Icelandic 2.8.10 Extra Noise Sometimes the adaptation of the sounds results in some extra noise in the speech synthesizer output. In the diphone based version there is some extra sound in the background in the sentence “Heyrir hann þá, að Búkolla baular, dálítið nær en í fyrra sinn.” This is audible in “heyrir” and in “fyrra sinn”. This background noise is not present in this particular sentence in the formant-based version. 2.8.11 Other Aspects It was brought to my attention that the formant-based system reads “Jóhannsson” [] as “Jóhannon” []. The diphone-based system is able to read this correctly. The synthesizer is not intelligent enough to know to switch between languages in the text, as it obviously is only able to read the text and not understand it. It will therefore read English text within an Icelandic text with an Icelandic accent unless expressively told otherwise. This could be improved with a language guesser that determined the language of the text before attempting to transcribe it to speech. At TextCat homepage, http://odur.let.rug.nl/~vannoord/TextCat/competitors.html, is a list of existing language guessers and many of them can recognize Icelandic. 3 Conclusions Some work needs still to be done until the speech synthesizer can be useful for other than the blind and disabled who are already using it. The main problem is that the synthesizer does not yet sound natural enough to be likely to be accepted by people without handicaps. This is also true for many other synthesizers and languages. The diphone-based system has made the synthesizer more pleasant to listen to, which is an important aspect for the ordinary user. As many of the system’s shortcomings are in the sounds and the flow it could be argued that what needs to be done at this point to improve the system is to look at the pronunciation and length rules and improve them. My experiments with the two types of synthesizers lead me to the conclusion that the diphone based synthesizer can indeed be made to read faster or slower depending on the needs of the listener. My feeling is that there is even longer until it can be used to simulate different kinds of voices sufficiently well to be widely used. 12.2.2016 12 Speech Syntesis for Icelandic 4 Appendix A Búkolla. Einu sinni voru karl og kerling í koti sínu. Þau áttu einn son, en þótti ekkert vænt um hann. Ekki voru fleiri menn en þau þrjú í kotinu. Eina kú áttu þau karl og kerling; það voru allar skepnurnar. Kýrin hét Búkolla. Einu sinni bar kýrin, og sat kerlingin sjálf yfir henni. En þegar kýrin var borin og heil orðin, hljóp kerling inn í bæinn. Skömmu seinna kom hún út aftur til að vitja um kúna. En þá var hún horfin. Fara þau nú bæði, karlinn og kerlingin, að leita kýrinnar og leituðu víða og lengi, en komu jafnnær aftur. Voru þau þá stygg í skapi og skipuðu stráknum að fara og koma ekki fyrir sín augu aftur, fyrr en hann kæmi með kúna; bjuggu þá strák út með nesti og nýja skó, og nú lagði hann á stað eitthvað út í bláinn. Hann gekk lengi, lengi, þangað til hann settist niður og fór að éta. Þá segir hann: "Baulaðu nú, Búkolla mín, ef þú ert nokkurs staðar á lífi." Þá heyrir hann, að kýrin baular langt, langt í burtu. Gengur karlsson enn lengi, lengi. Sest hann þá enn niður til að éta og segir: "Baulaðu nú, Búkolla mín, ef þú ert nokkurs staðar á lífi." Heyrir hann þá, að Búkolla baular, dálítið nær en í fyrra sinn. Enn gengur karlsson lengi, lengi, þangað til hann kemur fram á fjarskalega háa hamra. Þar sest hann niður til að éta og segir um leið : "Baulaðu nú, Búkolla mín, ef þú ert nokkurs staðar á lífi." Þá heyrir hann, að kýrin baular undir fótum sér. Hann klifrast þá ofan hamrana og sér í þeim helli mjög stóran. Þar gengur hann inn og sér Búkollu bundna undir bálki í hellinum. Hann leysir hana undir eins og leiðir hana út á eftir sér og heldur heimleiðis. Þegar hann er kominn nokkuð á veg, sér hann, hvar kemur ógnarstór tröllskessa á eftir sér og önnur minni með henni. Hann sér, að stóra skessan er svo stórstíg, að hún muni undir eins ná sér. Þá segir hann: "Hvað eigum við nú að gera, Búkolla mín?" Hún segir: "Taktu hár úr hala mínum, og leggðu það á jörðina." Hann gjörir það. Þá segir kýrin við hárið: "Legg ég á, og mæli ég um, að þú verðir að svo stórri móðu, að ekki komist yfir nema fuglinn fljúgandi." Í sama bili varð hárið að ógnastórri móðu. Þegar skessan kom að móðunni, segir hún: "Ekki skal þér þetta duga, strákur. Skrepptu heim, stelpa," segir hún við minni skessuna, "og sæktu stóra nautið hans föður míns." Stelpan fer og kemur með ógnastórt naut. Nautið drakk undir eins upp alla móðuna. Þá sér karlsson, að skessan muni þegar ná sér, því hún var svo stórstíg. 12.2.2016 13 Speech Syntesis for Icelandic Þá segir hann: "Hvað eigum við nú að gera, Búkolla mín?" "Taktu hár úr hala mínum, og leggðu það á jörðina," segir hún. Hann gerir það. Þá segir Búkolla við hárið: "Legg ég á, og mæli ég um, að þú verðir að svo stóru báli, að enginn komist yfir nema fuglinn fljúgandi." Og undir eins varð hárið að báli. Þegar skessan kom að bálinu, segir hún: "Ekki skal þér þetta duga, strákur. Farðu og sæktu stóra nautið hans föður míns, stelpa," segir hún við minni skessuna. Hún fer og kemur með nautið. En nautið meig þá öllu vatninu, sem það drakk úr móðunni, og slökkti bálið. Nú sér karlsson, að skessan muni strax ná sér, því hún var svo stórstíg. Þá segir hann: "Hvað eigum við nú að gera, Búkolla mín?" "Taktu hár úr hala mínum, og leggðu það á jörðina," segir hún. Síðan segir hún við hárið: "Legg ég á, og mæli ég um, að þú verðir að svo stóru fjalli, sem enginn kemst yfir nema fuglinn fljúgandi." Varð þá hárið að svo háu fjalli, að karlsson sá ekki nema upp í heiðan himininn. Þegar skessan kemur að fjallinu, segir hún: "Ekki skal þér þetta duga, strákur. Sæktu stóra borjárnið hans föður míns, stelpa!" segir hún við minni skessuna. Stelpan fer og kemur með borjárnið. Borar þá skessan gat á fjallið, en varð of bráð á sér, þegar hún sá í gegn, og tróð sér inn í gatið, en það var of þröngt, svo hún stóð þar föst og varð loks að steini í gatinu, og þar er hún enn. En karlsson komst heim með Búkollu sína, og urðu karl og kerling því ósköp fegin. 12.2.2016 14 Speech Syntesis for Icelandic 5 References Dutoit, Thierry. 1997. An Introduction to Text-to-Speech Synthesis. Kluwer Academic Publishers, The Netherlands. Jurafsky, Daniel, & James H. Martin. 2000. Speech and Language Processing. An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition. Prentice Hall, New Jersey. Klatt D. 1987. Review of text-to-speech conversion for English. Journal of the Acoustical Society of America, Vol.82. p. 737-793. Pétur Helgason. 1990. Lokaskýrsla verkefnis um tölvutal. Málvísindastofnun HÍ, Verkfræðideild HÍ, Öryrkjabandalagið. Varile, Giovanni Battista & Antonio Zampolli (editors). 1997. Survey of the State of the Art in Human Language Technology. Linguistica Computazionale, Volume XII – XIII. Giardini Editori e Stampatori, Pisa. p. 165-197. 12.2.2016 15 Speech Syntesis for Icelandic