ICANN02 tutorial - Electrical and Computer Engineering


SIGNAL and IMAGE DENOISING

USING VC-LEARNING THEORY

Vladimir Cherkassky

Dept. Electrical & Computer Eng.

University of Minnesota

Minneapolis MN 55455 USA

Email cherkass@ece.umn.edu

Tutorial at ICANN-2002

Madrid, Spain, August 27, 2002


OUTLINE

1. BACKGROUND

Predictive Learning

VC Learning Theory

VC-based model selection

2. SIGNAL DENOISING as FUNCTION ESTIMATION

Principal Issues for Signal Denoising

VC framework for Signal Denoising

Empirical comparisons:

synthetic signals

ECG denoising

3. IMPROVED VC-BASED SIGNAL DENOISING

Estimating VC-dimension implicitly

FMRI Signal Denoising

2D Image Denoising


4. SUMMARY and DISCUSSION

1. PREDICTIVE LEARNING and VC THEORY

• The problem of predictive learning:

GIVEN past data + reasonable assumptions

ESTIMATE unknown dependency for future predictions

• Driven by applications (NOT theory):

medicine

biology: genomics

financial engineering (e.g., program trading, hedging)

signal/ image processing

data mining (for marketing)

........

• Math disciplines:

function approximation

pattern recognition

statistics

optimization …….


MANY ASPECTS of PREDICTIVE LEARNING

• MATHEMATICAL / STATISTICAL

foundations of probability/statistics and function

approximation

• PHILOSOPHICAL

• BIOLOGICAL

• COMPUTATIONAL

• PRACTICAL APPLICATIONS

• Many competing methodologies

BUT lack of consensus (even on basic issues)


STANDARD FORMULATION for PREDICTIVE LEARNING

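[Figure: block diagram of the learning setting. The Generator of X-values supplies X to the System, which outputs y, and to the Learning machine, which outputs f*(X)]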

• LEARNING as function estimation = choosing the ‘best’ function

from a given set of functions [Vapnik, 1998]

• Formal specs:

Generator of random X-samples from unknown probability distribution

System (or teacher) that provides output y for every X-value according to (unknown) conditional distribution P(y|X)

Learning machine that implements a set of approximating functions

f(X, w) chosen a priori where w is a set of parameters


• The problem of learning/estimation:

Given finite number of samples (Xi, yi), choose from a given set of

functions f(X, w) the one that approximates best the true output

Loss function L(y, f(X,w)) a measure of discrepancy (error)

Expected Loss (Risk)

$R(w) = \int L(y, f(X, w)) \, dP(X, y)$

The goal is to find the function f(X, wo) that minimizes R(w) when the

distribution P(X,y) is unknown.

Important special cases

(a) Classification (Pattern Recognition)

output y is categorical (class label)

approximating functions f(X,w) are indicator functions

For two-class problems common loss

L(y, f(X,w)) = 0 if y = f(X,w)

L(y, f(X,w)) = 1 if y ≠ f(X,w)

(b) Function estimation (regression)

output y and f(X,w) are real-valued functions

Commonly used squared loss measure:

$L(y, f(X,w)) = [y - f(X,w)]^2$

relevant for signal processing applications (denoising)
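The two loss functions above can be illustrated on a finite sample. The sketch below is not from the slides: it assumes NumPy, and the helper names (`zero_one_loss`, `squared_loss`, `empirical_risk`) and the toy data are hypothetical. Since P(X,y) is unknown, the expected risk R(w) cannot be computed; what can be computed is the average loss over the available samples (the empirical risk, written Remp later in the slides).

```python
import numpy as np

def zero_one_loss(y, f):
    """0/1 loss for classification: 0 where y == f, 1 otherwise."""
    return (np.asarray(y) != np.asarray(f)).astype(float)

def squared_loss(y, f):
    """Squared loss for regression: (y - f)^2."""
    return (np.asarray(y, dtype=float) - np.asarray(f, dtype=float)) ** 2

def empirical_risk(loss, y, f):
    """Average loss over a finite sample (a stand-in for the unknown R(w))."""
    return float(np.mean(loss(y, f)))

# Toy two-class example: one of four labels is predicted incorrectly.
labels = [0, 1, 1, 0]
predicted = [0, 1, 0, 0]
risk_cls = empirical_risk(zero_one_loss, labels, predicted)

# Toy regression example.
y = [1.0, 2.0, 3.0]
f = [1.0, 2.5, 2.0]
risk_reg = empirical_risk(squared_loss, y, f)
```

Here `risk_cls` is the fraction of misclassified samples and `risk_reg` is the mean squared error of the fit.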

WHY VC-THEORY?

• USEFUL THEORY (!?)

- developed in 1970’s

- constructive methodology (SVM) in mid 90’s

- wide acceptance of SVM in late 90’s

- wide misunderstanding of VC-theory

• TRADITIONAL APPROACH to LEARNING

GIVEN past data + reasonable assumptions

ESTIMATE unknown dependency for future predictions

(a) Develop practical methodology (CART, MLP etc.)

(b) Justify it using theoretical framework (Bayesian,

ML, etc.)

(c) Report results; suggest heuristic improvements


(d) Publish paper (optional)

VC LEARNING THEORY

• Statistical theory for finite-sample estimation

• Focus on predictive non-parametric formulation

• Empirical Risk Minimization approach

• Methodology for model complexity control (SRM)

• VC-theory unknown/misunderstood in statistics

References on VC-theory:

V. Vapnik, The Nature of Statistical Learning Theory, Springer 1995


V. Vapnik, Statistical Learning Theory, Wiley, 1998

V. Cherkassky and F. Mulier, Learning From Data: Concepts, Theory and

Methods, Wiley, 1998


CONCEPTUAL CONTRIBUTIONS of VC-THEORY

• Clear separation between

problem statement

solution approach (i.e. inductive principle)

constructive implementation (i.e. learning algorithm)

- all 3 are usually mixed up in application studies.

• Main principle for solving finite-sample problems

Do not solve a given problem by indirectly solving a

more general (harder) problem as an intermediate step

- usually not followed in statistics, neural networks and

applications.

Example: maximum likelihood methods

• Worst-case analysis for learning problems

Theoretical analysis of learning should be based on the

worst-case scenario (rather than average-case).


STATISTICAL vs VC-THEORY APPROACH

GENERIC ISSUES in Predictive Learning:

• Problem Formulation

Statistics: density estimation

VC-theory: application-dependent

Signal Denoising ~ real-valued function estimation

• Possible/ admissible models

Statistics: linear expansion of basis functions

VC-theory: structure

Signal Denoising ~ orthogonal bases

• Model selection (complexity control)

Statistics: resampling, analytical (AIC, BIC etc)

VC-theory: resampling, analytical (VC-bounds)

Signal Denoising ~ thresholding using statistical models


Structural Risk Minimization (SRM) [Vapnik, 1982]

• SRM Methodology (for model selection)

GIVEN training data and a set of possible models (aka approximating

functions)

(a) introduce nested structure on approximating functions

$S_0 \subset S_1 \subset S_2 \subset \dots$

Note: elements $S_k$ are ordered according to increasing complexity

Examples (of structures):

- basis function expansion (i.e., algebraic polynomials)

- penalized structure (penalized polynomial)

- feature selection (sparse polynomials)

(b) minimize Remp on each element Sk (k=1,2...) producing increasingly

complex solutions (models).

(c) Select optimal model (using VC-bounds on prediction risk), where

optimal model complexity ~ minimum of VC upper bound.


MODEL SELECTION for REGRESSION

• COMMON OPINION

VC-bounds are not useful for practical model selection

References:

C. Bishop, Neural Networks for Pattern Recognition.

B. D. Ripley, Pattern Recognition and Neural Networks

T. Hastie et al, The Elements of Statistical Learning

• SECOND OPINION

VC-bounds work well for practical model selection

when they can be rigorously applied.

References:

V. Cherkassky and F. Mulier, Learning from Data

V. Vapnik, Statistical Learning Theory

V. Cherkassky et al.(1999), Model selection for

regression using VC generalization bounds, IEEE Trans.

Neural Networks 10, 5, 1075-1089

• EXPLANATION (of contradiction)

NEED: understanding of VC theory + common sense


ANALYTICAL MODEL SELECTION for regression

• Standard Regression Formulation

$y = g(x) + \text{noise}$

• STATISTICAL CRITERIA

- Akaike Information Criterion (AIC)

$AIC(d) = R_{emp}(d) + \frac{2d}{n}\hat{\sigma}^2$

where d = (effective) DoF

- Bayesian Information Criterion (BIC)

$BIC(d) = R_{emp}(d) + (\ln n)\frac{d}{n}\hat{\sigma}^2$

AIC and BIC require noise estimation (from data):

$\hat{\sigma}^2 = \frac{n}{n-d} \cdot \frac{1}{n}\sum_{i=1}^{n}(y_i - \hat{y}_i)^2$
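As a worked illustration of the two statistical criteria, here is a minimal sketch assuming NumPy. It takes AIC(d) = Remp(d) + (2d/n)·σ̂² and BIC(d) = Remp(d) + (ln n)(d/n)·σ̂², with the noise variance estimated as n/(n−d) times the mean squared residual. The function names and the four-point data set are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import numpy as np

def noise_variance(y, y_hat, d):
    """Noise estimate: n/(n-d) times the mean squared residual of a fit with d DoF."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    n = y.size
    return (n / (n - d)) * np.mean((y - y_hat) ** 2)

def aic(r_emp, d, n, sigma2):
    """AIC(d) = R_emp(d) + (2d/n) * sigma^2."""
    return r_emp + (2.0 * d / n) * sigma2

def bic(r_emp, d, n, sigma2):
    """BIC(d) = R_emp(d) + (ln n) * (d/n) * sigma^2."""
    return r_emp + np.log(n) * (d / n) * sigma2

# Hypothetical data: four observations and the fitted values of a model with d = 2.
y = np.array([1.0, 2.0, 3.0, 4.0])
y_hat = np.array([1.1, 1.9, 3.2, 3.8])
d, n = 2, y.size

r_emp = float(np.mean((y - y_hat) ** 2))   # empirical risk (fitting error)
sigma2 = noise_variance(y, y_hat, d)       # estimated noise variance
aic_value = aic(r_emp, d, n, sigma2)
bic_value = bic(r_emp, d, n, sigma2)
```

Both criteria add a penalty proportional to d/n to the fitting error, and both depend on the separate noise estimate σ̂².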


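• VC-bound on prediction risk

General form (for regression)

$R(h) \le R_{emp}(h)\left(1 - c\sqrt{\frac{h\left(\ln\frac{an}{h} + 1\right) - \ln\eta}{n}}\right)_+^{-1}$

Practical form

$R(h) \le R_{emp}(h)\left(1 - \sqrt{\frac{h}{n} - \frac{h}{n}\ln\frac{h}{n} + \frac{\ln n}{2n}}\right)_+^{-1}$

where h is VC-dimension (h = effective DoF in practice)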

NOTE: the goal is NOT accurate estimation of RISK

• Common sense application of VC-bounds requires

- minimization of empirical risk

- accurate estimation of VC-dimension

first, model selection for linear estimators;

second, comparisons for nonlinear estimators.
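A minimal sketch of VC-based model selection for a linear estimator, assuming NumPy. It uses the practical form of the bound, R(h) ≤ Remp(h) · (1 − sqrt(p − p ln p + ln n / (2n)))₊⁻¹ with p = h/n. The function names, the polynomial estimator, the sine-squared target and the noise level are illustrative assumptions rather than the experimental setup of the tutorial; the VC-dimension is taken as the number of polynomial coefficients, in line with "h = effective DoF in practice" for linear estimators.

```python
import numpy as np

def vc_penalization(h, n):
    """Factor 1 / (1 - sqrt(p - p*ln(p) + ln(n)/(2n)))_+ with p = h/n."""
    p = h / n
    root = np.sqrt(p - p * np.log(p) + np.log(n) / (2.0 * n))
    # (.)_+ : when the bracket is not positive the bound is vacuous (infinite).
    return np.inf if root >= 1.0 else 1.0 / (1.0 - root)

def select_poly_degree(x, y, max_degree):
    """Pick the polynomial degree that minimizes R_emp times the VC factor."""
    n = len(x)
    best_degree, best_bound = 0, np.inf
    for degree in range(max_degree + 1):
        h = degree + 1  # assumption: VC-dimension = number of free parameters
        coeffs = np.polyfit(x, y, degree)           # minimizes empirical risk
        r_emp = np.mean((y - np.polyval(coeffs, x)) ** 2)
        factor = vc_penalization(h, n)
        if np.isfinite(factor) and r_emp * factor < best_bound:
            best_degree, best_bound = degree, r_emp * factor
    return best_degree, best_bound

# Hypothetical noisy training data from a sine-squared target.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 30)
y = np.sin(2 * np.pi * x) ** 2 + 0.2 * rng.standard_normal(x.size)
degree, bound = select_poly_degree(x, y, max_degree=8)
```

The bound is used only to rank models of different complexity; as the slide notes, the goal is not an accurate estimate of the risk itself.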


EMPIRICAL COMPARISON

• COMPARISON METHODOLOGY

- specify an estimator for regression (i.e.,

polynomials, k-nn, subset selection…)

- generate noisy training data

- select optimal model complexity for this data

- record prediction error as

MSE (model, true target function)

REPEAT model selection experiments many times for

different random realizations of training data

DISPLAY empirical distrib. of prediction error (MSE) using

standard box plot notation

NOTE: this methodology works only with synthetic data


• COMPARISONS for linear methods/ low-dimensional

Univariate target functions

(a) Sine squared function $\sin^2(2\pi x)$

[Figure: plot of the sine-squared target function on [0, 1]]

(b) Piecewise polynomial

[Figure: plot of the piecewise polynomial target function on [0, 1]]

• COMPARISONS for sine-squared target function,

polynomial regression

[Figure: box plots of Risk (MSE) and selected DoF for AIC, BIC and SRM; (a) small size n=30, σ=0.2; (b) large size n=100, σ=0.2]

• COMPARISONS for piecewise polynomial function,

Fourier basis regression

[Figure: box plots of Risk (MSE) and selected DoF for AIC, BIC and SRM; (a) n=30, σ=0.2; (b) n=30, σ=0.4]

2. SIGNAL DENOISING as FUNCTION ESTIMATION

[Figure: two example signal plots; horizontal axes 0 to 1000 and 0 to 100]

SIGNAL DENOISING PROBLEM STATEMENT

• REGRESSION FORMULATION

Real-valued function estimation (with squared loss)

Signal Representation:

$y = \sum_i w_i g_i(x)$

linear combination of orthogonal basis functions

• DIFFERENCES (from standard formulation)

- fixed sampling rate (no randomness in X-space)

- training data X-values = test data X-values

• SPECIFICS of this formulation

computationally efficient orthogonal estimators, i.e.

- Discrete Fourier Transform (DFT)

- Discrete Wavelet Transform (DWT)


ISSUES FOR SIGNAL DENOISING

• MAIN FACTORS for SIGNAL DENOISING

- Representation (choice of basis functions)

- Ordering (of basis functions)

- Thresholding (model selection, complexity control)

Ref: V. Cherkassky and X. Shao (2001), Signal estimation and

denoising using VC-theory, Neural Networks, 14, 37-52

• For finite-sample settings:

Thresholding and ordering are most important

• For large-sample settings:

representation is most important

• CONCEPTUAL SIGNIFICANCE

- wavelet thresholding = sparse feature selection

- nonlinear estimator suitable for ERM


VC FRAMEWORK for SIGNAL DENOISING

• ORDERING of (wavelet) coefficients = STRUCTURE on orthogonal basis functions in

$f(x, w) = \sum_i w_i g_i(x)$

Traditional ordering

$|w_0| \ge |w_1| \ge \dots \ge |w_{n-1}|$

Better ordering

$\frac{|w_0|}{f_0} \ge \frac{|w_1|}{f_1} \ge \dots \ge \frac{|w_{n-1}|}{f_{n-1}}$

Issues: other (good) orderings, e.g. for 2D signals

• VC THRESHOLDING (MODEL SELECTION)

optimal number of basis functions ~ minimum of VC-bound

$R(h) \le R_{emp}(h)\left(1 - \sqrt{\frac{h}{n} - \frac{h}{n}\ln\frac{h}{n} + \frac{\ln n}{2n}}\right)_+^{-1}$

where h is VC-dimension (h = effective DoF in practice)

usually h = m (the number of chosen wavelets or DoF)

Issues: estimating VC-dimension as a function of DoF
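The VC thresholding step can be sketched as follows, assuming NumPy, an orthonormal basis, and h = m. For an orthonormal basis, the fitting error of the model that keeps the first m coefficients of an ordering equals the energy of the discarded coefficients divided by n, so the bound can be scanned over m without reconstructing the signal. The function names and the coefficient vector are hypothetical; the ordering key defaults to coefficient magnitude and can be replaced by any other ranking.

```python
import numpy as np

def vc_penalization(h, n):
    """Practical VC factor 1 / (1 - sqrt(p - p*ln(p) + ln(n)/(2n)))_+, p = h/n."""
    p = h / n
    root = np.sqrt(p - p * np.log(p) + np.log(n) / (2.0 * n))
    return np.inf if root >= 1.0 else 1.0 / (1.0 - root)

def vc_threshold(coeffs, order_key=None):
    """Return indices of the coefficients kept at the minimum of the VC bound.

    coeffs    : coefficients of the noisy signal in an orthonormal basis
    order_key : ranking values, larger = earlier (default: |coefficient|)
    """
    w = np.asarray(coeffs, dtype=float)
    n = w.size
    key = np.abs(w) if order_key is None else np.asarray(order_key, dtype=float)
    order = np.argsort(-key, kind="stable")      # the structure S_1, S_2, ...
    energy = w[order] ** 2
    kept_energy = np.cumsum(energy)
    total = kept_energy[-1]
    best_m, best_bound = 0, np.inf
    for m in range(1, n):
        r_emp = max(total - kept_energy[m - 1], 0.0) / n   # fitting error
        factor = vc_penalization(m, n)                     # assumes h = m
        if np.isfinite(factor) and r_emp * factor < best_bound:
            best_m, best_bound = m, r_emp * factor
    return order[:best_m]

# Hypothetical coefficients: three large ones on top of a small constant-magnitude floor.
n = 64
w = 0.1 * (-1.0) ** np.arange(n)
w[:3] += np.array([10.0, -8.0, 6.0])
kept = vc_threshold(w)
```

In this toy case the bound is minimized by keeping only the three large coefficients. With data-driven orderings and real noise, setting h = m can be optimistic, which is the issue raised in the last line above.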


WAVELET REPRESENTATION

• Wavelet basis functions : symmlet wavelet

[Figure: plot of a symmlet wavelet on [0, 1]]

In decomposition $f(x, w) = \sum_i w_i g_i(x)$

basis functions are $g_{s,c}(x) = \frac{1}{\sqrt{s}}\,\psi\!\left(\frac{x - c}{s}\right)$

where s is a scale parameter and c is a translation parameter.

$f_m(x, w) = \sum_{i=1}^{m-1} w_i\,\psi\!\left(\frac{x - c_i}{s_i}\right) + w_0$

Commonly use wavelets with fixed scale and translation parameters:

$s_j = 2^{-j}$, where j = 0, 1, 2, …, J-1

$c_k(j) = k\,2^{-j}$, where k = 0, 1, 2, …, $2^j - 1$

So the basis function representation has the form:

$f(x, w) = \sum_j \sum_k w_{jk}\,\psi(2^j x - k)$
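As a consistency check on the dyadic form, the short derivation below assumes scales $s_j = 2^{-j}$ and translations $c_k(j) = k\,2^{-j}$, and writes the mother wavelet as $\psi$ (a notational assumption). Substituting into the general basis function argument gives the dyadic argument, and counting the basis functions over all scales shows why a signal of $n = 2^J$ samples is matched by exactly n coefficients.

```latex
\frac{x - c_k(j)}{s_j} \;=\; \frac{x - k\,2^{-j}}{2^{-j}} \;=\; 2^{j}x - k
\qquad\Longrightarrow\qquad
\psi\!\left(\frac{x - c_k(j)}{s_j}\right) = \psi\!\left(2^{j}x - k\right),

\sum_{j=0}^{J-1} 2^{j} \;=\; 2^{J} - 1
\quad\text{wavelet terms, plus the constant term } w_0,
\text{ gives } 2^{J} = n \text{ coefficients.}
```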


DISCRETE WAVELET TRANSFORM

Given $n = 2^J$ samples $(x_i, y_i)$ on [0,1] interval

Calculate n wavelet coefficients in

$y = \sum_{i=1}^{n} w_i \psi_i$

Specify ordering of coefficients $\{w_i\}$, so that an estimate

$\hat{y}_m(x) = \sum_{i=1}^{m} w_i \psi_i$,

specifies an element of VC structure.

Empirical risk (fitting error) for this estimate:

$\| y(x) - \hat{y}_m(x) \|^2$

Determine optimal element $\hat{m}_{opt}$ (model complexity)

Obtain denoised signal from $\hat{m}_{opt}$ coefficients via inverse DWT.
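The transform, truncate and invert steps can be sketched with a hand-written orthonormal Haar transform, assuming NumPy. The Haar wavelet is swapped in here only because it fits in a few lines; the tutorial's experiments use symmlet wavelets. The function names, the eight-sample signal and the choice m = 3 are hypothetical. The sketch also checks a property that makes this setting computationally convenient: for an orthonormal transform, the fitting error of the m-term estimate equals the energy of the dropped coefficients.

```python
import numpy as np

def haar_dwt(y):
    """Orthonormal Haar DWT of a length-2^J signal (coarsest coefficients first)."""
    a = np.asarray(y, dtype=float)
    details = []
    while a.size > 1:
        even, odd = a[0::2], a[1::2]
        details.append((even - odd) / np.sqrt(2.0))   # detail coefficients
        a = (even + odd) / np.sqrt(2.0)               # smooth (approximation) part
    return np.concatenate([a] + details[::-1])

def haar_idwt(w):
    """Inverse of haar_dwt."""
    w = np.asarray(w, dtype=float)
    a, pos = w[:1], 1
    while pos < w.size:
        d = w[pos:pos + a.size]
        pos += a.size
        out = np.empty(2 * a.size)
        out[0::2] = (a + d) / np.sqrt(2.0)
        out[1::2] = (a - d) / np.sqrt(2.0)
        a = out
    return a

# Hypothetical signal with n = 2^3 samples.
y = np.array([4.0, 6.0, 10.0, 12.0, 8.0, 8.0, 0.0, 2.0])
w = haar_dwt(y)

m = 3                                             # model complexity (kept coefficients)
order = np.argsort(-np.abs(w), kind="stable")     # ordering by magnitude
w_m = np.zeros_like(w)
w_m[order[:m]] = w[order[:m]]
y_m = haar_idwt(w_m)                              # m-term estimate of the signal

fit_error = np.sum((y - y_m) ** 2)                # || y - y_m ||^2
dropped_energy = np.sum(w[order[m:]] ** 2)        # energy of discarded coefficients
```

Because `fit_error` and `dropped_energy` coincide, the empirical risk for every m can be read off the sorted coefficients without running the inverse transform each time.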


Example: Hard and Soft Wavelet Thresholding

Wavelet Thresholding Procedure:

1) Given noisy signal obtain its wavelet coefficients $w_i$.

2) Order coefficients according to magnitude

$|w_0| \ge |w_1| \ge \dots \ge |w_{n-1}|$

3) Perform thresholding on coefficients $w_i$ to obtain $\hat{w}_i$.

4) Obtain denoised signal from $\hat{w}_i$ using inverse transform

Details of Thresholding:

For Hard Thresholding:

$\hat{w}_i = w_i$ if $|w_i| > t$

$\hat{w}_i = 0$ if $|w_i| \le t$

For Soft Thresholding:

$\hat{w}_i = \mathrm{sgn}(w_i)\,(|w_i| - t)_+$

Threshold value t is taken as:

$t = \sigma\sqrt{2 \ln n}$ (a.k.a. VISU method)

where $\sigma^2$ is estimated noise variance.

Another method for selecting threshold is SURE.
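The two thresholding rules and the VISU threshold can be written in a few lines. This is a minimal sketch assuming NumPy; the function names and the sample coefficients are hypothetical, and `sigma` is assumed to be the noise standard deviation (the square root of the estimated noise variance). Soft thresholding is written with the usual positive-part convention, so coefficients below the threshold are set to zero rather than sign-flipped.

```python
import numpy as np

def visu_threshold(sigma, n):
    """VISU threshold t = sigma * sqrt(2 ln n) for n samples."""
    return sigma * np.sqrt(2.0 * np.log(n))

def hard_threshold(w, t):
    """Keep coefficients with |w| > t unchanged, set the rest to zero."""
    w = np.asarray(w, dtype=float)
    return np.where(np.abs(w) > t, w, 0.0)

def soft_threshold(w, t):
    """Shrink every coefficient toward zero by t; small ones become zero."""
    w = np.asarray(w, dtype=float)
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

# Hypothetical coefficients and threshold.
w = np.array([3.0, -0.5, 1.2, -2.0])
t = 1.0
hard = hard_threshold(w, t)
soft = soft_threshold(w, t)

t_visu = visu_threshold(sigma=1.0, n=128)
```

Hard thresholding leaves the surviving coefficients untouched, while soft thresholding also reduces their magnitude by t; both discard the same set of coefficients for a given t.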


EMPIRICAL COMPARISONS for denoising

• SYNTHETIC SIGNALS

true (target) signal is known

easy to make comparisons between methods

[Figure: Blocks and Heavisine target signals, y versus t on [0, 1]]

• REAL-LIFE APPLICATIONS

true signal is unknown

methods’ comparison becomes tricky


COMPARISONS for Blocks and Heavisine signals

• Experimental setup

Data set: 128 noisy samples with SNR = 2.5

Prediction accuracy: NRMS error (truth, estimate)

Estimation methods:

VISU(soft thresh), SURE(hard thresh) and SRM

Note: SRM = VC Thresholding = Vapnik's Method (VM)

All methods use symmlet wavelets.

[Figure: symmlet 'mother' wavelet on [0, 1]]

• Comparison results

[Figure: box plots comparing the visu, sure and vm methods; panels: NRMS for Blocks Function, DOF for Blocks Function, NRMS for Heavisine Function, DOF for Heavisine Function]

VISU method

SURE method

VC-based denoising

ELECTRO-CARDIOGRAM (ECG) DENOISING

Ref: V. Cherkassky and S. Kilts (2001), Myopotential denoising of ECG signals using wavelet thresholding methods, Neural Networks, 14, 1129-1137

Observed ECG = ECG + myopotential_noise


CLEAN PORTION of ECG:


NOISY PORTION of ECG


RESULT of VC-BASED WAVELET DENOISING

Sample size 1024, chosen DOF = 76, MSE = 1.78e5

Reduced noise, better identification of P, R, and T waves

vs SOFT THRESHOLDING (best wavelet method)

DOF = 325, MSE = 1.79e5

NOTE: MSE values refer to the noisy section only


3. IMPROVED VC-BASED SIGNAL DENOISING

Need correct VC-dim when using VC-bounds

VC-dimension for nonlinear estimators

VC-dimension = DoF holds true only for linear estimators

Wavelet thresholding = nonlinear estimator

Estimate ‘correct’ VC-dimension as:

h

1

m

How to find good δ value ?

Standard VC-denoising: VC-dimension = DoF (h = m)

Improved VC-denoising: using ‘good’ δ value for estimating VC-dimension in VC-bounds

EMPIRICAL APPROACH for ESTIMATING VC-DIM.

An IDEA: use known form of VC-bound to estimate optimal dependency δopt(m,n) for several synthetic signals.

HYPOTHESIS: if VC-bounds are good, then using estimated dependency δopt(m,n) will provide good denoising accuracy for all other signals as well.

IMPLEMENTATION: For a given known noisy signal

- using specific δ in VC-bound yields DoF value m*(δ);

- we can find opt. DoF value mopt (since the target is known)

- then opt. δ can be found from equality mopt ~ m*(δ)

- the problem is dependence on random noisy signal.

STABLE DEPENDENCY between δopt and mopt

stable relation exists independent of noisy signal

$\delta_{opt} = \delta_{opt}(m_{opt}, n)$

If mopt/n is less than 0.2, the relation is linear:

$\delta_{opt}(m_{opt}, n) = a_0(n) + a_1(n)\,\frac{m_{opt}}{n}$, if $\frac{m_{opt}}{n} < 0.2$

EMPIRICAL RESULTS

Estimation of the relation between δopt and mopt.

Target Signals Used

[Figure: target signals used, f(x) versus x on [0, 1]: (a) doppler, (b) heavisine, (c) spires, (d) dbsin]

Sample Size: 512 pts and 2048 pts

SNR Range: 2-20dB (for 512 pts), 2-40dB (for 2048 pts)

Linear Approximation

[Figure: empirical data and linear approximation of δopt versus mopt/n; (a) n = 512, (b) n = 2048]

VC-dimension Estimation

VC-dimension as a function of DoF(m) for ordering:

$\frac{|w_0|}{f_0} \ge \frac{|w_1|}{f_1} \ge \dots \ge \frac{|w_{n-1}|}{f_{n-1}}$

for n = 2048 samples

[Figure: estimated VC-dimension h versus DoF m, n = 2048 pts]

NOTE: when m/n is small (< 5%) then h ≈ m.

PROCEDURE for ESTIMATING δopt

For a given noisy signal, find an intersection between

- stable dependency and

- signal-dependent curve

[Figure: estimated dependency and signal-dependent curve plotted together; vertical axis m/n]

COMPARISON RESULTS

Comparisons between IVCD, SVCD, HardTh, SoftTh.

NOTE: for HardTh, SoftTh the value of threshold is taken as:

$t = \sigma\sqrt{2 \ln n}$

Target functions used:

[Figure: target functions, f(x) versus x on [0, 1]: (a) spires, (b) blocks, (c) winsin, (d) mishmash]

Sample Size = 512pts, High Noise (SNR = 3dB)

[Figure: box plots of prediction risk for IVCD, SVCD, HardTh and SoftTh; (a) spires, IVCD(0.78); (b) blocks, IVCD(0.78); (c) winsin, IVCD(0.81); (d) mishmash, IVCD(0.5)]

Sample Size = 512pts, Low Noise (SNR = 20dB)

[Figure: box plots of prediction risk for IVCD, SVCD, HardTh and SoftTh; (a) spires, IVCD(0.63); (b) blocks, IVCD(0.64); (c) winsin, IVCD(0.74); (d) mishmash, IVCD(0.5)]

Sample Size = 2048pts, High Noise (SNR = 3dB)

[Figure: box plots of prediction risk for IVCD, SVCD, HardTh and SoftTh; (a) spires, IVCD(0.87); (b) blocks, IVCD(0.86); (c) winsin, IVCD(0.88); (d) mishmash, IVCD(0.5)]

Sample Size = 2048pts, Low Noise (SNR = 20dB)

[Figure: box plots of prediction risk for IVCD, SVCD, HardTh and SoftTh; panels (a) spires, (b) blocks, (c) winsin, (d) mishmash; IVCD labels in the figure: IVCD(0.79), IVCD(0.79), IVCD(0.85), and IVCD(0.5) for mishmash]

FMRI SIGNAL DENOISING

MOTIVATION: understanding brain function

APPROACH: functional MRI

DATA SET: provided by CMRR at University of Minnesota

- single-trial experiments

- signals (waveforms) recorded at a certain brain location

show response to external stimulus applied 32 times

- Data Set 1 ~ signals at the visual cortex in response to

visual input light blinking 32 times

- Data Set 2 ~ signals at the motor cortex recorded when the

subject moves his finger 32 times

FMRI denoising = obtaining a better version of noisy signal


Denoising comparisons:

Visual Cortex Data

[Figure: three plots over samples 0 to 2000: original signal (org sig), SoftTh result, IVCD result]

                     SoftTh    IVCD(0.8)
DoF                  77        101
MSE                  0.0355    0.0247
MSE / Signal Power   0.5640    0.3371

Motor Cortex Data

[Figure: three plots over samples 0 to 2000: original signal (org sig), SoftTh result, IVCD result]

                     SoftTh    IVCD(0.9)
DoF                  104       148
MSE                  0.0396    0.0235
MSE / Signal Power   0.5140    0.3053

IMAGE DENOISING

• Same Denoising Procedure:

- Perform DWT for a given noisy image

- Order (wavelet) coefficients

- Perform thresholding (using VC-bound)

- Obtain denoised image

• Main issue: what is a good ordering (structure) ?

- Ordering 1 ('Global' Thresholding)

Coefficients are ordered by their magnitude:

$|w_0| \ge |w_1| \ge \dots \ge |w_{n-1}|$

- Ordering 2 (same as for 1D signals in VC-denoising)

Coefficients are ordered by magnitude penalized by scale S:

$\frac{|w_0|}{S_0} \ge \frac{|w_1|}{S_1} \ge \dots \ge \frac{|w_{n-1}|}{S_{n-1}}$

- Ordering 3 ('level-dependent' thresholding):

| w0 |

S

-

0

| w1 |

S

1

| wn 1 |

S n 1

Ordering 4 (tree-based): see references

J. M. Shapiro, Embedded Image Coding using Zerotrees of Wavelet coefficients,

IEEE Trans. Signal Processing, vol. 41, pp. 3445-3462, Dec. 1993


Zhong & Cherkassky, Image denoising using wavelet thresholding and model

selection, Proc. ICIP, Vancouver BC, 2000

Example of Artificial Image

Image Size: 256×256, SNR(Noisy Image) = 3dB

Original Image

Noisy Image

Denoised by HardTh

(DoF = 241, MSE = 0.01858)

Denoised by VC method

(DoF = 104, MSE = 0.02058)


VC-based Model Selection

[Figure: curves of empirical risk, VC bound and prediction risk; vertical axis Risk (MSE), horizontal axis 0 to 1000]

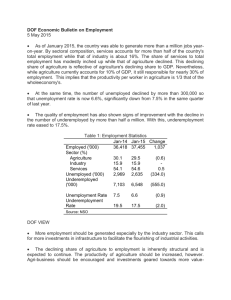

4. SUMMARY and DISCUSSION

• Application of VC-theory to signal denoising

• Using VC-bounds for nonlinear estimators

• Using VC-bounds for sparse feature selection

• Extensions and future work:

- image denoising;

- other orthogonal estimators;

- SVM

• Importance of VC methodology: structure, VC-dimension

• QUESTIONS

ACKNOWLEDGMENT:

This work was supported in part by NSF grant ECS-0099906.

Many thanks to Jun Tang from U of M for assistance in

preparation of tutorial slides.
