Linear feature enhancement using conjugate directional derivatives

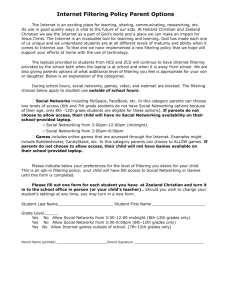

A Neural System for Managing and Manipulating Directional Filtering in Image Processing

XIUJUAN GUO 1,2
1 Department of Computer Science, Jilin Architectural and Civil Engineering Institute, 27 Honqi Street, Changchun, Jilin Province, China
2 College of Geoexploration Science and Technology, Jilin University, Changchun, China

Abstract: Image processing sometimes requires identifying linear information in selected directions. Directional filters can be designed for specific directions so that the linear features of interest in those directions are enhanced. Traditionally, the results of directional filtering in these directions are presented as separate images, which is inefficient for revealing the relationships between linear features in different directions. In this paper, we propose a new approach that uses a neural system to coordinate the results of directional filtering in several directions and produce an integrated image that presents the enhanced linear features in one or more of these directions. The synthetic and real examples presented in this paper show that the combination of a neural system and directional filtering is useful for linear feature enhancement.

Key-Words: Directional filtering, Neural system, Image processing, Linear enhancement

1 Introduction

Various derivative operators are used for linear enhancement [1]. Directional filters are used to enhance linear features in a specific direction and are normally based on selective horizontal derivatives. In theory, the linear features perpendicular to the filter movement are enhanced and those parallel to the filter movement are minimized. To illustrate linear features in several directions of an image, one needs to produce several independent images, one for each direction. This is inefficient for revealing the relationships between linear features in these directions.
In this paper, we propose a new method that uses a neural system to coordinate the results of directional filtering in several directions and produce an integrated image that combines the enhanced features from one or more directions of interest. A synthetic example and a real image are used to test the usefulness of this new technique.

2 Concept of the Neural System for Coordinating Directional Filtering

Directional filtering can be achieved using space-domain filters, Fourier transformation, Radon transformation and other methods [1,2,3,4]. Although higher-order derivatives can be used as filters, in this paper we use only first-order derivatives to perform directional filtering.

Assuming directional filtering has been applied to an original file (P0) in n directions and the corresponding files are P1, P2, ..., Pn, we can design a neural system to handle and further manipulate these files. By changing some parameters of such a neural system, an appropriate image that presents the enhanced information in one or more specific directions can be generated. The individual files of directional filtering in the n different directions form the input vector (P) of the neural system, and a set of adjustable factors forms the weight vectors (W) of the system shown in Figure 1. This system also has a zero bias and a set of adjustable transfer functions. The output is controlled by adjusting the weight vectors, the transfer functions, or both.

For example, if directional filtering is applied along the x-axis and its conjugate y-axis separately, the result of the x-axis filtering will enhance the linear features parallel to the y-axis and minimize those parallel to the x-axis. Conversely, the result of the y-axis filtering will enhance the linear features parallel to the x-axis and minimize those parallel to the y-axis.
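
The behaviour described above can be illustrated with a minimal sketch. It assumes that a first-order directional filter is a simple forward difference between neighbouring pixels; the paper does not specify the filter kernel, so the function `directional_derivative` and the two toy images are hypothetical and serve only as an illustration.

```python
def directional_derivative(image, axis):
    """First-order forward difference along 'x' (columns) or 'y' (rows).

    Assumption: a plain forward difference stands in for the paper's
    first-order directional filter. The output has the same shape as the
    input; the last column ('x') or last row ('y') stays 0 because it has
    no forward neighbour.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if axis == "x" and c + 1 < cols:
                out[r][c] = image[r][c + 1] - image[r][c]
            elif axis == "y" and r + 1 < rows:
                out[r][c] = image[r + 1][c] - image[r][c]
    return out


# Hypothetical toy images: one line parallel to the y-axis, one parallel to the x-axis.
vertical_line = [[0, 0, 5, 0, 0] for _ in range(4)]
horizontal_line = [[0] * 5, [5] * 5, [0] * 5, [0] * 5]

# x-axis filtering keeps the vertical line and removes the horizontal one.
vertical_dx = directional_derivative(vertical_line, "x")
horizontal_dx = directional_derivative(horizontal_line, "x")

# y-axis filtering does the opposite.
vertical_dy = directional_derivative(vertical_line, "y")
horizontal_dy = directional_derivative(horizontal_line, "y")

print(vertical_dx[0])    # response on both sides of the vertical line
print(horizontal_dx[1])  # all zeros: the horizontal line is suppressed
```

In this sketch each surviving line yields one positive and one negative response, one on either side of the line.
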
If we use the results from both the x-axis (P1) and y-axis (P2) filtering as the neural inputs, and factors 0 and 1 as the neural weights, the corresponding input vector will be P = [P1, P2], and the weight vectors will be W0 = [0, 0]; W1 = [0, 1]; W2 = [1, 0]; W3 = [1, 1]. If the transfer function is set as F = 1, these weight vectors will in turn produce a black image, the y-axis filtered image, the x-axis filtered image and the image filtered along both the x and y axes, respectively. The last image shows the combined linear features in both the conjugate x and y directions; this is called conjugate directional filtering (CDF) [5].

Figure 1. Schematic diagram of a neural system, with inputs P1, P2, ..., Pn, weights W11, ..., W1n, bias B and transfer function F.

3 A Synthetic Example

We first use a synthetic example to demonstrate the usefulness of this neural system for handling directional filtering. The synthetic model comprises two centred squares and a ‘plus’ sign in the middle of the squares (Fig. 2a). All objects in Figure 2a are clearly visible because the differences between adjacent objects are pronounced. The model used to test this system has the same structure as shown in Figure 2a, but the central ‘plus’ is hidden because the contrast between the ‘plus’ and the inner square is very weak (Fig. 2b).

After directional filtering is applied in both the horizontal and vertical directions, the resulting data are used as the input vector (P = [Px, Py]) to the neural system. Selecting W1 = [1, 0] and F = 1 produces an image that shows the result of horizontal filtering. Vertical edges of the hidden ‘plus’ and the inner square are enhanced, whereas the horizontal linear features are removed except at the ends of the horizontal line of the ‘plus’ sign (Fig. 2c). Selecting W2 = [0, 1] and F = 1 instead presents only the horizontal edges of the hidden ‘plus’ and the inner square (Fig. 2d).
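
The role of the weight vectors can be sketched in a few lines. The sketch assumes that the neural system has the usual single-neuron form, output = F(W · P + B), applied pixel by pixel, and that F = 1 denotes the identity transfer function; neither assumption is stated explicitly in the paper. The function `neural_output` and the small arrays `px` and `py` are hypothetical stand-ins for the filtered files.

```python
def neural_output(inputs, weights, transfer=lambda v: v, bias=0):
    """Pixel-wise transfer(sum_i weights[i] * inputs[i] + bias).

    Assumptions: every input image has the same shape, the default
    transfer function is the identity (read here as the paper's F = 1),
    and the bias defaults to zero as in the paper.
    """
    rows, cols = len(inputs[0]), len(inputs[0][0])
    return [
        [
            transfer(sum(w * p[r][c] for w, p in zip(weights, inputs)) + bias)
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hypothetical filtered inputs standing in for Px and Py.
px = [[0, 2, -2],
      [0, 0, 0]]
py = [[1, 0, 0],
      [-1, 0, 0]]
p = [px, py]

black = neural_output(p, [0, 0])       # all zeros
only_px = neural_output(p, [1, 0])     # reproduces px
only_py = neural_output(p, [0, 1])     # reproduces py
combined = neural_output(p, [1, 1])    # pixel-wise sum of px and py
combined_abs = neural_output(p, [1, 1], transfer=abs)  # magnitudes only

print(combined)      # [[1, 2, -2], [-1, 0, 0]]
print(combined_abs)  # [[1, 2, 2], [1, 0, 0]]
```

Swapping the transfer function, for example to the absolute value, changes how signed filter responses are displayed without touching the weights.
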
A third selection, W3 = [1, 1] and F = 1, generates an image that combines the conjugate linear features in both the vertical and horizontal directions. Both the square and the hidden ‘plus’ sign are clearly outlined (Fig. 2e).

Figure 2. Structure of the synthetic model (a), original image of the model (b), image resulting from W1 = [1, 0] and F = 1 (c), image resulting from W2 = [0, 1] and F = 1 (d), image resulting from W3 = [1, 1] and F = 1 (e), and image resulting from W3 = [1, 1] and F = abs (f).

Note that the boundaries of the same object have different grey scales in Figure 2e. This is because directional filtering always produces a pair of ‘positive’ and ‘negative’ changes along the parallel boundaries of a symmetric shape. The combination of W3 = [1, 1] and F = abs makes all the boundaries of an object equally grey-scaled (Fig. 2f).

4 An Aerial Photograph of City View

We use the neural system to handle directional filtering of an aerial photograph of a city view to demonstrate the usefulness of the system in enhancing conjugated linear features. The image contains buildings, streets, trees, cars in parking areas and other objects (Fig. 3a). Most of these objects are aligned in the north-south direction, the east-west direction, or both. Figure 3b shows the result of applying conventional Laplacian filtering. As expected, the linear features are outlined in this Laplacian image, but most of the background information, i.e. the low-frequency components, is removed.

Directional filtering is applied to this photograph in the conjugate EW and NS directions, and the two processed files are used as the neural input vector (P = [PEW, PNS]). In order to integrate these two files into one image that also retains the original information, a weight vector W = [1,1,1] is used to manipulate the input.
The first factor weights the original file, so that it is counted as one unit in the output, while the transfer function is fixed as F = 1. This produces an image that shows more features in both the EW and NS directions (Fig. 3c) while retaining the background information. The background information may appear too strong in Figure 3c. A weight vector of W = [1,3,3] gives the directionally filtered information three times the weight of the background; the result is shown in Figure 3d.

Figure 3. Original street photograph (a), Laplacian image (b), image resulting from W = [1,1,1] (c), and image resulting from W = [1,3,3] (d).

5 Conclusion

The synthetic and real examples presented above show that the neural system is a useful tool for handling directional filtering for linear feature enhancement. The adjustable weight vectors in the neural system allow not only the result from a specific direction to be displayed independently, but also the results from several directions to be further enhanced and integrated into one image, which traditional directional filtering cannot achieve. The selectable transfer functions in the neural system also allow further image manipulation to be performed for specific purposes.

References

[1] B. Jahne, Digital image processing: concepts, algorithms and scientific applications, Springer, 1997.
[2] S. R. Deans, Radon transform and some of its applications, John Wiley and Sons, 1983.
[3] G. Beylkin, Discrete Radon transform, IEEE Trans. Acoustics, Speech and Signal Processing, Vol.35, pp.162-172, 1987.
[4] J. G. Proakis and D. G. Manolakis, Digital signal processing: principles, algorithms and applications, Prentice-Hall International, 1996.
[5] W. Guo, D. Li, & A. Watson, Conjugated linear feature enhancement by conjugate directional filtering, IASTED international conference on visualization, imaging and image processing, Marbella, Spain, 2001.