2.The proposed CCL algorithm - School of Electronics, Electrical

advertisement

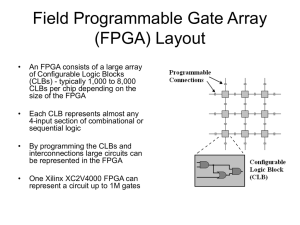

An FPGA Implementation of Image Component Labelling D Crookes and K Benkrid School of Computer Science, The Queen’s University of Belfast, Belfast, BT7 1NN, UK ABSTRACT Connected Component Labelling (CCL) is an important task in intermediate image processing. Several algorithms have been developed to handle this problem. Hardware implementations have typically been based on massively parallel architectures, with one logical processing element per pixel. This approach requires a great deal of logic, so current solutions are often implemented in VLSI rather than on FPGAs, and are limited in the size of image which can be labelled. This paper describes an architecture based on a serial, recursive algorithm for CCL which is well suited to implementation on an FPGA with off-chip RAM. The algorithm iteratively scans the input image, performing a non-zero maximum neighbourhood operation. A complete forward pass is followed by an inverse pass in which the image is scanned in reverse order. In the general case, the process is repeated until no change in the image occurs. For a 256 by 256 image, the whole circuit fits easily on a XC4010E FPGA chip (20 by 20 CLBs). Using bit serial arithmetic, it runs at 76 MHz (over 70 passes per second for 16 bits per pixel). In the general case, the time to label a whole image depends on the shapes of the objects, which is a disadvantage. However, given prior knowledge of the shapes, an upper limit on the number of required passes can be set in advance. The big advantage of the algorithm is that its hardware implementation is easily scaleable to images of any size. It can be defined at a high level within our application environment, which enables automatic generation of architectures for an FPGA-based image coprocessor. Keywords: Labelling, Intermediate level image processing, Serial recursive algorithm, FPGA(s). 1. INTRODUCTION Connected Component Labelling is an important task in intermediate image processing with a large number of applications1,2. The problem is to assign a unique label to each connected component in the image while ensuring a different label for each distinct object as illustrated in Figure 1. By assigning a unique label to each connected region, higher level image processing operations can identify, extract, and process each object separately. 30 30 30 30 30 30 30 30 30 10 10 10 10 10 10 10 10 10 20 20 20 20 20 20 20 20 20 20 20 20 Figure 1: A labelled Image. Email: (D.Crookes,K.Benkrid)@qub.ac.uk; Telephone: +44 (0) 1232 274626; Fax: +44 (0) 1232 683890 To date, many algorithms have been developed to handle this problem3. Some have been designed for implementation on software, and some for hardware. Hardware implementations have typically been based on massively parallel architectures. Early parallel techniques for image labelling were based on a nearest neighbour algorithm, dedicating one Processing Element (PE) for each pixel where labels are broadcast locally between neighbours’ processors. They were proposed for 2D mesh-connected cellular arrays and ILLIAC-type computers4. Using this approach, a time complexity of O(N2) was obtained for an NxN image. Another algorithm is based on a global approach, and is designed for a reconfigurable-mesh type architecture5,6. It reduces the complexity to O(N). The reconfigurable mesh is an NxN array of PE’s that are connected to a reconfigurable broadcast bus. Yet another architecture based on a boundary processing method 7, reduces the complexity to O(LogN). The underlying strategy of this approach is based on constructing a boundary list for each connected component, labelling these boundaries, and then propagating the labels to the pixels within each component. Another parallel architecture has been proposed which also takes a global approach. It runs on a one-dimensional array of processors, and aims to minimise the electronic structure 8. The algorithm is based on a divide and conquer paradigm in which the image is first partitioned into several sub-images; then, local labels are computed within each sub-image. These results are then merged to compute the labels for the entire image. It leads to a complexity of O(NXLogN). Though implementable on FPGAs, it requires four XC4025 chips (32x32 CLBs each) to implement a labelling circuit for a 32x32 image size only. These massively parallel approaches (based on one PE per pixel) inevitably require a great deal of logic, so current solutions are often implemented in VLSI rather than on FPGAs. Their main disadvantage is that they are generally limited in the size of image, which can be labelled on FPGAs (e.g. 32x32). This paper describes an architecture based on a serial, recursive algorithm for CCL which is well suited to implementation on an FPGA with off-chip RAM. Its main advantage is that it is readily scaleable, and that it can label images of any size (within reason), given the appropriate FPGA. In the following section, we describe the algorithm used. We then describe the hardware architecture, after which we give details of the FPGA implementation (on a Xilinx XC4010E FPGA). Simulation results are given. 2.THE PROPOSED CCL ALGORITHM Given an arbitrary input image, the CCL algorithm which we use is an iterative one. Initially each object pixel is taken to be a separate object; then, by repeatedly applying a recursive neighbourhood operation, connected pixels are ‘merged’ by being made to share a common pixel value (the maximum in the neighbourhood). The key to requiring only a small number of iterations is the recursive nature of the neighbourhood operation, in which the result pixel is stored back in the image being labelled: this enables intermediate labels to be propagated across the image in a single pass. In more detail, the algorithm involves the following steps: Step 1: Threshold the input image to obtain a binary image. This will make all pixels in the objects equal to 1 and all other pixels equal to 0. Step 2: The thresholded binary image is initially labelled by assigning a different label to every non-zero pixel (i.e. initially treating each pixel as a separate object). Step 3: Apply a recursive ‘non-zero maximum’ neighbourhood operation on the image, using the window given in Figure 2. During this operation, each result pixel is stored back in the source image. A complete forward pass is followed by an inverse pass. Step 4: Repeat Step 3 until there is no change in the image. The following pseudo-code therefore expresses the basic algorithm: Threshold the image; Do initial labelling of all object pixels; Repeat Apply a forward recursive ‘non-zero max’ neighbourhood operation; Apply a backward recursive ‘non-zero max’ neighbourhood operation; Until (No change in the image); //Forward pass // Backward pass The application of the ‘non-zero maximum’ operations is illustrated in Figure 2. Input Image Result Image P5 P2 Forward Pass P4 P1 P3 P3 Backward pass P1 P4 P2 P5 If (P1=0) then return 0 Else return maximum(P1, P2, P3, P4, P5) Figure 2: CCL neighbourhood operation. 3. THE HARDWARE ARCHITECTURE Previous work by Donachy et al9 has resulted in an FPGA architecture for recursive neighbourhood operations such as ‘nonzero maximum’ above. This architecture is a serial one, where pixels are fed to the FPGA one at a time. A one-line on-chip buffer is required, with five maximum-of-two operators, plus the necessary control to implement the recursive aspect of the operation. However, even given this architecture, the hardware implementation of this algorithm faces several further challenges: The multi-pass scheme suggests repeated reconfiguration of the circuit between the forward pass and the backward pass (and vice-versa). This reconfiguration would slow the overall operating speed considerably. The solution to this problem is to keep the same circuit configuration, but reverse the order in which the image is supplied to the circuit: i.e. reverse the image instead of the window. The latter is achieved by storing the output pixels in reverse order in both cases (so that after a forward+backward pass the image pixels are in the original order). Thus, a forward pass would translate to a forward scan of the image (from the top left corner to the bottom right one), whereas a backward pass would translate to a backward scan of the image (from the bottom right corner to the top left one), but using the same circuit. As mentioned before, the initial labelling has to assign a different label to every non-zero pixel. For a NxN image size, this could require 2Log2 N bits per pixel to represent intermediate label values. In order to reduce the number of bits per pixel, the initial labelling technique we adopt is: firstly to give the first non-zero pixel the highest label, and decrease the label value for subsequent non-zero pixels. Secondly, it gives adjacent non-zero pixels, within the same column (assuming a vertical scan), the same label; this will use fewer label values, thus reducing the required pixel word length. The latter could be determined in advance by knowing of the shape and maximum number of objects. Note that starting this initial labelling technique at the maximum label allows us to predetermine the label of one object in advance, which is the maximum pixel value chosen. If we wish to include the initial thresholding and initial labelling on-the-fly (which we do), then the first pass is special. Subsequent passes should bypass these stages. An unattractive solution would be to reconfigure the whole circuit after the first pass. To avoid that, our implementation provides extra logic for bypassing this stage in subsequent passes without reconfiguring. Detecting if a pass has resulted in any change needs to be done on-the-fly, to avoid a separate pass for this purpose. A flag is maintained during processing, and is set to 1 if and when any result pixel differs from its original value. To test for termination of the whole algorithm, this flag must be 0 for two consecutive passes (one forward and one backward). The organisation of the proposed architecture is given in Figure 3. The ‘maximum of five’ neighbourhood operation is split into four ‘maximum of two’ operations. The recursive storing back of result pixels into the source image is implemented by a feedback loop. In order to eliminate the propagation of the pixel label from the bottom of an image column to the top of the following one, a column counter is provided to inhibit this propagation. In order to make the design scaleable and compact, we used a bit serial pipelining. For efficient implementation of the ‘maximum of two’ operators, data is processed Most Significant Bit First (MSBF). 5 MAXIMUM MAXIMUM PIXEL DELAY 4 MAXIMUM PIXEL DELAY COLUMN COUNTER 3 INPUT IMAGE 5 4 3 2 1 T H R E S H O L D NON-ZERO INITIAL LABELLING 1 2 MAXIMUM L I N E OUTPUT IMAGE B U F F E R Figure 3: Architecture of the Labelling Unit. A forward pass is followed by an inverse one in which the input image (the output image of the previous pass) is scanned in reverse order (actually implemented by storing the output image in reverse order). The process is repeated until no change in the image occurs. The system architecture is illustrated in Figure 4. Source Image FPGA Chip Off-chip RAM Labelling Unit “Start” Controller “Finish” Comparator Figure 4: System architecture. The controller block generates the proper off-chip RAM address sequences. In addition, it generates the signal ‘Start’, which initiates the first pass, when it is set to one. It is set to zero in subsequent passes, thus bypassing the Threshold and the Initial Labelling. Given prior knowledge of the shapes in the source image, an upper limit on the number of required passes can be set in advance, and in some common cases labelling may need only one forward+backward pass. 4. FPGA IMPLEMENTATION The CCL circuit has been implemented for Xilinx XC4000 series FPGAs. The Threshold block is bit parallel, and uses dedicated fast carry logic; it is highly scaleable. A 16 bit threshold block occupies 9 CLBs. The Initial Labelling block occupies 3 CLBs per bit. The non-zero maximum unit occupies 14 CLBs whereas the other maximum units occupy 4 CLBs. The line buffer is implemented using XC4000 Select-RAMTM. An N pixel line buffer occupies N/2 +2 CLBs. The whole CCL circuit has been implemented in an XC4010E-1 FPGA chip (20 by 20 CLBs). For a 256 by 256 image, it fits easily on to the XC4010E. 5. SIMULATION RESULTS Timing simulations show that the circuit can run at a speed of 76 MHz (over 70 passes per second for 16 bits per pixel). For 256x256 images in which the objects can be labelled by a single forward+backward pass, input images can be thresholded and labelled in real time at approximately 35 fps. Larger images will take proportionately longer, since the algorithm is O(N2) for an NXN image. In order to verify the correctness of the design, we have simulated it using Xilinx Foundation Simulator for a variety of images. Figure 5 presents the simulation results for an 8 X8 image. Original binary image First pass (Forward scan) Initial Labelling 64 63 64 63 64 64 61 64 64 62 64 62 61 64 62 62 64 62 61 64 62 62 Figure 5: Simulation Example of Connected Component Labelling. In this particular case, one forward pass is sufficient to label the image. However, that is not true in the general case. Objects of the forms given in Figure 6 cannot be labelled in one pass. In this case two passes – one forward and one backward - are necessary. Original binary image Initial Labelling 64 62 62 First pass (Forward scan) 64 64 60 64 64 63 64 60 59 60 59 62 61 60 63 62 61 60 Second pass (Backward scan) 61 64 64 61 61 61 61 64 64 61 61 61 61 64 64 61 61 Figure 6: Another simulation example. 61 61 61 61 6. CONCLUSION In this paper, we have presented an architecture based on a serial, recursive algorithm for Connected Component Labelling. This architecture is well suited to implementation on an FPGA with off-chip RAM. The algorithm iteratively scans the input image, performing a non-zero maximum neighbourhood operation. The resulting pixels are stored back into the input image, so an intermediate label is partially propagated throughout a component during a single pass. An inverse pass in which the image is scanned in reverse order follows a complete forward pass. In the general case, the process is repeated until no change in the image occurs. For 256x256 images, using 16 bits per pixel, the current architecture delivers 70 passes per second. For the common case where in practice a single forward+backward pass is sufficient, a frame rate of approximately 35 fps can be achieved. In practice, in some circumstances, a smaller word length can be used (e.g. 10 bits), which results in proportionately faster operation. Although the algorithm has been implemented in bit serial, it is possible to implement it using digit serial arithmetic (or even fully parallel) in order to speed up the circuit at the expense of higher complexity. The main advantage of this algorithm is that its hardware implementation is easily scaleable to realistic size images. It can be defined at a high level within our application environment, which enables automatic generation of architectures for an FPGA-based image coprocessor10. On the other hand, because a single pass is not sufficient to label the whole image in the general case, this algorithm requires an off-chip memory to store intermediate images. In addition, it may not be possible to predict the number of passes required. Both these factors mean that the algorithm is not yet well suited to real time general purpose labelling using an FPGA embedded in a camera. REFERENCES D. L. Milgram, “Region extraction using convergent evidence”, Computer Graphics and Image Processing, vol. 5, no. 2, pp. 561-572, 1988. 2. L. C. Sanz and D. Petkovic, “Machine vision algorithms for automated inspection of thin-film disk heads”, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 10, no. 6, pp. 830-848, 1988. 3. H. M. Alnuweiri, and V. K. Prasanna, “Parallel architectures and algorithms for image component labeling”, IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 14, No. 10, pp. 1014-1034, Oct. 1992. 4. C. R. Dyer and A. Rosenfeld, “Parallel image processing by memory augmented cellular automata”, IEEE Transactions on Pattern Analysis and Machine Intelligence, pp. 29-41, Jan. 1981. 5. M. Maresca, and H. Li, M. Lavin, “Connected component labeling on Polymorphic-Torus architecture”, IEEE Int. Conf. Computer Vision and Pattern Recognition, Ann Arbor, pp. 951-956, 1988. 6. R. Miller, and V. K. Prasanna-Kumar, “Meshes with reconfigurable buses”, Proc. 5th MIT Conf. Advanced Research in VLSI, pp. 163-178, March 1988 7. S. Olariu, J. L. Schwing and J. Zhang, “Fast component labelling and convex hull computation on reconfigurable meshes”, Image and Vision Computing, Vol. 11, No. 7, pp.447-455, 1993. 8. E. Mozef, S. Weber, J. Jaber and G. Prieur, “Parallel architecture dedicated to image component labeling in O(nLog n): FPGA implementation”, Proc. SPIE, Vol. 2784, pp.120-125, 1996. 9. P. Donachy, D. Crookes, A. Bouridane, K. Alotaibi, and A. Benkrid, “Design and implementation of a high level image processing machine using reconfigurable hardware”, Proceedings SPIE’98, Vol. 3526, p. 2-13, 1998. 10. K. Benkrid, D. Crookes, A. Bouridane, P. Corr and K.Alotaibi, ‘A High Level Software Environment for FPGA Based Image Processing’, To appear in Proceedings IPA 99’, Manchester, July 1999. 1.