Instrument Calibration Procedure


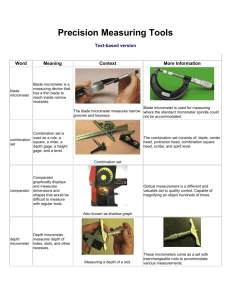

Internal and External Dial, Vernier and Digital Calipers and Outside Micrometers

GPC CAL001 DATE 10/07 REV. 0
Quality Assurance Manager Approved Date:

SECTION 1 INTRODUCTION AND DESCRIPTION

1.1 This procedure describes the calibration of Internal and External Dial, Vernier and Digital Calipers and Outside Micrometers. The instrument being calibrated is referred to herein as the TI (Test Instrument).

1.2 This procedure includes test and essential performance parameters only. Any malfunction noticed during calibration, whether specifically tested for or not, should be corrected.

Table 1 Calibration Description

| TI | TI Characteristics | Performance Specification | Test Method |
|---|---|---|---|
| Dial, Vernier and Digital Calipers | Zero indication test | Test point 0 in. Tolerance +/- .0005 in. | Determined by sliding the jaws together and reading the TI indication. |
| Dial, Vernier and Digital Calipers | Outside accuracy | Range 0 to 12”. Tolerance: see Table II | Comparison to gage blocks between the TI jaws. |
| Dial, Vernier and Digital Calipers | Inside accuracy | Range 0 to 12”. Tolerance: see Table II | Comparison to gage block dimensions, placing the blocks with attached caliper jaws outside the TI jaws. |
| Dial, Vernier and Digital Calipers | Depth gage accuracy | Range 0 to 12” | Comparison to gage block dimensions, placing the TI depth gage block and reading the TI outside dimension scale. |
| Outside Micrometers | Length and linearity | Range 0 to 12” | Measured by comparing TI indications to gage block dimensions set up to test the basic length and micrometer head linearity. |

SECTION 2 EQUIPMENT REQUIREMENTS

Table 2 Equipment Requirements

| Item | Minimum Use Specifications |
|---|---|
| 2.1 Gage Block Set | Range .050-4”. Tolerance .00005 |
| 2.2 Low power magnifier | To aid in reading the TI vernier or barrel scale |
| 2.3 Micrometer wrench | Adjustment of micrometer |
| 2.4 Small jewelers screwdriver | Adjustment of caliper |
| 2.5 Small clamp | Hold gage blocks for inside measurement comparison |
| 2.6 Light machine oil or spray | Lubrication of slides or thimble |
| 2.7 Clean flat surface | Surface Plate or Steel Block |

Calibration Equipment: ESSM #SE0060

SECTION 3 PRELIMINARY OPERATIONS

3.1 TI INSPECTION

3.2 Ensure that the work area is clean, well illuminated, free from excessive drafts, free from excessive humidity, and that the rate of temperature change does not exceed 4 °F per hour.

3.3 Ensure that the gage block set (item 2.1) is clean and that the TI and the gage blocks have been allowed to stabilize at the ambient temperature for a minimum of 2 hours.

CALIPERS

3.4 Ensure that the TI jaws slide smoothly and freely along the full length of the TI beam. If not, take corrective action.

3.5 Inspect the TI to ensure that the jaws are free of nicks and burrs and that the TI is clean and free from damage that would impair its operation.

NOTE: TI test points are determined by selecting 4 test points within the first inch of range and 4 to 8 additional test points extending over the remainder of the TI range at approximately equal spacing.

3.6 To minimize the number of gage blocks needed to test calipers with higher ranges, select test points at 25, 50, 75, and 100% of the TI range beyond the 1st inch and round to the nearest ½ inch. Use major vernier graduations or normal electronic digital values as test points as necessary.

OUTSIDE MICROMETERS

3.7 Slowly rotate the TI micrometer thimble, and ensure that it operates smoothly over its entire range.

3.8 The TI length measurement test should be performed at approximately 6 points across the range of the TI.
For example, a 0 to 1” micrometer may have calculated test points at .000, .200, .400, .600, .800 and 1.000 inch.

SECTION 4 CALIBRATION PROCESS CALIPERS

NOTES

Unless otherwise specified, verify the results of each test and take corrective action whenever the test requirement is not being met before proceeding.

Cotton gloves should be worn when handling gage blocks to prevent the transfer of body heat and to protect the gage surfaces.

4.1 ZERO TEST

4.1.1 Slide the TI jaws together, ensuring that no light is visible between the jaws' measuring surfaces. If the TI has inside measurement capability, verify that the dial indicator or vernier indicates zero, as necessary. Adjust the dial bezel, if necessary.

4.1.2 Tighten the TI sliding jaw set screw, if applicable.

4.1.3 If the TI has a digital readout, depress the zero set and verify that the digital indication reads 0.000.

4.1.4 If the TI is a vernier type, verify that the TI zero marks are aligned, as applicable.

4.2 OUTSIDE ACCURACY TEST

4.2.1 Determine the gage blocks required to obtain test points at a minimum of 4 points throughout the first inch of the TI as follows: .125, .300, .650, and 1.000 inch.

4.2.2 Open the TI to beyond the first test point. Insert the gage blocks and close the jaws until they are firmly in contact with the gage block. Repeat each reading 3-5 times and verify that each indication does not exceed +/- ¼ of the least dial graduation.

4.2.3 Verify that the first inch test points are within +/- .001 inch for 0-6” range and +/- .002 inch for 0-12” calipers.

4.2.4 Repeat steps 4.2.2 and 4.2.3 for the remaining test points of 25%, 50%, 75% and full range (100%).

4.3 INSIDE ACCURACY TEST

4.3.1 Determine the gage blocks required to obtain test points at a minimum of 4 points throughout the first inch of the TI as follows: .125, .300, .650, and 1.000 inch.

4.3.2 Using a three gage block set-up, place the test gage block between two other blocks and secure with a thumb screw type clamp (Figure 1).
Open the TI to approximately the first test point. Insert the TI and open the jaws until they are firmly in contact with the inside of the gage blocks. Repeat each reading 3-5 times and verify that each indication does not exceed +/- ¼ of the least dial graduation.

4.3.3 Verify that the first inch test points are within +/- .001 inch for 0-6” range and +/- .002 inch for 0-12” calipers.

4.3.4 Repeat steps 4.3.2 and 4.3.3 for the remaining test points of 25%, 50%, 75% and full range (100%).

Figure 1 Inside Measurement Set-up (labels: Test Point Gage Block, Gage Block, Gage Block, Clamp)

4.4 DEPTH ACCURACY

4.4.1 Position the end of the TI beam against a 1.0 inch gage block surface with the end of the rod against the surface plate or steel gage block.

4.4.2 Ensure that the TI gage measuring surfaces are squarely placed against the surface of the gage block and the surface plate.

4.4.3 Make any necessary final adjustments and note the scale indication.

4.4.4 Verify that the value(s) noted in the preceding step are within +/- .001 inch for 0-6” range and +/- .002 inch for 0-12” calipers.

4.4.5 Perform steps 4.4.1 through 4.4.4 for each additional inch of depth gage range, changing gage blocks as necessary.

SECTION 5 CALIBRATION PROCESS OUTSIDE MICROMETERS

5.1 Determine the gage blocks required to obtain test points at a minimum of 5 points. (Lowest TI indication, 20%, 40%, 60%, 80% and full range.)

5.1.1 Adjust the spindle several tenths of an inch from zero, based on the TI basic length. Starting with the gage block equal to the lowest TI range, slide the gage block(s) between the anvil and spindle.

5.1.2 Adjust the TI as applicable to contact the gage block(s). Repeat each reading 2-3 times and verify each indication. Refer to Table 3 Micrometer Calibration Tolerance.

5.1.3 Repeat steps 5.1.1 and 5.1.2 through the complete range of the TI.

5.2 If the TI is the interchangeable anvil type, attach each anvil in range and verify as outlined in paragraphs 5.1 through 5.1.3.
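
The test point selections described in 3.6, 3.8, 4.2.1 and 5.1 can be sketched in a few lines of Python. This is an illustrative sketch only, not part of the approved procedure. The function names are hypothetical, and two readings of the text are assumed: the caliper percentages in 3.6 are taken as percentages of the full TI range, and the micrometer points are taken as equally spaced from the lowest indication to full range.

```python
import math

# First-inch caliper test points listed in 4.2.1 and 4.3.1 (inches).
FIRST_INCH_POINTS = [0.125, 0.300, 0.650, 1.000]


def caliper_test_points(full_range_in):
    """Return caliper test points in inches (hypothetical helper).

    Assumption: 25/50/75/100% are percentages of the full TI range,
    rounded to the nearest 1/2 inch, keeping only points beyond 1 inch.
    """
    beyond_first_inch = []
    for fraction in (0.25, 0.50, 0.75, 1.00):
        # Round half up to the nearest 0.5 inch.
        point = math.floor(full_range_in * fraction * 2 + 0.5) / 2
        if point > 1.0 and point not in beyond_first_inch:
            beyond_first_inch.append(point)
    return FIRST_INCH_POINTS + beyond_first_inch


def micrometer_test_points(low_in, high_in, count=6):
    """Return equally spaced micrometer test points in inches.

    Assumption: count=6 gives lowest indication, 20%, 40%, 60%, 80%
    and full range, as listed in 5.1.
    """
    span = high_in - low_in
    return [round(low_in + span * i / (count - 1), 4) for i in range(count)]


if __name__ == "__main__":
    print(caliper_test_points(6))
    print(micrometer_test_points(0.0, 1.0))
```

For a 0 to 1” micrometer the second function reproduces the points given in the example under 3.8. The available gage blocks may still require adjusting individual points.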

Table 3 Micrometer Calibration Tolerance

| Micrometer Size Range | Calibration Tolerance w/vernier | Calibration Tolerance without vernier |
|---|---|---|
| 0-1” | +/- .0001 | +/- .001 |
| 1”-2” through 9”-10” | +/- .0002 | +/- .001 |
| 10”-11” and greater | +/- .0003 | +/- .002 |

SECTION 6 LABELING AND RECORDS

6.1 Complete a Measuring and Test Calibration Record (QA-4) for each item calibrated. Use additional QA-4 forms as continuation sheets as needed.

6.2 Affix a calibration label to the TI with the date calibrated, the date of next calibration and the name of the person who performed the calibration.

6.2.1 A special calibration label shall be applied to any item that has not been calibrated to its full range of capability.

6.3 All completed calibration records shall be filed and maintained.
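
The acceptance limits in 4.2.3, 4.4.4 and Table 3 can be expressed as a small lookup when recording results. The sketch below is illustrative only and is not part of the approved procedure. The function names are hypothetical. It assumes that a caliper with a range of 6 inches or less uses the 0-6” limit, that a micrometer is identified by the upper limit of its size range, and that a small allowance for floating point rounding is acceptable.

```python
def caliper_tolerance(full_range_in):
    """Caliper tolerance in inches per 4.2.3 (hypothetical helper).

    Assumption: ranges up to 6 inches use +/- .001, larger ranges +/- .002.
    """
    return 0.001 if full_range_in <= 6 else 0.002


def micrometer_tolerance(upper_limit_in, has_vernier):
    """Micrometer tolerance in inches per Table 3 (hypothetical helper).

    upper_limit_in is the top of the size range, e.g. 2 for a 1"-2" TI.
    """
    if upper_limit_in <= 1:
        return 0.0001 if has_vernier else 0.001
    if upper_limit_in <= 10:
        return 0.0002 if has_vernier else 0.001
    return 0.0003 if has_vernier else 0.002


def within_tolerance(indication_in, gage_block_in, tolerance_in):
    """True if the TI indication is within +/- tolerance of the gage block.

    The 1e-9 allowance only absorbs floating point rounding error.
    """
    return abs(indication_in - gage_block_in) <= tolerance_in + 1e-9


if __name__ == "__main__":
    # Example: 0-6" caliper reading 1.001 on a 1.000 inch gage block.
    print(within_tolerance(1.001, 1.000, caliper_tolerance(6)))
```

A failed check corresponds to the corrective action called for in the Section 4 notes. The result would still be recorded on the QA-4 form described in 6.1.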