A problem I foresee is that when the input is something other than a


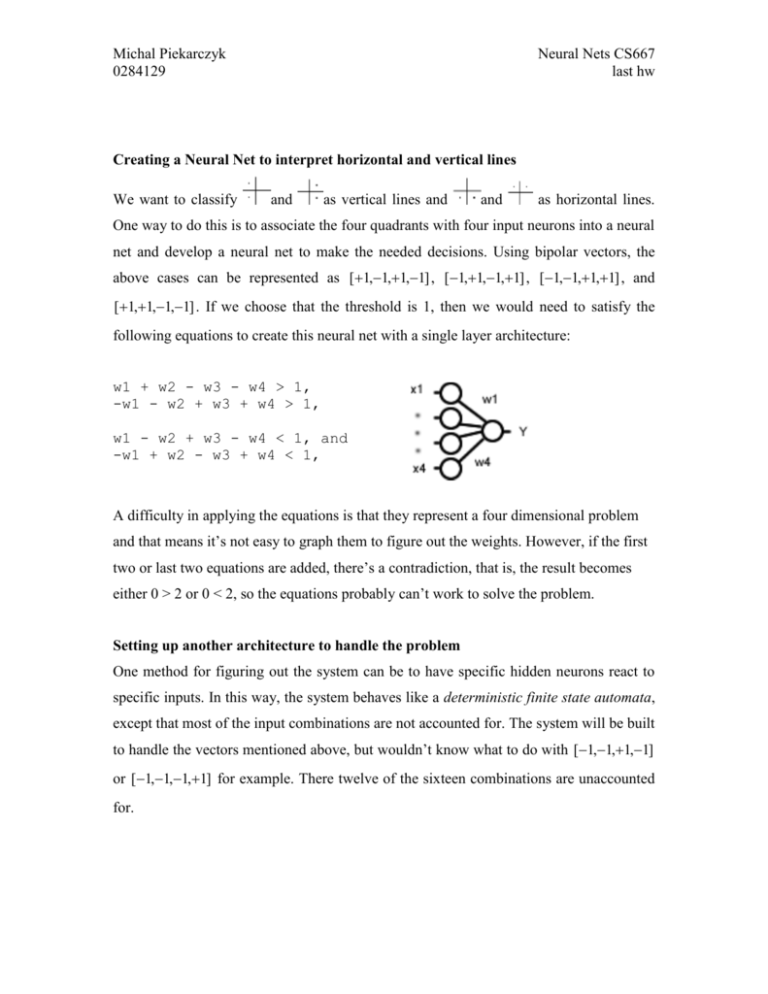

Michal Piekarczyk 0284129 Neural Nets CS667 last hw

Creating a Neural Net to interpret horizontal and vertical lines

We want to classify two of the four line patterns as vertical lines and the other two as horizontal lines. One way to do this is to associate the four quadrants with four input neurons and develop a neural net to make the needed decisions. Using bipolar vectors, the four cases can be represented as $[1,1,-1,-1]$, $[-1,-1,1,1]$, $[1,-1,1,-1]$, and $[-1,1,-1,1]$. If we choose a threshold of 1, then a single-layer architecture would need weights that satisfy the following inequalities:

$$
\begin{aligned}
w_1 + w_2 - w_3 - w_4 &> 1, \\
-w_1 - w_2 + w_3 + w_4 &> 1, \\
w_1 - w_2 + w_3 - w_4 &< 1, \\
-w_1 + w_2 - w_3 + w_4 &< 1.
\end{aligned}
$$

A difficulty in applying these inequalities is that they describe a four-dimensional problem, so they are not easy to graph in order to figure out the weights. However, adding the first two inequalities gives $0 > 2$, which is a contradiction. (Adding the last two gives $0 < 2$, which is true and causes no trouble.) Because the first pair can never hold together, no choice of weights makes the single-layer net work.

Setting up another architecture to handle the problem

One method for building the system is to have specific hidden neurons react to specific inputs. In this way, the system behaves like a deterministic finite automaton, except that most of the input combinations are not accounted for. The system will be built to handle the four vectors mentioned above, but wouldn't know what to do with an input such as $[1,1,1,1]$, for example. Twelve of the sixteen combinations are therefore unaccounted for.

If there's one error in the input, there will always be two equally likely choices of what it really was, so the problem can't have an error-handling solution. For example, $[1,-1,-1,-1]$ is one sign flip away from both $[1,1,-1,-1]$ and $[1,-1,1,-1]$.

The design chosen, shown in the weight diagram below, identifies each line as depending on only two inputs. For example, the hidden neuron for the line through quadrants A and C depends only on A and C; the weights on B and D are 0 and aren't drawn.
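The single-layer argument and the one-error ambiguity described above can be spot-checked numerically. The sketch below is only an illustration: the helper names and the search grid are hypothetical, and a grid search cannot replace the algebraic argument, which rules out every real-valued choice of weights.

```python
from itertools import product

# Bipolar patterns read off the four inequalities, in the order they are listed.
MUST_FIRE = [(1, 1, -1, -1), (-1, -1, 1, 1)]      # weighted sum must exceed the threshold
MUST_NOT_FIRE = [(1, -1, 1, -1), (-1, 1, -1, 1)]  # weighted sum must stay below it
LINES = MUST_FIRE + MUST_NOT_FIRE
THRESHOLD = 1


def net_input(weights, x):
    """Weighted sum of a single neuron with no bias."""
    return sum(w * xi for w, xi in zip(weights, x))


def satisfies_all(weights):
    """True if the weights meet all four single-layer inequalities."""
    return (all(net_input(weights, x) > THRESHOLD for x in MUST_FIRE)
            and all(net_input(weights, x) < THRESHOLD for x in MUST_NOT_FIRE))


# Assumed search grid (not from the text): each weight in {-3.0, -2.5, ..., 3.0}.
GRID = [k / 2 for k in range(-6, 7)]
solutions = [w for w in product(GRID, repeat=4) if satisfies_all(w)]


def hamming(a, b):
    """Number of positions where two bipolar vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def flip(pattern, i):
    """Copy of the pattern with the sign of input i reversed (one error)."""
    return tuple(-v if j == i else v for j, v in enumerate(pattern))


def candidates(x):
    """Line patterns that are exactly one sign flip away from x."""
    return [p for p in LINES if hamming(p, x) == 1]


unaccounted = 2 ** 4 - len(LINES)
always_ambiguous = all(
    len(candidates(flip(p, i))) == 2 for p in LINES for i in range(4)
)

print(solutions, unaccounted, always_ambiguous)
```

The search returns no weight vectors, twelve of the sixteen inputs fall outside the four line patterns, and every single-error input is one flip away from exactly two lines, which matches the reasoning in the text.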
If A is +1 and C is +1, then $y_{in} = \tfrac{1}{2} + \tfrac{1}{2} - \tfrac{1}{2} = \tfrac{1}{2}$. The attached bias of $-\tfrac{1}{2}$ lets the sum be positive only if both of the inputs are positive. The same kind of decision is made for each line.

Next, another layer with two neurons has each neuron performing an OR function: one combines the two horizontal-line neurons and the other combines the two vertical-line neurons. If the inputs to the neural net are limited to the four lines, then the two inputs to an OR neuron never both fire +1, so each of these neurons strictly calls for an XOR, but an OR is simpler and will do just fine. This time the biases are positive. For example, if the hidden neuron for the top horizontal line fires +1, the horizontal OR neuron will receive $y_{in} = \tfrac{1}{2} - \tfrac{1}{2} + \tfrac{1}{2} = \tfrac{1}{2}$. This yields that the line is horizontal. But if neither horizontal-line neuron fires +1, then the horizontal OR neuron will receive $y_{in} = -\tfrac{1}{2} - \tfrac{1}{2} + \tfrac{1}{2} = -\tfrac{1}{2}$, yielding a negative one, which is a false for horizontal and, if we limit the input to the four line types, also a true for vertical.

Finally, the output neuron uses the policy that horizontal lines are denoted with +1 and verticals with -1. This time no bias is necessary, but the two weights have opposite signs, so a horizontal line will cause $y_{in} = (1)(\tfrac{1}{2}) + (-1)(-\tfrac{1}{2}) = 1$, while any vertical gives $y_{in} = (-1)(\tfrac{1}{2}) + (1)(-\tfrac{1}{2}) = -1$.

**note that all connections are feedforward**

A comment can be made about the need for hidden layers, to reiterate why a single-layer approach wouldn't work. The above is the minimum number of layers needed to solve the problem if we want to limit each layer to one decision. Above, the decisions were first identifying which of the four lines was represented, and then classifying the lines into different types. Without a hidden layer, there's no decision-making phase.
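The three-layer design described above can be traced in a few lines of code. This is a minimal sketch under stated assumptions, not the author's implementation: the function names are hypothetical, each neuron is assumed to output +1 when its net input is positive and -1 otherwise, and the quadrants are assumed to be laid out with A and B on the top row and C and D on the bottom row (the original diagram may differ).

```python
def sign(v):
    """Assumed bipolar activation: +1 for a positive net input, otherwise -1."""
    return 1 if v > 0 else -1


def and_neuron(a, b):
    """Hidden-layer neuron: weights 1/2 and 1/2, bias -1/2 (fires only if both are +1)."""
    return sign(0.5 * a + 0.5 * b - 0.5)


def or_neuron(a, b):
    """Second-layer neuron: weights 1/2 and 1/2, bias +1/2 (fires if either is +1)."""
    return sign(0.5 * a + 0.5 * b + 0.5)


def classify(A, B, C, D):
    """Return +1 for a horizontal line and -1 for a vertical line.

    Assumed layout (hypothetical): A top-left, B top-right,
    C bottom-left, D bottom-right.
    """
    # Layer 1: one neuron per line, each looking at only two inputs.
    top = and_neuron(A, B)
    bottom = and_neuron(C, D)
    left = and_neuron(A, C)
    right = and_neuron(B, D)

    # Layer 2: group the line detectors by type.
    horizontal = or_neuron(top, bottom)
    vertical = or_neuron(left, right)

    # Output: no bias, weights of opposite sign.
    return sign(0.5 * horizontal - 0.5 * vertical)


if __name__ == "__main__":
    for pattern in [(1, 1, -1, -1), (-1, -1, 1, 1), (1, -1, 1, -1), (-1, 1, -1, 1)]:
        print(pattern, classify(*pattern))
```

Under these assumptions the first two patterns come out as +1 and the last two as -1. Inputs outside the four line patterns are not handled meaningfully, in line with the limitation noted in the text.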