Standard support vector machines (SVMs)

advertisement

Proximal Support Vector Machine Using Local Information

Xubing Yang1,2 Songcan Chen1

Bin Chen1 Zhisong Pan3

1 Department of Computer Science and Engineering

Nanjing University of Aeronautics & Astronautics, Nanjing 210016, China

2 College of Information Science and Technology,

Nanjing Forestry University, Nanjing 210037, China

3. Institute of Command Automation,

PLA University of Science Technology, Nanjing 210007, China

Abstract: Instead of standard support vector machines (SVMs) that classify points to one of two disjoint

half-spaces by solving a quadratic program, the plane classifier GEPSVM (Proximal SVM Classification via

Generalized Eigenvalues) classifies points by assigning them to the closest of two nonparallel planes which

are generated by their corresponding generalized eigenvalue problems. A simple geometric interpretation of

GEPSVM is that each plane is closest to the points of its own class and furthest to the points of the other

class. Analysis and experiments have demonstrated its capability in both computation time and test

correctness. In this paper, following the geometric intuition of GEPSVM, a new supervised learning method

called Proximal Support Vector Machine Using Local Information (LIPSVM) is proposed. With the

introduction of proximity information (consideration of underlying information such as correlation or

similarity between points) between the training points, LIPSVM not only keeps aforementioned

characteristics of GEPSVM, but also has its additional advantages: (1) robustness to outliers; (2) each plane

is generated from its corresponding standard rather than generalized eigenvalue problem to avoid matrix

singularity; (3) comparable classification ability to the eigenvalue-based classifiers GEPSVM and LDA.

Furthermore, the idea of LIPSVM can be easily extended to other classifiers, such as LDA. Finally, some

experiments on the artificial and benchmark datasets show the effectiveness of LIPSVM.

Keywords: proximal classification; eigenvalue; manifold learning; outlier

1. Introduction

Standard support vector machines (SVMs) are based on the structural risk minimization (SRM) principle

and aim at maximizing the margin between the points of two-class dataset. For the pattern recognition case,

Corresponding author: Tel: +86-25-84896481-12106; Fax: +86-25-84498069; email: s.chen@nuaa.edu.cn (Songcan Chen),

xbyang@nuaa.edu.cn (Xubing Yang) , b.chen@nuaa.edu.cn (Bin Chen) and pzsong@nuaa.edu.cn (Zhisong Pan)

they have shown their outstanding classification performance [1] in many applications, such as handwritten

digit recognition [2,3], object recognition [4], speaker identification [5], face detection in images and text

categorization [6,7]. Although SVM is a powerful classification tool, it requires the solution of quadratic

programming (QP) problem. Recently, Fung and Mangasarian introduced a linear classifier, proximal

support vector machine (PSVM) [8], at KDD2001 as variation of a standard SVM. Different from SVM,

PSVM replaces the inequality with the equality in the defining constraint structure of the SVM framework.

Besides, it also replaces the absolute error measure by the squared error measure in defining the

minimization problem. The authors claimed that in doing so, the computational complexity can be greatly

reduced without resulting in discernible loss of classification accuracy. Furthermore, PSVM classifies

two-class points to the closest of two parallel planes that are pushed apart as far as possible. GEPSVM [9] is

an alternative version to PSVM, which relaxes the parallelism condition on the PSVM. Each of the

nonparallel proximal planes is generated by a generalized eigenvalue problem. Its performance has been

showed in both computational time and test accuracy in [9]. Similar to PSVM, the geometric interpretation

of GEPSVM is that each of two nonparallel planes is as close as possible to one of the two-class data sets

and as far as possible from the other class data set.

In this paper, we propose a new nonparallel plane classifier LIPSVM for binary classification. Different

from GEPSVM, LIPSVM introduces proximity information between the data points into constructing

classifier. As for so-called proximity information, it is often measured by the nearest neighbor relations

lurking in the points. Cover and Hart [10] had firstly concluded that almost the half of the classification

information is contained in the nearest neighbors. For the purpose of classification, the basic assumption

here is that the points sampled from the same class have higher correlation/similarity (for example, they are

sampled from an unknown identical distribution) than those from the different ones [11]. Furthermore,

especially in recent years, many researchers have reported that most of the points of a dataset are highly

correlated, at least locally, or the data set has inherent geometrical property (for example, a manifold

structure) [12,13]. This issue explains the successes of the increasingly popular manifold learning methods,

such as Locally Linear Embedding (LLE) [14], ISOMAP [15], Laplacian Eigenmap [16] and their extensions

[17,18,19]. Although those algorithms are efficient for discovering intrinsic feature of the lower-dimensional

manifold embedded in the original high-dimensional observation space, up to now many open problems still

have not been efficiently solved for supervised learning, for instance, data classification. One of the most

important reasons is that it is not necessarily reasonable to suppose that the manifolds in different class will

be well-classified in the same lower-dimension embedded space. Furthermore, the intrinsic dimensionality of

a manifold is usually unknown a priori and can not be reliably estimated from the dataset. In this paper,

quite different from the aforementioned manifold learning methods, LIPSVM needs not consider how to

estimate intrinsic dimension of the embedded space and only requests the proximity information between

points which can be derived from their nearest neighbors.

We highlight the contributions of this paper.

1) Instead of generalized eigenvalue problems in GEPSVM algorithm, LIPSVM only needs to solve

standard eigenvalue problems.

2) With introducing this proximal information into constructing LIPSVM, we expect that the so-developed

classifier is to be robust to outliers.

3) In essence, GEPSVM is derived by a generalized eigenvalue problem through minimizing a kind of

Rayleigh quotient. For the two real symmetric matrices appearing in GEPSVM criterion, if both are positive

semi-definite or singular, an ill-defined operation will be yielded due to floating-point imprecisions. So,

GEPSVM adds a perturbation to one of the singular (or semi-definite positive) matrix. Although the authors

also claimed that this perturbation acts as some kind of regularization, the real influence in this setting of

regularization is not yet well understood. In contrast, LIPSVM need not to care about the matrix singularity

due to adoption of a similar formulation to the Maximum Margin Criterion (MMC) [20], but it is worthwhile

noting that MMC just is a dimensionality reduction method rather than a classification method.

4) The idea of LIPSVM is applicable to a wide range of binary classifiers, such as LDA.

The rest of this paper is organized as follows. In Section 2, we review some basic work about GEPSVM.

Mathematical description of LIPSVM will appear in section 3. In Section 4, we extend this design idea of

LIPSVM to LDA. And in Section 5, we provide the experimental results on some artificial and public

datasets. Finally, we conclude the whole paper in Section 6.

2. A brief review on GEPSVM algorithm

GEPSVM [9] algorithm has been validated that it is effective for binary classification. In this section, we

briefly illustrate its main idea.

Given a training set of two pattern classes X

(i )

[ x1(i ) , x2(i ) ,

, xN(ii) ] , i = 1, 2 with Ni n-dimensional

patterns in the ith class. Throughout the paper, superscript “T” denotes transposition, and “e” is a

case-dependent dimensional column vector whose entries are all ones. Denote the training set by a N1n

matrix A (Ai, the ith row of A, corresponds to the ith pattern of Class 1) and the N2n matrix B (Bi has the

same meaning of Ai), respectively. GEPSVM attempts to seek two non-parallel planes (eq (1)) in

n-dimensional input space respectively,

xT w1 r1 0, xT w2 r2 0 ,

(1)

where w i and ri mean the weight and threshold of the given ith plane. The geometrical interpretation,

each plane should be closest to the points of its own class and furthest from the points of the other class,

leads to the following optimization problem,

min

( w, r ) 0

|| Aw er ||2 || [ wr ] ||2

|| Bw er ||2

,

(2)

where is a nonnegative regularization factor, and ||.|| means the 2-norm. Let

G: = [A e]T[A e] + I ,

H: = [B e]T[B e],

z: =[wT r]T ,

(3)

then, w.r.t the first plane of (1), formula (2) becomes:

min r ( z ) :

z 0

z T Gz

,

z T Hz

(4)

where both matrices G and H are positive semi-definition when =0. Formula (4) can be solved by the

following generalized eigenvalue problem

G z = H z (z 0).

(5)

When either of G and H in eq (5) is a positive definite matrix, the global minimum of (4) is achieved at an

eigenvector of the generalized eigenvalue problem (5) corresponding to the smallest eigenvalue. So in many

real-world cases, a regularization factor must be set to a positive constant, especially in some Small Size

Sample (SSS) problems. The 2nd plane can be obtained with a similar process.

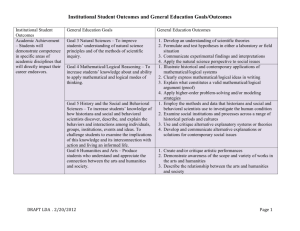

Under the foresaid optimization criterion, GEPSVM attempts to estimate planes in input space for the

given data, that is, each plane is generated or approximated by the data points of its corresponding class. In

essence, the points in the same class are fitted using a linear function. However, in the viewpoint of

regression, it is not quite reasonable to take an outlier (a point far from the most samples) as a normal sample

in data fitting methodology when outliers are present. Furthermore, the outliers usually conduct erroneous

data information, even misguide a fitting. So they can heavily affect fitting effect in most cases as shown in

Fig. 1 where two outliers are added. Obviously, the plane generated by GEPSVM is heavily biased due to

presence of the two outliers. Therefore, in this paper, we attempt to define a new robust criterion to seek the

planes which not only substantially considers original data distribution, but also can be resistant to outliers

(see red dashed lines in Fig. 1, which are generated from LIPSVM).

C1 points

C2 points

LIPSVM

GEPSVM

margin points

Fig.1 The planes learned by GEPSVM and LIPSVM, respectively. The red (dashed) lines come from LIPSVM, and the black

(solid) ones, from GEPSVM. Data points in Class 1 are symbolize with “o”, and in Class 2, with “□”. Those symbols with

additional “+” stand for marginal points (k2-nearest neighbors to Class 1) in Class 2. The two points, far away from most of

data points in Class 1, can be thought as outliers. It is also illustrates the intra-class graph and its connected relationship of data

points in Class 1.

In what follows, we detail our LIPSVM.

3. Proximal Support Vector Machine Using Local Information (LIPSVM)

In this section, we introduce our novel classifier LIPSVM, which contains the following two steps.

In the first step, constructing the two graphs characterize the intra-class denseness and the inter-class

separability respectively. Each vertex in the graphs corresponds to a sample of the given data, as described

in many graph-based machine learning methods [13]. Due to the one-to-one correspondence between

“vertex” and “sample”, we will not strictly distinguish them hereafter. In the intra-class graph, an edge

between a vertex pair is added when the corresponding sample pair is each other’s k1-nearest neighbors

(k1-NN) in the same class. In the inter-class graph, the vertex pair, whose corresponding samples come

from different classes, is connected when one of the pair is a k2 -NN of the other. For the intra-class case,

the points in high density regions (hereafter we call them interior points) have more chance to become

nonzero-degree vertexes; while the points in low density regions, for example, outliers, become more likely

isolated vertexes (zero-degree). For the inter-class case, the points in the marginal regions (marginal points)

have more possibility to become nonzero-degree vertexes. Intuitively, if a fitting plane of one class is far

away from the marginal points of the other class, at least in linear-separable case, this plane may be far

away from the rest.

In the second step, only those nonzero-degree points are used to training classifier. Thus, LIPSVM can

restrain outlier to great extent (see Fig.1).

The training time cost of LIPSVM is from the two aspects: One is from selection of interior points and

marginal points, and the other from the optimization of LIPSVM. The following analysis indicates that

LIPSVM performs faster than GEPSVM: 1) LIPSVM just requires solving a standard eigenvalue problem,

while GEPSVM needs to solve a generalized eigenvalue problem; 2) After finishing samples selection, the

size of the selected samples used to training LIPSVM is smaller than that of the GEPSVM.. For example, in

Fig.1, the first plane (the top dash line of Fig.1) of LIPSVM is closer to the interior points of Class 1 and

faraway from the marginal points of Class 2. Accordingly, the training set size of LIPSVM is smaller than

that of GEPSVM.

In the next subsection, we firstly derive the linear LIPSVM. Then, we develop its corresponding nonlinear

one with kernel tricks.

3.1 Linear LIPSVM

Corresponding to Fig.1, the two adjacent matrices of each plane are respectively denoted by S and R and

defined as follows:

l ( 0) xl Nek1 (m) xm Nek1 (l )

Slm

,

otherwise

0

(6)

m ( 0) xm Nek2 (l )

Rlm

,

otherwise

0

(7)

where Nek1 (l ) denotes a set of the k1-nearest neighbors in the same class of the sample x l , and Nek1 (l ) a

set of data points composed of k2-nearest neighbors (k2-NN) in the different class of the sample x l . When

Slm > 0 or Rlm > 0 , an undirected edge between x l and x m is added to the corresponding graph. As a

result, a linear plane of LIPSVM can be produced from those nonzero-degree vertexes.

3.1.1 Optimization criterion of LIPSVM

Analogously to GEPSVM, LIPSVM also tries to seek two nonparallel planes as described in eq (1). With

the similar geometric intuition of GEPSVM, we define an optimization criterion to determine the plane of

Class 1 as follows:

2

m i n j 11 l 11S jl w(1T xl( 1 ) r 1 2) j 1

R wT(1xm (2 r)

1 m 1 jm

N

s.t .

N

N

w1| |= | |

N

1

)2

(8)

1.

(9)

By simplifying (8), we obtain the following expression:

l 1 dl (w1T xl(1) r1 )2 m1 fm (w1T xm(2) r1 )2

N1

N2

(10)

where the weight dl j 11 S jl and f m j 11 R jm , l 1, 2,

N

, N1; m 1, 2,

N

, N2 .

For geometrical interpretability (see 3.1.3), we define the weights dl and f m as:

ìï 1,

dl = ïí

ïï 0,

î

ìï 1,

f m = ïí

ïï 0,

î

$ j0 , S j0l ¹ 0

(11)

otherwise

$ m0 , R jm0 ¹ 0

(12)

otherwise

where j0 Î {1, 2,L , N1} and m0 Î {1, 2,L , N2 } .

Next we discuss how to solve this optimization problem.

3.1.2 Analytic Solution and Theoretical Justification

Define a Lagrange multiplier function based on the objective function (10) and equality constraints (9) as

follows:

L(w1 , r1 , )

N1

l 1

dl (w1T xl(1) r1 )2 m21 f m (w1T xm(2) r1 )2 (w1T w1 1) .

N

(13)

Setting the gradients of L with respect to w1 and r1 equal to zero gives the following optimality conditions:

L

N

N

2 l 11 dl ( xl(1) )( xl(1) )T m21 f m ( xm(2) )( xm(2) )T w1

w1

2

L

2

r1

(14)

d m21 f m r1 0 .

l 1 l

(15)

N1

l 1

dl xl(1) m21 f m xm(2) r1 2 w1 ,

N

d ( xl(1) )T m21 f m ( xm(2) )T w1

l 1 l

N1

N

2

N1

N

Simplifying (14) and (15), we obtain the following simple expression with matrix form:

T

( X1 DX T1 X FX

( De1 X Fe2 r) 1 w ,

2

2)w 1 X

1

(16)

(eT D X1 T eT F 2XT)

where X i ( x1(i ) , x2(i ) ,

and n2

N2

m1

w

n( 1n 2)r 1 ,0

(17)

1

, xN(ii) ), D diag(d1 , d 2 ,

, d N1 ) , F diag( f1 , f 2 ,

, f N2 ) , n1 l 11 dl ,

N

fm .

When n1 = n2 , the variable r1 disappears from expression (17). So, we discuss the solutions of the

proposed optimization criterion in two cases:

1) when n1 n2 , the optimal solution to the above optimization problem is obtained from solving the

following eigenvalue problem after substituting eq (17) into (16).

XTX T w1 w1

(18)

r1 eT MX T w1 /(n1 n2 )

(19)

where X [ X1 , X 2 ] , M diag ( D, F ) , and T M Mee M /(n1 n2 ) .

T

2) when n1 n2 , r1 disappears from eq. (17). And eq.(17) becomes:

(eT DX1T eT FX 2T ) w1 0 .

(20)

T

Left multiplying eq. (16) by w1 , and substituting eq (20) into it, we obtain a new expression as follows:

w1T XMX T w1 .

When

(21)

is an eigenvalue of the real symmetrical matrix XMX T , the solutions of eq. (21) can be

obtained from the following standard eigen-equation (details is described in Theorem 1)

XMX T w1 w1 .

In this situation, r1 can not be solved through (16) and (17). So instead, we directly define r1 with the

intra-class vertexes as follows:

r1 w1T l 11 dl xl(1) / n1 = w1T X1 De / n1

N

(22)

An intuition for the above definition is from that a fitting plane/line passing through the center of the

given points has less regression loss in a sense of MSE [21].

Next, importantly, we will prove that the optimal normal vector w1 of the first plane is exactly an

eigenvector of the aforementioned eigenvalue problem corresponding to a smallest eigenvalue (Theorem 2).

Theorem 1.

Let A nn be a real symmetric matrix, for any unit vector w n , if the w is a solution

of the eigen-equation Aw w , then it must satisfy w T Aw , where

satisfies w T Aw and is an eigenvector of matrix A , then

R . Conversely, if w

and w must satisfy Aw w .

Theorem 2. The optimal normal vector w1 of the first plane is exactly the eigenvector corresponding to

smallest eigenvalue of the eigen-equation derived from objective (8) subject to constraint (9).

Proof: we rewrite eq. (10) (equivalent to objective (8)) as follows:

Let l 11 dl (w1T xl(1) r1 )2 m21 f m (w1T xm(2) r1 )2

N

N

w1T l 11 dl ( xl(1) )( xl(1) )T w1 2r1 l 11 dl ( xl(1) )T w1 r12 l 11 dl

N

N

N

w1T m21 f m ( xm(2) )( xm(2) )T w1 2r1 m21 f m ( xm(2) )T w1 r12 m21 f m

N

N

N

Simplifying the above expression and representing it in matrix form, we obtain

w1T XMX T w1 2r1eT MX T w1 (n1 n2 )r12

(23)

1) When n1 n2 , substituting eq. (18), (19) and (9) into (23), we get the following expression.

w1T XMX T w1 w1T X ( MeeT M ) X T w1 /(n1 n2 )

w1T XTX T w1

=

(24)

Thus, the optimal value of the optimization problem is the smallest eigenvalue of the eigen-equation (18).

Namely, w1 is an eigenvector corresponding to the smallest eigenvalue.

2) When n1 = n2 , eq (17) becomes

(eT DX1T eT FX 2T ) w1 0 .

(25)

Eq (25) is equivalent to the following expression:

eT MX T w1 = 0 .

Substituting (26) and (21) into (23), we obtain

w1T XMX T w1 2r1eT MX T w1 (n1 n2 )r12 = w1T XMX T w1 = ,

where

is still an eigenvalue of eq (18).

This ends our proof of the Theorem 2. #

(26)

3.1.3 Geometrical Interpretation

According to the defined notations, eq (10) implies that LIPSVM only concerns those samples whose

(1)

weights (.dl or fm) are greater than zero. For example, xi will be present in eq (10) if and only if its

weight di 0 . As a result, the points of a training set of LIPSVM are selectively generated by the two NN

matrices S and R, which can lead LIPSVM to robustness due to eliminating or restraining effect of outliers.

Fig.1 gives an illustration in which the two outliers in Class 1 are eliminated during selecting samples

process. Similarly, x m , its corresponding marginal point, will be kept in (10) when f m 0 . In most cases,

(2)

the number of marginal points is far less than that of the given points. Thus, the number of the samples used

to train LIPSVM can be reduced. Fig. 1 also illustrates those marginal points as marked “+”. Since the

T

distance of a point x to a plane x w r 0 is measured as | x w r | / || w || [22], the expression

T

( w1T x r1 ) 2 stands for the square distance of the points x to the plane xT w1 r1 0 . So, the goal of

training LIPSVM is to seek the plane x w1 r1 0 as close to the interior points in Class 1 as possible

T

and as faraway from the marginal points in Class 2 as possible. This is quite consistent with the foresaid

optimization objective.

Similarly, the second plane for the other class can be obtained. In the following, we are in a position to

describe its nonlinear version.

3.2 Nonlinear LIPSVM (Kernelized LIPSVM)

In real world, the problems encountered can not always effectively be handled using linear methods. In

order to make the proposed method able to accommodate nonlinear cases, we extend it to the nonlinear

counterpart by well-known kernel trick [22,23]. These non-linear kernel-based algorithms, such as KFD

[24,25,26], KCCA [27], and KPCA [28,29], usually use the “kernel trick” to achieve their non-linearity. This

conceptually corresponds to first mapping the input into a higher-dimensional feature space (RKHS:

Reproducing Kernel Hilbert Space) with some non-linear transformation. The “trick” is that this mapping is

never given explicitly, but implicitly induced by a kernel. Then those linear methods can be applied in this

newly-mapped data space RKHS [30,31]. Nonlinear LIPSVM also follows this process.

(1)

Rewrite the training set x1 ,

, xN(1)1 , x1(2) ,

, xN(2)2 as x1 , x2 ,L , x N . For convenience of description,

we define Empirical Kernel Map (EMP, for more detail please sees [23,32]) as follows:

Kemp : x Rn

Kemp ( x) R N

where Kemp ( x) [k ( x1 , x),

, k ( xN , x)]T . Function k ( x , y ) stands for an arbitrary Mercer kernel, for

any n-dimensional vectors x and y, which maps them into a real number in R. A frequently used kernel in

nonlinear classification is Gaussian with the expression k ( x, y) exp( || x y ||2 / 2 ) , where

is

a positive constant and called as the bandwidth.

Similarly, we consider the following kernel-generated nonlinear plane, instead of the aforementioned

linear case in input space.

uiT K e m(px )+ b =i 0, i 1, 2.

(27)

Due to the k1-NN and k2-NN relationship graphs and matrices (denoted by S and R ), we consider the

following optimization criterion instead of the original one in the input space as (8) and (9) with an entirely

similar argument.

min j 11 l 11 S jl (u1T Kemp ( xl(1) ) b1 )2 j 11 m21 R jm (u1T Kemp ( xm(2) ) b1 )2

(28)

s.t .

(29)

N

N

u1 | |= | |

N

N

1.

With an analogous manipulation to the linear case, we also have the following eigen-system

(when n1 n2 )

YTY T u1 u1

where Y [ K emp ( x1 ), K emp ( x2 ),

, K emp ( x N )] ,

M diag ( D, F ) , and T M Mee T M

/(n1 n2 ) . Specification about the parameters is completely analogous to linear LIPSVM.

3.3 Links with previous approaches

Due to its simplicity, effectiveness and efficiency, LDA is still a popular dimensionality reduction in

many applications such as handwritten digit recognition [33], face detection [34,35], text categorization [36]

and target tracking [37]. However, it also has several essential embarrassments such as singularity of the

scatter matrix in SSS case and the problem of rank limitation. To attack these limitations, in [42], we have

previously designed alternative LDA (AFLDA) by introducing a new discriminant criterion. AFLDA

overcomes the rank limitation and at the same time, mitigates the singularity. Li and Jiang [20] exploited the

average maximal margin principle to define a so-called Maximum Margin Criterion (MMC) and derived

alternative discriminant analysis approach. Its main difference from LDA criterion is to adopt the trace

difference instead of the trace ratio between the between-class scatter and the within-class scatter, as a result,

bypassing both the singularity and the rank limitation. In [13], Marginal Fisher Analysis (MFA) establishes a

similar formulation of the trace ratio between the two scatters to LDA but further incorporates manifold

structure of the given data to find the projection directions in the PCA transformed subspace. Doing so

avoids the singularity. Though there are many methods to overcome the problems, their basic philosophy are

similar, thus, we just mention a few above here. Besides, these methods are largely dimensionality reduction,

despite of different definitions for the scatters in the objectives while in order to perform classification task

after dimensionality reduction, they all use the simple and popular nearest neighbor and thus are generally

also viewed as an indirect classification method. In contrast, SVM is a directly-designed classifier based on

the SRM principle by maximizing the margin between the two-class given data points and has been shown

superior classification performance in most real cases. However, SVM requires solving a QP problem.

Unlike its solving as described in section I, PSVM [8] utilizes 2-norm and equality constraints and only

needs to solve a set of linear equations for seeking two parallel planes. While GEPSVM [9] relaxes this

parallelism and aims to obtain two nonparallel planes from two corresponding generalized eigenvalue

problems, respectively. However, it also encounters the singularity problem for which the authors used the

regularization technique. Recently, Guarracino and Cifarelli gave a more flexible setting technique for the

regularization parameter to overcome the same singularity problem and named so-proposed plane classifier

as ReGEC [38,39]. ReGEC seeks two planes simultaneously just from a single generalized eigenvalue

equation (the two planes correspond respectively to the maximal and minimal eigenvalues), instead of two

equations in GEPSVM. In 2007, an incremental version of ReGEC, termed as I-ReGEC[40], is proposed,

which first performed a subset selection and then used the subset to train ReGEC classifier for performance

gain. A common point of these plane-type classifiers in overcoming the singularity all adopt the

regularization technique. However, for one thing, the selection of the regularization factor is a key to

performance of solution and still open up to now. For another thing, the introduction of the regularization

term in GEPSVM unavoidably departs from its original geometricism partially. A major difference of our

LIPSVM from them is no need of regularization due to that the solution of LIPSVM is just an ordinary

eigen-system. Interestingly, the relation between LIPSVM and MMC is quite similar to that between

GEPSVM and regularized LDA [41]

LIPSVM is developed by fusing proximity information so as to not only keep the characteristics, such as

geometricism of GEPSVM, but also possess its own advantages as described in Section I. Extremely, when

the number of NN, k, takes large enough, for instance, set k=N, all the given data points can be used for

training LIPSVM. As far as geometrical principle, i.e. each approximate plane is as closest to data points of

its own class as possible and as furthest to points of the other class as possible, the geometricism of LIPSVM

is in complete accordance with that of GEPSVM. So, with the inspiration of MMC, LIPSVM can be seen as

a generalized version of GEPSVM.

4. A byproduct inspired by LIPSVM

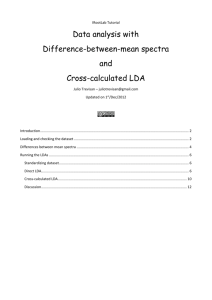

LDA [42] has been widely used for pattern classification and can be analytically solved by its

corresponding eigen-system. Fig.2 illustrates a binary classification problem and the data points of each class

are generated from a non-Gaussian distribution. The solid (black) line stands for the LDA optimal decision

hyperplane which is directly generated from the two-class points, while the dashed (green) line is obtained

by LDA only with those so-called marginal points.

C1 points

C2 points

support vectors

margin points

LDA

MarginLDA

Fig.2 Two-class non-Gaussian data points and their discriminant planes generated by LDA. Symbol “o” stands for those points

in Class 1, while “□” is for Class 2. The marginal points, marked “x”, come from aforementioned inter-class relationship

graphs. The solid (black) line is a discriminant plane obtained by LDA with all training samples, while for the dashed (green)

one, obtained by LDA only with those marginal points.

From Fig.2 and the design idea of LIPSVM, a two-step LDA can be developed through using those

interior points or marginal points in the two classes. Firstly, select those nonzero-degree points of k-NN

graphs from training samples; secondly, train LDA with the selected points. Further analysis and experiments

are discussed in Section 5.

In what follows, we turn to our experimental tests and some comparisons.

5. Experimental Validations and Comparisons

To demonstrate the performance of our proposed algorithm, we report results on one synthetic toy-problem

and UCI [43] real-world datasets in two parts: 1) comparisons among LIPSVM, ReGEC, GEPSVM and

LDA; 2) comparisons between LDA and their variants. The synthetic data set named “CrossPlanes” consists

of two-class samples generated respectively from two intersecting planes (lines) plus Gaussian noise. In this

section, all computational time was obtained on a machine running Matlab 6.5 on Windows xp with a

Pentium IV 1.0GHz processor and 512 megabytes of memory.

5.1 Comparisons among LIPSVM, ReGEC, GEPSVM and LDA

In this subsection, we test the foresaid classifiers with linear and Gaussian kernel, respectively.

Table 1 shows a comparison of LIPSVM versus ReGEC, GEPSVM, LDA. When a linear kernel is used,

ReGEC has two regularization parameter δ1 and δ2, while each of GEPSVM and LIPSVM has a single

parameter: δ for GEPSVM and k for LIPSVM1. Parameters δ1 and δ2 were selected from {10i|i= -4,-3…

3, 4}, δ and C were selected from the values {10i|i=-7,-6… 6, 7}, and k of NN in LIPSVM was selected

from {2, 3, 5, 8} by using 10 percent of each training set as a tuning set. According to the suggestion [9],

the tuning set of GEPSVM was not returned to the training fold to learn the final classifier once the

parameter was determined. When facing singularity of both augmented sample matrices, a small disturbance,

such as ηI, will be added to the G in ReGEC. In addition to reporting the average accuracies across the 10

folds, we also performed paired t-tests [44] in comparing LIPSVM to ReGEC, GEPSVM and LDA. The

p-value for each test is the probability of the observed or a greater difference assumption of the null

hypothesis that there is no difference between test set correctness distributions. Thus, the smaller the p-value,

the less likely that the observed difference resulted from identical test set correctness distributions. A typical

threshold for p-value is 0.05. For example, the p-value of the test when comparing LIPSVM and GEPSVM

on the Glass data set is 0.000 (< 0.05), meaning that LIPSVM and GEPSVM have different accuracies on

this data set. Table 1 shows that GEPSVM, ReGEC and LIPSVM significantly outperform LDA on the

CrossPlanes.

1

The parameter k, is the number of NN. In the experiments, we use Euclidean distance to evaluate the nearest neighbors of the

samples xi with assumption k1=k2=k.

Table 1. Linear Kernel LIPSVM, ReGEC, GEPSVM and LDA, 10-fold average testing correctness (Corr) (%) and

their

standard deviation (STD), p-values, average 10-fold training time (Time, sec.).

Dataset

m×n

LIPSVM

Corr ± STD

Time (s)

ReGEC

Corr ± STD

p-value

Time (s)

GEPSVM

Corr ± STD

p-value

Time (s)

LDA

Corr ± STD

p-value

Time (s)

Glass

214×9

87.87±1.37

0.0012

80.46±6.01*

0.012

0.0010

63.28±4.81*

0.000

0.0072

91.029±2.08

0.131

0.0068

Iris23

100×4

95.00±1.66

0.0011

90.00±4.00

0.142

0.0008

93.00±3.00

0.509

0.0055-

97.00±1.53

0.343

0.0006

Sonar

208×60

80.43±2.73

0.0150

67.14±5.14*

0.001

0.0043

76.00±2.33

0.200

0.0775

71.57±2.07*

0.016

0.0037

Liver

345×6

72.48±2.48

0.0013

66.74±3.67

0.116

0.0019

59.13±2.03*

0.002

0.043

61.96±2.59*

0.021

0.0009

Cmc

1473×8

92.64±0.51

0.0020

75.52±3.88*

0.000

0.0028

66.52± 1.02*

0.000

0.0199-

67.45±0.65*

0.000

0.0020-

Check

1000×2

51.60±1.30

0.0009

51.08±2.34

0.186

0.0007

50.35±1.25

0.362

0.0098

48.87±1.43

0.229

0.0013-

Pima

746×8

76.04±1.11

0.0070

74.88±1.70

0.547

0.0034

75.95±1.12

0.912

0.0537

76.15±1.30

0.936

0.0021

Mushroom

8124×22

80.12±3.21

6.691

80.82±1.87

0.160

8.0201

81.10±1.38

0.352

9.360

75.43±2.35

0.138

6.281

CrossPlanes

200×2

96.50±1.58

0.0008

95.00±1.00

0.555

0.0201

96.50±1.58

1.000

0.0484

53.50±17.33*

0.000

0.0160

The p-values were from a t-test comparing each algorithm to LIPSVM. Best test accuracies are in bold. An asterisk (*)

denotes a significant difference from LIPSVM based on p-value less than 0.05, and underline number means minimum training

time. Data set Iris23 is a fraction of UCI Iris dataset with versicolor vs. virginica.

Table 2 reports a comparison among the four eigenvalue-based classifiers using a Gaussian kernel. The

kernel width-parameter σ was chosen from the value {10i|i = -4,-3… 3, 4} for all the algorithms. The

tradeoff parameter C for SVM was selected from the set {10i|i = -4,-3… 3, 2}, while the regularization

factors δ in GEPSVM and δ1, δ2 in ReGEC were all selected from the set {10i|i = -4,-3… 3, 4}. For KFD

[24], when the symmetrical matrix N was singular, the regularization trick was adopted by setting N=N+ηI,

where η (>0) was selected from {10i|i = -4,-3… 3, 4}. I is an identity matrix with the same size of N. The k

in the nonlinear LIPSVM is the same as that in its linear case. Parameter selection was done by comparing

the accuracy of each combination of parameters on a tuning set consisting of a random 10 percent of each

training set. On the synthetic data set CrossPlanes, Table 2 reports that LIPSVM, ReGEC and GEPSVM are

also significantly outperform LDA.

Table 2. Gaussian Kernel LIPSVM, ReGEC2, GEPSVM, SVM and LDA, 10-fold average testing correctness (Corr) (%)

and their standard deviation (STD), p-values, average 10-fold training time (Time, sec.)

Dataset

m×n

LIPSVM

Corr ± STD

Time (s)

ReGEC

Corr ± STD

p-value

Time (s)

GEPSVM

Corr ± STD

p-value

Time (s)

LDA

Corr ± STD

p-value

Time (s)

WPBC

194×32

77.51±2.48

76.87±3.63

0.381

0.1939

63.52±3.51*

0.000

3.7370

65.17±2.86*

0.001

0.1538

88.10±3.92*

0.028

28.41

87.43±1.31*

0.001

40.30

93.38±2.93

0.514

24.51

0.4068

91.46±8.26*

0.012

0.8048

46.99±14.57*

0.000

1.5765

87.87±8.95*

0.010

0.6049

Glass

214×9

97.64±7.44

0.0500

93.86±5.31

0.258

0.2725

71.04±17.15*

0.002

0.5218

89.47±10.29

0.090

0.1775

Cmc

1473×8

92.60±0.08

34.1523

93.05±1.00

0.362

40.4720

58.50±12.88*

0.011

57.2627

82.74±4.60*

0.037

64.4223

WDBC

569×30

90.17±3.52

1.9713

91.37±2.80

0.225

5.4084

37.23±0.86*

0.000

5.3504

92.65±2.36

0.103

3.3480

Water

116×38

79.93±12.56

0.1543

57.11±3.91*

0.003

0.1073

45.29±2.69*

0.000

1.3229

66.53±18.06*

0.036

0.0532

CrossPlanes

200×2

98.75±1.64

0.2039

98.00±0.00

1.00

1.9849

98.13±2.01

0.591

2.2409

58.58±10.01*

0.000

1.8862

0.0908

Check

1000×2

92.22±3.50

31.25

Ionosphere

351×34

98.72±4.04

The p-values were from a t-test comparing each algorithm to LIPSVM. Best test accuracies are in bold. An asterisk (*)

2

Gaussian ReGEC Matlab code available at: http://www.na.icar.cnr.it/~mariog/

denotes a significant difference from LIPSVM based on p-value less than 0.05, and underline number means minimum training

time.

5.2 Comparisons between LDA and its extensions

In this subsection, we made comparisons on computational time and test accuracies among LDA and their

extended versions. In order to avoid unbalanced classification problem, the extended classifiers, respectively

named as Interior_LDA and marginal_LDA , were trained on two-class interior points and marginal points.

Tables 3 and 4 report comparisons between LDA and its variants, respectively.

Table 3. Linear kernel LDA and its variants: Interior_LDA and Marginal_LDA, 10-fold average testing

correctness ±STD, p-value, average 10-fold training time (Time, sec.)

Dataset

m×n

LDA

Correctness ± STD

Time(seconds)

Interior_LDA

Correctness ± STD

p-value

Time(seconds)

Marginal_LDA

Correctness ± STD

p-value

Time(seconds)

Glass

214×9

91.03±6.58

0.202

90.63±6.37

0.343

0.094

92.13±4.78

0.546

0.005

Sonar

208×60

71.54±6.55

0.192

71.21±8.33

0.839

0.072

72.29±8.01

0.867

0.023

Liver

345×6

61.96±8.18

0.728

67.29±6.45*

0.007

0.350

64.86±7.80*

0.024

0.121

Cmc

1473×8

77.45±2.07

60.280

77.92±2.34

0.178

25.227

75.86±3.08

0.56

0.253

Ionosphere

351×34

84.92±6.74

0.867

88.23±4.15

0.066

0.053

85.80±6.21

0.520

0.023

Check

1000×2

48.87±4.43

0.0013

51.62±5.98

0.254

0.0030

52.01±4.32

0.130

0.0011

Pima

746×8

76.15±4.12

0.0021

76.58±3.33

0.558

0.0010

75.39±5.00

0.525

0.0005

The p-values were from a t-test comparing LDA variants to LDA. Best test accuracies are in bold. An asterisk (*) denotes a

significant difference from LDA based on p-value less than 0.05, and underline number means minimum training time.

Table 4. Gaussian kernel LDA, Interior_LDA and Marginal_LDA, 10-fold average testing correctness±STD,

p-value, average 10-fold training time (Time, sec.).

Dataset

m×n

LDA

Correctness ± STD

Time(s)

Interior_LDA

Correctness ± STD

p-value

Time(s)

Marginal_LDA

Correctness ± STD

p-value

Time(s)

Pima

746×8

66.83±4.32

6.354

63.46±6.45

0.056

2.671

65.10±6.60

0.060

0.478

Ionosphere

351×34

91.55±6.89

0.658

89.78±3.56

0.246

0.052

73.74±7.24*

0.000

0.019

Check

1000×2

93.49±4.08

15.107

92.54±3.30

0.205

10.655

86.64±3.37*

0.001

0.486

Liver

345×6

66.04±9.70

0.641

64.43±8.52

0.463

0.325

57.46±9.29*

0.012

0.092

Monk1

432×6

66.39±7.57

1.927

68.89±8.86

0.566

0.381

65.00±9.64

0.732

0.318

Monk2

432×6

57.89±8.89

1.256

57.60±8.76

0.591

0.420

67.89±8.42

0.390

0.186

Monk3

432×6

99.72±0.88

1.276

99.72±0.88

1.000

0.243

98.06±1.34*

0.005

0.174

The p-values were from a t-test comparing LDA variants to LDA. Best test accuracies are in bold. An asterisk (*) denotes a

significant difference from LDA based on p-value less than 0.05, and underline number means minimum training time.

Table 3 says that for linear case, LDA and its variants have insignificant performance difference on most

data sets. But Table 4 indicates that a significant difference exists between nonlinear LDA and

Marginal_LDA. Furthermore, compared to its linear one, as described in [24], the nonlinear LDA is more

likely prone to singular due to higher (even infinite) dimensionality in kernel space.

5.3 Comparison between LIPSVM and I-ReGEC

As mentioned before, the recently-proposed I-ReGEC [40] also involves a subset selection and is much

related to our work. However, it is worth to point out several differences between LIPSVM and I-ReGEC: 1)

I-ReGEC adopts an incremental fashion to find the training subset while LIPSVM does not, 2) I-ReGEC is

sensitive to its initial selection as the authors declared, while ours does NOT involve such selection and thus

does not suffer from such a problem; 3) I-ReGEC seeks its two nonparallel hyperplanes from one single

generalized eigenvalue problem with respect to all the classes, while LIPSVM does it from corresponding

ordinary eigenvalue problem with respect to each class;4)I-ReGEC does not take underlying proximity

information between data points into account in constructing classifier while LIPSVM just does.

In what follows, we give a comparison of their classification accuracies using Gaussian kernel and

tabulate the results in Table 5 (where I-ReGEC results are directly copied from [40])

Table 5 Test accuracies of I-ReGEC and LIPSVM with Gaussian kernel

dataset

I-ReGEC

Test accuracy

LIPSVM

Test accracy

Banana

85.49

86.23

German

73.50

74.28

Diabetis

74.13

75.86

Bupa-liver

63.94

70.56

WPBC*

60.27

64.35

Thyroid

94.01

94.38

Flare-solar

65.11

64.89

Table 5 says that in 6 out of the 7 datasets, the test accuracies of LIPSVM are better than or comparable to

those of I-ReGEC.

6. Conclusion and Future Work

In this paper, following the geometrical interpretation of GEPSVM and fusing the local information into

the design of classifier, we propose a new robust plane classifier termed as LIPSVM and its nonlinear

version derived by so-called kernel techniques. Inspired by the MMC criterion for dimensionality reduction,

we define a similar criterion for designing LIPSVM (classifier) and then seek two nonparallel planes to

respectively fit the two given classes by solving the two corresponding standard rather than generalized

eigenvalue problems in GEPSVM. Our experimental results on most public datasets used here demonstrate

that LIPSVM obtains statistically comparable testing accuracy to the foresaid classifiers. However, we also

notice that due to the limitation of the current algorithms in solving larger scale eigenvalue problem,

LIPSVM also inherits such a limitation. Our future work includes that how to go further for solving real

large classification problems unfitted in memory, for both linear and nonlinear LIPSVM. We also plan to

explore some heuristic rules to guide the parameter selection of KNN.

Acknowledgment

The corresponding author would like to thank Natural Science Foundations of China Grant No. 60773061

for partial support.

Reference

[1]. C.J.C. Burges. A tutorial on support vector machine for pattern recognition. Data Mining and Knowledge Discovery,

vol.2(2), 1-47, 1998.

[2]. B. Scholkopf, A. Smola, K.-R Muller. Nonlinear component analysis as a kernel eigenvalue problem. Neural computation,

1998, 10: 1299-1319

[3]. B. Scholkopf, P. Simard,

A.Smola, and V. Vapnik. Prior Knowledge in Support Vector Kernels. In M. Jordan, M.Kearns,

and S.Solla, editors, Advances in Neural Information Processing Systems 10, Cambridge, MA: MIT Press, 1998: 640-646

[4]. V.Blanz, B.Scholkopf, H.Bulthoff, C.Burges, V.Vapnik and T.Vetter. Comparison of view-based object recognition

algorithms using realistic 3d models. Artificial Neural Networks, Proceedings of the International Conference on Artificial

Neural Networks, (Eds.) C. von der Malsburg, W. von Seelen, J.-C. Vorbrüggen, B. Sendhoff. Springer Lecture Notes in

Computer Science, Bochum 1996 1112, 251-256. (Eds.) von der Malsburg, C., W. von Seelen, J. C. Vorbrüggen and B.

Sendhoff, Springer (1996)

[5]. M. Schmidt. Identifying speaker with support vector networks. In Interface ’96 proceedings, Sydney, 1996

[6]. E.Osuna,, R.Freund and F. Girosi. Training support vector machines: an application to face detection. In IEEE Conference

on Computer Vition and Pattern Recognition, 1997: 130-136

[7]. T. Joachims. Text categorization with support vector machines: learning with many. relevant features. In: Proc of the 10th

European Conf on Machine learning, 1999:137-142.

[8]. G. Fung and O. L. Mangasarian. Proximal Support Vector Machine Classifiers. Proc. Knowledge Discovery and Data

Mining (KDD), F. Provost and R. Srikant, eds., pp. 77-86, 2001.

[9]. O. L. Mangasarian and Edward W. Wild, Multisurface Proximal Support Vector Machine Classification via Generalized

Eigenvalues. IEEE Transaction on Pattern Analysis and Machine Intelligence (PAMI), 28(1): 69-74. 2006

[10]. T. M.Cover and P. E. Hart. Nearest neighbor pattern classification. IEEE Transactions on Information Theory, 1967,

13:21-27

[11]. Y. Wu, K. Ianakiev and V. Govindaraju. Improved k-nearset neighbor classification. Pattern Recognition 35: 2311-2318,

2002

[12]. S. Lafon, Y. Keller and R. R. Coifman. Data fusion and multicue data matching by diffusion Maps. IEEE Trans. On

Pattern Analysis and Machine Intelligence (PAMI) 2006, 28(11): 1784-1797.

[13]. S. Yan, D. Xu, B. Zhang and H.-J. Zhang, Graph Embedding: A General Framework for Dimensionality Reduction,

Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR2005).

[14]. S. Roweis, L. Saul, Nonlinear dimensionality reduction by locally linear embedding, Science 290 (2000): 2323-2326

[15]. J. B. Tenenbaum, V. de Silva and J. C. Langford, A global geometric framework for nonlinear dimensionality reduction,

Science 290 (2000):2319-2323

[16]. M. Nelkin, P. Niyogi, Laplacian eigenmaps and spectral techniques for embedding and clustering, Advances in Neural

Information Processing Systems 14 (NIPS 2001), pp: 585-591, MIT Press, Cambridge, 2002.

[17]. H.-T Chen, H.-W. Chang, T.-L.Liu, Local discriminant embedding and its variants, in: Proceedings of International

Conference on Computer Vision and Pattern Recognition, 2005

[18]. P. Mordohai and G. Medioni, Unsupervised dimensionality estimation and manifold learning in high-dimensional spaces

by tersor voting. 19th International Joint Conference on Artificial Intelligence (IJCAI) 2005:798-803

[19]. K.Q. Weinberger and L.K.Saul. Unsupervised learning of image manifolds by semidefine programming. In Proc. Int. Conf.

on Computer Vision and Pattern Recognition, Pages II: 988-995, 2004

[20]. H. Li, T. Jiang and K. Zhang. Efficient and robust feature extraction by maximum margin criterion. In: Proc Conf.

Advances in Neural Information Processing Systems. Cambrigde, MA:MIT Press, 2004.97-104

[21]. V. Marno and N.Theo. Minimum MSE estimation of a regression model with fixed effects from a series of cross-sections.

Journal of Econometrics, 1993, 59(1-2), 125-136

[22]. H. Zhang, W.Huang, Z.Huang and B.Zhang. A Kernel Autoassociator Approach to Pattern Classification. IEEE

Transaction on Systems, Man, and Cybernetics –Part B: CYBERNETICS, Vol.35, No.3, June 2005.

[23]. B. Scholkopf and A. J. Smola. Learning With Kernels, Cambridge, MA: MIT Press, 2002.

[24]. S. Mika. Kernel Fisher Discriminants. PhD thesis, Technischen Universität, Berlin, Germany, 2002

[25]. S. Mika, G. Rätsch, J. Weston, B. Schölkopf and K.-R. Müller. Fisher discriminant analysis with kernels. In Y.-H. Hu,

E.Wilson J.Larsen, and S. Douglas, editors, Neural Networks for Signal Processing IX, pp: 41-48. IEEE, 1999

[26]. S.Mike. A mathematical approach to kernel Fisher algorithm. In Todd K.Leen and Thomas G.Dietterich Völker Tresp,

editors, Advances in Neural Information Processing Systems 13, pages: 591-597, Cambridge, MA, 2001.MIT Press

[27]. M. Kuss, T. Graepel, The geometry of kernel canonical correlation analysis, Technical Report No.108, Max Planck

Institute for Biological Cybernetics, Tubingen, Germany, May 2003

[28]. B.Schlkopf, A.Smola and K.-R. Miller, Nonlinear component analysis as a kernel eigenvalue problem, Neural

Computation, vol. 10(5), 1998

[29]. B.Scholkopf, A.Smola, and K.-R.Muller, Kernel principal component analysis, in Advance in Kernel Methods, Support

Vector Learning, N.Scholkopf, C.J.C.Burges, and A. Smola, Eds. Combridge, MA: MIT Press, 1999

[30]. T.P. Centeno and N.D.Lawrence. Optimizing kernel parameters and regularization coefficients for non-linear discriminant

analysis, Journal of Machine Learning Research 7 (2006): 455-491

[31]. K. R. Müller , S. Mika, G. Rätsch, et al. An introduction to kernel-based learning algorithms. IEEE Transaction on Neural

Network, 2001, 12(2):181-202

[32]. M. Wang and S. Chen. Enhanced FMAM Based on Empirical Kernel Map, IEEE Trans. on Neural Networks, Vol. 16(3):

557-564.

[33]. P.Berkes. Handwritten digit recognition with nonlinear Fisher discriminant analysis, Proc. of ICANN Vol. 2, Springer,

LNCS 3696, 285-287

[34]. Liu CJ, Wechsler H. A shape- and texture-based enhanced Fisher classifier for face recognition. IEEE Transactions on

Image Processing, 2001,10(4):598~608

[35]. S. Yan, D. Xu, L. zhang, Q. Yang, X. Tang and H. Zhang. Multilinear discriminant analysis for face recognition, IEEE

Transactions on Image Processing (TIP), 2007, 16(1): 212-220

[36]. T.Li, S.Zhu, and M.Ogihara. Efficient multi-way text categorization via generalized discriminant analysis. In Proceedings

of the Twelfth International Conference on Information and Knowledge Management (CIKM’03): 317-324, 2003

[37]. R. Lin, M. Yang, S. E. Levinson. Object tracking using incremental fisher discriminant analysis. 17th International

Conference on Pattern Recognition (ICPR’04) (2) : 757-760, 2004

[38]. M.R. Guarracino, C. Cifarelli, O. Seref, P. M. Pardalos. A Parallel Classification Method for Genomic and Proteomic

Problems, 20th International Conference on Advanced Information Networking and Applications - Volume 2 (AINA'06),

2006, Pages 588-592.

[39]. M.R. Guarracino, C. Cifarelli, O. Seref, M. Pardalos. A Classification Method Based on Generalized Eigenvalue

Problems, Optimization Methods and Software, vol. 22, n. 1 Pages 73-81, 2007.

[40].C. Cifarelli, M.R. Guarracino, O. Seref, S. Cuciniello and P.M. Pardalos. Incremental classification with generalized

eigenvalues. Journal of Classification, 2007, 24:205-219

[41]. J. Liu, S. Chen, and X. Tan, A Study on three Linear Discriminant Analysis Based Methods in Small Sample Size Problem,

Pattern Recognition, 2008,41(1): 102-116

[42]. S. Chen and X. Yang. Alternative linear discriminant classifier. Pattern Recognition, 2004, 37(7): 1545-1547

[43]. P.M. Murphy and D.W. Aha. UCI Machine Learning Repository, 1992, www.ics.uci.edu/~mlearn/ML Repository.html.

[44]. T. M. Mitchell. Machine Learning. Boston: McGraw-Hill, 1997