Hashing


S.K.H. Kei Hau Secondary School 2003-2004

Hashing

S.K.H. Kei Hau Secondary School

Department of Computer

AL Computer Studies

Hashing

1. Linear search

2. Hashing and hashing functions

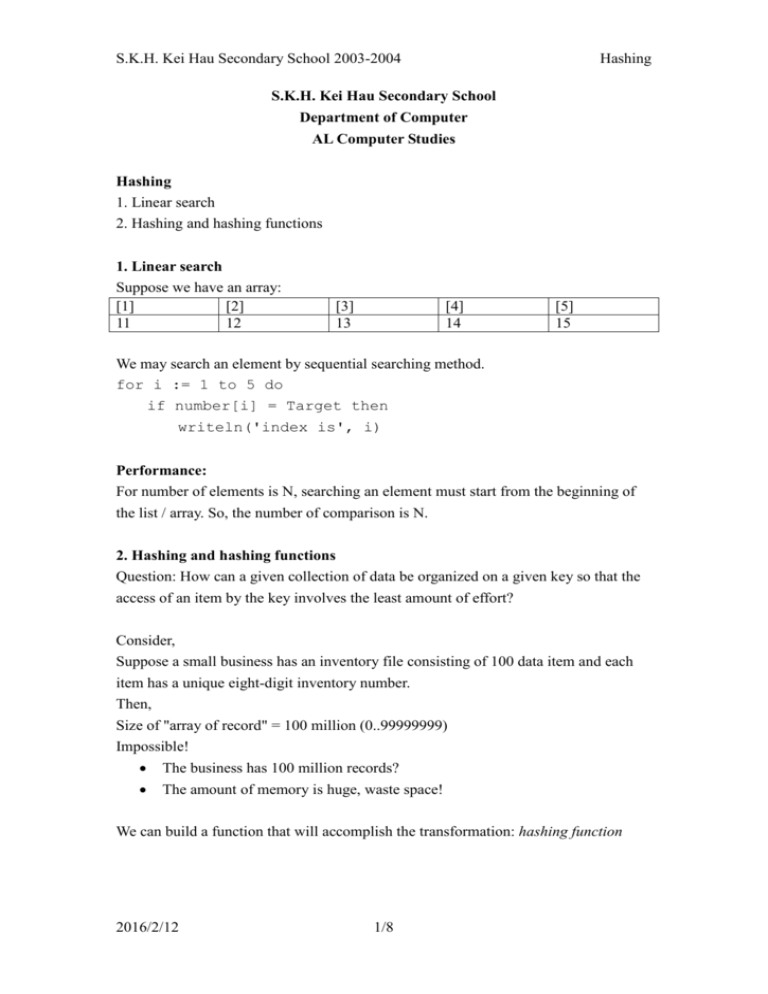

1. Linear search

Suppose we have an array:

Index:  [1]  [2]  [3]  [4]  [5]
Value:   11   12   13   14   15

We may search for an element using the sequential (linear) search method.

    for i := 1 to 5 do
      if number[i] = Target then
        writeln('index is ', i);

Performance:

For N elements, the search must start from the beginning of the list / array and examine the elements one by one. So, the number of comparisons is N in the worst case.
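The comparison count can be made visible with a small sketch. It is written in Python rather than Pascal, and the function name `linear_search` is only illustrative. Unlike the loop above, it stops at the first match, so it needs N comparisons only when the target is last or absent.

```python
def linear_search(numbers, target):
    """Return (index, comparisons); index is 1-based, or None if not found."""
    comparisons = 0
    for i, value in enumerate(numbers, start=1):
        comparisons += 1
        if value == target:
            return i, comparisons
    return None, comparisons


numbers = [11, 12, 13, 14, 15]
print(linear_search(numbers, 13))  # (3, 3)
print(linear_search(numbers, 99))  # (None, 5): all N = 5 elements compared
```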

2. Hashing and hashing functions

Question: How can a given collection of data be organized on a given key so that the

access of an item by the key involves the least amount of effort?

Consider,

Suppose a small business has an inventory file consisting of 100 data items, and each item has a unique eight-digit inventory number.

If the inventory number were used directly as the array index, then

Size of "array of record" = 100 million (0..99999999)

Impossible! The business has only 100 records, not 100 million, so almost all of that memory would be wasted.

Instead, we can build a function that transforms the eight-digit key into a much smaller array index: a hashing function.


Hashing function

The function that transforms the key (inventory number) into the corresponding array

index is called a hash function.

Example

H(k) = k DIV 10000

when k = 12347296, H(k) = 1234

H(k) = k MOD 10000

when k = 12347296, H(k) = 7296

However, a hash function may map several keys to the same index (a many-to-one situation). When that happens, a collision occurs.
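As a quick check of the two example functions, here is a minimal Python sketch. The names `h_div` and `h_mod` and the second key 55557296 are made up for illustration; the second key is chosen only because it shares its last four digits with the first.

```python
def h_div(k):
    return k // 10000  # Pascal: k DIV 10000 (keeps the first four digits)


def h_mod(k):
    return k % 10000   # Pascal: k MOD 10000 (keeps the last four digits)


print(h_div(12347296))  # 1234
print(h_mod(12347296))  # 7296

# A different key with the same last four digits lands on the same index.
print(h_mod(55557296))  # 7296, a collision with 12347296
```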

Resolving collisions by chaining

Records in the same slot are linked into a list.

Figure: table T with slots 0 to m-1. Slot i points to the linked list 49 -> 86 -> 52, because h(49) = h(86) = h(52) = i.
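Chaining can be sketched in a few lines of Python. This is only one possible layout: the class name `ChainedHashTable`, the hash h(k) = k mod m, the table size 37 and the record strings are all assumptions. The third key is 123 rather than 52 because it is picked to collide with 49 and 86 under this particular hash function.

```python
class ChainedHashTable:
    def __init__(self, m):
        self.m = m
        self.slots = [[] for _ in range(m)]  # one chain (list) per slot

    def _hash(self, key):
        return key % self.m  # assumed hash function

    def insert(self, key, record):
        chain = self.slots[self._hash(key)]
        for pair in chain:
            if pair[0] == key:      # key already present: overwrite
                pair[1] = record
                return
        chain.append([key, record])

    def search(self, key):
        for k, record in self.slots[self._hash(key)]:
            if k == key:
                return record
        return None                 # key is not in the table


table = ChainedHashTable(m=37)
for key in (49, 86, 123):           # all three hash to slot 12
    table.insert(key, f"record {key}")

print(table.slots[12])   # chain holding all three keys
print(table.search(86))  # record 86
print(table.search(12))  # None
```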

Analysis of chaining

We make the assumption of simple uniform hashing:

Each key $k \in K$ is equally likely to be hashed to any slot of table T, independent of where other keys are hashed.

Let n be the number of keys in the table.

Let m be the number of slots.

Define the load factor of T to be $\alpha = n/m$, which is the average number of keys per slot.

Search cost

Expected time to search for a record with a given key = $O(1 + \alpha)$:

1: apply the hash function and access the slot (nearly direct access).

$\alpha$: search the list.

Expected search time = $O(1)$ if $\alpha = O(1)$, or equivalently, if $n = O(m)$.
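To see that the load factor n/m really is the average chain length, the sketch below counts keys per slot. The function name `chain_lengths`, the hash h(k) = k mod m and the key set 0..999 are assumptions chosen to give a perfectly even spread; real key sets will be less regular.

```python
def chain_lengths(keys, m):
    """Number of keys that hash to each of the m slots (h(k) = k mod m)."""
    lengths = [0] * m
    for k in keys:
        lengths[k % m] += 1
    return lengths


n, m = 1000, 250
lengths = chain_lengths(range(n), m)

print(n / m)              # load factor: 4.0
print(sum(lengths) / m)   # average chain length: 4.0
print(max(lengths))       # longest chain: 4
```

An unsuccessful search here costs one hash computation plus a scan of about n/m = 4 entries, so the cost stays constant as long as m grows in step with n.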


Choosing a hash function

The assumption of simple uniform hashing is hard to guarantee, but several common

techniques tend to work well in practice as long as their deficiencies (不足) can be

avoided.

Desiderata:

A good hash function should distribute the keys uniformly into the slots of the

table.

Regularity in the key distribution should not affect this uniformity.

Quick to compute.

Note: a non-uniform distribution increases the chance of collisions.

1. Division method

Assume all keys are integers, and define

h(k) = k mod m.

For decimal numbers: if $m = 10^r$, the hash depends only on the last r digits of k, not on all of its digits.

Similarly, for binary numbers: if $m = 2^r$, the hash doesn't even depend on all the bits of k:

If k = 1011000111011010 and r = 6, then h(k) = 011010.

Pick m to be a prime not too close to a power of 2 or 10 and not otherwise used prominently in the computing environment.
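The binary example can be checked directly. In this Python sketch the second key `k2` and the prime 61 are assumptions added for contrast: `k2` differs from k only in its upper ten bits.

```python
k = 0b1011000111011010
r = 6
m = 2 ** r

print(format(k % m, "06b"))  # 011010: just the low 6 bits of k

# Flip the upper ten bits of k; the low 6 bits are unchanged.
k2 = k ^ (0b1111111111 << r)
print(k2 % m == k % m)       # True: with m = 2^r the high bits are ignored

# With a prime modulus the high bits do influence the result.
print(k2 % 61 == k % 61)     # False
```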

2. Folding method

Divide the key into sections and add them together.

h(k) = p + q + r, where p, q, r are the sections (groups of digits) of k

h(245690127) = 245 + 690 + 127 = 1062

The sections may also be combined by subtraction, multiplication or division.
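A minimal Python sketch of folding by addition follows. The function name `fold_hash` and the three-digit section length are assumptions taken from the example; in practice the sum would usually be reduced again (for example modulo the table size) to fit the table.

```python
def fold_hash(key, section_len=3):
    """Split the decimal digits of key into sections and add them."""
    digits = str(key)
    sections = [int(digits[i:i + section_len])
                for i in range(0, len(digits), section_len)]
    return sum(sections)


print(fold_hash(245690127))  # 245 + 690 + 127 = 1062
```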

3. Middle-Squaring

Take some digits from the middle of the number and square them
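The sketch below follows the description above literally: it takes the middle digits of the key and squares them. The function name, the eight-digit key width, the choice of four middle digits and the final reduction modulo a table size m are all assumptions. A common variant squares the whole key first and then takes the middle digits of the square.

```python
def middle_square_hash(key, m, width=8, middle=4):
    """Square the middle digits of key, then reduce to a slot in 0..m-1."""
    digits = str(key).zfill(width)          # pad short keys with zeros
    start = (len(digits) - middle) // 2
    mid = int(digits[start:start + middle])
    return (mid * mid) % m


# For key 12347296 the middle four digits are 3472.
print(middle_square_hash(12347296, m=101))
```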


4. Multiplication method (optional)

Assume that all keys are integers, $m = 2^r$, and our computer has w-bit words. Define

$$h(k) = (A \cdot k \bmod 2^w) \;\mathrm{rsh}\; (w - r)$$

where rsh is the "bit-wise right-shift" operator and A is an odd integer in the range $2^{w-1} < A < 2^w$.

Don't pick A too close to $2^w$.

Multiplication modulo $2^w$ is fast.

The rsh operator is fast.

Suppose that $m = 8 = 2^3$ and that our computer has w = 7-bit words:

           1011001 = A
         × 1101011 = k
    --------------
    10010100110011

The low w = 7 bits of the product are 0110011, and h(k) is their top r = 3 bits: 011.
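The worked example can be reproduced with a short Python sketch. The function name `mult_hash` is illustrative; the values of A, k, w and r are the ones used in the example.

```python
def mult_hash(k, A, w, r):
    """h(k) = (A * k mod 2^w) rsh (w - r)."""
    return ((A * k) % (1 << w)) >> (w - r)


A = 0b1011001   # 89
k = 0b1101011   # 107

print(format(A * k, "b"))                 # 10010100110011
print(format((A * k) % (1 << 7), "07b"))  # 0110011 (low w = 7 bits)
print(mult_hash(k, A, w=7, r=3))          # 3, i.e. binary 011
```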

Resolving collisions by open addressing

No storage is used outside of the hash table itself.

Insertion systematically probes the table until an empty slot is found.

The hash function depends on both the key and probe number.

Example of open addressing

Insertion

Insert key k = 496 into a table T (slots 0 to m-1) that already holds the keys 586, 133, 204 and 481:

0. Probe h(496,0): the slot is occupied (collision).

1. Probe h(496,1): the slot is occupied (collision).

2. Probe h(496,2): the slot is empty, so 496 is inserted there.

After the insertion, T holds 586, 133, 204, 496 and 481.

Searching for key k=496:

0. Probe h(496,0): the slot holds a different key.

1. Probe h(496,1): the slot holds a different key.

2. Probe h(496,2): the slot holds 496, so the search succeeds.

Note:

Search uses the same probe sequence, terminating successfully if it finds the key and

unsuccessfully if it encounters an empty slot.
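Insertion and search can be sketched together in Python. The class name `OpenAddressTable`, the table size 10 and the probe function (h(k, i) = (k mod m + i) mod m) are assumptions, so the slots differ from those in the figures; only the keys are the same.

```python
class OpenAddressTable:
    def __init__(self, m):
        self.m = m
        self.slots = [None] * m     # None marks an empty slot

    def probe(self, key, i):
        return (key % self.m + i) % self.m  # assumed probe sequence

    def insert(self, key):
        for i in range(self.m):
            j = self.probe(key, i)
            if self.slots[j] is None or self.slots[j] == key:
                self.slots[j] = key
                return j
        raise OverflowError("hash table is full")

    def search(self, key):
        for i in range(self.m):
            j = self.probe(key, i)
            if self.slots[j] is None:   # empty slot: key cannot be present
                return None
            if self.slots[j] == key:
                return j
        return None


table = OpenAddressTable(m=10)
for key in (586, 133, 204, 481):
    table.insert(key)

print(table.insert(496))  # 7: slot 6 is taken by 586, so the next slot is used
print(table.search(496))  # 7
print(table.search(777))  # None: the probe sequence reaches an empty slot
```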


Probing Strategies

1. Linear probing

Given an ordinary hash function h'(k), linear probing uses the hash function

$$h(k, i) = (h'(k) + i) \bmod m$$

This method, though simple, suffers from primary clustering, where long runs of

occupied slots build up, increasing the average search time. Moreover, the long runs

of occupied slots tend to get longer.
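Primary clustering shows up even in a tiny table. In this Python sketch the function names, the choice h'(k) = k mod m, the table size 10 and the keys are all assumptions; `insert` returns how many probes each insertion needed.

```python
def linear_probe(k, i, m):
    return (k % m + i) % m  # h(k, i) with the assumed h'(k) = k mod m


def insert(table, k):
    """Insert k with linear probing; return the number of probes used."""
    m = len(table)
    for i in range(m):
        j = linear_probe(k, i, m)
        if table[j] is None:
            table[j] = k
            return i + 1
    raise OverflowError("hash table is full")


table = [None] * 10
probes = [insert(table, k) for k in (10, 20, 30, 11, 12)]

print(probes)  # [1, 2, 3, 3, 3]
print(table)   # [10, 20, 30, 11, 12, None, None, None, None, None]
```

Keys 11 and 12 hash to slots 1 and 2, which are not their own collisions, yet each needs three probes because the run started by 10, 20 and 30 already covers those slots, and each insertion makes the run longer.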

2. Double hashing

Given two ordinary hash functions $h_1(k)$ and $h_2(k)$, double hashing uses the function

$$h(k, i) = (h_1(k) + i \cdot h_2(k)) \bmod m$$

This method generally produces excellent results, but h2(k) must be relatively prime

to m. One way is to make m a power of 2 and design h2(k) to produce only odd

numbers.
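The power-of-2 suggestion can be illustrated in Python. The particular choices h1(k) = k mod m and h2(k) = (k div m) OR 1 are assumptions made for this sketch; the only property relied on is that h2(k) is always odd, hence relatively prime to m = 8.

```python
def double_hash_probe(k, i, m):
    h1 = k % m
    h2 = (k // m) | 1   # setting the lowest bit forces an odd step size
    return (h1 + i * h2) % m


m = 8
sequence = [double_hash_probe(496, i, m) for i in range(m)]

print(sequence)                             # [0, 7, 6, 5, 4, 3, 2, 1]
print(sorted(sequence) == list(range(m)))   # True: every slot is visited once
```

Because the step size shares no factor with m, the probe sequence is a permutation of all m slots, so an insertion always finds an empty slot if one exists.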

Analysis of open addressing

We make the assumption of uniform hashing:

Each key is equally likely to have any one of the m! permutations as its probe

sequence.

Theorem:

Given an open-addressed hash table with load factor $\alpha = n/m < 1$, the expected number of probes in an unsuccessful search is at most $1/(1 - \alpha)$.

Proof of the theorem (optional)

At least one probe is always necessary

With probability n/m, the first probe hits an occupied slot, and a second probe

is necessary.

With probability (n-1) / (m-1), the second probe hits an occupied slot, and a

third is necessary.

With probability (n-2) / (m-2), the third probe hits an occupied slot, etc.

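Observe that

$$\frac{n-i}{m-i} < \frac{n}{m} = \alpha \quad \text{for } i = 1, 2, \ldots, n.$$

Therefore, the expected number of probes is

$$
\begin{aligned}
1 + \frac{n}{m}\left(1 + \frac{n-1}{m-1}\left(1 + \frac{n-2}{m-2}\left(\cdots\left(1 + \frac{1}{m-n+1}\right)\cdots\right)\right)\right)
&\le 1 + \alpha(1 + \alpha(1 + \alpha(\cdots(1 + \alpha)\cdots))) \\
&\le 1 + \alpha + \alpha^2 + \alpha^3 + \cdots \\
&= \sum_{i=0}^{\infty} \alpha^i \\
&= \frac{1}{1 - \alpha}.
\end{aligned}
$$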

Implications of the theorem

If $\alpha$ is constant, then accessing an open-addressed hash table takes constant time.

If the table is half full, then the expected number of probes is at most 1/(1-0.5) = 2.

If the table is 90% full, then the expected number of probes is at most 1/(1-0.9) = 10.
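The bound is easy to tabulate for other load factors. This is a small Python sketch; the function name `probe_bound` and the extra load factors 0.75 and 0.99 are illustrative additions.

```python
def probe_bound(alpha):
    """Upper bound 1 / (1 - alpha) on expected probes, for 0 <= alpha < 1."""
    if not 0 <= alpha < 1:
        raise ValueError("load factor must satisfy 0 <= alpha < 1")
    return 1 / (1 - alpha)


for alpha in (0.5, 0.75, 0.9, 0.99):
    print(f"{alpha:.2f} -> {probe_bound(alpha):.1f}")
# 0.50 -> 2.0
# 0.75 -> 4.0
# 0.90 -> 10.0
# 0.99 -> 100.0
```

The bound grows sharply as the table approaches full, which is why open-addressed tables are usually resized before the load factor gets close to 1.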

Reference

1. Guy J. Hale, Richard J. Easton, Applied Data Structures Using Pascal, D.C. Heath and Company, 1987.

2. Charles E. Leiserson, Notes for Introduction to Algorithms, MIT, 2001.
