Tute 2 Solutions

advertisement

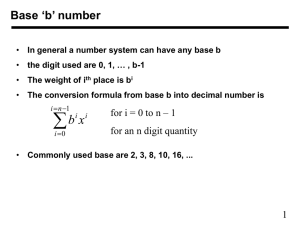

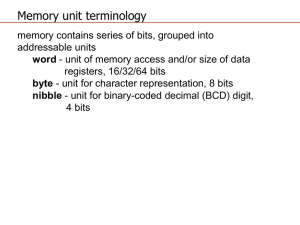

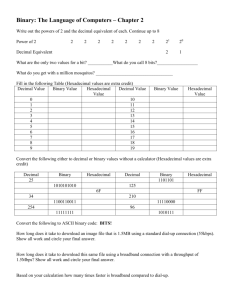

Solutions to selected problems from Tutorial 2.

2.1 a. Each digit position represents a power of 6 one higher than the position to its right. Therefore, starting from the right, the digits represent 1, 6, 36, 216, and 1296.
b. The first printing of the book had a typo: there cannot be a "7" in a base-6 number! (You may use this as a trick question if you wish. I have.) The correct value is 24531₆, whose decimal equivalent is 2 × 1296 + 4 × 216 + 5 × 36 + 3 × 6 + 1 = 3655.

2.2 In base 16, the digits from right to left have the place values 1, 16, 256, and 4096. In base 2 these are 2^0, 2^4, 2^8, and 2^12, so they correspond to binary digit positions 0, 4, 8, and 12.

2.3 a. 4E₁₆ = 4 × 16 + 14 = 78
b. 3D7₁₆ = 3 × 256 + 13 × 16 + 7 = 983
c. 3D70₁₆ = 16 × 983 = 15728. The same result is, of course, obtained from 3 × 4096 + 13 × 256 + 7 × 16.

2.4 The decimal range for an 18-bit word is 0 to 2^18 - 1, that is, 0 to 262143 (2^18 = 256K values).

2.5 The easiest way to solve this problem is to use the approximation 2^10 ≈ 1000, together with the rule 2^A × 2^B = 2^(A+B). From this, 1,000,000 ≈ 2^10 × 2^10 = 2^20, or 20 bits, and 4,000,000 ≈ 2^20 × 4 = 2^22, or 22 bits. More precisely, 2^21 = 2,097,152 < 3,175,000 < 2^22 = 4,194,304, so the representation of 3,175,000 requires 22 bits, or 3 bytes.

2.8 a & e. 101101101 (16D₁₆) + 10011011 (9B₁₆) = 1000001000 (208₁₆)
b & e. 110111111 (1BF₁₆) + 1 (1₁₆) = 111000000 (1C0₁₆)
c & e. 11010011 (D3₁₆) + 10001010 (8A₁₆) = 101011101 (15D₁₆)

2.11 The place values in base 8 are 1, 8, 64, 512, and 4096. Therefore, 6066 - 1 × 4096 = 1970; 1970 - 3 × 512 = 434; 434 - 6 × 64 = 50; 50 - 6 × 8 = 2. The solution is 13662₈.

3.2 a.
binary:      0101101 0110011 0101110 0110001 0110100 0110001 0110101
hexadecimal: 2D 33 2E 31 34 31 35
octal:       055 063 056 061 064 061 065
decimal:     45 51 46 49 52 49 53
b. hexadecimal: 4E F1 6B F2 F5 F0 4B F1

3.3 Converting the code to hexadecimal, the message reads 54 68 69 73 20 69 73 20 45 41 53 59 21. Reading from the table on page 65 of the textbook, the message is: This is EASY!
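
The place-value arithmetic in 2.1 through 2.11 can be checked mechanically. Below is a minimal Python sketch; the helper names `to_decimal` and `from_decimal` are hypothetical, chosen only for illustration. `to_decimal` evaluates a digit string with Horner's rule (multiply the running total by the base, then add the next digit), which gives the same result as summing digit × place value; `from_decimal` mirrors the subtraction ("fit") method used in 2.11, starting from the largest power of the base that fits and working down.

```python
SYMBOLS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def to_decimal(digits: str, base: int) -> int:
    """Evaluate a digit string in the given base (2 to 36)."""
    total = 0
    for ch in digits.upper():
        value = SYMBOLS.index(ch)  # raises ValueError for characters outside 0-9, A-Z
        if value >= base:
            # e.g. a "7" in a base-6 number, as in the 2.1 b typo
            raise ValueError(f"digit {ch!r} is not valid in base {base}")
        # Horner's rule: shift the running total one place left, then add the digit
        total = total * base + value
    return total


def from_decimal(n: int, base: int) -> str:
    """Convert a non-negative integer to the given base by the 'fit' method."""
    if n < 0:
        raise ValueError("n must be non-negative")
    # Find the largest power of the base that does not exceed n
    power = 1
    while power * base <= n:
        power *= base
    digits = []
    # For each place value from high to low, record how many times it fits
    while power >= 1:
        count, n = divmod(n, power)
        digits.append(SYMBOLS[count])
        power //= base
    return "".join(digits)


print(to_decimal("24531", 6))         # 2.1 b -> 3655
print(to_decimal("4E", 16))           # 2.3 a -> 78
print(to_decimal("3D70", 16))         # 2.3 c -> 15728
print(2**18 - 1)                      # 2.4  -> 262143
print((3_175_000).bit_length())       # 2.5  -> 22
print(from_decimal(to_decimal("101101101", 2) + to_decimal("10011011", 2), 2))  # 2.8 a -> 1000001000
print(from_decimal(6066, 8))          # 2.11 -> 13662
```

The repeated-division method from Chapter 2 would give the same digits; the subtraction form is used here only because it matches the working shown in 2.11.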
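
Problems 3.2 and 3.3 amount to reading each character code in several radices. The Python sketch below (the helper `code_columns` is a hypothetical name) takes the hexadecimal bytes listed in 3.2 a, prints the character string and its binary, octal, and decimal forms, and then decodes the 3.3 message. For 3.2 b it assumes Python's `cp037` codec, which is one common EBCDIC code page (US/Canada); other EBCDIC variants differ for some punctuation, so treat that line as an illustration rather than the textbook's table.

```python
def code_columns(data: bytes, width: int = 7) -> dict[str, list[str]]:
    """Show each byte as binary (width bits), octal, decimal, and hexadecimal."""
    return {
        "binary": [format(b, f"0{width}b") for b in data],
        "octal": [format(b, "03o") for b in data],
        "decimal": [str(b) for b in data],
        "hexadecimal": [format(b, "02X") for b in data],
    }


# 3.2 a: the 7-bit ASCII codes listed in hexadecimal
ascii_codes = bytes.fromhex("2D 33 2E 31 34 31 35")
print(ascii_codes.decode("ascii"))  # -3.1415
for name, column in code_columns(ascii_codes).items():
    print(f"{name:12} {' '.join(column)}")

# 3.2 b: the same idea with EBCDIC, assuming code page 037
ebcdic_codes = bytes.fromhex("4E F1 6B F2 F5 F0 4B F1")
print(ebcdic_codes.decode("cp037"))  # +1,250.1

# 3.3: decode the hexadecimal message as ASCII
message = bytes.fromhex("54 68 69 73 20 69 73 20 45 41 53 59 21")
print(message.decode("ascii"))  # This is EASY!
```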

3.4 Reading from the table in Figure 3.9 (or the card in Figure 3.8), the message reads MICKEY MOUSE "LOVES" MINNIE, 5000 KISSES

3.5 b. The code is self-delimiting: each combination of 1's and 0's is unique, so a sliding pattern matcher can identify each code. A dropped bit during transmission, however, can cause the receiver to "lose sync". Suppose the leading 0 of the character 01011 is dropped. If this character is followed by another character that starts with a 0, the receiver will read the remaining 1011 plus that 0 as the code 10110, and the error can propagate to the characters that follow. If a bit is flipped instead, two characters can be confused: for example, if the character code 01101 is received as 01100, it cannot be distinguished from a character actually sent as 01100. This error is self-correcting in the sense that the next character can still be identified correctly.

3.6 The answer depends, of course, on the system the student is using. Since most students work on ASCII-based systems, the expected integer values are ORD(A) = 65, ORD(B) = 66, and ORD(c) = 99, taken from the ASCII table. If the student switches to an EBCDIC-based system, the results change.

3.7 This is a programming problem. It is solved most easily with a lookup table. An algebraic approach is more difficult because the EBCDIC letters, unlike the ASCII letters, are not contiguous: A-I, J-R, and S-Z occupy three separate ranges of codes.

4.1 a. Since each octal digit represents 3 bits, a six-digit octal number represents an 18-bit number. Non-negative 18-bit binary numbers range from 0 to 011111111111111111, and the corresponding octal numbers range from 0 to 377777. Since each group of three bits represents a single octal digit, there is an exact one-to-one relationship between these values. Similarly, negative values range from 111111111111111111 to 100000000000000000, and negative octal values range from 777777 to 400000.
Again there is a direct correspondence between these values; therefore there is a one-to-one relationship for all possible numbers, and the equivalence is proven.
b, c. The largest positive integer is 377777₈, or 131071 decimal.
d. The most negative number is 400000₈, or -131072 decimal.

4.2 a. The decimal number 1987 can be converted to binary form using either of the methods shown in Chapter 2. Using the "fit" method, 1987 = 1024 + 512 + 256 + 128 + 64 + 2 + 1 = 11111000011. As a 16-bit number, the result is 0000011111000011.
b. The 2's complement representation of -1987 is found by inverting the previous result and adding 1 to the inverted value: 1111100000111100 + 1 = 1111100000111101.
c. The 4-digit hexadecimal equivalent of the answer in part (b) comes from dividing the binary value into groups of 4 bits: 1111100000111101 = F83D₁₆. The six-digit number is thus FFF83D₁₆. An alternative way to see this: the student can convert the positive value from part (a), 07C3₁₆, from 4-digit hex to 6-digit hex by adding two 0's at the left end, giving 0007C3₁₆. Since each hex 0 complements to F, the two additional F's at the left end of the negative value are apparent.

4.4 Converting 19575 to binary yields 100110001110111. Since the binary value requires 15 bits with the leftmost digit a 1, and a leading 1 would be read as a sign bit, the value cannot be represented in fewer than 16 bits. If the system doesn't check for overflow (some don't!), it will take the 2's complement to handle the minus sign. This gives 011001110001001 = +13193 as the final value. Note that the answer and the original value sum to 32768, which is 2^15. In other words, the answer has wrapped around as a result of the overflow. This is the situation one sees when the result of an integer arithmetic operation exceeds the integer size on a system that does not check for overflow.

4.5
    Value              1's complement      2's complement
a.  10000              1111111111101111    1111111111110000
b.  100111100001001    1011000011110110    1011000011110111
c.  0100111000100100   1011000111011011    1011000111011100

5.5 a. 110110.011011 = 1.10110011011 × 2^5. The representation is 0 10000101 11011001101100000000000
b. -1.1111001 is represented as 1 10000000 11111001000000000000000
c. -4F7F₁₆ = -100111101111111 = -1.00111101111111 × 2^14. The representation is 1 10001110 10011110111111100000000
d. 0.00000000111111 = 1.11111 × 2^-9. The representation is 0 01110111 11111100000000000000000
e. 0.1100 × 2^36 = 1.100 × 2^35. The representation is 0 10100011 11000000000000000000000
f. 0.1100 × 2^-36 = 1.100 × 2^-37. The representation is 0 01011011 11000000000000000000000

5.7 a. 171.625₁₀ = 10101011.101₂. The IEEE 754 format requires the number to be normalized as 1.0101011101 × 2^7. Using 8-bit excess-127 notation for the exponent (7 + 127 = 134) and not storing the leading 1 of the mantissa gives the result 0 10000110 01010111010000000000000. The number 343.250 is double 171.625, so the exponent increases by 1, giving 0 10000111 01010111010000000000000. The number 1716.25 is 10 × 171.625, which doesn't simplify the solution (which is the point, of course): 1716.25 = 11010110100.01 = 1.101011010001 × 2^10, and with 10 + 127 = 137 this gives the result 0 10001001 10101101000100000000000.
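
The complement arithmetic in 4.1 through 4.5 can be reproduced by masking an integer to a fixed word size, which is how a machine that ignores overflow behaves. A minimal Python sketch follows; `twos_complement`, `ones_complement`, and `signed_value` are hypothetical helper names, not library functions.

```python
def twos_complement(value: int, bits: int) -> str:
    """Bit pattern of value in a `bits`-wide 2's complement word.

    Values outside the representable range wrap around silently, as they
    do on hardware that does not check for overflow.
    """
    mask = (1 << bits) - 1
    return format(value & mask, f"0{bits}b")


def ones_complement(pattern: str) -> str:
    """Invert every bit of a binary string."""
    return "".join("1" if bit == "0" else "0" for bit in pattern)


def signed_value(pattern: str) -> int:
    """Interpret a binary string as a 2's complement integer."""
    raw = int(pattern, 2)
    # A leading 1 is the sign bit: subtract 2**width to get the negative value
    return raw - (1 << len(pattern)) if pattern[0] == "1" else raw


# 4.1: extremes of an 18-bit word, shown in octal (0o777777 masks 18 bits)
largest = signed_value("0" + "1" * 17)
smallest = signed_value("1" + "0" * 17)
print(largest, format(largest, "o"))                 # 131071 377777
print(smallest, format(smallest & 0o777777, "o"))    # -131072 400000

# 4.2: +1987 and -1987 in 16 bits, then -1987 as six hex digits (24 bits)
print(twos_complement(1987, 16))         # 0000011111000011
print(twos_complement(-1987, 16))        # 1111100000111101
print(format(-1987 & 0xFFFFFF, "06X"))   # FFF83D

# 4.4: -19575 forced into 15 bits wraps around to a positive value
print(signed_value(twos_complement(-19575, 15)))  # 13193

# 4.5 b: 1's and 2's complements of a 16-bit pattern
pattern_b = "0100111100001001"
print(ones_complement(pattern_b), twos_complement(-int(pattern_b, 2), 16))
```

Masking with `value & mask` relies on Python integers behaving as if they had infinitely many sign bits, so negative numbers come out already complemented.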
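
For 5.7, Python's standard `struct` module can pack a value as an IEEE 754 single-precision (binary32) number, which gives an independent check of a hand-derived bit pattern. The sketch below splits the 32 bits into the sign, excess-127 exponent, and fraction fields in the same layout used above; `ieee754_fields` and `unbiased_exponent` are hypothetical helper names. It is meant as a check for 5.7, which explicitly uses IEEE 754; the 5.5 answers keep the leading 1 of the mantissa and so follow a different layout.

```python
import math
import struct


def ieee754_fields(x: float) -> str:
    """Return 'sign exponent fraction' for x encoded as IEEE 754 binary32."""
    # Pack as a big-endian 4-byte float, then reread the same bytes as an unsigned int
    (raw,) = struct.unpack(">I", struct.pack(">f", x))
    bits = format(raw, "032b")
    return f"{bits[0]} {bits[1:9]} {bits[9:]}"


def unbiased_exponent(x: float) -> int:
    """Power of two n such that |x| = 1.f x 2**n (x nonzero and finite)."""
    # math.frexp returns a mantissa in [0.5, 1), so subtract 1 for the 1.f form
    return math.frexp(x)[1] - 1


for value in (171.625, 343.25, 1716.25):
    print(value, unbiased_exponent(value), ieee754_fields(value))
# 171.625 7 0 10000110 01010111010000000000000
# 343.25 8 0 10000111 01010111010000000000000
# 1716.25 10 0 10001001 10101101000100000000000
```

All three values are exactly representable in binary32, so no rounding enters the comparison.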