CLARIN_Standards_Speech


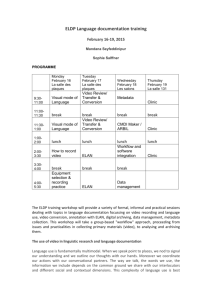

Multimedia Corpora (Media encoding and annotation)

(Thomas Schmidt, Dieter van Uytvanck, Kjell Elenius, Paul Trilsbeek, Iris Vogel)

1. General distinctions / terminology

A Speech Corpus (or spoken corpus) is a database of speech audio files with some kind of associated symbolic transcriptions. We start by describing the main types of speech corpora. They are used in a variety of domains including speech technology, dialogue and multiparty speech research, phonetics, sociolinguistics, sociology and the documentation of unwritten languages. Consequently, there is no consistent terminology, let alone a unique taxonomy, to classify and characterise different types of speech corpora. Instead of attempting to define such a terminology here, we will characterise different types of corpora by describing some of their prototypical exponents. The categories and their characterising features are meant neither to be mutually exclusive (in fact, many existing corpora are a mixture of the types), nor necessarily to cover the whole spectrum of speech corpora.

A Phonetic Speech Corpus is recorded with the principal aim of carrying out research into the phonetics, phonology and prosody of language. A typical phonetic corpus contains recordings of read or prompted speech. The utterances are often syllables, words or sentences that are phonetically transcribed, sometimes augmented with prosodic labels. The first systematic speech recordings were made on analogue tape for basic phonetic analysis. Analogue tape recorders remained in use up to the 1990s, but recordings are nowadays always made digitally, which naturally facilitates their handling by computers. As an example, EUROM1, a European multilingual speech corpus, was recorded on tape at the beginning of the 1990s and later converted to digital form. TIMIT, an Acoustic-Phonetic Continuous Speech Corpus of American English, is a prototypical phonetic corpus.
Monologues and dialogues may also be used for phonetic research. In the Swedish SweDia 2000 project, more than 100 persons were recorded for dialect research. The informants were encouraged to speak in monologues but also read word lists. Recordings of endangered languages, or of languages that have no writing system, may also be seen as phonetic corpora. The UCLA Phonetics Lab Language Archive, containing more than 200 languages, is such a corpus.

A Speech Technology Corpus is recorded with the aim of building, training or evaluating speech technology applications such as speech recognizers, text-to-speech systems and dialogue systems. It typically contains recordings of read or prompted speech, by one speaker in a studio for text-to-speech systems, or by hundreds of speakers or more for speech recognition systems. The latter are often recorded in an office setting or over mobile and/or fixed telephones. They are usually balanced over age and gender. The SpeechDat project, with up to 5000 speakers per language recorded over fixed telephone networks, produced prototypical speech recognition databases, as did the Speecon project, in which the recordings were made on location with both high-quality and simple hands-free microphones. In Speecon the environment was varied from office to noisier backgrounds such as vehicles and public locations. These recordings are usually transcribed in standard orthography, possibly with labels for various acoustic noises and disturbances as well as truncated utterances. Dialogue systems require recordings of spontaneous speech (see below) in order to be able to handle communication with people in real interactions.

Recordings of broadcast news are also frequently used for speech recognition research and are relatively inexpensive to record. Besides containing spontaneous speech, they pose further challenges such as speaker change and non-speech events like music and jingles.
The 1996 English Broadcast News Speech corpus is an example of these.

A Spontaneous Speech Corpus is aimed at exploring language as used in spontaneous, spoken, everyday interaction. It contains recordings of authentic dialogues or multi-party speech. It is usually transcribed orthographically, with additional marking of characteristics of spontaneous speech, e.g. pauses, reductions, mispronunciations, corrections, filled pauses and laughter. A prototypical corpus is the Santa Barbara Corpus of Spoken American English, based on a large body of recordings of naturally occurring spoken interaction from all over the United States. Map task corpora, in which the subjects discuss an optimal route based on somewhat diverging maps, have frequently been used for discourse analysis, e.g. the HCRC Map Task Corpus. The ISL Meeting Corpus consists of 18 meetings, with an average of 5.1 participants per meeting. Spontaneous speech corpora may be used for many different purposes: the development of dialogue systems, the study of linguistic phenomena such as turn taking, and sociological research. In Europe the AMI Meeting Corpus has been an important source for research on multi-party interaction.

An Expressive Speech Corpus is recorded in order to capture how emotions affect the acoustic speech signal. There are in principle three different ways of recording these:

- Acted emotions: a person, usually an actor/actress, records utterances with different emotions, generally selected from the emotions whose facial expressions the psychologist Paul Ekman described as universal across different cultures, namely: anger, disgust, fear, joy, sadness, and surprise. An exponent is the Berlin Database of Emotional Speech.
- Induced emotions, e.g. a procedure in which the subjects watch a film intended to evoke specific emotions, or the Velten technique, where the subjects read emotional scenarios or emotionally loaded sentences to "get into" the wanted mood.
- “Real” emotions from recorded spontaneous speech.
A difficulty with these is their labeling, since the actual emotion of the speaker is almost impossible to know with certainty. “Real” emotions may also be a blend of different prototypical emotions. Instances of emotions are furthermore rare in natural interactions. Typical corpora with spontaneous emotions are recorded at call centers: the French CEMO corpus contains real agent-client recordings from a medical emergency call center, and the French EmoVox Corpus was collected from an EDF (French electricity utility) call center.

1.2. Speech annotation

A speech corpus contains recorded speech and associated transcriptions of the recordings. These generally include orthographic transcriptions of words, but may also include a variety of information at different levels regarding phonemes, prosody, emotions, parts-of-speech, dialogue events, noises, speaker change and so on. The levels may be synchronized or not.

From here on I have copied Thomas Schmidt’s paper except for section 2. Audio encoding and section 3.1.13 Wavesurfer //Kjell

1.3. Data models/file formats vs. Transcription systems/conventions

Annotating an audio or video file means systematically reducing the continuous information contained in it to discrete units suitable for analysis. In order for this to work, there have to be rules which tell an annotator which of the observed phenomena to describe (and which to ignore) and how to describe them. Rather than providing such concrete rules, however, most data models and file formats for multimedia corpora remain on a more abstract level. They only furnish a general structure in which annotations can be organised (e.g. as labels with start and end points, organised into tiers which are assigned to a speaker) without specifying or requiring a specific semantics for these annotations. These specific semantics are therefore typically defined not in a file format or data model specification, but in a transcription convention or transcription system.
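The division of labour described above can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the names `Annotation`, `Tier`, `check_labels` and the toy rule set `TOY_CONVENTION` are invented for illustration and do not correspond to any of the formats or conventions discussed in this chapter. The data model only fixes the structure (labels with start and end points, grouped into tiers assigned to a speaker), while a separate convention decides which labels are admissible.

```python
import re
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Annotation:
    """A label attached to a stretch of the recording (offsets in seconds)."""
    start: float
    end: float
    label: str


@dataclass
class Tier:
    """A sequence of annotations assigned to one speaker and one category."""
    speaker: str
    category: str
    annotations: list = field(default_factory=list)

    def add(self, start, end, label):
        # The data model checks structure only, never the label content.
        if end <= start:
            raise ValueError("end must be later than start")
        self.annotations.append(Annotation(start, end, label))


# A made-up transcription convention: one rule per tier category.
TOY_CONVENTION = {
    # lower-case words, or a timed pause such as (0.5)
    "verbal": re.compile(r"[a-z' ]+|\(\d+\.\d\)"),
    "nonverbal": re.compile(r"laughs|coughs|nods"),
}


def check_labels(tier, convention):
    """Return the labels of a tier that violate the given convention."""
    pattern = convention[tier.category]
    return [a.label for a in tier.annotations if not pattern.fullmatch(a.label)]


tier = Tier(speaker="SPK0", category="verbal")
tier.add(0.0, 1.2, "so what do you think")
tier.add(1.2, 1.7, "(0.5)")
tier.add(1.7, 2.4, "Well, I DON'T know")

violations = check_labels(tier, TOY_CONVENTION)
print(violations)
```

All three annotations are structurally valid for the data model; only the convention check flags the third label. Swapping in a different convention would change which labels pass without touching the data model at all.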
Taking the annotation graph framework as an example, one could say that the data model specifies that annotations are typed edges of a directed acyclic graph, and a transcription convention specifies the possible types and the rules for labelling the edges. Typically, file formats and transcription systems thus complement each other. Obviously, both types of specification are needed for multimedia corpora, and both can profit from standardisation. We will treat data models and file formats in sections 3.1 to 3.3 and transcription conventions/systems in section 3.4. Section 3.5 concerns itself with some widely used combinations of formats and conventions.

1.4. Transcription vs. Annotation/Coding vs. Metadata

So far, we have used the term annotation in its broadest sense, as, for example, defined by Bird/Liberman (2001: 25f):

“[We think] of ‘annotation’ as the provision of any symbolic description of particular portions of a pre-existing linguistic object”

In that sense, any type of textual description of an aspect of an audio file can be called an annotation. However, there are also good reasons to distinguish at least two separate types of processes in the creation of speech corpora. MacWhinney (2000: 13) refers to them as transcription and coding:

“It is important to recognize the difference between transcription and coding. Transcription focuses on the production of a written record that can lead us to understand, albeit only vaguely, the flow of the original interaction. Transcription must be done directly off an audiotape or, preferably, a videotape. Coding, on the other hand, is the process of recognizing, analyzing, and taking note of phenomena in transcribed speech. Coding can often be done by referring only to a written transcript.”

Clear as this distinction may seem in theory, it can be hard to draw in practice.
Still, we think that it is important to be aware of the fact that media annotations (in the broad sense of the word) are often the result of two qualitatively different processes: transcription on the one hand, and annotation (in the narrower sense) or coding on the other hand. Since the latter process is less specific to multimedia corpora (for instance, the lemmatisation of an orthographic spoken language transcription can be done more or less with the same methods and formats as the lemmatisation of a written language corpus), we will focus on standards for the former process in section 3 of this chapter.

For similar reasons, we will not go into detail about metadata for multimedia corpora. Some of the formats covered here (e.g. EXMARaLDA) contain a section for metadata about interactions, speakers and recordings, while others (e.g. Praat) do not. Where it exists, this kind of information is clearly separated from the actual annotation data (i.e. data which refers directly to the event recorded rather than to the circumstances in which it occurred and was documented), so we think it is safe to simply refer the reader to the relevant CLARIN documents on metadata standards.

2. Audio encoding

2.1. Uncompressed: WAV, AIFF, AU or raw header-less PCM, NIST Sphere

2.2. Formats with lossless compression: FLAC, Monkey's Audio (filename extension APE), WavPack (filename extension WV), Shorten, TTA, ATRAC Advanced Lossless, Apple Lossless, MPEG-4 SLS, MPEG-4 ALS, MPEG-4 DST, Windows Media Audio Lossless (WMA Lossless).

2.3. Formats with lossy compression: MP3, Ogg Vorbis, WMA, Musepack, AAC, ATRAC

3. Speech annotation

3.1. Tools and tool formats

3.1.1.
ANVIL (Annotation of Video and Language Data)

Developer: Michael Kipp, DFKI Saarbrücken, Germany

URL: http://www.anvil-software.de/

File format documentation: Example files on the website; file formats illustrated and explained in the user manual of the software

ANVIL was originally developed for multimodal corpora, but is now also used for other types of multimedia corpora. ANVIL defines two file formats, one for specification files and one for annotation files. A complete ANVIL data set therefore consists of two files (typically, though, one and the same specification file will be used with several annotation files in a corpus).

The specification file is an XML file telling the application about the annotation scheme, i.e. it defines tracks, attributes and values to be used for annotation. In a way, the specification file is thus a formal definition of the transcription system in the sense defined above.

The annotation file is an XML file storing the actual annotation. The annotation data consists of a number of annotation elements which point into the media file via a start and an end offset and which contain one or several feature-value pairs with the actual annotation(s). Individual annotation elements are organised into a number of tracks. Tracks are assigned a name and one of a set of predefined types (primary, singleton, span).

ANVIL’s data model can be viewed as a special type of an annotation graph. It is largely similar to the data models underlying ELAN, EXMARaLDA, FOLKER, Praat and TASX.

3.1.2.
CLAN (Computerized Language Analysis) / CHAT (Codes for the Human Analysis of Transcripts) / Talkbank XML

Developers: Brian MacWhinney, Leonid Spektor, Franklin Chen, Carnegie Mellon University, Pittsburgh

URL: http://childes.psy.cmu.edu/clan/

File format documentation: CHAT file format documented in the user manual of the software; Talkbank XML format documented at http://talkbank.org/software/; XML Schema for Talkbank available from http://talkbank.org/software/.

The tool CLAN and the CHAT format which it reads and writes were originally developed for transcribing and analyzing child language. CHAT files are plain text files (various encodings can be used, UTF-8 among them) in which special conventions are used to mark up structural elements such as speakers, tier types, etc. Besides defining formal properties of files, CHAT also comprises instructions and conventions for transcription and coding; it is thus a file format as well as a transcription convention in the sense defined above.

The CLAN tool has functionality for checking the correctness of files with respect to the CHAT specification. This functionality is comparable to checking the well-formedness of an XML file and validating it against a DTD or schema. However, in contrast to XML technology, the functionality resides in software code alone, i.e. there is no explicit formal definition of correctness and no explicit data model (comparable to a DOM for XML files) for CHAT files. CHAT files which pass the correctness check can be transformed to the Talkbank XML format using a tool called chat2xml (available from http://talkbank.org/software/chat2xml.html).

There is a variant of CHAT which is optimised for conversation analysis style transcripts (rather than child language transcripts). The CLAN tool has a special mode for operating on this variant.

3.1.3.
ELAN (EUDICO Linguistic Annotator)

Developer: Han Sloetjes, MPI for Psycholinguistics, Nijmegen

URL: http://www.lat-mpi.eu/tools/elan/

File format documentation:

3.1.4. EXMARaLDA (Extensible Markup Language for Discourse Annotation)

Developers: Thomas Schmidt, Kai Wörner, SFB Multilingualism, Hamburg

URL: http://www.exmaralda.org

File format documentation: Example corpus on the tool’s website; DTDs for file formats at http://www.exmaralda.org/downloads.html#dtd; data model and format motivated and explained in [Schmidt 2005a] and [Schmidt 2005b].

EXMARaLDA’s core area of application is different types of spoken language corpora (for conversation and discourse analysis, for language acquisition research, for dialectology), but the system is also used for phonetic and multimodal corpora (and for the annotation of written language).

EXMARaLDA defines three inter-related file formats: Basic-Transcriptions, Segmented-Transcriptions and List-Transcriptions. Only the first two of these are relevant for interoperability issues.

A Basic-Transcription is an annotation graph with a single, fully ordered timeline and a partition of annotation labels into a set of tiers (aka the “Single timeline multiple tiers” data model: STMT). It is suitable to represent the temporal structure of transcribed events, as well as their assignment to speakers and to different levels of description (e.g. verbal vs. non-verbal).

A Segmented-Transcription is an annotation graph with a potentially bifurcating timeline in which the temporal order of some nodes may remain unspecified. It is derived automatically from a Basic-Transcription and adds to it an explicit representation of the linguistic structure of annotations, i.e. it segments temporally motivated annotation labels into units like utterances, words, pauses etc.

EXMARaLDA’s data model can be viewed as a special type of an annotation graph.
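The “single timeline, multiple tiers” idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names `Event`, `Tier` and `BasicTranscription` and the helper `overlaps` are hypothetical, and the sketch does not reproduce EXMARaLDA’s actual XML format or API. Events do not carry times themselves; they refer to points on one shared, fully ordered timeline, so temporal relations between tiers (such as overlapping speech) follow from comparing timeline indices.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    start: int   # index of the start point on the shared timeline
    end: int     # index of the end point on the shared timeline
    label: str


@dataclass
class Tier:
    speaker: str
    category: str            # e.g. "verbal" or "nonverbal"
    events: list = field(default_factory=list)


@dataclass
class BasicTranscription:
    timeline: list            # time offsets in seconds, strictly increasing
    tiers: list = field(default_factory=list)

    def __post_init__(self):
        # "Fully ordered": every timeline point lies after its predecessor.
        if any(b <= a for a, b in zip(self.timeline, self.timeline[1:])):
            raise ValueError("timeline must be strictly increasing")

    def add_event(self, tier, start, end, label):
        # Events may only refer to existing timeline points.
        if not 0 <= start < end < len(self.timeline):
            raise ValueError("event must span existing timeline points")
        event = Event(start, end, label)
        tier.events.append(event)
        return event


def overlaps(a, b):
    """Two events overlap if their timeline intervals intersect."""
    return a.start < b.end and b.start < a.end


# Hypothetical mini-transcript with two speakers.
spk_a = Tier("A", "verbal")
spk_b = Tier("B", "verbal")
spk_a_nv = Tier("A", "nonverbal")
bt = BasicTranscription(timeline=[0.0, 1.1, 1.6, 2.3],
                        tiers=[spk_a, spk_b, spk_a_nv])

e1 = bt.add_event(spk_a, 0, 2, "okay let's start")
e2 = bt.add_event(spk_b, 1, 3, "yeah fine")
e3 = bt.add_event(spk_a_nv, 2, 3, "nods")

print(overlaps(e1, e2))  # A and B speak simultaneously between points 1 and 2
print(overlaps(e1, e3))  # the nod begins exactly where A's utterance ends
```

Because all tiers share one timeline, adding a tier for a further level of description never requires touching the existing events.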
This data model is largely similar to the data models underlying ANVIL, ELAN, FOLKER, Praat and TASX.

3.1.5. Praat

Developers: Paul Boersma/David Weenink

URL: http://www.fon.hum.uva.nl/praat/

3.1.6. Transcriber

Developers: Karim Boudahmane, Mathieu Manta, Fabien Antoine, Sylvain Galliano, Claude Barras

URL: http://trans.sourceforge.net/en/presentation.php

3.1.7. FOLKER (FOLK Editor)

Developers: Thomas Schmidt, Wilfried Schütte, Martin Hartung

URL: http://agd.ids-mannheim.de/html/folker.shtml

File format documentation: Example files, XML Schema and (German) documentation of the data model and format on the tool’s website

3.1.8. EMU Speech Database System

URL: http://emu.sourceforge.net/

3.1.9. Phon

Developers: Greg Hedlund, Yvan Rose

URL: http://childes.psy.cmu.edu/phon/

3.1.9. XTrans, MacVissta (http://sourceforge.net/projects/macvissta/)

3.1.10. TASX Annotator, WinPitch, Annotation Graph Toolkit

Development discontinued.

3.1.11. Transana, F4, SACODEYL Transcriptor

No structured data, RTF

3.1.12. Multitool, HIAT-DOS, syncWriter, MediaTagger

“Deprecated”

3.1.13 Wavesurfer

Developers: Kåre Sjölander and Jonas Beskow, KTH

URL: http://www.speech.kth.se/wavesurfer/

3.2. Other (“generic”) formats

3.2.1. TEI transcriptions of speech

3.2.2. Annotation Graphs / Atlas Interchange Format / Multimodal Exchange Format

3.2.3. BAS Partitur Format

3.3. Interoperability of tools and formats

| Tool | Imports | Exports |
|---|---|---|
| ANVIL | ELAN, Praat | none |
| CLAN | ELAN, Praat | ELAN, EXMARaLDA, Praat |
| ELAN | CHAT, Praat, Transcriber | CHAT, Praat |
| EXMARaLDA | CHAT, ELAN, FOLKER, Praat, Transcriber, TASX, WinPitch, HIAT-DOS, syncWriter, TEI, AIF | CHAT, ELAN, FOLKER, Praat, Transcriber, TASX, TEI, AIF |
| FOLKER | EXMARaLDA | ELAN, EXMARaLDA, TEI |
| Praat | none | none |

[Table: import/export matrix (+/-) between ANVIL, CHAT, ELAN, EXMARaLDA, FOLKER and Praat; cell values not recoverable]

3.4.
Transcription conventions / Transcription systems

3.4.1. Systems for phonetic transcription:

- IPA
- SAMPA, X-SAMPA
- ToBI

3.4.2. Systems for orthographic transcription

- CA
- CHAT
- DT
- GAT
- GTS/MSO6
- HIAT
- ICOR

3.5. Commonly used combinations of formats and conventions

- CLAN + CHAT
- CLAN + CA
- EXMARaLDA + HIAT
- FOLKER + GAT
- (ICOR + TEI?)

4. Summary / Recommendations

4.1. Media Encoding

4.2. Annotation

Criteria:

- Format is XML based, supports Unicode
- Format is based on / can be related to a format-independent data model
- Tool is stable, development is active
- Tool runs on different platforms
- Tool format can be imported by other tools or exported to other tool formats

Recommendable without restrictions: ANVIL, ELAN, EXMARaLDA, FOLKER

Recommendable with some restrictions: CHAT (no data model), Praat (not XML), Transcriber (development not active), Phon (early development stage)

Recommendable only with severe restrictions: TASX (development abandoned, tool not officially available anymore), AG toolkit (development abandoned at early stage), WinPitch (development status unclear), MacVissta (development abandoned at early stage, Macintosh only)

Not recommendable: Transana (no suitable file format), F4 (ditto)

Obsolete: syncWriter, HIAT-DOS, MediaTagger

- Use tool-internal mechanisms for additional validation
- Use tested/documented combinations of tool/conventions

http://www.ldc.upenn.edu/Catalog/CatalogEntry.jsp?catalogId=LDC93S1

References

Wilting, J., Krahmer, E., Swerts, M., 2006. Real vs. acted emotional speech. In: Proceedings of the Ninth International Conference on Spoken Language Processing (Interspeech 2006 - ICSLP), Pittsburgh, PA, USA, pp. 805-808.