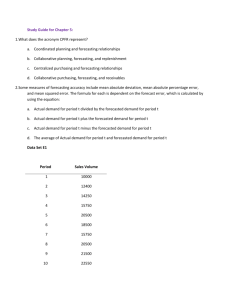

time-series forecasting with feed-forward neural
