Comparison of Feature Reduction Techniques for Use in

95/100

Comparison of Feature Reduction Techniques for Use in

Invasive Species Discrimination

Terrance West and Wesley Johnson, Student Member IEEE

Abstract

For this study, three different feature reduction techniques are compared. The three techniques are the spectral band grouping (SBG), linear discriminant analysis

(LDA), and sequential parametric projection pursuits (SPPP) methods. The different methods are used to reduce the number of features of a given Cogongrass-Johnsongrass dataset. The reduced features are then subjected to nearest neighbor (NN) classification and jackknife (JK) testing. Results show that the SBG method performs the best, followed the SSP and LDA methods, respectively.

Introduction

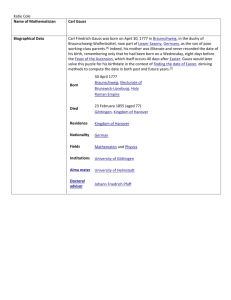

Invasive species can be defined as organisms that, primarily introduced by humans, are non-native to a region and whose introduction has or may have adverse effects on the ecosystem of that region. A highly problematic terrestrial invasive species in the southeastern United States is Cogongrass ( Imperata cylindrica ), a weed that is known to facilitate wildfires and overtake native vegetation, such as the structurally similar Johnsongrass ( Sorghum halepense ), effectively causing high damage to other organisms’ habitats [1]. As Cogongrass is a very difficult pest to control, an increasingly important issue has been to monitor the growth and migration of this invasive species using remotely sensed data. A picture comparing Cogongrass to Johnsongrass is shown in Figure 1 [1-3].

Figure 1 Visual comparison of (a) Cogongrass and (b) Johnsongrass

When dealing with remotely sensed data, there is often a need to reduce the observed data using dimensionality reduction methods. The reasons for needing this feature reduction include reduced time consumption and cost effectiveness. However, it is impossible to know which methods perform better, that is, yield more accurate results, until a side-by-side comparison has been made. The purpose of this project was to design a classification system to conduct and analyze such a comparison. This paper will discuss the background behind several feature reduction methods, followed by methods used in this investigation and the results obtained. Appropriate conclusions will then be drawn and possibilities for future work will be determined.

Background

Data or feature reduction is an integral part of most classification systems. It is important that the right technique be applied for a given dataset, classifier, or testing method, or poor results may be obtained. The following is a discussion of various feature reduction techniques and how they have been used in the area of remote sensing.

I. Averaging

The amount of data recorded by a system can be reduced by averaging certain portions of the data. This is akin to lowpass filtering, or smoothing, of the data. Backer et al . used this idea of averaging in their study of hyperspectral data classification. After selecting the best features of the given data, the selected features were averaged spectrally with their respective local features in order to optimally reduce data dimensionality, thereby achieving the best possible classification accuracies [4]. A similar technique was used by Venkataraman, as windows of spectral bands were averaged to create an optimal dataset for classification [5]. These techniques are similar to the Savistky-Golay method, which smooths datasets by fitting polynomial functions of varying degrees to those datasets [6]. In radar, Monakov et al . used spatial averaging to design a practical polarimetric scatterometer system [7].

II. Wavelets

Wavelets are really a collection of ideas rather than a single technique. Their roots can be found in mathematics, civil engineering, electrical engineering, computer science, and physics. They get their name from the fact that they are “small waves.” In the same way that Fourier analysis can be used to reconstruct signals from many sine waves, wavelet analysis can be used to reconstruct signals from many “prototype” waves, where the “prototype” is a wavelet function. For example, the wavelet function could be a windowed square wave or a windowed sine wave. Wavelets are a powerful signal processing tool because they allow for multiresolution analysis [8].

In the area of remote sensing, wavelets have been used to analyze hyperspectral reflectance, extract features, and provide feature dimensionality reduction using subsets or combinations of wavelet coefficients. Wavelets have also been used for texture analysis in the spatial domain [9-12].

III. Principal Component Analysis

Component analysis is concerned with finding the direction(s) in a feature space that give(s) lower-dimensional representations for given data. Using principal component analysis (PCA), these directions are the eigenvectors corresponding to the largest eigenvalues of the covariance matrix for a given data set. The resulting coefficients, or principal components, are useful for representing data, and have been widely used for data compression and feature extraction in remotely sensed data [13-16].

While PCA is a useful method for representing data, and an optimal one for data compression in the sense of improved mean square error (MSE), it may or may not be the best choice when attempting to extract features for the purpose of discriminating between classes [13]. Malhi et al . developed a successful PCA-driven feature selection scheme for use in the supervised and unsupervised classifications of possibly defective machine bearings [17]. Stamkopoulos et al . used a nonlinear PCA method to extract features from patient electrocardiograms for improved classification accuracies of ischemic cardiac beats [18]. Alternately, Cheriyadat and Bruce found that PCA was not necessarily an appropriate method for feature extraction when using hyperspectral data for target detection, since PCA is an unsupervised approach that is based on the covariance matrix of the entire given dataset [19].

IV. Linear Discriminant Analysis

Fisher's linear discriminant analysis (LDA) is a method that has been widely employed in the area of feature extraction and optimization [20-23]. LDA determines the best direction along which data can be projected in terms of class separation. It gives a linear function that maximizes the ratio of interclass variance to intraclass variance. In other words, it helps to separate given classes from each other and to compact the data samples of each individual class into tighter clusters. The overall result is a greatly reduced feature space dimensionality with the best possible class separations that will aid in class discrimination [13]. However, just because LDA is specifically aimed at reducing feature dimensionality for use in discrimination does not mean that is the best method for every situation. For example, if the probability density function of one class is contained within the probability density function of another class, no amount of linear discriminant analysis will result in adequate classification accuracies [13]. In these situations, a non-linear discriminant analysis method is required.

V. Optimal Data Grouping

When dealing with large data sets, it is sometimes a good approach to group the data. This grouping provides for data reduction, and, when it is done optimally, it can achieve high classification accuracies. One such optimal data grouping method is the spectral band grouping method, or, the best bands method [4].

The idea behind best bands is to find the best possible combination of features, in this case bands, that gives the maximum area, Az , underneath the receiver operating characteristic (ROC) curve. The best band combinations are found by an iterative operation. The first two bands are combined, and ROC analysis is performed. This is repeated until all possible combinations of two bands are analyzed, and the combination

with the best Az value is kept. Next, a third band is combined, and ROC analysis is performed in order to determine that band’s contribution. If the added band gives a better

Az value, then it is kept. Otherwise it is discarded from the group. This process of keeping or discarding bands leads to the best band combination that gives the best, or highest, Az value. This means that the resulting combination of features, or bands, is the best for use in discrimination between two classes [4-5].

The best bands method has been applied in several applications. For example,

Serpico et al . used the best bands method to optimally group hyperspectral signature bands to aid in optimizing the classification accuracies associated with specific image data, such as Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) data [24].

Mathur also used the best bands method for using hyperspectral signatures to detect

Cogongrass from other grasses [25]. Similarly, Chang et al . developed a band selection technique based on a combination of the best bands method with a band decorrelation method for use in hyperspectral image classification [16].

VI. Projection Pursuits

The method of projection pursuits has been applied to a few types of multispectral and hyperspectral applications [15, 26-27]. Jimenez and Landgrebe investigated parallel and sequential projection pursuit methods in a target recognition application to distinguish between two agriculture crops, soybean and corn [27]. In another study, Lin and Bruce evaluated the use of projection pursuits for dimensionality reduction using hyperspectral data for agricultural applications involving the detection of two target weeds, sicklepod and cocklebur [15]. They investigated three types of projection pursuits: parallel

parametric projection pursuits (PPPP), projection pursuits best band selection (PPBBS), and sequential parametric projection pursuits (SPPP).

The principal objective of projection pursuits is to overcome the “curse of dimensionality” while at the same time retaining information within the hyperspectral signal that is pertinent to target detection and classification. The goal of projection pursuits is to find a transformation matrix A that can transform the high dimensional data into an optimum low dimensional space which maximizes class separation, or target detection. The mathematical form of projection pursuit is given simply by

Y

A

T

X j where X j

is the original hyperspectral data ( d x M ) of class j , A is the transformation matrix ( d x n ), and Y is the projected data ( n x M ). The d in the original data is the number of spectral bands, M is the number samples being projected, and n is the dimension of the subspace in which the data will be projected. The columns of the transformation matrix A are orthogonal. The nonzero elements in the column of the transformation matrix contain the weights of the potential projections. The transformation matrix A is optimized by the projection index which is usually expressed as I ( A T X ). In a two class problem, the optimization can be based on the classification separation of the two classes. The optimization is performed by transforming the two classes into a lower dimension subspace. After the classes have been transformed, the projection index is measured to determine if the transformation is improving class separation. The selection of the correct projection index can greatly affect the efficacy of the projection pursuits method.

Methodologies

I. Flow Chart

Below is a block diagram representing the designed classification system.

II. Data Collection

Figure 2 Block Diagram of Classification System

The data for this project was collected via an Analytical Spectral Devices (ASD)

Fieldspec Pro handheld spectroradiometer, which captures individual hyperspectral signatures of a target in question. The device has a spectral range of 350 – 2500 nm, spectral resolution of 3 nm @ 700 nm and 10 nm @ 1400 - 2100 nm, and uses a single

512 element silicon photodiode array for sampling 350 - 1000 nm and two separate, graded index Indium-Gallium-Arsenide photodiodes for the 1000 - 2500 nm range [28].

The device was held approximately four feet above nadir when each measurement was taken and a 25

instantaneous field of view (IFOV) foreoptic was used to take all readings. The dataset consisted of 130 Cogongrass samples and 130 Johnsongrass samples collected at Eastman Farms on Artesia Road in Oktibbeha County, Mississippi.

All samples were collected within +/- 2 hours of solar noon over one weekend in August of 2004, and targets were not revisited.

III. Feature Reduction

A. Spectral Band Grouping

In order to study the effects of the spectral band grouping method upon this project's two-class problem, spectrally-reduced datasets had to be created. Hyperspectral signatures with approximately 1nm resolution per spectral band were used to create lower spectral resolution datasets. The spectral resolution of these high resolution signatures was reduced, or grouped, using an averaging filter. The data was subjected to a moving average, which was used to average a progressive number of samples, N , where N represents the given level of downsampling. The resulting averaged sample was then placed in its appropriate position in the final dataset for that given N . For example, if N =

30, the first 30 samples of the original dataset would be averaged. The resulting data

sample would be the first data sample of the respective downsampled dataset. Beginning with data sample 31, the next 30 data samples of the original dataset would be averaged, with the result being the second data sample of the downsampled dataset. This process would be repeated until all the original data samples had been used. Thus a new downsampled dataset would be created.

Datasets were simulated for each of the following levels of spectral resolution, or in this case reduction, since the original test data was high spectral resolution: [1 5 10 50

100 500 1000]. A level of 1 indicates the spectral resolution is at the original full resolution. A level of L indicates that the spectral resolution was reduced by a factor of

L , and that the resulting spectral resolution is 1/ L that of the original dataset. It can be seen that the levels of spectral resolution decrease in a sort of logarithmic fashion. It is necessary to note that spectral resolution can be viewed in many ways, such as the number of bands in a signal, the spectral sampling period (separation between adjacent band center points), the FWHM, or 2 times the FWHM (if accounting for Nyquist

Theory). For this reduction method, the spectral resolution is defined as the FWHM.

That is, the sampling period and the FWHM are equivalent.

B. Linear Discriminant Analysis

The application of linear discriminant analysis to this project's two-class problem is very straightforward. First, within-class scatter matrix is computed as follows.

S w

c n i

1 j

1

( x i , j

m i

) t

( x i , j

m i

)

Next, the weighting function, w , is computed in the following manner. w

S w

1

( m

1

m

2

)

Finally, the linearly transformed, or reduced, dataset y is obtained by implementing the following formula. y

w t x

C. Sequential Projection Pursuits

This section contains the constraints and approach of how the SPPP algorithm constructs the matrix A . The dimension of the projection matrix is determined by the number bands or features the training samples has and group of neighboring bands . The columns of the transformation matrix are orthogonal and contain zero and nonzero elements. The columns of the matrix A are zero for every entry except for the position of the adjacent bands. The structure of the transformation matrix A is shown in Figure 3, where n represents the length of the elements in each vector and the k represents the total number of groups. In the adjacent band selection, no two groups will have the same feature, which is illustrated in Figure 3. Every group of adjacent bands will be linearly combined to produce one feature. The manner in which these bands are combined depends on which projection is selected for that group. In this study, three potential projections were available for each group. These included a simple average, a Gaussianweighted average, and PCA. The projection that maximized the projection index, i.e.

Bhattacharyya distance, was selected for each group. Hence the name “projection pursuits.” In this study, Bhattacharyya distance is used as the projection index. The mathematical expression for the Bhattacharyya distance for a two-class problem is shown below,

B

1

8

( M

2

M

1

) T

1

2

2

1

( M

2

M

1

)

1

2 ln

1

2

1

2

2 where M i

is the mean of class i and the Σ i

is the covariance for class i .

A

A

1 , 1

A

1 , n

0

0

0

0

A

2 , 1

A

2 , n

0

0

A k

1 , n

0

0

0

0

A k

1 , 1

A

0

0

k , 1

A k , n

Figure 3 SPPP transformation matrix formulization

The SPPP procedure is completed as follows:

1.

An initial matrix A

is constructed, for which each adjacent band A k-1,1

– A k-1,n

is chosen.

2.

Using the initial matrix A

, the high dimensional data is transformed and an initial global Bhattacharyya distance is computed, B

.

3.

While maintaining all A k,n

’s constant, A

1,1

– A

1,n

group is split in half and the high dimensional data set is transformed and the global Bhattacharyya distance is computed, B

1

.

4.

Step 3 is repeated for each value of k , until each group of adjacent bands has been spilt and a Bhattacharyya distance B i

is calculated for i =1:k creating a

Bhattacharyya distance vector B .

5.

The maximum value in vector the m th

B is obtained, B m

. Holding all groups constants,

group is split in the initial transform A

and the matrix is stored.

6.

The Bhattacharyya distance B m

is compared to B

and if B m

> B

then B

= B m

and steps 2 – 6 are repeated until the global Bhattacharyya index stops increasing or the number of groups in the transformation matrix exceeds the original number of adjacent bands.

IV. Classification

For classification purposes the reduced features for each method are subjected to jacknife testing, which takes a given dataset, randomly sorts it, and then takes one half to use as training data and leaves the other half to use as testing data. A nearest neighbor classifier is then used to classify the features.

Results

Results for the SBG, LDA, and SPPP feature reduction methods can be seen in

Figures 4, Tables 1 and 2, and Figure 5, respectively.

100

95

90

85

80

75

1 2 5 10

Increasing FWHM

50 100 500

Figure 4 Overall Accuracies for SBG Method

1000

Table 1 Error Matrix for LDA Method

Cogongrass Johnsongrass

Producer

Accuracies (%)

User

Accuracies (%)

Overall Accuracy

90

89

88

87

86

85

84

83

82

81

80

79

Table 2 Representative Portion of S w

Matrix for LDA Method

2.56E-04 2.56E-04 2.57E-04 2.58E-04 2.60E-04 2.61E-04 2.63E-04 2.63E-04 2.64E-04

2.55E-04 2.55E-04 2.56E-04 2.57E-04 2.59E-04 2.60E-04 2.61E-04 2.62E-04 2.63E-04

2.55E-04 2.55E-04 2.56E-04 2.57E-04 2.59E-04 2.60E-04 2.61E-04 2.62E-04 2.63E-04

2.56E-04 2.56E-04 2.57E-04 2.58E-04 2.60E-04 2.61E-04 2.62E-04 2.63E-04 2.64E-04

2.57E-04 2.57E-04 2.58E-04 2.60E-04 2.62E-04 2.63E-04 2.64E-04 2.65E-04 2.65E-04

2.59E-04 2.59E-04 2.60E-04 2.62E-04 2.63E-04 2.64E-04 2.66E-04 2.66E-04 2.67E-04

2.60E-04 2.60E-04 2.61E-04 2.63E-04 2.64E-04 2.66E-04 2.67E-04 2.67E-04 2.68E-04

2.61E-04 2.61E-04 2.62E-04 2.64E-04 2.66E-04 2.67E-04 2.69E-04 2.69E-04 2.70E-04

2.62E-04 2.62E-04 2.63E-04 2.65E-04 2.66E-04 2.67E-04 2.69E-04 2.70E-04 2.71E-04

50 55

Group Size

60 65

Figure 5 Overall Accuracies for SPPP Method

Conclusions

It can be seen in Figure 4 that the SBG method generally results in very high classification accuracies. However, the accuracies are seen to increase for a moment as

the FWHM increases, or, as the spectral resolution as defined for this problem decreases.

This is likely due to the fact that there exists some high frequency noise that is smoothed out by the moving average filter that is used to create the spectrally-reduced datasets.

However, high accuracies remain throughout the spectral ranges, and it is very surprising to see the high accuracies that are sustainable for relatively low spectral resolutions.

The results in Table 1 show that the LDA method results in very poor classification accuracies for both classes. This is surprising, since LDA is designed to find the optimum direction along which classes can be discriminated. However, consider the portion of the S w

matrix shown in Table 2. It can be seen that the matrix is very sparse, that is, it contains many values that are very close to zero. In this case, then, the inverse of the matrix is ill-defined, that is,

S w

* S w

1

I where I is the identity matrix. This results in poor results for the LDA method.

Results for the SPPP method in Figure 5 show that groupings of 55-65 give high accuracies in the range of about 80-90%, with the highest accuracies occurring for the odd grouping numbers. This is surprising, since it would normally be assumed that the larger groupings would result in higher accuracies due to large band correlations.

Acknowledgements

We would like to thank John Ball and Abhinav Mathur for assisting in gathering the data for this project. We would also like to thank Dr. Lori Mann Bruce for allowing us to use code for simple classification purposes.

References

[1] E. R. R. L. Johnson and D. G. Shilling, PCA Alien Plant Working Group – Cogon

Grass(Imperata cylindrical), “Cogon Grass,” [Online]. Available: http://www.nps.gov/plants/alien/fact/imcy1.htm

[2] K. D. Burnell, J. D. Byrd, Jr., D. B. Mask, J. W. Barnett, C. M. Cofer, L. M. Bruce,

“Differentiation of Cogongrass (Imperata cylindrical) and other Grassy Weeds using

Hyperspectral Reflectance Data,”

Weed Sci. Soc. Am. Abst.

, vol. 40, 2003.

[3] J. Taylor, Jr., J. Byrd, K. Burnell, B. Mask, J. Barnett, L. M. Bruce, Y. Huang, M.

Carruth, “Using Remote Sensing to Differentiate Cogongrass [Imperata cylindrical (L.)

Beauv.] and other Grassy Weeds,”

Proc. 7 th

International Conference on the Ecology and

Management of Alien Plant Invasions , 2003.

[4] S. De Backer, P. Kempeneers, W. Debruyn, and P. Scheunders, “A Band Selection

Technique for Spectral Classification,”

IEEE Geoscience and Remote Sensing Letters , vol. 2, no. 3, pp. 319-323, July 2005.

[5] S. Venkataraman, “Exploiting Remotely Sensed Hyperspectral Data Via Spectral

Band Grouping for Dimensionality Reduction and Multiclassifiers,” Master’s Thesis submitted to Mississippi State University , August 2005.

[6] B. Smith, Fundamentals of Fourier Transform Infrared Spectroscopy , CRC Press

LLC, Boca Raton, Florida, 1996.

[7] A. A. Monakov, J. Vivekanandan, A. S. Stjernman, and A. K. Nystrom, “Spatial and

Frequency Averaging Techniques for a Polarimetric Scatterometer System,”

IEEE

Transactions on Geoscience and Remote Sensing , vol. 32, no. 1, pp. 187-196, January

1994.

[8] C. S. Burrus, R. A. Gopinath, and H. Guo, Wavelets and Wavelet Transforms ,

Prentice-Hall, 1998.

[9] Y. Huang, L.M. Bruce, J. Byrd, B. Mask, “Using Wavelet Transforms of

Hyperspectral Reflectance Curves for Automated Monitoring of Imperata cylindrica

(cogongrass),”

Proc. IEEE International Geoscience and Remote Sensing Symposium, pp. 2244-2246, Sydney, Australia, July 2001.

[10] J. Li and L. M. Bruce, “Wavelet-Based Feature Extraction of Improved Endmember

Abundance Estimation in Linear Unmixing of Hyperspectral Signals,”

IEEE Tranactions on Geoscience and Remote Sensing , vol. 42, no. 3, pp. 644-649, March 2004. (See

Correction, IEEE Transactions on Geoscience and Remote Sensing , vol. 42, no. 5, pp.

1122, May 2004.)

[11] L. M. Bruce, C. H. Koger and J. Li, “Dimensionality-reduction of Hyperspectral

Data using Discrete Wavelet Transform Feature-extraction,” IEEE Transactions on

Geoscience and Remote Sensing , vol. 40, no. 10 pp.2318-2338, October 2002.

[12] L. M. Bruce, H. Tamhankar, A. Mathur, and R. King, “Multiresolutional Texture

Analysis of Multispectral Imagery for Automated Ground Cover Classification,’

Proc.

IEEE International Geoscience and Remote Sensing Symposium , vol. 1, pp. 312-314,

June 2002.

[13] R. O. Duda, P. E. Hart, and D. G. Stork, Pattern Classification (2nd ed), Wiley,

New York, NY, 2000.

[14] A. Kaarna, P. Zemcik, H. Kalviainen, and J. Parkkinen, “Compression of

Multispectral Remote Sensing Images using Clustering and Spectral Reduction,” IEEE

Transactions on Geoscience and Remote Sensing , vol. 38, no. 2, pp. 1073-1082, March

2000.

[15] A. Ifarraguerri, and C. Chang, “Unsupervised Hyperspectral Image Analysis with

Projection Pursuit,”

IEEE Transactions on Geoscience and Remote Sensing , vol. 38, no.

6, pp. 2529-2538, November 2000.

[16] C. Chang, Q. Du, T. Sun, and M. L. G. Althouse, “A Joint Band Prioritization and

Band-Decorrelation Approach to Band Selection for Hyperspectral Image Classfication,”

IEEE Transactions on Geoscience and Remote Sensing , vol. 37, no. 6, pp. 2631-2641,

November 1999.

[17] A. Malhi and R. X. Gao, “PCA-Based Feature Selection Scheme for Machine

Defect Classification,”

IEEE Transactions of Instrumentation and Measurement , vol. 53 no. 6, pp. 1517-1525, December 2004.

[18] T. Stamkopoulos, K. Diamantaras, N. Maglaveras, and M. Strintzis, “ECG Analysis using Nonlinear PCA Neural Networks for Ischemia Detection,”

IEEE Transactions on

Signal Processing , vol 46, no. 11, pp. 3058-3067, November 1998.

[19] A. Cheriyadat , L.M. Bruce

, “

Why Principal Component Analysis is not an

Appropriate Feature Extraction Method for Hyperspectral Data,”

Proc. IEEE Geoscience and Remote Sensing Symposium ,vol. 6 , pp.3420 – 3422, 21-25, July 2003.

[20] C. Chang and H. Ren, “An Experiment-Based Quantitative and Comparative

Analysis of Target Detection and Image Classification Algorithms for Hyperspectral

Imagery,” IEEE Transactions on Geoscience and Remote Sensing , vol. 38, no. 2, pp.

1044-1063, March 2000.

[21] C. Chang, “Target Signature-Constrained Mixed Pixel Classification of

Hyperspectral Imagery,”

IEEE Transactions on Geoscience and Remote Sensing , vol. 40, no. 5, pp. 1065-1081, May 2002.

[22] A. V. Bogdanov, S. Sandven, O. M. Johannessen, V. Y. Alexandrov, and L. P.

Bobylev, “Multisensor Approach to Automated Classification of Sea Ice Image Data,”

IEEE Transactions on Geoscience and Remote Sensing , vol. 43, no. 7, pp. 1648-1664,

July 2005.

[23] H. Lin and L. M. Bruce, “Projection Pursuits for Dimensionality Reduction of

Hyperspectral Signals in Target Recognition Applications,”

IEEE Proc. International

Geoscience and Remote Sensing Symposium , vol. 2, pp. 960-963, 2004.

[24] S. B. Serpico, M. D’Inca, and G. Moser, “Design of Spectral Channels for

Hyperspectral Image Classification,” Proc. IEEE International Geoscience and Remote

Sensing Symposium , vol. 2, pp. 956-959, 2004.

[25] A. Mathur, “Dimensionality Reduction of Hyperspectral Signatures for Optimized

Detection of Invasive Species,” Master’s Thesis submitted to Mississippi State

University , December 2002.

[26] L. M. Bruce and H. Lin, “Projection Pursuits for Dimensionality Reduction of

Hyperspectral Signals in Target Recognition Applications,”

IEEE International

Geoscience and Remote Sensing Symposium (IGARSS) , vol. 2, pp. 960-963, 2004.

[27] L. O. Jimenez, D. A. Landgrebe, “Projection Pursuits for High Dimensional Feature

Reduction: Parallel and Sequential Approaches”,

IEEE International Geoscience and

Remote Sensing Symposium (IGARSS) , vol. 1, pp. 148-150, 1995

[28] Analytical Spectral Devices FieldspecPro Spectroradiometer specifications.

Available: http://asdi.com/products_specifications-FSP.asp

APPENDIX A: MATLAB CODE

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% This program uses a moving average to simulate

% spectrally reduced data as a function of

% FWHM's

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%% clear all ; close all ; warning off ; clc; load( 'cogon.txt' ); load( 'johnson.txt' ); data = [cogon,johnson]; class_num = 2;

%%%%%%%%%%%%% spectral band grouping method rate = [1 2 5 10 50 100 500 1000]; acc = []; for i = 1:length(rate)

filter = ones(1, rate(i))/rate(i); % create averaging filter

averaged = conv2(filter,data); % average data

new_averaged = averaged(rate(i):length(data),:); % truncate partial overlaps

reduced_data = downsample(new_averaged,rate(i)); % create reduced dataset

target = reduced_data(:,randperm(130));

nontarget = reduced_data(:,randperm(130)+130);

train_data = [[ones(1,65);target(:,1:65)],[2*ones(1,65);nontarget(:,1:65)]];

test_data = [[ones(1,65);target(:,66:130)],[2*ones(1,65);nontarget(:,66:130)]];

conf = nearest_neighbor(train_data, test_data, class_num);

acc = [acc;conf(3,3)];

clear reduced_data ;

clear train_data ;

clear test_data ;

end acc % display overall accuracies of spectral band grouping method

%%%%%%%%% lda method lin_acc = linear_discriminant(data);

%%%%%%%%% pp method pp_50_cog = xlsread( '8443_Final_Proj.xls' , 'Results_50_8443_Cogon' ); pp_50_john = xlsread( '8443_Final_Proj.xls' , 'Results_50_8443_Johnson' ); pp_55_cog = xlsread( '8443_Final_Proj.xls' , 'Results_55_8443_Cogon' ); pp_55_john = xlsread( '8443_Final_Proj.xls' , 'Results_55_8443_Johnson' ); pp_60_cog = xlsread( '8443_Final_Proj.xls' , 'Results_60_8443_Cogon' ); pp_60_john = xlsread( '8443_Final_Proj.xls' , 'Results_60_8443_Johnson' ); pp_65_cog = xlsread( '8443_Final_Proj.xls' , 'Results_65_8443_Cogon' ); pp_65_john = xlsread( '8443_Final_Proj.xls' , 'Results_65_8443_Johnson' ); pp_50 = [pp_50_cog,pp_50_john]; pp_55 = [pp_55_cog,pp_55_john]; pp_60 = [pp_60_cog,pp_60_john]; pp_65 = [pp_65_cog,pp_65_john]; target_50 = pp_50(:,randperm(130)); nontarget_50 = pp_50(:,randperm(130)+130); target_55 = pp_55(:,randperm(130)); nontarget_55 = pp_55(:,randperm(130)+130); target_60 = pp_60(:,randperm(130)); nontarget_60 = pp_60(:,randperm(130)+130); target_65 = pp_65(:,randperm(130)); nontarget_65 = pp_65(:,randperm(130)+130); train_data_50 = [[ones(1,65);target_50(:,1:65)],[2*ones(1,65);nontarget_50(:,1:65)]]; test_data_50 = [[ones(1,65);target_50(:,66:130)],[2*ones(1,65);nontarget_50(:,66:130)]]; train_data_55 = [[ones(1,65);target_55(:,1:65)],[2*ones(1,65);nontarget_55(:,1:65)]]; test_data_55 = [[ones(1,65);target_55(:,66:130)],[2*ones(1,65);nontarget_55(:,66:130)]]; train_data_60 = [[ones(1,65);target_60(:,1:65)],[2*ones(1,65);nontarget_60(:,1:65)]]; test_data_60 = [[ones(1,65);target_60(:,66:130)],[2*ones(1,65);nontarget_60(:,66:130)]]; train_data_65 = [[ones(1,65);target_65(:,1:65)],[2*ones(1,65);nontarget_65(:,1:65)]]; test_data_65 = [[ones(1,65);target_65(:,66:130)],[2*ones(1,65);nontarget_65(:,66:130)]]; conf_50 = nearest_neighbor(train_data_50, test_data_50, class_num) conf_55 = nearest_neighbor(train_data_55, test_data_55, class_num) conf_60 = nearest_neighbor(train_data_60, test_data_60, class_num) conf_65 = nearest_neighbor(train_data_65, test_data_65, class_num)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% This program uses reduces datasets using LDA

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

function lin_acc = linear_discriminant(data) class_num = 2; target = data(1:2049,1:130)'; nontarget = data(1:2049,131:260)'; x1 = target; x2 = nontarget; m1 = mean(x1)'; m2 = mean(x2)';

S1 = 0;

S2 = 0; for i = 1:10

S1 = S1 + (x1(i,:)' - m1)*(x1(i,:)' - m1)';

S2 = S2 + (x2(i,:)' - m2)*(x2(i,:)' - m2)'; end

Sw = S1 + S2; w = inv(Sw)*(m1 - m2); y1 = w'*x1'; y2 = w'*x2'; train_data = [[ones(1,65);y1(:,1:65)],[2*ones(1,65);y2(:,1:65)]]; test_data = [[ones(1,65);y1(:,66:130)],[2*ones(1,65);y2(:,66:130)]]; train_class_dist = [65 65]; conf = nearest_neighbor(train_data, test_data, class_num) lin_acc = conf(3,3) function project_8443 close all ; warning off ; clc; clear all ; disp(sprintf( 'Enter the size of each weight group? ' )); u = input( '--> ' , 's' ); disp(sprintf( '\n' )); disp(sprintf( 'Enter the number of features in each samples ? ' )); v = input( '--> ' , 's' );

num_group = str2num(u); %initial number of groups of bands for tranform matrix A temp = floor((str2num(v))/num_group);

Sig_group_size = temp* num_group;

% twest_cogon_samples= load ('H:twest_cogon_samples_cropped_2050.txt'); %assuming data1 is target class and data2 is nontarget

% twest_johnson_samples= load ('H:twest_johnson_samples_cropped_2050.txt'); %and data is organized such that hyperspectral sigs

% %are in columns and both data1 and data2 have same number

% %spectral bands twest_cogon_samples= load ( 'H:twest_test_cogon.txt' ); %assuming data1 is target class and data2 is nontarget twest_johnson_samples= load ( 'H:twest_test_johnson.txt' ); %and data is organized such that hyperspectral sigs

%are in columns and both data1 and data2 have same number

%spectral bands twest_cogon_samples = twest_cogon_samples(1:Sig_group_size,1:65); twest_johnson_samples = twest_johnson_samples(1:Sig_group_size,1:65);

ppindex=0;

[num_band,num_sigs1]=size(twest_cogon_samples); %determine number of bands and number

[num_band,num_sigs2]=size(twest_johnson_samples); %of training sigs in each class

%*****************initialize matrix A**********************************

PCA_coeff = pca_coeff(twest_cogon_samples,twest_johnson_samples,num_group); % PCA Coeff.s for the LDA transformation

% matrix

% matrix

A_PCA = PCA_mat(num_band,num_sigs1,PCA_coeff,num_group); % Constructs the PCA transformation matrix

[O P]=size(A_PCA);

A_LDA = ones(0,P);

A_gaussian = Gaussian(num_band,num_sigs1,num_group); % Constructs the Gaussian transformation matrix

A_average = Average(num_band,num_sigs2,num_group); % Constructs the Average transformation

Matrix

%**********************************************************************

y1_gaussian = pptransform(twest_cogon_samples,A_gaussian); %transform Target using the gaussian transformation Matrix

y2_gaussian = pptransform(twest_johnson_samples,A_gaussian); %transform Nontarget using the gaussian transformation Matrix

y1_PCA = pptransform(twest_cogon_samples,A_PCA); %transform Target using the PCA transformation Matrix

y2_PCA = pptransform(twest_johnson_samples,A_PCA); %transform Nontarget using the PCA transformation Matrix

y1_average = pptransform(twest_cogon_samples,A_average); %transform Target using the average transformation Matrix

y2_average = pptransform(twest_johnson_samples,A_average); %transform Nontarget using the average transformation Matrix

%**************************************************************************

% Calculates the Bhattacharyya distance between the non-target and the target

pp_gauss_index = BD_Cal(y1_gaussian',y2_gaussian');

pp_average = BD_Cal(y1_average',y2_average');

NA = length(pp_average);

pp_LDA_index = zeros(1,NA);

pp_PCA_index = BD_Cal(y1_PCA',y2_PCA');

pp_LDA_index = zeros(1,length(pp_PCA_index));

%**************************************************************************

% Returns the highiest value between the two potential project and the projection

[H_BD_proj,type] = BD_Comp(pp_gauss_index,pp_average,pp_LDA_index,pp_PCA_index,4);

%**************************************************************************

% Builds the Opt. Transformation Matrix

TransConst1 = TransConst(type,A_gaussian,A_average,A_LDA,A_PCA);

%**************************************************************************

% Determines the overall Bhattacharayya Distance for intitial Transfomation

% matrix

y1_intitial_cogon = pptransform(twest_cogon_samples,TransConst1); %transform Target using the intitial transformation Matrix

y2_intitial_johnson = pptransform(twest_johnson_samples,TransConst1); %transform Nontarget using the intitial transformation Matrix

Max_initial_Bhatt = Bhattacharyya(y1_intitial_cogon,y2_intitial_johnson); % Calculates the

Bhattacharyya distance for the int. Transformation Matrix

Bhatt_Save = [];

%**************************************************************************

% Sequential Projection Pursuit

%************************************************************************** n = 0;

Max_num_grp = 0;

Bhatt_old_index = Max_initial_Bhatt;

Bhatt_new_index = Max_initial_Bhatt + 1;

while (Bhatt_new_index >= Bhatt_old_index)& (num_group >= Max_num_grp), %Loop for Sequential

Projection Pursuit

n = n+1; if n > 1

Bhatt_old_index = Bhatt_new_index; end ;

Bhatt_Save(n)=Bhatt_new_index;

%**************************************************************************

% Sequential Projection Pursuit

%**************************************************************************

% Performs the parallel projection pursuit for the Gaussian potential

% projection

[Split_gauss Split_Gauss_Bhatt Gauss_coeff_Matx] =

Parallel_A_Gauss_Seq(TransConst1,twest_cogon_samples,twest_johnson_samples,num_group,Max_initia l_Bhatt);

%**************************************************************************

% Performs the parallel projection pursuit for the Average potential

% projection

[Split_average Split_Avg_Bhatt Avg_coeff_Matx] =

Parallel_A_Average_Seq(TransConst1,twest_cogon_samples,twest_johnson_samples,Max_initial_Bhatt);

%**************************************************************************

NL = length(Split_average);

BL = length(Split_Avg_Bhatt);

NC = length(Avg_coeff_Matx);

Split_LDA = zeros(1,NL);

Split_LDA_Bhatt = zeros(1,BL);

LDA_coeff_Matx = ones(1,NC);

%**************************************************************************

% Performs the parallel projection pursuit for the PCA potential

% projection

[Split_PCA Split_PCA_Bhatt PCA_coeff_Matx] =

Parallel_A_PCA_Seq(TransConst1,twest_cogon_samples,twest_johnson_samples,Max_initial_Bhatt);

%**************************************************************************

%Compares the Bhattacharyya Distances of the results of the split group

%transformation matrix and returns the projection for each group with the

%highest projection

[Max_Split_Bhatt Max_Split_Proj Max_Max_Bhatt Max_Max_index]=

Sequential_Bhatt_Comp(Split_Avg_Bhatt,Split_PCA_Bhatt,Split_LDA_Bhatt,Split_Gauss_Bhatt);

%**************************************************************************

%Constructs the Transformation with the highest Class seperation

[Max_Trans_Matrix] =

Max_Mat_Seq(TransConst1,Max_Split_Proj,Max_Max_Bhatt,Max_Max_index,Avg_coeff_Matx,PCA_co eff_Matx,LDA_coeff_Matx,Gauss_coeff_Matx);

%**************************************************************************

%**************************************************************************

Bhatt_new_index =Max_Max_Bhatt;

TransConst1=Max_Trans_Matrix;

[M Max_num_grp]= size(TransConst1);

end ; twest_test_cogon= load ( 'H:twest_test_cogon.txt' ); %assuming data1 is target class and data2 is nontarget twest_test_johnson= load ( 'H:twest_test_johnson.txt' );

Results_8443_Johnsongrass = Max_Trans_Matrix'*twest_test_johnson(1:Sig_group_size,:);

Results_8443_Cogongrass = Max_Trans_Matrix'*twest_test_cogon(1:Sig_group_size,:); function [Split_gauss Split_Bhatt Atemp_coeff_Matx ]=

Parallel_A_Gauss_Seq(A,Target,Nontarget,num_group,Max_int)

%*****************************************************************

% Parallel_A_Gauss(A)

%

%

% A is a N x M matrices (N-spectral bands and M signatures)

% Atemp is a N x (M+1)

%

% Split_gauss : 4 = The Bhattacharyya Distance Increased

% 0 = The Bhattacharyya Distance did not increased

%

%

% Written by Terrance West April 10,2005

%

%******************************************************************

% A = ones(10,25); % Used to check function

[N,M] = size (A);

Atemp_gauss_split = zeros(N,M+M); for k = 1:M

Atemp = zeros(N,M+1); if (k == 1) % Accounts for the first column in the transformation matrix

Atemp(:,k+2:M+1) = A(:,k+1:M); % Places coefficients of old transformation

% matrix in new matrix accounting for the

% group split

group = find(A(:,k) ~= 0); % Finds the number of elements that are not zero

% in the kth group.

groupsize = size(group); % Finds the total number of elements in a column

group1 = group(1:(ceil(groupsize/2))); % Splits the coefficents in to two groups

group2 = group(((ceil(groupsize/2)+1):groupsize)); % Splits the coefficients in to two groups

% x1= (-ceil(size(group1)/2):1:(ceil(size(group1))/2)-1)'; % Computes the coefficients for the gaussian potential projection for

% gausst1 = zeros(1,ceil(size(group1))); % group1

% gausst1 = gaussmf(x1,[ceil(size(group1))/4 0]);

% x2= (-ceil(size(group2)/2):1:(ceil(size(group2))/2)-1)'; % Computes the coefficients for the gaussian potential projection for

% gausst2 = zeros(1,ceil(size(group2))); % group2

% gausst2 = gaussmf(x2,[ceil(size(group2))/4 0]);

[N1,M1]= size(group1);

[N2,M2]= size(group2);

gausst1 = gausswin(N1); % Computes the coefficients for the gaussian potential projection for group1

gausst2 = gausswin(N2); % Computes the coefficients for the gaussian potential projection for group2

Atemp(group1,k) = gausst1(group1,k); % Places the coefficients in to the new transformation matrix

Atemp(group2,k+1) = gausst2(1:(size(group2)),k); % Places the coefficients in to the new transformation matrix

Atemp_gauss_split(group1,k) = gausst1(group1,k); % Places the coefficients in to the new transformation matrix

Atemp_gauss_split(group2,k+1) = gausst2(1:(size(group2)),k); % Places the coefficients in to the new transformation matrix

% xlswrite('H:\Project Pursuit\Atemp_gauss.xls',Atemp,k); % Stores Transformation matrix in excel sheet

% which corresponds to with group was split.

Y_Target=pptransform(Target,Atemp); % Transforms the Target

Y_Nontarget=pptransform(Nontarget,Atemp); % Transforms the Nontarget

Bhatt_dist_gauss(k) = Bhattacharyya(Y_Target,Y_Nontarget); % Calculates the Bhattacharyya distance between the target and nontarget if Bhatt_dist_gauss(k)> Max_int

Split_gauss(k) = 4; else

Split_gauss(k) = 0; end ;

% xlswrite('H:\Project Pursuit\Bhatt_gauss_overall.xls',Bhatt_dist,k); % Stores the Bhattacharyya distance % which corresponds to with group was spli t.

elseif (k == M) % Accounts for the last column in the transformation matrix

Atemp(:,1:k-1) = A(:,1:k-1); % Places coefficients of old transformation

% matrix in new matrix accounting for the

% group split

group = find(A(:,k) ~= 0); % Finds the number of elements that are not zero

% in the kth group.

groupsize = size(group); % Finds the total number of elements in a column

group1 = group(1:(ceil(groupsize/2))); % Splits the coefficents in to two groups

group2 = group(((ceil(groupsize/2)+1):groupsize)); % Splits the coefficients in to two groups

% x1= (-ceil(size(group1)/2):1:(ceil(size(group1))/2)-1)'; % Computes the coefficients for the gaussian potential projection for

% gaussEDt1 = zeros(1,ceil(size(group1))); % group1

% gaussEDt1 = gaussmf(x1,[ceil(size(group1))/4 0]);

%

% x2= (-ceil(size(group2)/2):1:(ceil(size(group2))/2)-1)'; % Computes the coefficients for the gaussian potential projection for

% gaussEDt2 = zeros(1,ceil(size(group2))); % group2

% gaussEDt2 = gaussmf(x2,[ceil(size(group2))/4 0]);

[Ned1,Med1]=size(group1);

[Ned2,Med2]=size(group2);

gaussEDt1 = gausswin(Ned1);

gaussEDt2 = gausswin(Ned2);

Atemp(group1,k) = gaussEDt1; % Places the coefficients in to the new transformation matrix

Atemp(group2, k+1) = gaussEDt2; % Places the coefficients in to the new transformation matrix

Atemp_gauss_split(group1,(2*k)-1) = gaussEDt1; % Places the coefficients in to the new transformation matrix

Atemp_gauss_split(group2,(2*k+1)-1) = gaussEDt2; % Places the coefficients in to the new transformation matrix

% xlswrite('H:\Project Pursuit\Atemp_gauss.xls',Atemp,k); % Stores Transformation matrix in excel sheet

% which corresponds to with group was split.

Y_Target=pptransform(Target,Atemp); % Transforms the Target

Y_Nontarget=pptransform(Nontarget,Atemp); % Transforms the Nontarget

Bhatt_dist_gauss(k) = Bhattacharyya(Y_Target,Y_Nontarget); % Calculates the Bhattacharyya distance between the target and nontarget if Bhatt_dist_gauss(k)> Max_int

Split_gauss(k) = 4; else

Split_gauss(k) = 0; end ;

% xlswrite('H:\Project Pursuit\Bhatt_gauss_overall.xls',Bhatt_dist,k); % Stores the Bhattacharyya distance else

Atemp(:,1:k-1) = A(:,1:k-1); % Places coefficients of old transformation

% matrix in new matrix accounting for the

% group split

Atemp(:,k+2:M+1)=A(:,k+1:M); % Places coefficients of old transformation

% matrix in new matrix accounting for the

% group split

group = find(A(:,k) ~= 0); % Finds the number of elements that are not zero

% in the kth group.

groupsize= size(group); % Finds the total number of elements in a column

group1 = group(1:(ceil(groupsize/2))); % Splits the coefficents in to two groups

group2 = group(((ceil(groupsize/2)+1):groupsize)); % Splits the coefficients in to two groups

% x1= (-ceil(size(group1)/2):1:(ceil(size(group1))/2)-1)'; % Computes the coefficients for the gaussian potential projection for

% gaussMt1 = zeros(1,ceil(size(group1))); % group1

% gaussMt1 = gaussmf(x1,[ceil(size(group1))/4 0]);

%

% x2= (-ceil(size(group2)/2):1:(ceil(size(group2))/2)-1)'; % Computes the coefficients for the gaussian potential projection for

% gaussMt2 = zeros(1,ceil(size(group2))); % group2

% gaussMt2 = gaussmf(x2,[ceil(size(group2))/4 0]);

[N1,M1]= size(group1);

[N2,M2]= size(group2);

gaussMt1 = gausswin(N1);

gaussMt2 = gausswin(N2);

Atemp(group1,k) = gaussMt1; % Places the coefficients in to the new transformation matrix

Atemp(group2, k+1) = gaussMt2; % Places the coefficients in to the new transformation matrix

Atemp_gauss_split(group1,(2*k)-1) = gaussMt1; % Places the coefficients in to the new transformation matrix

Atemp_gauss_split(group2,(2*k+1)-1) = gaussMt2; % Places the coefficients in to the new transformation matrix

% xlswrite('H:\Project Pursuit\Atemp_gauss.xls',Atemp,k); % Stores Transformation matrix in excel sheet

% which corresponds to with group was split.

Y_Target=pptransform(Target,Atemp); % Transforms the Target

Y_Nontarget=pptransform(Nontarget,Atemp); % Transforms the Nontarget

Bhatt_dist_gauss(k) = Bhattacharyya(Y_Target,Y_Nontarget); % Calculates the Bhattacharyya distance between the target and nontarget if Bhatt_dist_gauss(k)> Max_int

Split_gauss(k) = 4; else

Split_gauss(k) = 0; end ;

% xlswrite('H:\Project Pursuit\Bhatt_gauss_overall.xls',Bhatt_dist,k); % Stores the Bhattacharyya distance end ; end ;

Split_Bhatt = Bhatt_dist_gauss;

Split_gauss = Split_gauss;

Atemp_coeff_Matx = Atemp_gauss_split; function [Max_Trans_Matrix] =

Max_Mat_Seq(TransConst1,Max_Proj,Max_Max_Bhatt,Max_Max_index,Avg_coeff_Matx,PCA_coeff_M atx,LDA_coeff_Matx,Gauss_coeff_Matx);

%**************************************************************************

% This function constructs the Overall Transformation Matrix for the Sequential Projection Pursuits

% [Max_Trans_Matrix] = Max_Mat_Seq(TransConst1,Max_Proj,Max_Max_Bhatt,Max_Max_index);

%

%

% 1 = Average has the greatest

% 2 = PCA has the greatest

% 4 = Gauss has the greatest

%

% Written by Terrance West August 1, 2005

%**************************************************************************

[M N]= size(TransConst1);

Max_Trans = zeros(M,N+1);

if (Max_Max_index == 1) % Constructs the Maximum Transformation matrix if the first group was chosen

Max_Trans(:,Max_Max_index+2:N+1)= TransConst1(:,Max_Max_index+2:N); switch (Max_Proj(Max_Max_index)); case (1)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

Avg_coeff_Matx(1:M,Max_Max_index:(Max_Max_index+1)); case (2)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

PCA_coeff_Matx(1:M,Max_Max_index:(Max_Max_index+1)); case (3)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

LDA_coeff_Matx(1:M,Max_Max_index:(Max_Max_index+1)); case (4)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

Gauss_coeff_Matx(1:M,Max_Max_index:(Max_Max_index+1)); end ; else if (Max_Max_index == N) % Constructs the Maximum Transformation matrix if the last group was chosen

Max_Trans(:,1:Max_Max_index-1)= TransConst1(:,1:Max_Max_index-1); switch (Max_Proj(Max_Max_index)); case (1)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

Avg_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); case (2)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

PCA_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); case (3)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

LDA_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); case (4)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

Gauss_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); end ; else % Constucts the Transformation matrix if the group was not the last and not the first group chosen

Max_Trans(:,1:Max_Max_index-1)=TransConst1(:,1:Max_Max_index-1);

Max_Trans(:,Max_Max_index +2:N+1) = TransConst1(:,Max_Max_index +1:N); switch (Max_Proj(Max_Max_index)); case (1)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

Avg_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); case (2)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

PCA_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); case (3)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

LDA_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); case (4)

Max_Trans(1:M,Max_Max_index:(Max_Max_index+1)) =

Gauss_coeff_Matx(1:M,2*Max_Max_index-1:(2*Max_Max_index+1)-1); end ; end ; end ;

Max_Trans_Matrix = Max_Trans;

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% This function accepts and classifies data samples using a distance-weighted

% nearest neighbor classification scheme

%

% INPUT VARIABLES

% train_data -- training data for the system

% test_data -- test data for the system

% class_num -- number of classes to use in classification

%

% OUTPUT VARIABLES

% conf -- confusion matrix with user, producer, and overall accuracies

%

%

% NOTE: This function assumes that all data samples are given in columns

% and that the first row of each data file is a header giving the

% class number ("ground truth") of the data.

%

% written by: Wesley Johnson 07/27/05

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

%%%%%%%%%%%%%%%%%%%%%%%%%%% function conf = nearest_neighbor(train_data, test_data, class_num)

conf = zeros(class_num + 1,class_num + 1); % create null confusion matrix based on number of classes

[tr_row,tr_col] = size(train_data); % get sizes of training and test data

[ts_row,ts_col] = size(test_data); train_class_index = train_data(1,:); test_class_index = test_data(1,:); for i = 1:ts_col % nearest neighbor classification

class_index = train_class_index;

test_data_sample = test_data(:,i); % get i-th test sample for j = 1:tr_col % get Euclidean distances between i-th test sample and all training samples

dist(j) = sqrt(sum((train_data(:,j) - test_data_sample).^2)); end

[sorted_dist, sorted_dist_index] = sort(dist); % sort distances in ascending order for m = 1:length(sorted_dist_index) % sort class index according to sorted distances

sorted_class_index(m) = class_index(sorted_dist_index(m)); end for n = 1:class_num % get vector of weighted sums

weight(n) = sum(1./(sorted_dist(find(sorted_class_index == n)))); end

find_max = find(weight == max(weight)); % find out if maximum of weight occurs in more than one place if length(find_max) == 1 % classify test data sample

classification(i) = find_max; else

classification(i) = find_max(1); end end

for o = 1:class_num % create confusion matrix

class_test = find(test_class_index == o); for q = 1:length(class_test) if classification(class_test(q)) == o

conf(o,o) = conf(o,o) + 1; else

conf(o,classification(class_test(q))) = conf(o,classification(class_test(q))) + 1; end end end for p = 1:class_num % compute accuracies

conf(p,class_num + 1) = 100*(conf(p,p)/sum(conf(p,1:class_num))); % producer accuracies if isnan(conf(p,class_num + 1)) == 1

conf(p,class_num + 1) = 0; end

conf(class_num + 1,p) = 100*(conf(p,p)/sum(conf(1:class_num,p))); % user accuracies if isnan(conf(class_num + 1,p)) == 1

conf(class_num + 1,p) = 0; end

conf(class_num + 1,class_num + 1) = conf(class_num + 1,class_num + 1) + conf(p,p); % get sum of correct classifications to use in

% overall classification end conf(class_num + 1,class_num + 1) = 100*(conf(class_num + 1,class_num +

1)/sum(sum(conf(1:class_num,1:class_num)))); % overall accuracy