2.6 Notes - Parkland School District

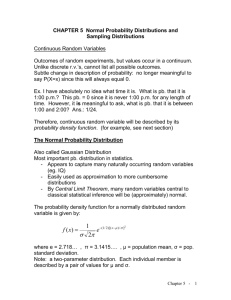

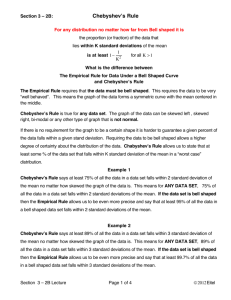

CP Prob & Stats Section 2.6 – Interpreting the Standard Deviation

In this section, we learn how the standard deviation provides a measure of variability for a single sample. To understand how the standard deviation provides a measure of variability of a data set, we need to consider a specific data set and answer the following questions:

1. How many measurements are within 1 standard deviation of the mean?
2. How many measurements are within 2 standard deviations of the mean?

As our data set, let’s use the average SAT scores for each of the 50 states and the District of Columbia in 2000.

| State | SAT Score | State | SAT Score | State | SAT Score |
|---|---|---|---|---|---|
| Alabama | 1114 | Kentucky | 1098 | N. Dakota | 1197 |
| Alaska | 1034 | Louisiana | 1120 | Ohio | 1072 |
| Arizona | 1044 | Maine | 1004 | Oklahoma | 1123 |
| Arkansas | 1117 | Maryland | 1016 | Oregon | 1054 |
| California | 1015 | Massachusetts | 1024 | Pennsylvania | 995 |
| Colorado | 1071 | Michigan | 1126 | Rhode Island | 1005 |
| Connecticut | 1017 | Minnesota | 1175 | S. Carolina | 966 |
| Delaware | 998 | Mississippi | 1111 | S. Dakota | 1175 |
| D.C. | 980 | Missouri | 1149 | Tennessee | 1116 |
| Florida | 998 | Montana | 1089 | Texas | 993 |
| Georgia | 974 | Nebraska | 1131 | Utah | 1139 |
| Hawaii | 1007 | Nevada | 1027 | Vermont | 1021 |
| Idaho | 1081 | N. Hampshire | 1039 | Virginia | 1009 |
| Illinois | 1154 | N. Jersey | 1011 | Washington | 1054 |
| Indiana | 999 | N. Mexico | 1092 | W. Virginia | 1037 |
| Iowa | 1189 | New York | 1000 | Wisconsin | 1181 |
| Kansas | 1154 | N. Carolina | 988 | Wyoming | 1090 |

The mean of this data is 1066. The standard deviation of the data is 66. We look at the intervals $\bar{X} - s$ to $\bar{X} + s$ and $\bar{X} - 2s$ to $\bar{X} + 2s$. These are the intervals 1 standard deviation from the mean and 2 standard deviations from the mean, respectively. With our data set, $(\bar{X} - s, \bar{X} + s) = (1000, 1132)$ and $(\bar{X} - 2s, \bar{X} + 2s) = (934, 1198)$. Within 1 standard deviation of the mean are 34 measurements, or 66.7% of the data. All 51 measurements, or 100% of the data, fall within 2 standard deviations of the mean. These observations identify criteria for interpreting a standard deviation that apply to any set of data, whether a population or a sample.
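The counting procedure described above (find the mean and standard deviation, build the interval, count what falls inside) can be sketched in Python. This is only an illustration: the function name `fraction_within` and the small `sample` list are made up for this sketch and are not the SAT data. It assumes the sample standard deviation (dividing by n - 1) and counts endpoints as "within."

```python
from statistics import mean, stdev

def fraction_within(data, k):
    """Fraction of measurements in [mean - k*s, mean + k*s], endpoints included."""
    center = mean(data)
    s = stdev(data)  # sample standard deviation (divides by n - 1)
    low, high = center - k * s, center + k * s
    inside = sum(1 for x in data if low <= x <= high)
    return inside / len(data)

# Hypothetical sample for illustration only (not from the notes).
sample = [2, 4, 4, 4, 5, 5, 7, 9]

print(fraction_within(sample, 1))  # share within 1 standard deviation
print(fraction_within(sample, 2))  # share within 2 standard deviations
```

For this made-up sample the mean is 5 and s is about 2.14, so 6 of the 8 values lie within 1 standard deviation and all 8 lie within 2.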
There are 2 rules for interpreting the standard deviation, Chebyshev’s Rule and the Empirical Rule.

Chebyshev’s Rule: This applies to any set of data, regardless of the shape of the frequency distribution of the data.

a. No useful information is provided on the fraction of measurements that fall within 1 standard deviation of the mean.
b. At least $\frac{3}{4}$ of the measurements will fall within 2 standard deviations of the mean; $(\bar{X} - 2s, \bar{X} + 2s)$ for samples and $(\mu - 2\sigma, \mu + 2\sigma)$ for populations
c. At least $\frac{8}{9}$ of the measurements will fall within 3 standard deviations of the mean; $(\bar{X} - 3s, \bar{X} + 3s)$ for samples and $(\mu - 3\sigma, \mu + 3\sigma)$ for populations
d. Generally, for any number $k$ greater than 1, at least $\left(1 - \frac{1}{k^2}\right)$ of the measurements will fall within $k$ standard deviations of the mean; $(\bar{X} - ks, \bar{X} + ks)$ for samples and $(\mu - k\sigma, \mu + k\sigma)$ for populations

Note: This rule gives the smallest percentages that are mathematically possible. In reality, the true percentages can be much higher than those stated.

Empirical Rule: This applies only to data sets with normal distributions, that is, the frequency distributions are symmetric (i.e., mean equals median), unimodal, and bell-shaped, or mound-shaped.

a. Approximately 68% of the measurements fall within 1 standard deviation of the mean; $(\bar{X} - s, \bar{X} + s)$ for samples and $(\mu - \sigma, \mu + \sigma)$ for populations
b. Approximately 95% of the measurements fall within 2 standard deviations of the mean; $(\bar{X} - 2s, \bar{X} + 2s)$ for samples and $(\mu - 2\sigma, \mu + 2\sigma)$ for populations
c. Approximately 99.7% (essentially all) of the measurements fall within 3 standard deviations of the mean; $(\bar{X} - 3s, \bar{X} + 3s)$ for samples and $(\mu - 3\sigma, \mu + 3\sigma)$ for populations

Note: These percentages are only approximations. The real percentages could be higher or lower depending on the data set.

We can use Chebyshev’s Rule and the Empirical Rule as a “check” when calculating the standard deviation, since sometimes we forget to take the square root of the variance.
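To see how the two rules compare, the Chebyshev bound $1 - \frac{1}{k^2}$ can be evaluated next to the Empirical Rule percentages. The sketch below is a minimal illustration; the names `chebyshev_lower_bound` and `EMPIRICAL_RULE` are invented for this example.

```python
def chebyshev_lower_bound(k):
    """Smallest fraction of measurements guaranteed within k standard deviations."""
    if k <= 1:
        # Chebyshev's Rule says nothing useful for k = 1 or less.
        raise ValueError("k must be greater than 1")
    return 1 - 1 / k**2

# Approximate Empirical Rule fractions for mound-shaped data, keyed by k.
EMPIRICAL_RULE = {1: 0.68, 2: 0.95, 3: 0.997}

for k in (2, 3):
    print(f"k = {k}: Chebyshev at least {chebyshev_lower_bound(k):.3f}, "
          f"Empirical about {EMPIRICAL_RULE[k]:.3f}")
```

The Chebyshev values are guaranteed minimums for any data set, so they are always lower than the Empirical Rule values, which describe only mound-shaped data.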
From the two rules, we know that most of the data is within 2 standard deviations of the mean, and almost all of the data is within 3 standard deviations of the mean. Therefore, we expect the range of the measurements to be between 4 and 6 standard deviations in length. Sometimes we can use s ≈ range/4 to obtain a crude, and usually conservatively large, approximation for s. (This is no substitute for actually calculating s.) Larger data sets typically contain extreme values, in which case the range of the data may exceed 6 standard deviations.
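As a rough illustration of the range approximation, the sketch below applies it to the SAT data from this section. Only the smallest and largest scores matter for the range, so it uses just those two values from the table (966 and 1197) along with the stated s = 66. The function names are made up for this example.

```python
def range_rule_estimate(data):
    """Crude estimate of s: the range divided by 4."""
    return (max(data) - min(data)) / 4

def range_in_sd_units(data, s):
    """How many standard deviations long the range is."""
    return (max(data) - min(data)) / s

# Smallest and largest SAT scores in the table (S. Carolina and N. Dakota).
extremes = [966, 1197]

print(range_rule_estimate(extremes))    # 57.75, compared with the computed s = 66
print(range_in_sd_units(extremes, 66))  # 3.5 standard deviations
```

Here the range is 231, so the crude estimate is in the same ballpark as the computed value of 66 but is not a replacement for it. The second function can also serve as a quick check: if the variance (about 66² = 4356) were reported by mistake in place of s, the range would come out to only about 0.05 standard deviations, which signals an error.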