elps5266-sup-0001-Suppmat

advertisement

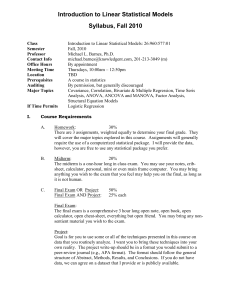

Supplementary Material

Methods

Logistic Regression

Logistic regression (LogR) fits a model by the maximum likelihood method. It chooses the regression coefficients that maximize the probability of the observed data, so that cases are assigned to the appropriate category. The goal is to correctly predict the outcome category of individual cases using the most parsimonious model. To this end, the model includes all predictor variables that are useful in predicting the response variable. Logistic regression estimates the coefficients (with their standard errors and significance levels) of a formula that predicts the logit transformation of the probability of presence of the characteristic of interest:

$$\operatorname{logit}(p) = b_0 + b_1 X_1 + b_2 X_2 + \dots + b_k X_k$$

where $p$ is the probability of presence of the characteristic of interest. The logit transformation is defined as the logged odds:

$$\text{odds} = \frac{p}{1-p} = \frac{\text{probability of presence of characteristic}}{\text{probability of absence of characteristic}}, \qquad \operatorname{logit}(p) = \ln\left[\frac{p}{1-p}\right]$$

Ordinary least-squares regression chooses the parameters that minimize the sum of squared errors. Logistic regression instead chooses the parameters that maximize the likelihood of observing the sample values.

Model Validation

In logistic regression, two hypotheses are of interest. Under the null hypothesis, all coefficients in the regression equation except the constant equal zero. Under the alternative hypothesis, the model with the predictors under consideration fits the data significantly better than the null model. The likelihood ratio test is based on the −2 log likelihood (−2LL). It compares −2LL for the full model with predictors against −2LL for the null model, which contains only the constant. The difference (the model chi-square) measures the improvement in fit that the explanatory variables provide over the null model. It is compared with a chi-square distribution whose degrees of freedom equal the number of predictors.
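
The maximum likelihood estimation and the likelihood ratio test described above can be sketched as follows. This Python sketch fits a one-predictor logistic regression by Newton-Raphson iteration and then computes the model chi-square. The data `x` and `y` are hypothetical toy values, not data from this study. Newton-Raphson is one common way to maximize the likelihood, and the software used for the study may use a different optimizer. The helper names (`fit_logistic_1d`, `log_likelihood`, and so on) are illustrative only.

```python
import math


def inverse_logit(z: float) -> float:
    """Probability p from the linear predictor z = b0 + b1*X1 + ... + bk*Xk."""
    return 1.0 / (1.0 + math.exp(-z))


def logit(p: float) -> float:
    """Log odds ln[p / (1 - p)], defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))


def log_likelihood(y, p):
    """Bernoulli log-likelihood of 0/1 outcomes y given fitted probabilities p."""
    return sum(yi * math.log(pi) + (1 - yi) * math.log(1.0 - pi) for yi, pi in zip(y, p))


def fit_logistic_1d(x, y, max_iter=50, tol=1e-10):
    """Maximize the likelihood for logit(p) = b0 + b1*x by Newton-Raphson.

    Assumes the data are not perfectly separable; otherwise b1 diverges.
    """
    b0 = b1 = 0.0
    for _ in range(max_iter):
        p = [inverse_logit(b0 + b1 * xi) for xi in x]
        w = [pi * (1.0 - pi) for pi in p]
        # Gradient (score) of the log-likelihood.
        g0 = sum(yi - pi for yi, pi in zip(y, p))
        g1 = sum(xi * (yi - pi) for xi, yi, pi in zip(x, y, p))
        # Information matrix X^T W X (2 x 2, symmetric).
        h00 = sum(w)
        h01 = sum(wi * xi for wi, xi in zip(w, x))
        h11 = sum(wi * xi * xi for wi, xi in zip(w, x))
        det = h00 * h11 - h01 * h01
        # Newton step: solve (X^T W X) d = gradient.
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + d0, b1 + d1
        if max(abs(d0), abs(d1)) < tol:
            break
    return b0, b1


# Hypothetical toy data (not from this study).
x = [1, 2, 3, 4, 5, 6]
y = [0, 0, 1, 0, 1, 1]

b0, b1 = fit_logistic_1d(x, y)
p_full = [inverse_logit(b0 + b1 * xi) for xi in x]

# Null model: constant only. Its ML fit predicts the overall event rate.
y_bar = sum(y) / len(y)
ll_null = log_likelihood(y, [y_bar] * len(y))
ll_full = log_likelihood(y, p_full)

# Likelihood ratio (model chi-square): (-2LL null) - (-2LL full), here on 1 df.
model_chi2 = (-2.0 * ll_null) - (-2.0 * ll_full)
print(f"b0={b0:.3f}, b1={b1:.3f}, model chi-square={model_chi2:.3f}")
```

At the maximum likelihood solution the score equations hold: the residuals `y - p` sum to zero, and so do the residuals weighted by `x`. This is a convenient check that the iteration has converged.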

The Wald statistic, analogous to the t-test in linear regression, is an alternative way of assessing the contribution of each predictor to the model. It is given by

$$\text{Wald} = \left[\frac{B}{\text{S.E.}}\right]^2$$

where $B$ is the regression coefficient and S.E. is its standard error. Each Wald statistic is compared with a chi-square distribution with one degree of freedom.

The Nagelkerke R-square is defined as $R^2 / R^2_{\max}$, where

$$R^2 = 1 - \left(\frac{L(0)}{L(\hat{\theta})}\right)^{2/n}, \qquad R^2_{\max} = 1 - L(0)^{2/n}$$

Here $L(0)$ is the likelihood of obtaining the observations if the independent variables had no effect on the outcome, $L(\hat{\theta})$ is the likelihood of the model with the given set of parameter estimates, and $n$ is the sample size. $R^2$ is the Cox and Snell R-square, whose maximum attainable value is below 1. Dividing by $R^2_{\max}$ rescales it to range from 0 to 1.

The Hosmer-Lemeshow test [1] is a goodness-of-fit test for the logistic regression model. The data are divided into approximately ten groups of increasing estimated risk. The observed and expected numbers of cases in each group are calculated, and a chi-squared statistic is computed as

$$H = \sum_{g=1}^{G} \frac{(O_g - E_g)^2}{E_g\left(1 - E_g/n_g\right)}$$

where $O_g$, $E_g$ and $n_g$ are the observed events, expected events and number of observations in the $g$th risk decile group, and $G$ is the number of groups. The test statistic follows a chi-squared distribution with $G-2$ degrees of freedom. A large chi-squared value (small p-value, < 0.05) indicates poor fit. A small chi-squared value (p-value closer to 1) indicates a good fit of the logistic regression model.

The receiver operating characteristic (ROC) curve is useful for evaluating the predictive accuracy of a chosen logistic regression model [2]. The curve is obtained by plotting sensitivity (the true positive rate) against 1 − specificity (the false positive rate) over all classification thresholds. A perfect classifier, with 100% true positives and 0% false positives, has an area under the curve (AUC) equal to 1. A classifier no better than chance has an AUC of 0.5.
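
The validation statistics above can be recomputed from the values reported in Supplementary Tables 1 and 4. The following is a minimal Python sketch. The Wald test for miR185-5p uses B and S.E. from Supplementary Table 1. For the Nagelkerke R-square, the sketch assumes a training set of n = 103 cases, 8 of them menstrual blood, as the counts in Supplementary Table 4 suggest. The Hosmer-Lemeshow p-value assumes the usual 10 groups (8 degrees of freedom). The chi-square tail probabilities use closed forms valid only for 1 df (`math.erfc`) and for even df (a finite Poisson sum). The AUC function is the Mann-Whitney pairwise formulation, applied here to hypothetical scores. All function names are illustrative.

```python
import math


def wald(b: float, se: float) -> float:
    """Wald statistic [B / S.E.]^2."""
    return (b / se) ** 2


def chi2_sf_1df(x: float) -> float:
    """Upper-tail chi-square probability with 1 df: P(Z^2 > x) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(x / 2.0))


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail chi-square probability for an even df (closed-form Poisson sum)."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i
        total += term
    return math.exp(-half) * total


def nagelkerke_r2(ll_null: float, ll_full: float, n: int) -> float:
    """Nagelkerke R-square from the log-likelihoods ln L(0) and ln L(theta_hat)."""
    cox_snell = 1.0 - math.exp(2.0 * (ll_null - ll_full) / n)
    r2_max = 1.0 - math.exp(2.0 * ll_null / n)
    return cox_snell / r2_max


def hosmer_lemeshow(observed, expected, sizes) -> float:
    """H = sum over groups of (O_g - E_g)^2 / [E_g (1 - E_g / n_g)]."""
    return sum((o - e) ** 2 / (e * (1.0 - e / m)) for o, e, m in zip(observed, expected, sizes))


def auc_mann_whitney(pos_scores, neg_scores) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 1/2."""
    wins = 0.0
    for sp in pos_scores:
        for sn in neg_scores:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Wald test for miR185-5p (B and S.E. from Supplementary Table 1).
w = wald(3.718, 1.540)      # about 5.83 (reported: 5.828)
p_w = chi2_sf_1df(w)        # about 0.016 (reported Sig.: 0.016)

# Assumption: training set of n = 103 cases, 8 of them menstrual blood,
# inferred from the counts in Supplementary Table 4.
n, n_pos = 103, 8
ll_null = n_pos * math.log(n_pos / n) + (n - n_pos) * math.log(1.0 - n_pos / n)
ll_full = ll_null + 46.429 / 2.0            # model chi-square = 2 (ln L_full - ln L_null)
r2_n = nagelkerke_r2(ll_null, ll_full, n)   # about 0.862 (reported: 86.2%)
p_model = chi2_sf_even_df(46.429, 2)        # 2 predictor terms in Supplementary Table 1

# Hosmer-Lemeshow p-value, assuming 10 groups, so 10 - 2 = 8 df.
p_hl = chi2_sf_even_df(3.492, 8)            # about 0.900 (reported: 0.900)

# Toy AUC example with hypothetical scores (not study data).
auc = auc_mann_whitney([0.9, 0.8, 0.4], [0.1, 0.3, 0.5])   # 8/9
```

The sketch recovers the reported 86.2% and p = 0.900 from the stated assumptions. This suggests, but does not establish, that the assumed sample size and group count match those used for the tables.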

Model Classification

Taking the exponential of both sides of the regression equation gives

$$\text{odds} = \frac{p}{1-p} = e^{b_0} \times e^{b_1 X_1} \times e^{b_2 X_2} \times e^{b_3 X_3} \times \dots \times e^{b_k X_k}$$

Once the logit has been estimated, the probability that the outcome is skin or menstrual blood is given by

$$P = \frac{\exp(\ln O)}{1 + \exp(\ln O)} = \frac{O}{1 + O}$$

where $O = p/(1-p)$ is the odds.

Outliers and Influential Cases

The residual is the difference between the observed response $y$ (0 or 1) and the predicted probability. The standardized residual $Z$ divides the residual by the standard deviation of the predicted outcome:

$$\text{Residual} = y - \text{Predicted Probability}$$

$$Z = \frac{\text{Residual}}{\sqrt{\text{Predicted Probability}\,(1 - \text{Predicted Probability})}}$$

The Cook distance is defined as

$$\text{Cook } D = \frac{Z^2 h}{(1-h)^2}$$

where $Z$ is the standardized residual and $h$ is the leverage value. The Cook distance measures the influence of a case. It reflects how much the residuals of all cases would change if that case were deleted from the model fit.

References

1. Hosmer, D. W., Lemeshow, S., Applied Logistic Regression, John Wiley & Sons, Inc., Hoboken, NJ 2000.
2. Agresti, A., Categorical Data Analysis, John Wiley & Sons, Inc., Hoboken, NJ 2002.

Supplementary Table 1. Logistic Regression Model – Menstrual Blood

| Menstrual Blood | B | S.E. | Wald | df | Sig. |
|---|---|---|---|---|---|
| miR185-5p by miR144-5p by miR144-3p | -0.005 | 0.002 | 5.600 | 1 | 0.018 |
| miR185-5p | 3.718 | 1.540 | 5.828 | 1 | 0.016 |
| Constant | -32.017 | 12.907 | 6.153 | 1 | 0.013 |

B = regression coefficient; S.E. = standard error of the regression coefficient; df = degrees of freedom; Sig. = significance. "by" denotes an interaction (product) term.

Supplementary Table 2. Area under the ROC (Receiver Operating Characteristic) Curve for the MB Assay

| Test variable | Area | Std. Error | Asymptotic Sig. | Asymptotic 95% CI Lower Bound | Asymptotic 95% CI Upper Bound |
|---|---|---|---|---|---|
| Menstrual Blood | 0.994 | 0.006 | 0.000 | 0.982 | 1.000 |

Supplementary Table 3. Case List – Menstrual Blood Model

| Case | Selected Status | Observed y | Predicted | Predicted Group | Resid | ZResid |
|---|---|---|---|---|---|---|
| 100 | S | 1** | 0.052 | 0 | 0.948 | 4.285 |

Resid and ZResid are temporary variables (residual and standardized residual). S = selected case; ** = misclassified case.

Supplementary Table 4. Summary Statistics for the Menstrual Blood Model

Model validation

| Statistic | Value |
|---|---|
| Model chi-square | 46.429 (p < 0.001) |
| Nagelkerke R-square | 86.2% |
| Hosmer-Lemeshow test | 3.492 (p = 0.900) |

Area under receiver operating characteristic curve

| Statistic | Value |
|---|---|
| Estimated area under the curve | 99.4% |
| 95 percent confidence interval | 98.2%-100% |

Classification

| Statistic | Training set | Test set |
|---|---|---|
| Optimal threshold | 0.5 | |
| True positive rate | 87.5% (7/8) | 100% |
| False positive rate | 0% | 0% |
| False negative rate | 12.5% (1/8) | 0% |
| Correct classification rate | 99% (102/103) | |

Supplementary Figure Legends

Supplementary Figure 1. A) Receiver operating characteristic curve for the menstrual blood LogR model. B) Histogram of estimated probabilities. The symbol of each case represents the group to which the case actually belongs: green symbols represent menstrual blood cases and red symbols represent non-menstrual blood cases. X-axis: LogR P value (estimated probability). Y-axis: number of cases (samples).
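
The classification and influence formulas in the Methods can be cross-checked against the case listed in Supplementary Table 3 and the counts in Supplementary Table 4. The following is a minimal Python sketch with illustrative helper names. The leverage `h` is not reported anywhere in the tables, so the Cook distance below uses a hypothetical value. The rule that a probability exactly equal to the threshold goes to group 1 is also an assumption.

```python
import math


def probability_from_odds(odds: float) -> float:
    """P = exp(ln O) / (1 + exp(ln O)) = O / (1 + O)."""
    return odds / (1.0 + odds)


def standardized_residual(y: int, p: float) -> float:
    """Z = (y - P) / sqrt(P (1 - P)) for a 0/1 outcome y and predicted probability P."""
    return (y - p) / math.sqrt(p * (1.0 - p))


def cook_distance(z: float, h: float) -> float:
    """Cook D = Z^2 h / (1 - h)^2 from the standardized residual Z and leverage h."""
    return z * z * h / (1.0 - h) ** 2


def classify(p: float, threshold: float = 0.5) -> int:
    """Predicted group; assigning p == threshold to group 1 is an assumption."""
    return 1 if p >= threshold else 0


# Odds -> probability round trip for the predicted probability of case 100.
p_from_odds = probability_from_odds(0.052 / (1 - 0.052))   # recovers 0.052

# Case 100 in Supplementary Table 3: observed y = 1, predicted P = 0.052.
resid = 1 - 0.052                      # 0.948, as reported
z = standardized_residual(1, 0.052)    # about 4.27
group = classify(0.052)                # 0, so the case is misclassified

# For y = 1, Z^2 = (1 - P) / P, so the reported ZResid implies P = 1 / (1 + Z^2).
p_implied = 1.0 / (1.0 + 4.285 ** 2)   # about 0.0517, which rounds to 0.052

# Hypothetical leverage: h is not reported in the tables.
d = cook_distance(z, h=0.05)

# Training-set rates from the counts in Supplementary Table 4.
tpr = 7 / 8          # true positive rate, 0.875
fnr = 1 / 8          # false negative rate, 0.125
ccr = 102 / 103      # correct classification rate, about 0.990
```

The small gap between the computed Z (about 4.27) and the reported ZResid (4.285) is consistent with the predicted probability being rounded to three decimals in the table.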