DANIEL HABTEGEBRIEL YOHANNES (ASSIGNMENT)

DANIEL HABTEGEBRIEL YOHANNES

- CS3

Introduction

Embedded systems are a ubiquitous component of our everyday lives. We interact with hundreds of tiny computers every day that are embedded into our houses, our cars, our toys, and our work. As our world has become more complex, so have the capabilities of the microcontrollers embedded into our devices. The ARM® Cortex™-M family represents a new class of microcontrollers much more powerful than the devices available ten years ago. The purpose of this class is to present a design methodology that trains young engineers to understand the basic building blocks of devices such as a cell phone, an MP3 player, a pacemaker, antilock brakes, and an engine controller.

An embedded system is a system that performs a specific task and has a computer embedded inside. A system is composed of components and interfaces connected together for a common purpose. This class is an introduction to embedded systems. Specific topics include microcontrollers, fixed-point numbers, the design of software in C, elementary data structures, programming input/output including interrupts, analog-to-digital conversion, and digital-to-analog conversion.

In general, the area of embedded systems is an important and growing discipline within electrical and computer engineering. In the past, the educational market of embedded systems has been dominated by simple microcontrollers like the PIC, the 9S12, and the 8051. This is because of their market share, low cost, and historical dominance. However, as problems become more complex, so must the systems that solve them. A number of embedded system paradigms must shift in order to accommodate this growth in complexity. First, the number of calculations per second will increase from millions/sec to billions/sec. Second, the number of lines of software code will increase from thousands to millions. Third, systems will involve multiple microcontrollers supporting many simultaneous operations. Lastly, the need for system verification will continue to grow as these systems are deployed into safety-critical applications. These changes are more than a simple growth in size and bandwidth. These systems must employ parallel programming, high-speed synchronization, real-time operating systems, fault-tolerant design, priority interrupt handling, and networking. Consequently, it will be important to provide our students with these types of design experiences. The ARM platform is low cost and provides the high-performance features required in future embedded systems. In addition, the ARM market share is large and will continue to grow. As of July 2013, ARM reports that over 35 billion ARM processors have been shipped from over 950 companies. Furthermore, students trained on the ARM will be equipped to design systems across the complete spectrum from simple to complex. The purpose of this course is to bring engineering education into the 21st century.

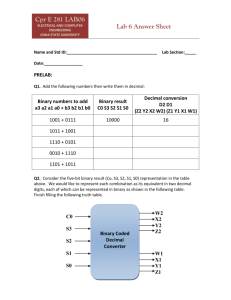

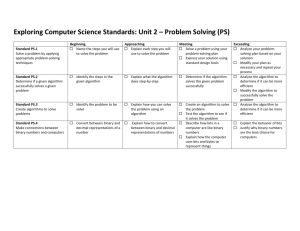

2.1. Binary number systems

To solve problems using a computer we need to understand numbers and what they mean. Each digit in a decimal number has a place and a value. The place is a power of 10 and the value is selected from the set {0, 1, 2, 3, 4, 5, 6, 7, 8, 9}. A decimal number is simply a combination of its digits multiplied by powers of 10. For example,

$1984 = 1 \cdot 10^{3} + 9 \cdot 10^{2} + 8 \cdot 10^{1} + 4 \cdot 10^{0}$

Fractional values can be represented by using the negative powers of 10. For example,

$273.15 = 2 \cdot 10^{2} + 7 \cdot 10^{1} + 3 \cdot 10^{0} + 1 \cdot 10^{-1} + 5 \cdot 10^{-2}$

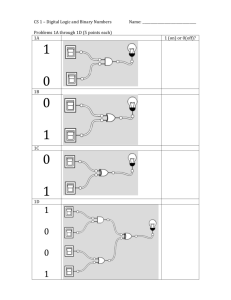

In a similar manner, each digit in a binary number has a place and a value. In binary numbers, the place is a power of 2, and the value is selected from the set {0, 1}. A binary number is simply a combination of its digits multiplied by powers of 2. To eliminate confusion between decimal numbers and binary numbers, we will put a subscript 2 after the number to mean binary. Because of the way the microcontroller operates, most of the binary numbers in this class will have 8, 16, or 32 bits. An 8-bit number is called a byte, and a 16-bit number is called a halfword. For example, the 8-bit binary number for 106 is

$01101010_{2} = 0 \cdot 2^{7} + 1 \cdot 2^{6} + 1 \cdot 2^{5} + 0 \cdot 2^{4} + 1 \cdot 2^{3} + 0 \cdot 2^{2} + 1 \cdot 2^{1} + 0 \cdot 2^{0} = 64 + 32 + 8 + 2 = 106$

Technically, you cannot convert a binary-represented number into a decimal one inside the computer, because computers do not have any storage facility to represent decimal numbers.

In practice this might sound absurd, since we are always dealing with numbers in decimal representation. But these decimal representations are never actually stored in decimal. The only thing a computer does is convert a number into decimal representation when displaying it. This conversion is a matter of program construction and library design.

I'll give a small example in the C language. In C you have signed and unsigned integer variables. When you write a program, these variables are used to store numbers in memory. Who knows about their signs? The compiler. Assembly languages have signed and unsigned operations. The compiler keeps track of the sign of every variable and generates the appropriate code for the signed or unsigned case. So your program works correctly with signed or unsigned integers once it is compiled.

Assume you use a printf statement to print an integer variable with the %d format specifier, which prints the value in decimal representation. This conversion is handled by the printf function defined in the C standard input/output library. The function reads the variable from memory and converts the binary representation to decimal representation using a simple base conversion algorithm. But the target of the algorithm is a char sequence, not an integer. So the algorithm does two things: it converts the binary representation to decimal, and it converts the resulting digits to char values (ASCII codes, to be more precise). printf must know the sign of the number to carry out the conversion correctly. In C this information comes from the format specifier that the programmer writes, not from the stored bits: %d tells printf to treat the argument as signed and %u as unsigned, and many compilers can warn at compile time when the specifier does not match the argument's type.

Other programming languages follow similar paths. In essence, numbers are always stored in binary. Whether a value is signed or unsigned is known to the compiler or interpreter. The decimal conversion is carried out only for display purposes, and the target of the conversion is a char sequence or a string.