Preliminary simulation In the lexical route of the dual route model

Preliminary simulation

In the lexical route of the dual route model (DRC) of word recognition and reading aloud

(Coltheart et al., 2001), each unit in the orthographic lexicon is connected with the corresponding unit in the phonological lexicon. The weights of these connections are fixed, and the value is the same for all of the connections. A priming function could be implemented by having the strength of these connections change as a function of experience, rather than being fixed. We propose that each time a word is read, the connection between its orthographic and its phonological lexical representations gets strengthened. In this way, the next time that word is presented, it will be read faster, since its phonological representation will be retrieved more quickly.

Take as an example the target word LANA and its neighbour word RANA. Consider firstly the situation where RANA has not been previously presented as a target. When LANA is presented, its neighbour RANA will be activated in the orthographic lexicon, and will exert some inhibition on the target’s entry in the orthographic lexicon. The orthographic entry for the neighbour RANA will activate the entry for RANA in the phonological lexicon and so there will be some inhibition of the target’s entry in the phonological lexicon. Now consider the situation where the neighbour RANA has been previously presented as a target. According to our hypothesis, the connection between the orthographic and phonological lexical entries for RANA will have been strengthened by this prior presentation. So when the orthographic entry for RANA is activated by the presentation of LANA, the consequent activation of the phonological entry for RANA will be greater and so the inhibition exerted on the phonological entry for the target LANA will be greater. Feedback from the phonological lexicon to the orthographic lexicon will contribute activation of the orthographic lexical entry for RANA and the strength of this feedback will be greater if RANA has been previously presented; so there will also be more inhibition of the target entry LANA in the orthographic lexicon if RANA had previously been presented than if it had not. And clearly the larger the number of neighbours of LANA that precede it in the experiment the larger will be the cumulative lexical interference effect exerted upon LANA.

In order to investigate the feasibility of this account, we implemented it with the DRC computational model of reading aloud. We introduced a form of learning into the model by strengthening the link between a word’s entry in the orthographic lexicon and its entry in the phonological lexicon each time the word was read by the model. The equation for updating the strength of this link was:

NewLinkStrength = OldLinkStrength + (1-OldLinkStrength)*UpdateIncrement

1

where UpdateIncrement is a parameter controlling how large the update in link strength that occurs each time a word is read. We used a value of .90 for this parameter. We found that we needed to change the default values of three of the DRC model’s parameters. Firstly, in order for a word to activate its orthographic neighbours in the orthographic lexicon (as the account given above requires) we had to reduce the value of the Letter-to-OrthpgraphicLexiconInhibition parameter from its default value of .48 (which is so high that it prevents any orthographic neighbours from being activated) down to a value of .36. Then, again to allow the activation of orthographic neighbours in the orthographic lexicon, we removed lateral inhibition in the orthographic lexicon by setting to zero the value of the parameter controlling such inhibition (the default parameter value here is .06).

Finally, to cause the competition between entries in the phonological lexicon which we propose is critical for the cumulative inhibition effect, we considerably increased the value of the parameter controlling this inhibition, from its default value of .07 to a value of .65. We then chose a set of

English words in exactly the same way as the Italian words were chosen, and constructed 10 different random orders of presentation for them in which ordinal position of presentation of words from each of the 12 word sets was and lag between successive presentations were orthogonally varied. The 10 lists of stimuli were then run through the DRC model.

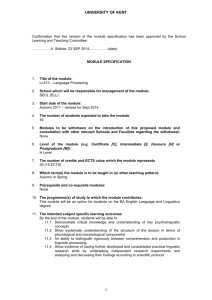

The model made 6.3% reading errors, which were excluded from RT analyses. Figure M1 plots RTs against Ordinal Position Within-Category. We analyzed the data by means of linear mixed effects models. We first built a model (s1) with lists and item identity as random factors.

Then, we defined a second model adding Position Within-List as a fixed factor to s1. Since the improvement in the model fit was not significant, Chi2(3) = .03, p > .95, we dropped Position

Within-List from the analysis. We than built a third model, s3, adding Ordinal Position Within-

Category to s1, and compared the two models. Comparison of s1 and s3 revealed that the improvement in the model fit was significant, Chi2(1) = 1473.5, p < .001. In s3, the fixed effect of

Ordinal Position Within-Category was significant, t(896) = 4.0, p < .001. The effect of Lag was tested adding it as a fixed factor to s3: the increase in the model fit was not significant, Chi2(1) = .4, p > .57, meaning that the number of trials intervening between two members of a given orthographic category is irrelevant to the effect of ordinal position. A further analysis was performed on m3 to test linear, quadratic, and cubic components of the Ordinal Position Withincategory effect. Whereas the linear component was significant, t(896) = 4.0, p < .05, neither the quadratic component, |t| < 1, nor the cubic component, |t| < 1, proved significant. To conclude, the analyses revealed a significant effect of Ordinal Position Within-List, which is linear and unaffected by Lag.

2

Thus the performance of the DRC model – once a parameter controlling the update in link strength that occurs each time a word is read is added – simulates the performance of human readers.

71,5

71,0

70,5

70,0

69,5 y = 0,434x + 68,9

R

2

= 0,95

69,0

1 2 3 4 5

Ordinal Position Within-Category

Fig. M1. Mean DRC model RTs according to the ordinal position of items within orthographic category.

3