Assumptions of Binomial Distribution


Binomial Distribution

After studying random variables and discrete probability distributions, we need to look a little more closely at a special type of discrete distribution, one that is closely related to the example we used earlier about the number of boys when you have 4 kids. What characterizes these distributions is that they can all be seen as repeated coin flips: you can call the two outcomes boys/girls, heads/tails, or whatever you like, but the mathematics is the same.

Binomial Distribution based on a Fair Coin

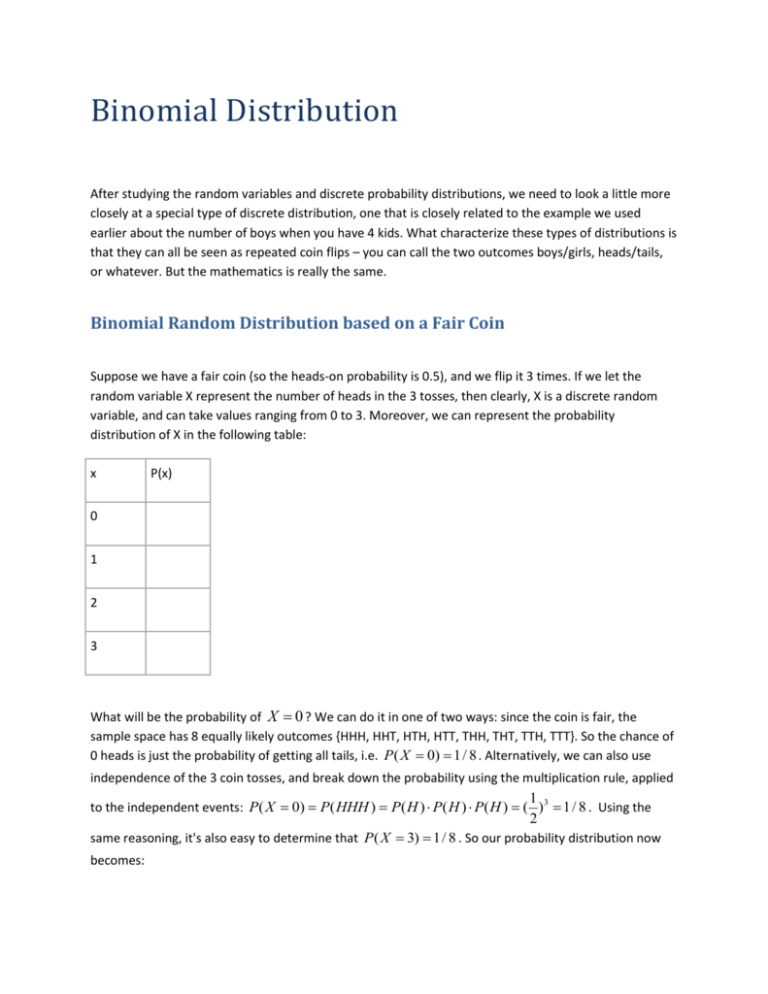

Suppose we have a fair coin (so the heads-on probability is 0.5), and we flip it 3 times. If we let the random variable $X$ represent the number of heads in the 3 tosses, then $X$ is a discrete random variable that can take the values 0, 1, 2, and 3. We can represent the probability distribution of $X$ in the following table:

| x | P(x) |
|---|---|
| 0 | |
| 1 | |
| 2 | |
| 3 | |

What is $P(X = 0)$? We can find it in one of two ways. Since the coin is fair, the sample space has 8 equally likely outcomes {HHH, HHT, HTH, HTT, THH, THT, TTH, TTT}, so the chance of 0 heads is just the probability of getting all tails: $P(X = 0) = 1/8$. Alternatively, we can use the independence of the 3 coin tosses and break down the probability using the multiplication rule for independent events:

$$P(X = 0) = P(TTT) = P(T) \cdot P(T) \cdot P(T) = \left(\tfrac{1}{2}\right)^3 = \tfrac{1}{8}$$

Using the same reasoning, it's also easy to determine that $P(X = 3) = 1/8$. So our probability distribution now becomes:

| x | P(x) |
|---|---|
| 0 | 1/8 |
| 1 | |
| 2 | |
| 3 | 1/8 |

The probability $P(X = 1)$ is slightly more complicated. Using the classical probability approach, the event "there is 1 head in 3 tosses" includes 3 separate outcomes: {HTT, THT, TTH}. So the probability of getting 1 head is $P(X = 1) = 3/8$.

But there is another way, using the addition rule and the multiplication rule together, that you should know. Since "1 head" is a union of the three outcomes HTT, THT, and TTH, and these outcomes are mutually exclusive, we may use the addition rule for mutually exclusive events, followed by the multiplication rule for each outcome:

$$\begin{aligned} P(X = 1) &= P(\text{HTT or THT or TTH}) \\ &= P(HTT) + P(THT) + P(TTH) \\ &= \tfrac{1}{2}\cdot\tfrac{1}{2}\cdot\tfrac{1}{2} + \tfrac{1}{2}\cdot\tfrac{1}{2}\cdot\tfrac{1}{2} + \tfrac{1}{2}\cdot\tfrac{1}{2}\cdot\tfrac{1}{2} \end{aligned}$$

You probably noticed that the three probabilities being added are all the same, so we can shorten this to:

$$P(X = 1) = 3\left(\tfrac{1}{2}\right)^3 = \tfrac{3}{8}$$

So the factor of 3 comes from the addition rule, and the $(1/2)^3$ comes from the multiplication rule. Using the same type of analysis, we can also determine that $P(X = 2) = 3/8$.

Hence the completed probability distribution is the following:

| x | P(x) |
|---|---|
| 0 | 1/8 |
| 1 | 3/8 |
| 2 | 3/8 |
| 3 | 1/8 |
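As a quick check on the classical approach, the short Python sketch below lists all 8 equally likely outcomes of three fair tosses and counts the heads in each. Python and the names `outcomes` and `dist` are choices made only for this illustration; exact fractions are used so the results can be compared directly with the table.

```python
from fractions import Fraction
from itertools import product

# All 2**3 = 8 equally likely outcomes of three fair coin tosses, e.g. "HTT"
outcomes = ["".join(seq) for seq in product("HT", repeat=3)]

# Classical approach: P(X = x) = (number of outcomes with x heads) / 8
dist = {
    x: Fraction(sum(1 for o in outcomes if o.count("H") == x), len(outcomes))
    for x in range(4)
}

for x, prob in dist.items():
    print(x, prob)
```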

This binomial distribution also allows us to answer other questions related to the experiment, and it's useful to look at some of the common phrases used to describe these events:

The probability of the coin landing heads more than once is denoted by $P(X > 1)$.

The probability of the coin landing heads fewer than three times is denoted by $P(X < 3)$.

The probability of the coin landing heads at least twice is denoted by $P(X \ge 2)$. The "at least" phrasing means we're looking for the probability of the coin landing heads two or more times.

The probability of the coin landing heads at most twice is denoted by $P(X \le 2)$. The "at most" phrasing means we're looking for the probability of the coin landing heads two times or fewer.

The probability of the coin landing heads between one and three times, inclusive, is denoted by $P(1 \le X \le 3)$.
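Each of these phrases translates directly into a sum over rows of the distribution table. A minimal Python sketch, assuming the fair-coin distribution from the table above; the helper `prob` is a hypothetical name introduced here, not part of any library:

```python
from fractions import Fraction

# Distribution of X = number of heads in 3 fair tosses (from the table)
dist = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def prob(event):
    """Add P(x) over every value x for which event(x) is True."""
    return sum((px for x, px in dist.items() if event(x)), Fraction(0))

more_than_once = prob(lambda x: x > 1)          # P(X > 1)
fewer_than_three = prob(lambda x: x < 3)        # P(X < 3)
at_least_twice = prob(lambda x: x >= 2)         # P(X >= 2)
at_most_twice = prob(lambda x: x <= 2)          # P(X <= 2)
one_to_three = prob(lambda x: 1 <= x <= 3)      # P(1 <= X <= 3)

print(more_than_once, fewer_than_three, at_least_twice, at_most_twice, one_to_three)
```

Note that "at least twice" and "more than once" describe the same event here, because $X$ can only be a whole number.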

Assumptions of Binomial Distribution

What you just saw was a binomial distribution, which is the generalized version of a fixed number of coin flips. Here are the assumptions of the binomial distribution that were listed in the lecture:

1. There is a fixed number of trials (represented by the variable $n$).

2. Each trial has 2 outcomes (called "success" and "failure" for convenience).

3. The trials are all independent of one another.

4. The probability of success is the same for all trials. (This probability is represented by $p$, and $q = 1 - p$ represents the probability of failure, the opposite of success.)

For the experiment above, the number of trials is $n = 3$, and the probability of success is $p = 0.5$. Using these two parameters, we can determine the entire probability distribution. Although the calculations using the addition and multiplication rules may seem complicated, it's quite important to understand them, since, as we see next, they still work when the heads-on probability $p$ is no longer equal to 0.5, whereas simply counting equally likely outcomes does not.

Binomial Distribution based on an Unfair Coin

To see the flexibility of the binomial distribution, let's imagine that someone glued some chewing gum on one side of the coin (on a side note, one of my previous Math 15 students did this as part of his term project, so we know this can be done). As a result, the coin is no longer fair. The same can be said about the chance of having boys: in reality, it's never exactly 50%. Around the world, the birth rate for boys is slightly higher than that for girls. How do we calculate the binomial probabilities if the heads-on probability is not 50%?

Suppose the chewing gum makes heads occur more often (60% of the time) than tails (40% of the time). How would this affect the probability distribution of this "bent" coin if it's still tossed 3 times?

Since the coin is no longer the same, we will give this random variable a different name, $Y$. The distribution of $Y$ includes the same range of values as $X$, since the number of trials $n$ did not change. The only change is that $p$ went from 0.5 to 0.6, and $q$ went from 0.5 to 0.4 (though we really only need to know one of them, since $q = 1 - p$).

| y | P(y) |
|---|---|
| 0 | |
| 1 | |
| 2 | |
| 3 | |

Instead of $P(X = 0) = 1/8$, which is what you would expect from a fair coin, we can expect $P(Y = 0)$ to be less than 1/8, since the chewing gum has made tails less likely to turn up on each toss. Here we can no longer use the classical probability approach of "number of ways the event occurs / size of sample space", since the outcomes are clearly not equally likely. For example, HHH is now much more likely than TTT.

So instead of counting the outcomes in the event and dividing by 8, we have to use the multiplication rule, since the 3 tosses are still independent of each other. To find $P(Y = 0)$, we break it down as follows:

$$P(Y = 0) = P(T) \cdot P(T) \cdot P(T) = 0.4 \times 0.4 \times 0.4 = 0.064$$

This is quite a bit less than $1/8 = 0.125$, as expected, but we can also write the probability in a slightly different format that leads to a general formula:

$$P(Y = 0) = 1 \times 0.6^0 \times 0.4^3$$

It might seem strange to include $0.6^0$ here, since it's just another way to write 1. But if you think about it a little differently, $0.6^0$ also means there are zero heads, so the power on the head/tail probability corresponds to the number of heads/tails in the $n$ tosses.

Let's move on to $P(Y = 1)$. Using the same analysis we did above for $P(X = 1)$, we can use the addition rule and multiplication rule together as follows:

$$\begin{aligned} P(Y = 1) &= P(\text{HTT or THT or TTH}) \\ &= P(HTT) + P(THT) + P(TTH) \\ &= 0.6 \times 0.4 \times 0.4 + 0.4 \times 0.6 \times 0.4 + 0.4 \times 0.4 \times 0.6 \\ &= 3 \times 0.6^1 \times 0.4^2 = 0.288 \end{aligned}$$

There are 3 different orders in which 1 head and 2 tails can occur in 3 tosses, and each of these outcomes has the same probability, which is why the factor of 3 appears. Using the same strategy, we can find the probability of 2 heads:

$$P(Y = 2) = 3 \times 0.6^2 \times 0.4^1 = 0.432$$

and of 3 heads:

$$P(Y = 3) = 1 \times 0.6^3 \times 0.4^0 = 0.216$$

To make sure, we can verify that this is indeed a probability distribution by adding up the probabilities: $0.064 + 0.288 + 0.432 + 0.216 = 1$.

| y | P(y) |
|---|---|
| 0 | 0.064 |
| 1 | 0.288 |
| 2 | 0.432 |
| 3 | 0.216 |
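The same addition-and-multiplication reasoning can be automated. The Python sketch below (assuming $p = 0.6$ and $q = 0.4$ as in the gummed-coin example) walks through all 8 outcomes, multiplies the per-toss probabilities for each outcome, and adds the results by number of heads:

```python
from itertools import product

p, q = 0.6, 0.4  # P(heads), P(tails) for the gummed coin

dist = {y: 0.0 for y in range(4)}
for seq in product("HT", repeat=3):
    # Multiplication rule: the tosses are independent, so multiply per-toss probabilities
    weight = 1.0
    for toss in seq:
        weight *= p if toss == "H" else q
    # Addition rule: outcomes are mutually exclusive, so add them up by number of heads
    dist[seq.count("H")] += weight

for y, prob in dist.items():
    print(y, round(prob, 3))
print("total:", round(sum(dist.values()), 3))
```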

General Formula for Binomial Probability

To summarize, to find the probability of any number of heads, we use the following general principle:

$$P(x \text{ heads}) = (\text{number of ways } x \text{ heads appear in } n \text{ tosses}) \times p^x q^{n-x}$$
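The general principle can be written as a small function. This sketch assumes Python 3.8 or later, where `math.comb(n, x)` returns the number of ways to choose which $x$ of the $n$ tosses are heads; the function name `binom_pmf` is only an illustrative choice:

```python
from math import comb

def binom_pmf(x: int, n: int, p: float) -> float:
    """P(x heads) = (number of ways x heads appear in n tosses) * p**x * q**(n - x)."""
    q = 1 - p
    return comb(n, x) * p**x * q**(n - x)

# Reproduce the bent-coin table: n = 3 tosses, p = 0.6
for x in range(4):
    print(x, round(binom_pmf(x, 3, 0.6), 3))
```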

What if we toss the same bent coin more than 3 times? Things get a little more complicated from here. For example, if we toss the coin 4 times and want the probability of exactly 2 heads, there are actually 6 different orders: HHTT, HTTH, HTHT, TTHH, THTH, THHT. The kind of math you need to work out the number of ways $x$ heads come up in $n$ tosses is related to Pascal's triangle and to combinatorics, a branch of mathematics. For those of you who are interested, you can learn more about combinatorics in Math 4 (Discrete Mathematics), taught at SRJC.
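To see where the count of 6 comes from, the Python sketch below lists every 4-toss sequence with exactly 2 heads and compares the count with `math.comb(4, 2)` (Python 3.8+ assumed). It also prints row 4 of Pascal's triangle, whose middle entry is that same 6, and then applies the general principle to the bent coin, assuming $p = 0.6$ from the earlier example:

```python
from itertools import product
from math import comb

n, x = 4, 2  # 4 tosses, exactly 2 heads

# Every sequence of 4 tosses that contains exactly 2 heads
orders = ["".join(seq) for seq in product("HT", repeat=n) if seq.count("H") == x]
print(orders, len(orders))

# Row n of Pascal's triangle holds comb(n, 0), ..., comb(n, n); entry x matches the count
pascal_row = [comb(n, k) for k in range(n + 1)]
print(pascal_row, comb(n, x))

# General principle for the bent coin (p = 0.6, q = 0.4): ways * p^x * q^(n - x)
p_two_heads = len(orders) * 0.6**x * 0.4**(n - x)
print(round(p_two_heads, 4))
```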

If you don't want to look under the hood of the binomial formula, a convenient alternative is to use GeoGebra or your graphing calculator (included in the textbook).

Binomial Calculator in GeoGebra

Open the probability calculator window by selecting it from the View menu. A dialog box should appear, though it may appear behind the main window.

1. Ensure that Binomial mode is selected from the pull-down menu.

2. Toggle between $P(X \le k)$, $P(k_1 \le X \le k_2)$, and $P(X \ge k)$ by selecting the -], [-], and [- buttons, respectively.

3. From here, enter the relevant values into the corresponding fields and record the calculated value.

Notice that GeoGebra always assumes the equal sign is included in the probability calculations. So if you wish to calculate something that doesn't include the equal sign, e.g. $P(X < 4)$, make sure to rewrite it so that you are evaluating the same event in GeoGebra (e.g. $P(X \le 3)$).
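Because a binomial random variable only takes whole-number values, a strict inequality can always be turned into one that includes the equal sign. A Python sketch of this bookkeeping, using hypothetical values $n = 10$ and $p = 0.6$ chosen only for illustration; `binom_cdf` mimics what a calculator's $P(X \le k)$ mode returns, but it is not GeoGebra's own code:

```python
from math import comb

def binom_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) for a binomial random variable."""
    return comb(n, x) * p**x * (1 - p)**(n - x)

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k): the 'equal sign included' form a calculator expects."""
    return sum(binom_pmf(x, n, p) for x in range(k + 1))

n, p = 10, 0.6  # hypothetical example values

# P(X < 4), computed directly by adding P(0) + P(1) + P(2) + P(3) ...
p_lt_4 = sum(binom_pmf(x, n, p) for x in range(n + 1) if x < 4)
# ... is the same event as P(X <= 3)
p_le_3 = binom_cdf(3, n, p)

# Likewise P(X > 4) = P(X >= 5) = 1 - P(X <= 4)
p_gt_4 = sum(binom_pmf(x, n, p) for x in range(n + 1) if x > 4)
p_via_complement = 1 - binom_cdf(4, n, p)

print(round(p_lt_4, 6), round(p_le_3, 6))
print(round(p_gt_4, 6), round(p_via_complement, 6))
```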

Example: Rolling a fair die

Problem: What is the probability of rolling a fair die six times and getting two fours?

First you may wonder: is this a binomial experiment? Although the die has 6 sides, we are interested in only two kinds of outcomes: four and not four. Let's check the rest of the assumptions: there is a fixed number of trials (six); each trial can be described in terms of success and failure (rolling a four is a success, not rolling a four is a failure); each trial is an independent event (since rolling a number does not affect future die rolls); and the probability of rolling a four is the same on every roll.

In this experiment there are six trials, so $n = 6$.

A success in this experiment is rolling a four, and the probability of this event is $1/6$, so $p = 1/6$.

A failure in this experiment is not rolling a four, and the probability of this event is $5/6$, so $q = 5/6$. Even if this probability were unknown, it could be found by remembering that $q$ is the complement of $p$. It is known that $p = 1/6$ and that $p + q = 1$, so $q = 1 - p = 1 - 1/6 = 5/6$.

The probability of rolling two fours in the experiment is denoted by $P(X = 2)$, since this is the probability of rolling exactly two fours. The information known is thus:

$n = 6$, $p = 1/6$, $q = 5/6$

Using this as input in GeoGebra, we find that the probability of rolling a fair die six times and getting exactly two fours is approximately 0.2009:

$$P(X = 2) \approx 0.2009$$
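Without GeoGebra, the same number follows from the general principle for binomial probabilities, with $\binom{6}{2} = 15$ possible orders for two fours among six rolls. A Python sketch using exact fractions (Python 3.8+ for `math.comb`; the variable names are illustrative):

```python
from fractions import Fraction
from math import comb

n = 6
p = Fraction(1, 6)  # probability of rolling a four
q = 1 - p           # 5/6, the complement of p

# (number of ways to place 2 fours among 6 rolls) * p^2 * q^4
exact = comb(n, 2) * p**2 * q**(n - 2)
print(exact, round(float(exact), 4))
```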

Mean and Standard Deviation of Binomial Distribution

Earlier in the chapter, we saw that the population mean, or expected value, of a discrete probability distribution is defined as follows:

$$\mu = \sum x \, P(x)$$

For a binomial distribution, the same equation applies; one just has to make sure to add up all the rows in the probability distribution. So for the bent coin we saw above, we add another column to represent what is being added:

| y | P(y) | y · P(y) |
|---|---|---|
| 0 | 0.064 | 0 |
| 1 | 0.288 | 0.288 |
| 2 | 0.432 | 0.864 |
| 3 | 0.216 | 0.648 |

Adding up the numbers in the last column, we find the expected value:

$$\mu = \sum y \, P(y) = 0 + 0.288 + 0.864 + 0.648 = 1.8$$

But it should not surprise you that we could have used a much simpler method: multiplying the number of tosses by the heads-on probability, $3 \times 0.6 = 1.8$, gives us the same answer. After all, if roughly 60% of the tosses are heads, then the expected number of heads should be 60% of all tosses.

It turns out that this intuitive reasoning is completely legitimate: if we use the binomial probability formula introduced above to evaluate each $P(x)$ in the expected value, then at the end of the day (omitting a full page of derivation, which you don't have to know), the expected value of a binomial distribution is simply:

$$\mu = np$$

It's rare for the expected value of a discrete distribution to turn out this simple; the binomial distribution is an exception rather than the norm.
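Both routes to the expected value can be compared numerically. In this Python sketch (assuming the bent coin with $n = 3$ and $p = 0.6$), the table is rebuilt from the binomial formula, $\sum y\,P(y)$ is computed row by row, and the result is compared with $np$:

```python
from math import comb

n, p = 3, 0.6
pmf = {y: comb(n, y) * p**y * (1 - p)**(n - y) for y in range(n + 1)}

# Definition: add up y * P(y) over all rows of the table
mu_from_table = sum(y * prob for y, prob in pmf.items())
# Shortcut: number of tosses times the heads-on probability
mu_shortcut = n * p

print(round(mu_from_table, 6), round(mu_shortcut, 6))
```

The two values agree up to floating-point rounding, which is why they are rounded before printing.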

Using the same formula, the variance of a binomial probability distribution is also remarkably simple:

$$\sigma^2 = npq$$

In this formula, $n$ is the number of trials in the experiment, $p$ is the probability of success, and $q = 1 - p$ is the probability of failure.

Since the standard deviation is the square root of the variance, the standard deviation of a binomial probability distribution is:

$$\sigma = \sqrt{npq}$$

with $n$, $p$, and $q$ defined as above.
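The variance shortcut can be checked the same way. This Python sketch uses the usual definition of variance for a discrete distribution, $\sum (y - \mu)^2 P(y)$, and compares it with $npq$ for the bent coin (assuming $n = 3$, $p = 0.6$):

```python
from math import comb, sqrt

n, p = 3, 0.6
q = 1 - p
pmf = {y: comb(n, y) * p**y * q**(n - y) for y in range(n + 1)}

mu = sum(y * prob for y, prob in pmf.items())
# Definition: probability-weighted squared distance from the mean
var_from_table = sum((y - mu) ** 2 * prob for y, prob in pmf.items())
# Shortcut for the binomial distribution
var_shortcut = n * p * q

print(round(var_from_table, 6), round(var_shortcut, 6), round(sqrt(var_shortcut), 4))
```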

Example: Germination of Seeds

When you buy seed packets, they often say what percentage of the seeds are supposed to germinate. Some seeds are more viable than others, and viability usually decreases during storage. Imagine you bought a packet of 50 seeds that says 70% of them are supposed to germinate. If you plant all of the seeds, how many starter plants can you expect to grow?

It turns out this problem has all the characteristics of a binomial experiment: we have a fixed number of trials (50 seeds per packet), two outcomes for each seed (it germinates or it doesn't), the same success rate for each seed (70%), and the seeds are reasonably independent (assuming you are providing ideal soil). So using the formulas above, we can calculate the expected value as:

$$\mu = np = 50 \times 0.7 = 35$$

and the standard deviation as:

$$\sigma = \sqrt{npq} = \sqrt{50 \times 0.7 \times 0.3} \approx 3.2$$

These parameters help you make a fairly good prediction about the germination: most likely, the number of starter plants will be within two standard deviations of the mean, $(\mu - 2\sigma, \mu + 2\sigma)$, which is between 28.6 and 41.4. So if you leave enough room for 42 plants, you are very unlikely to run out of space.
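A short Python sketch of the seed calculation follows. It keeps $\sigma$ unrounded (about 3.24), so its two-standard-deviation range comes out slightly wider than 28.6 to 41.4. It also adds up the exact binomial probabilities for the whole numbers inside that range, a check that goes a little beyond the text and assumes the seeds really are independent with $p = 0.7$:

```python
from math import comb, sqrt

n, p = 50, 0.7
q = 1 - p
mu = n * p               # 35
sigma = sqrt(n * p * q)  # about 3.24

low, high = mu - 2 * sigma, mu + 2 * sigma
print(f"mean = {mu:.1f}, sd = {sigma:.2f}, two-sd range = ({low:.1f}, {high:.1f})")

# Exact binomial probability that the number of germinated seeds falls inside that range
inside = sum(comb(n, x) * p**x * q**(n - x) for x in range(n + 1) if low <= x <= high)
print(f"P(mu - 2 sigma <= X <= mu + 2 sigma) = {inside:.3f}")
```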

Binomial Distribution for a Large Number of Trials

The binomial distribution starts to look more and more symmetrical as you increase the number of trials $n$. Suppose we flip the aforementioned bent coin 50 times, i.e. $n = 50$ and $p = 0.6$. We can then look at the histogram of the binomial distribution in GeoGebra.

This histogram resembles the so-called “bell-shaped curve”, which we will study in Chapter 7. This handy connection also forms the basis for statistical inference about the underlying parameter $p$, and allows us to answer questions such as “if we get 64 heads out of 100 tosses, is it likely that the coin is fair?”
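Since the GeoGebra histogram is not reproduced here, a rough text version can be printed instead. This Python sketch assumes $n = 50$ and $p = 0.6$ as above, scales each bar to one `#` per 0.002 of probability (an arbitrary choice), and shows only $x = 20$ to $40$, where nearly all of the probability sits:

```python
from math import comb

n, p = 50, 0.6
pmf = [comb(n, x) * p**x * (1 - p)**(n - x) for x in range(n + 1)]

# Crude text histogram: one '#' per 0.002 of probability, for x = 20..40 only
for x in range(20, 41):
    print(f"{x:2d} {'#' * round(pmf[x] / 0.002)}")
```

The bars rise and fall roughly symmetrically around the peak at $x = 30$, which is $np$.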