set theory

advertisement

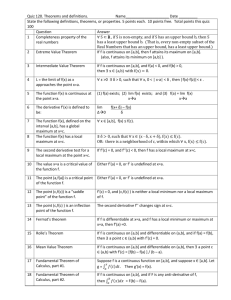

Summary of Stat 581/582

Text: A Probability Path by Sidney Resnick

Taught by Dr. Peter Olofsson

Chapter 1: Sets and Events

1.2 : Basic Set Theory

SET THEORY

The sample space, $\Omega$, is the set of all possible outcomes of an experiment.

e.g. if you roll a die, the possible outcomes are $\Omega = \{1,2,3,4,5,6\}$.

A set is finite if it has finitely many points; otherwise it is infinite.

A set is countable/denumerable if there exists a bijection (i.e. a 1-1, onto mapping) between it and the natural numbers, $\mathbb{N} = \{1,2,3,\ldots\}$.

e.g. odd numbers, integers, rational numbers ($\mathbb{Q} = \{m/n\}$ where m and n are integers, $n \ne 0$)

The opposite of countable is uncountable.

e.g. $\mathbb{R}$ = real numbers, any interval (a,b)

If between any two points of a set there are infinitely many points of the set, then the set is considered to be dense.

e.g. $\mathbb{Q}$, $\mathbb{R}$

Note: A set can be dense and be countable OR uncountable.

The power set of $\Omega$ is the class of all subsets of $\Omega$, denoted $2^{\Omega}$ (or $P[\Omega]$). (i.e. if $\Omega$ has “n” elements, $P[\Omega]$ has $2^n$ elements.)

Note: if $\Omega$ is infinite and countable, then $P[\Omega]$ is uncountable.
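The counting claim for the power set can be checked on a small sample space. The sketch below is only an illustration; the helper name `power_set` is hypothetical and not from the text.

```python
from itertools import chain, combinations

def power_set(omega):
    """Return all subsets of a finite set `omega` as a list of frozensets."""
    items = list(omega)
    # Collect the subsets of size 0, 1, ..., len(items).
    subsets = chain.from_iterable(combinations(items, r) for r in range(len(items) + 1))
    return [frozenset(s) for s in subsets]

die = {1, 2, 3, 4, 5, 6}
subsets_of_die = power_set(die)
print(len(subsets_of_die))  # 64, i.e. 2**6
```

For a set with n elements the list has 2**n entries, so this enumeration is only practical for small n.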

SET OPERATIONS

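Complement: $A^c = \{\omega : \omega \notin A\}$

Union: $A \cup B = \{\omega : \omega \in A \text{ or } \omega \in B \text{ or both}\}$

Intersection: $A \cap B = \{\omega : \omega \in A \text{ and } \omega \in B\}$

SET LAWS

Associativity: $(A \cup B) \cup C = A \cup (B \cup C)$

Distributivity: $B \cap \left(\bigcup_{t \in T} A_t\right) = \bigcup_{t \in T} (B \cap A_t)$

De Morgan’s: $\left(\bigcup_{t \in T} A_t\right)^c = \bigcap_{t \in T} A_t^c$

* Each law also holds with the roles of $\cup$ and $\cap$ interchanged.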

1.3 : Limits of Sets

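$\inf_{k \ge n} A_k = \bigcap_{k \ge n} A_k$

$\sup_{k \ge n} A_k = \bigcup_{k \ge n} A_k$

$\liminf_{n \to \infty} A_n = \bigcup_{n=1}^{\infty} \bigcap_{k \ge n} A_k = \{\omega : \omega \in A_n \text{ for all but finitely many } n\}$

o {An occurs eventually}

$\limsup_{n \to \infty} A_n = \bigcap_{n=1}^{\infty} \bigcup_{k \ge n} A_k = \{\omega : \omega \in A_n \text{ for infinitely many } n\}$

o {An occurs infinitely often}

$\liminf_n A_n \subset \limsup_n A_n$

$(\liminf_n A_n)^c = \limsup_n A_n^c$ (and vice versa)

If liminf An = limsup An = A, then the limit exists and is A.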
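The two definitions can be tried on an alternating sequence of sets. This is a minimal sketch that truncates the sequence at a finite horizon, which is exact here only because the sequence is periodic; the function name `tail_liminf_limsup` is made up for the illustration.

```python
def tail_liminf_limsup(seq_of_sets):
    """Approximate liminf and limsup from a finite stretch of a sequence of sets.

    liminf = union over n of the intersection of the tail A_n, A_{n+1}, ...
    limsup = intersection over n of the union of the tail A_n, A_{n+1}, ...
    Only the first half of the indices are used as tail starting points, so
    every tail still contains many terms. Expects at least two sets.
    """
    sets = [frozenset(s) for s in seq_of_sets]
    half = len(sets) // 2
    tail_intersections = [frozenset.intersection(*sets[n:]) for n in range(half)]
    tail_unions = [frozenset().union(*sets[n:]) for n in range(half)]
    liminf = frozenset().union(*tail_intersections)
    limsup = frozenset.intersection(*tail_unions)
    return liminf, limsup

# A_n alternates between {2, 3} (n odd) and {1, 2} (n even).
sequence = [{1, 2} if n % 2 == 0 else {2, 3} for n in range(1, 21)]
liminf, limsup = tail_liminf_limsup(sequence)
print(sorted(liminf), sorted(limsup))  # [2] [1, 2, 3]
```

Only the point 2 lies in all but finitely many of the sets, while 1, 2 and 3 each occur infinitely often, so liminf is strictly smaller than limsup and the limit does not exist.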

1.5 : Set Operations and Closure

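A class $\mathcal{A}$ of subsets of $\Omega$ is called a field or algebra if:

1. $\Omega \in \mathcal{A}$

2. $A \in \mathcal{A} \Rightarrow A^c \in \mathcal{A}$

3. $A, B \in \mathcal{A} \Rightarrow A \cup B \in \mathcal{A}$

A field is closed under complements, finite unions, and finite intersections.

A class $\mathcal{B}$ of subsets of $\Omega$ is called a $\sigma$-field or $\sigma$-algebra if:

1. $\Omega \in \mathcal{B}$

2. $B \in \mathcal{B} \Rightarrow B^c \in \mathcal{B}$

3. $B_1, B_2, \ldots \in \mathcal{B} \Rightarrow \bigcup_{i=1}^{\infty} B_i \in \mathcal{B}$

A $\sigma$-field is closed under complements, countable unions, and countable intersections.

Note: $\{\emptyset, \Omega\}$ is the smallest possible $\sigma$-field and $2^{\Omega}$ is the largest possible $\sigma$-field.

The sets in a $\sigma$-field are called measurable and the pair $(\Omega, \mathcal{B})$ is called a measurable space.

1.6 : The $\sigma$-field Generated by a Given Class C

Corollary: The intersection of $\sigma$-fields is a $\sigma$-field.

Let C be a collection of subsets of $\Omega$. The $\sigma$-field generated by C, denoted $\sigma(C)$, is a $\sigma$-field satisfying:

a) $C \subset \sigma(C)$

b) If B’ is some other $\sigma$-field containing C, then $B' \supset \sigma(C)$

$\sigma(C)$ is also known as the minimal $\sigma$-field over C.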
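On a finite sample space the generated $\sigma$-field can be computed by brute force: start from the generating class and keep adding complements and unions until nothing new appears. The sketch below assumes a finite sample space (so countable unions reduce to finite ones); `generated_sigma_field` is a hypothetical helper name.

```python
def generated_sigma_field(omega, generators):
    """Smallest class containing `generators` that is closed under complement and union."""
    omega = frozenset(omega)
    field = {frozenset(), omega} | {frozenset(g) for g in generators}
    changed = True
    while changed:
        changed = False
        current = list(field)
        for a in current:
            # Candidates: the complement of a and the union of a with every current set.
            candidates = [omega - a]
            candidates.extend(a | b for b in current)
            for c in candidates:
                if c not in field:
                    field.add(c)
                    changed = True
    return field

omega = {1, 2, 3, 4}
sigma = generated_sigma_field(omega, [{1}, {1, 2}])
print(len(sigma))  # 8
```

The generators split the sample space into the atoms {1}, {2} and {3, 4}, and the generated $\sigma$-field consists of all 2**3 = 8 unions of atoms.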

Chapter 2: Probability Spaces

2.1: Basic Definitions and Properties

Fatou’s Lemma (probabilities):

$P(\liminf_n A_n) \le \liminf_n P(A_n) \le \limsup_n P(A_n) \le P(\limsup_n A_n)$

A function $F : \mathbb{R} \to [0,1]$ satisfying

(1) F is right continuous

(2) F is monotone non-decreasing

(3) F has limits at $\pm\infty$: $F(-\infty) = 0$ and $F(\infty) = 1$

is called a probability distribution function.

2.2: More on Closure

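P is a $\pi$-system if it is closed under finite intersections. That is, $A, B \in P \Rightarrow A \cap B \in P$.

A class of subsets L of $\Omega$ that satisfies

(1) $\Omega \in L$

(2) $A \in L \Rightarrow A^c \in L$

(3) $n \ne m$, $A_n \cap A_m = \emptyset$, $A_n \in L \Rightarrow \bigcup_n A_n \in L$

is called a $\lambda$-system.

Dynkin’s Theorem:

(a) If P is a $\pi$-system and L is a $\lambda$-system such that $P \subset L$, then $\sigma(P) \subset L$.

(b) If P is a $\pi$-system, $\sigma(P) = L(P)$. That is, the minimal $\sigma$-field over P equals the minimal $\lambda$-system over P.

Proposition 2.2.4: If a class C is both a $\pi$-system and a $\lambda$-system, then it is a $\sigma$-field.

A class S of subsets of $\Omega$ is a semialgebra if the following hold:

(i) $\emptyset, \Omega \in S$

(ii) S is a $\pi$-system

(iii) If $A \in S$, then there exist some finite n and disjoint sets $C_1, \ldots, C_n$, with each $C_i \in S$, such that $A^c = \bigcup_{i=1}^{n} C_i$.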

Chapter 3: Random Variables, Elements, and Measurable Maps

3.1: Inverse Maps

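Let $\Omega$ and $\Omega'$ be two spaces and let $X : \Omega \to \Omega'$.

For a set $A' \subset \Omega'$ the set $X^{-1}(A') = \{\omega : X(\omega) \in A'\}$ is called the inverse image of $A'$.

For a $\sigma$-field $\mathcal{B}'$ on $\Omega'$, the class $X^{-1}(\mathcal{B}') = \{X^{-1}(B') : B' \in \mathcal{B}'\}$ is also called the $\sigma$-field generated by X, denoted $\sigma(X)$.

Proposition 3.1.1: If $\mathcal{B}'$ is a $\sigma$-field on $\Omega'$, then $X^{-1}(\mathcal{B}')$ is a $\sigma$-field on $\Omega$.

3.2: Measurable Maps, Random Elements, Induced Probability Measures

A pair (set, $\sigma$-field) (e.g. $(\Omega, \mathcal{B})$) is called a measurable space.

X is a random variable if $X : (\Omega, \mathcal{B}) \to (\mathbb{R}, \mathcal{B}(\mathbb{R}))$ is measurable.

$X : (\Omega, \mathcal{B}) \to (\Omega', \mathcal{B}')$ is measurable if $X^{-1}(B')$ is a set in $\mathcal{B}$ for every $B' \in \mathcal{B}'$. (i.e. $X^{-1}(\mathcal{B}') \subset \mathcal{B}$)

Measurable sets are “events”.

Constant functions are always measurable.

Proposition 3.2.2: Let $X : (\Omega_1, \mathcal{B}_1) \to (\Omega_2, \mathcal{B}_2)$ and $Y : (\Omega_2, \mathcal{B}_2) \to (\Omega_3, \mathcal{B}_3)$ be two measurable maps. Then $Y \circ X : (\Omega_1, \mathcal{B}_1) \to (\Omega_3, \mathcal{B}_3)$ is also measurable.

The Borel $\sigma$-field in $\mathbb{R}^k$ is the $\sigma$-field generated by the class of open (or half-open or closed) rectangles.

$X = (X_1, \ldots, X_k)$ is a random vector $\Leftrightarrow$ $X_1, \ldots, X_k$ are random variables.

Corollary 3.2.2: If X is a random vector and $g : \mathbb{R}^k \to \mathbb{R}$ is measurable, then g(X) is a random variable.

The distribution of X (a.k.a. probability measure induced by X) is denoted $P \circ X^{-1}$.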

Chapter 4: Independence

4.1: Basic Definitions

Independent events: $A_1, \ldots, A_n$ are independent if $P\left(\bigcap_{i \in I} A_i\right) = \prod_{i \in I} P(A_i)$ for all $I \subset \{1, 2, \ldots, n\}$.

Independent classes: Classes $C_i$ are independent if for any choice $A_i \in C_i$, i=1,…,n, the events $A_1, \ldots, A_n$ are independent.

Theorem 4.1.1: If $C_1, \ldots, C_n$ are independent $\pi$-systems, then $\sigma(C_1), \ldots, \sigma(C_n)$ are independent.

4.2: Independent Random Variables

Independent random variables: Random variables are independent if their $\sigma$-fields are independent.

Corollary 4.2.2: The discrete random variables $X_1, \ldots, X_k$ with countable range R are independent iff $P[X_i = x_i, i = 1, \ldots, k] = \prod_{i=1}^{k} P[X_i = x_i]$ for all $x_i \in R$, i=1,…,k.
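The product rule for independent events can be verified exactly on a small example. The sketch below uses two fair dice; the event names and the helpers `prob` and `independent` are illustrative choices, not from the text.

```python
from fractions import Fraction
from itertools import product

# Sample space for two fair dice: 36 equally likely outcomes.
omega = list(product(range(1, 7), repeat=2))

def prob(event):
    """Exact probability of an event given as a predicate on outcomes."""
    return Fraction(sum(1 for w in omega if event(w)), len(omega))

def independent(a, b):
    """Check P(A and B) == P(A) * P(B) for two events."""
    return prob(lambda w: a(w) and b(w)) == prob(a) * prob(b)

first_is_even = lambda w: w[0] % 2 == 0
sum_is_seven = lambda w: w[0] + w[1] == 7
sum_is_eight = lambda w: w[0] + w[1] == 8

print(independent(first_is_even, sum_is_seven))  # True
print(independent(first_is_even, sum_is_eight))  # False
```

Using `Fraction` keeps the comparison exact, so the check is not affected by floating-point rounding.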

4.5: Independence, Zero-One Laws, Borel-Cantelli Lemma

Borel-Cantelli Lemma I: Let {An} be any sequence of events such that $\sum_n P(A_n) < \infty$ (i.e. $\sum_n P(A_n)$ converges), then P(An i.o.) = 0.

Borel-Cantelli Lemma II: If A1, A2, … are independent events such that $\sum_n P(A_n) = \infty$ (i.e. $\sum_n P(A_n)$ diverges), then P(An i.o.) = 1.

A property that holds with probability = 1 is said to hold almost surely (a.s.).

NOTE:

$\sum_n \frac{1}{n}$ is divergent

$\sum_n \frac{1}{n^2}$ is convergent
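The contrast between the two series in the note shows up clearly in their partial sums. The following sketch is only a numerical illustration (a finite computation cannot prove divergence); `partial_sum` is a hypothetical helper.

```python
import math

def partial_sum(term, n):
    """Return term(1) + term(2) + ... + term(n)."""
    return sum(term(k) for k in range(1, n + 1))

for n in (10, 1_000, 100_000):
    harmonic = partial_sum(lambda k: 1.0 / k, n)
    inverse_squares = partial_sum(lambda k: 1.0 / (k * k), n)
    # The harmonic sum tracks log(n), so it grows without bound.
    print(n, round(harmonic - math.log(n), 4), round(inverse_squares, 4))

print(round(math.pi**2 / 6, 4))  # 1.6449, the limit of the inverse-square sums
```

In Borel-Cantelli terms, independent events with P(An) = 1/n occur infinitely often with probability 1, whereas events with P(An) = 1/n² occur only finitely often with probability 1.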

Let $\{X_n\}$ be a sequence of random variables and define $F_n = \sigma(X_{n+1}, X_{n+2}, \ldots)$, n=1,2,…

The tail $\sigma$-field, $\mathcal{T}$, is $\bigcap_n F_n$. Events in $\mathcal{T}$ are called tail events. A tail event is NOT affected by changing the values of finitely many Xn.

A $\sigma$-field all of whose events have probabilities equal to 0 or 1 is called almost trivial (a.t.).

Kolmogorov’s 0-1 Law: If $\{X_n\}$ are independent random variables with tail $\sigma$-field $\mathcal{T}$, then $\Lambda \in \mathcal{T} \Rightarrow P(\Lambda) = 0$ or 1. (This, in turn, implies that the tail $\sigma$-field is a.t.).

Note: if we assume iid, then more events have the “0-1” property.

Lemma 4.5.1: If $\mathcal{G}$ is an a.t. $\sigma$-field and X is measurable w.r.t. $\mathcal{G}$, then X is constant a.s.

An event is called symmetric if its occurrence is not affected by any permutation of finitely many Xk.

Notes:

Tail events are not affected by the VALUES of finitely many Xn and symmetric events are not affected by the ORDER of finitely many Xn!

All tail events are symmetric (converse is false)

Hewitt-Savage 0-1 Law: If $\{X_n\}$ are iid, then all symmetric events have probability 0 or 1.

Chapter 5: Integration and Expectation

5.1: Preparation for Integration

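A simple function is a random variable of the form $X = \sum_{i=1}^{n} a_i 1_{A_i}$ where $a_i \in \mathbb{R}$, $A_i \in \mathcal{B}$, $i = 1, 2, \ldots, n$ and $A_1, \ldots, A_n$ form a partition of $\Omega$.

Properties of Simple Functions (with $X = \sum_i a_i 1_{A_i}$ and $Y = \sum_j b_j 1_{B_j}$):

1. If X is simple, then $cX = \sum_{i=1}^{n} c\,a_i 1_{A_i}$, where c is constant.

2. If X,Y are simple, then $X + Y = \sum_{i,j} (a_i + b_j) 1_{A_i \cap B_j}$.

3. If X,Y are simple, then $XY = \sum_{i,j} (a_i b_j) 1_{A_i \cap B_j}$.

4. If X,Y are simple, then $\min(X, Y) = \sum_{i,j} \min(a_i, b_j) 1_{A_i \cap B_j}$ and $\max(X, Y) = \sum_{i,j} \max(a_i, b_j) 1_{A_i \cap B_j}$.

Theorem 5.1.1 - Measurability Theorem: Let X be a nonnegative random variable. Then there exists a sequence of simple functions {Xn} such that $0 \le X_n \uparrow X$.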

5.2: Expectation and Integration

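Expectation of X with respect to P: Let $X = \sum_{i=1}^{n} a_i 1_{A_i}$ be a simple function, then

$E(X) = \int X \, dP = \int \sum_{i=1}^{n} a_i 1_{A_i} \, dP = \sum_{i=1}^{n} a_i \int 1_{A_i} \, dP = \sum_{i=1}^{n} a_i P(A_i)$.

Note: $E(1_A) = P(A)$.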
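The formula for the expectation of a simple function is a finite weighted sum, which makes it easy to compute directly. The sketch below works on a fair die; the payoff values and the name `expectation_simple` are assumptions made for the example.

```python
from fractions import Fraction

def expectation_simple(values_and_events, prob):
    """E(X) = sum_i a_i * P(A_i) for a simple function X = sum_i a_i * 1_{A_i}."""
    return sum(a * sum(prob[w] for w in event) for a, event in values_and_events)

# Fair die: P({w}) = 1/6 for each outcome w.
prob = {w: Fraction(1, 6) for w in range(1, 7)}

# X pays 10 on {6}, 2 on {4, 5}, and 0 on {1, 2, 3}; the events partition the sample space.
payoff = [(10, {6}), (2, {4, 5}), (0, {1, 2, 3})]

print(expectation_simple(payoff, prob))  # 7/3
```

Taking a single pair `(1, A)` recovers the note E(1_A) = P(A).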

Steps to show expectation:

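1. Indicators: Let $X = 1_A$; then $E(X) = P(A)$.

2. Simple Functions: Let $X = \sum_{i=1}^{n} a_i 1_{A_i}$; then $E(X) = \sum_{i=1}^{n} a_i P(A_i)$.

3. Nonnegative Random Variables: Suppose $X \ge 0$, Xn a sequence of simple functions and $0 \le X_n \uparrow X$; then $E(X) = \lim_n E(X_n)$.

If $X_n \uparrow X$ and $Y_m \uparrow X$, then E(X) is well-defined if $\lim_n E(X_n) = \lim_m E(Y_m)$.

4. General Functions: Write $X = X^+ - X^-$ and set $E(X) = E(X^+) - E(X^-)$, provided this is not of the form “$\infty - \infty$”.

X is integrable if E[|X|] < $\infty$, where |X| = $X^+ + X^-$. Therefore X is integrable $\Leftrightarrow$ $E(X^+) < \infty$, $E(X^-) < \infty$ (then E[X] will be finite).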

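Monotone Convergence Theorem (for expectation): $0 \le X_n \uparrow X \Rightarrow E(X_n) \uparrow E(X)$, or $\lim_n E(X_n) = E(\lim_n X_n)$.

Inequalities:

(a) Modulus: $|E(X)| \le E|X|$.

(b) Markov: Suppose $X \in L_1$. For any $\lambda > 0$, $P(|X| \ge \lambda) \le \frac{E|X|}{\lambda}$.

(c) Chebychev: Assume $E|X| < \infty$, $Var(X) < \infty$. For any $\lambda > 0$, $P(|X - E(X)| \ge \lambda) \le \frac{Var(X)}{\lambda^2}$.

(d) Triangle: $E|X_1 + X_2| \le E|X_1| + E|X_2|$

(e) Weak Law of Large Numbers (WLLN): The sample average of an iid sequence approximates the mean. Let $\{X_n, n \ge 1\}$ be iid with finite mean and variance, and suppose $E(X_n) = \mu$ and $Var(X_n) = \sigma^2$ is finite. Then for any $\varepsilon > 0$,

$\lim_{n \to \infty} P\left( \left| \frac{\sum_{i=1}^{n} X_i}{n} - \mu \right| > \varepsilon \right) = 0$.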

5.3: Limits and Integrals

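Monotone Convergence Theorem (for series): If $\xi_j \ge 0$ are nonnegative random variables for $j \ge 1$, then $E\left(\sum_{j=1}^{\infty} \xi_j\right) = \sum_{j=1}^{\infty} E(\xi_j)$. (i.e. the expectation and infinite sum can be interchanged)

Fatou’s Lemma (expectations):

$E(\liminf_n X_n) \le \liminf_n E(X_n) \le \limsup_n E(X_n) \le E(\limsup_n X_n)$

(The first inequality requires the Xn to be bounded below by an integrable random variable, and the last requires them to be bounded above by one.)

Dominated Convergence Theorem (for expectations): If $X_n \to X$ and there exists a dominating random variable $Z \in L_1$ such that $|X_n| \le Z$, then $E(X_n) \to E(X)$ and $E|X_n - X| \to 0$.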

# NOT IN BOOK – General Measure and Integration Theory #

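A function $\mu : \mathcal{B} \to [0, \infty]$ is called a measure if

(i) $\mu(A) \ge 0$

(ii) $\mu(\emptyset) = 0$

(iii) $A_1, A_2, \ldots$ disjoint $\Rightarrow \mu\left(\bigcup_i A_i\right) = \sum_i \mu(A_i)$

PPN: $A_n \uparrow A \Rightarrow \mu(A_n) \uparrow \mu(A)$

If $\Omega = \bigcup_{n=1}^{\infty} C_n$ where $C_n \in C$ and $\mu(C_n) < \infty$ for all n, then $\mu$ is called $\sigma$-finite on the class C.

THM: If $\mu_1 = \mu_2$ on a $\pi$-system P and both are $\sigma$-finite on P, then $\mu_1 = \mu_2$ on $\sigma(P)$.

THM: A $\sigma$-finite measure on a semialgebra S has a unique extension to $\sigma(S)$.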

5.5: Densities

Let X be a random variable. If there exists a function f such that $P(X \in B) = \int_B f(x)\,dx$ for all Borel sets B, then f is called the density of X.

THM: If f exists, then $E[g(X)] = \int g(x) f(x)\,dx$.

Note: dF(x) = f(x)dx

LEMMA: If $\int_A f\,d\mu = \int_A g\,d\mu$ for all measurable A, then f = g a.e.

5.6: The Riemann vs. Lebesgue Integral

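Lebesgue Measure ($\lambda$):

$\lambda$(singleton) = 0

$\lambda$(interval) = length of interval

Steps to show integration:

1. Indicators: Let $f = 1_A$; then $\int f\,d\lambda = \int 1_A\,d\lambda = \int_A d\lambda = \lambda(A)$.

2. Simple Functions: Let $f = \sum_{i=1}^{n} a_i 1_{A_i}$; then $\int f\,d\lambda = \sum_{i=1}^{n} a_i \lambda(A_i)$.

3. Nonnegative Functions: Suppose $f \ge 0$, $f_n$ simple and $0 \le f_n \uparrow f$. Define $\int f\,d\lambda = \lim_n \int f_n\,d\lambda$.

4. General Functions: $f = f^+ - f^-$ and define $\int f\,d\lambda = \int f^+\,d\lambda - \int f^-\,d\lambda$. The integral in (4) DNE if it has the form “$\infty - \infty$”.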

Types of Integration:

Riemann – approximates the area under a curve via rectangles

Lebesgue – approximates via a measure. This is the limit of integrals of simple functions.

Note: Riemann divides the domain and Lebesgue divides the range of a function.

THM: If the Riemann integral exists (on a bounded interval), then the Lebesgue integral exists and the two agree. The converse is not necessarily true.

A property which holds everywhere except on a set A with $\mu(A) = 0$ is said to hold $\mu$-almost everywhere ($\mu$-a.e.).

THM: A bounded function g on [a,b] is Riemann integrable $\Leftrightarrow$ g is continuous a.e.

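Fatou’s Lemma (integrals):

$\int \liminf_n f_n \, d\mu \le \liminf_n \int f_n \, d\mu \le \limsup_n \int f_n \, d\mu \le \int \limsup_n f_n \, d\mu$

(The first inequality holds for $f_n \ge 0$; the last requires the $f_n$ to be dominated by an integrable function.)

Dominated Convergence Theorem (for integrals): Let $f, f_1, f_2, \ldots$ be a sequence of functions such that $f_n \to f$. If there exists a function g such that $|f_n| \le g$ for all n and $\int g \, d\mu < \infty$, then $\int f_n \, d\mu \to \int f \, d\mu$.

Monotone Convergence Theorem (for integrals): Let $f, f_1, f_2, \ldots$ be a sequence of nonnegative functions such that $f_n \uparrow f$ as $n \to \infty$. Then $\int f_n \, d\mu \to \int f \, d\mu$.

Identity: $\limsup_n f_n = -\liminf_n (-f_n)$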

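5.7: Product Spaces

$\Omega_1 \times \Omega_2 = \{(\omega_1, \omega_2) : \omega_1 \in \Omega_1, \omega_2 \in \Omega_2\}$ is called the product space.

If $A_1 \in \mathcal{B}_1$ and $A_2 \in \mathcal{B}_2$ then the set $A_1 \times A_2 = \{(\omega_1, \omega_2) : \omega_1 \in A_1, \omega_2 \in A_2\}$ is called a (measurable) rectangle.

The $\sigma$-field generated by the rectangles is called the product $\sigma$-field, denoted $\mathcal{B}_1 \times \mathcal{B}_2$.

For $C \subset \Omega_1 \times \Omega_2$ we define the sections as $C_x = \{y : (x, y) \in C\}$ and $C^y = \{x : (x, y) \in C\}$.

Sections (of measurable functions and sets) are measurable.

Define measures $\pi_1, \pi_2$ on $(\Omega_1 \times \Omega_2, \mathcal{B}_1 \times \mathcal{B}_2)$:

$\pi_1(C) = \int_{\Omega_1} \mu_2(C_x) \, \mu_1(dx)$

$\pi_2(C) = \int_{\Omega_2} \mu_1(C^y) \, \mu_2(dy)$

Both measures equal $\mu_1(A)\mu_2(B)$ when $C = A \times B$.

If $\mu_1, \mu_2$ are probability measures, then $\pi_1, \pi_2$ are probability measures.

THM: If $\mu_1, \mu_2$ are $\sigma$-finite, then there exists a unique measure $\pi$ on $\mathcal{B}_1 \times \mathcal{B}_2$ such that $\pi(A \times B) = \mu_1(A)\mu_2(B)$.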

NOTE:

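5.9: Fubini’s Theorem

Fubini’s Theorem: If $\int_{\Omega_1 \times \Omega_2} g \, d\pi$ exists, then

$\int_{\Omega_1 \times \Omega_2} g \, d\pi = \int_{\Omega_1} \left( \int_{\Omega_2} g \, d\mu_2 \right) d\mu_1 = \int_{\Omega_2} \left( \int_{\Omega_1} g \, d\mu_1 \right) d\mu_2$.

Special Cases when Theorem Holds:

1. $g \ge 0$ (Tonelli’s Theorem)

2. $\int |g| \, d\pi < \infty$ (i.e. g integrable) (often called Fubini’s Theorem)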

Chapter 6: Convergence Concepts

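Suppose $(\Omega, F, P)$ is a probability space, then a measurable map $X : (\Omega, F) \to (\mathbb{R}, B)$ is a random variable.

The distribution of X is denoted $P \circ X^{-1} = P_X$

If $\mu$ is a measure on $(\Omega, F)$ and $f : (\Omega, F) \to (\Omega', G)$ is measurable, then $\mu \circ f^{-1}$ is the induced measure on $(\Omega', G)$.

Almost Sure

$P(X_n \to X) = 1 \Leftrightarrow X_n \to X$ a.s.

$\sum_{n=1}^{\infty} P(|X_n - X| > \varepsilon) < \infty \ \forall \varepsilon > 0 \Rightarrow X_n \to X$ a.s.

PPN: $X_n \to X$ a.s. & $Y_n \to Y$ a.s. $\Rightarrow X_n + Y_n \to X + Y$ a.s. (The same holds for convergence in Lp and in probability. For convergence in distribution it can fail unless further conditions hold, e.g. one of the limits is a constant.)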

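In Probability

$P(|X_n - X| > \varepsilon) \to 0$ as $n \to \infty$, $\forall \varepsilon > 0 \Leftrightarrow X_n \xrightarrow{P} X$

THM 6.3.1(b): Convergence in probability can be characterized by convergence a.s. of subsequences:

$X_n \xrightarrow{P} X \Leftrightarrow$ each subsequence $\{X_{n_k}\}$ has a further subsequence $\{X_{n_{k(i)}}\}$ such that $X_{n_{k(i)}} \to X$ a.s.

COR 6.3.1

$X_n \to X$ a.s. & g continuous $\Rightarrow g(X_n) \to g(X)$ a.s.

$X_n \xrightarrow{P} X$ & g continuous $\Rightarrow g(X_n) \xrightarrow{P} g(X)$

COR 6.3.2: Version of DCT

$X_n \to X$ a.s., $|X_n| \le Y$ & $EY < \infty \Rightarrow EX_n \to EX$

PPN: $X_n \xrightarrow{P} X$ & $X_n \xrightarrow{P} Y \Rightarrow P(X = Y) = 1$, therefore a sequence cannot have different limits in probability. Same holds for a.s. and Lp.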

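In Lp

Let p>0. $E|X_n - X|^p \to 0 \Leftrightarrow X_n \xrightarrow{L_p} X$

PPN: $X_n \xrightarrow{L_p} X$, g continuous & bounded $\Rightarrow g(X_n) \xrightarrow{L_p} g(X)$

PPN: If $X_n \xrightarrow{L_p} X$ for some p and r<p, then $X_n \xrightarrow{L_r} X$.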

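In Distribution

Let X (X1, X2, …) have distribution function F (F1, F2, …), where $F(x) = P(X \le x)$, $F_1(x) = P(X_1 \le x)$, etc. Then

$F_n(x) \to F(x)\ \forall x$ where F is continuous $\Leftrightarrow X_n \xrightarrow{d} X$.

Relationships between types of convergence:

$X_n \to X$ a.s. $\Rightarrow X_n \xrightarrow{P} X$

$X_n \xrightarrow{L_p} X \Rightarrow X_n \xrightarrow{P} X$

$X_n \xrightarrow{P} X \Rightarrow X_n \xrightarrow{d} X$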

Inequalities

Chebyshev’s: $P(|Y| \ge \varepsilon) \le \frac{E|Y|^p}{\varepsilon^p}$ for any p>0 & $\varepsilon$>0.

Holder’s: If p>1, q>1, & $\frac{1}{p} + \frac{1}{q} = 1$, then $E|XY| \le \left(E|X|^p\right)^{1/p} \left(E|Y|^q\right)^{1/q}$.

Special case of Holder’s is when p=q=2 (i.e. Cauchy-Schwarz).

Minkowski’s: For p >1, $\left(E|X + Y|^p\right)^{1/p} \le \left(E|X|^p\right)^{1/p} + \left(E|Y|^p\right)^{1/p}$.

For p=1, this is the Triangle Inequality.

Chapter 7: Law of Large Numbers & Sums of Independent Variables

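Weak Law of Large Numbers (WLLN) - THM 7.2.1: (where bn = n & $S_n = \sum_{j=1}^{n} X_j$; here $E(X; A)$ denotes $E(X 1_A)$)

Let X1, X2, … be independent & $a_n = \sum_{j=1}^{n} E(X_j; |X_j| \le n)$, then if

(1) $\sum_{j=1}^{n} P(|X_j| > n) \to 0$ as $n \to \infty$

(2) $\frac{1}{n^2} \sum_{j=1}^{n} E(X_j^2; |X_j| \le n) \to 0$ as $n \to \infty$

we have $\frac{S_n - a_n}{n} \xrightarrow{P} 0$.

Let X1, X2, … be i.i.d. & $a_n = n E(X_1; |X_1| \le n)$, then if

(1) $n P(|X_1| > n) \to 0$ as $n \to \infty$

(2) $\frac{1}{n} E(X_1^2; |X_1| \le n) \to 0$ as $n \to \infty$

we have $\frac{S_n - a_n}{n} \xrightarrow{P} 0$.

LEMMA: $\frac{S_n - a_n}{n} \xrightarrow{P} 0$ & $\frac{a_n}{n} \to a \Rightarrow \frac{S_n}{n} \xrightarrow{P} a$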

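Kolmogorov’s Convergence Criterion – THM 7.3.3:

Let X1, X2, … be independent. If $\sum_{j=1}^{\infty} Var(X_j) < \infty$, then $\sum_{j=1}^{\infty} (X_j - E X_j)$ converges a.s.

The convergence of $\sum_{j=1}^{\infty} X_j$ is then determined by that of $\sum_{j=1}^{\infty} E X_j$.

Kronecker’s Lemma – 7.4.1:

Let {xk} and {bn} be sequences of real numbers with $0 < b_n \uparrow \infty$. If $\sum_{j=1}^{\infty} \frac{x_j}{b_j}$ converges, then $\frac{1}{b_n} \sum_{j=1}^{n} x_j \to 0$ as $n \to \infty$.

COR 7.4.1:

Suppose X1, X2, … are independent with $E X_n^2 < \infty$ for all n. If $b_n \uparrow \infty$ & $\sum_{j=1}^{\infty} \frac{Var(X_j)}{b_j^2} < \infty$, then $\frac{S_n - E S_n}{b_n} \to 0$ a.s.

Kolmogorov’s Strong Law of Large Numbers (SLLN) – THM 7.5.1:

Let X1, X2, … be i.i.d. with finite mean $\mu$ (i.e. $E|X_1| < \infty$). Then $\frac{S_n}{n} \to \mu$ a.s.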
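The strong law can be watched along a single simulated path. The sketch below tracks the running average of fair die rolls (mean 3.5); the seed, sample size, and the helper `running_means` are arbitrary choices for the illustration, and one simulated path is of course no proof.

```python
import random

def running_means(draw, n, seed=0):
    """Return the running averages S_k / k, k = 1..n, of n iid draws."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    total = 0.0
    means = []
    for k in range(1, n + 1):
        total += draw(rng)
        means.append(total / k)
    return means

# One path of fair die rolls; the true mean is 3.5.
means = running_means(lambda rng: rng.randint(1, 6), n=100_000)
for k in (10, 1_000, 100_000):
    print(k, round(means[k - 1], 3))
```

The printed averages should settle near 3.5 as k grows, which is the behaviour the SLLN guarantees with probability 1.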

Chapter 8: Convergence in Distribution

The CDF of a random variable X is defined as $F(x) = P[X \le x]$.

F is a possible cdf iff:

1. $0 \le F(x) \le 1$

2. F is nondecreasing

3. F is right-continuous

If $F(-\infty) = 0$ and $F(\infty) = 1$ we call F proper (or non-defective). It is assumed that all cdf’s are proper.

Convergence in Distribution: $X_n \xrightarrow{d} X$ if $F_n(x) \to F(x)\ \forall x$ where F is continuous.

This is also called weak convergence and can be denoted $F_n \xrightarrow{w} F$.

Lemma 8.1.1: If two cdf’s agree on a dense set, then they agree everywhere.

A dense set has no gaps.

LEMMA: A cdf has at most countably many discontinuities.

PPN: If $F_n \xrightarrow{w} F$, then F is unique.

Skorohod’s Theorem: Suppose $X_n \xrightarrow{d} X$. Then there exist random variables $X^\#, X_1^\#, X_2^\#, \ldots$ on the probability space ([0,1], Borel, Lebesgue) such that $X_n^\# \stackrel{d}{=} X_n$ for n = 1, 2, …, $X^\# \stackrel{d}{=} X$, and $X_n^\# \to X^\#$ a.s.

Continuous Mapping Theorem: If $X_n \xrightarrow{d} X$ and g continuous, then $g(X_n) \xrightarrow{d} g(X)$.

Theorem 8.4.1: $X_n \xrightarrow{d} X \Leftrightarrow E\,h(X_n) \to E\,h(X)$ for all bounded and continuous functions h.

Chapter 9: Characteristic Functions and the Central Limit Theorem

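Moment Generating Function: $M(t) = E\left[e^{tX}\right]$

Characteristic Function: $\varphi(t) = E\left[e^{itX}\right] = E[\cos(tX)] + i\,E[\sin(tX)]$; $t \in \mathbb{R}$

Maps from the Real Numbers to the Complex Plane

Chf always exists, whereas mgf does not.

Properties of CHF:

$\varphi(0) = 1$

$\varphi_{X+Y}(t) = \varphi_X(t)\,\varphi_Y(t)$ for X, Y independent

$\varphi'(0) = i\,E(X)$

o In Generality: $\varphi^{(n)}(0) = i^n E(X^n)$ (when $E|X|^n < \infty$)

Theorem 9.5.1: $\varphi$ uniquely determines the distribution of X.

Theorem 9.5.2: $X_n \xrightarrow{d} X \Leftrightarrow \varphi_n(t) \to \varphi(t)\ \forall t$.

The chf of a standard normal distribution is $\varphi(t) = e^{-t^2/2}$.

Theorem 9.7.1 (Central Limit Theorem, iid case):

Let $X_1, X_2, \ldots$ be iid with mean $\mu$ and variance $\sigma^2$.

Let $S_n = \sum_{k=1}^{n} X_k$ and let $N \sim N(0,1)$.

Then $\frac{S_n - n\mu}{\sigma\sqrt{n}} \xrightarrow{d} N$.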

Proof of CLT (iid case)

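Suppose $\mu = 0$ and $\sigma^2 = 1$.

Let $\varphi$ be the chf of Xk and $\varphi_n$ be the chf of $S_n/\sqrt{n}$.

Then $\varphi_n(t) = E\left[e^{it S_n/\sqrt{n}}\right] = E\left[\prod_{k=1}^{n} e^{it X_k/\sqrt{n}}\right] \stackrel{indep}{=} \prod_{k=1}^{n} E\left[e^{it X_k/\sqrt{n}}\right] = \left(\varphi\left(\frac{t}{\sqrt{n}}\right)\right)^n$.

Want to show that $\varphi_n(t) \to e^{-t^2/2}$.

By Taylor’s Formula: $\varphi(t) = \sum_{k=0}^{\infty} \frac{\varphi^{(k)}(0)\,t^k}{k!}$ (assume after k=2, all terms are negligible, i.e. they converge to 0 as n goes to infinity)

With $\frac{t}{\sqrt{n}}$ in place of t: $\varphi\left(\frac{t}{\sqrt{n}}\right) \approx \varphi(0) + \varphi'(0)\frac{t}{\sqrt{n}} + \varphi''(0)\frac{t^2}{2n}$

$\varphi(0) = 1$

$\varphi'(0) = i\,E(X) = 0$ (since we ‘supposed’ $\mu$ = 0)

$\varphi''(0) = i^2 E(X^2) = -1$

$\varphi\left(\frac{t}{\sqrt{n}}\right) \approx 1 - \frac{t^2}{2n}$

$\varphi_n(t) = \left(\varphi\left(\frac{t}{\sqrt{n}}\right)\right)^n \approx \left(1 - \frac{t^2}{2n}\right)^n \to e^{-t^2/2}$.

Hence $\frac{S_n}{\sqrt{n}} \xrightarrow{d} N$.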
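The last limit in the proof, that (1 − t²/(2n))^n tends to exp(−t²/2), can be checked numerically. This is a small sketch of that one step only, with an arbitrary choice of t; the function name `clt_chf_approx` is hypothetical.

```python
import math

def clt_chf_approx(t, n):
    """Second-order approximation to the chf of S_n / sqrt(n): (1 - t^2 / (2n))^n."""
    return (1.0 - t * t / (2.0 * n)) ** n

t = 1.5
target = math.exp(-t * t / 2.0)  # chf of the standard normal at t
for n in (10, 1_000, 100_000):
    # The gap to the normal chf shrinks roughly like 1/n.
    print(n, abs(clt_chf_approx(t, n) - target))
```

The printed gaps decrease as n grows, matching the convergence used in the final step of the argument.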

Delta Method

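Let $X_1, X_2, \ldots$ be iid with mean $\mu$ and variance $\sigma^2$. By CLT with $\bar{X} = \frac{S_n}{n}$,

$\frac{S_n - n\mu}{\sigma\sqrt{n}} = \frac{\sqrt{n}\,(\bar{X} - \mu)}{\sigma} \xrightarrow{d} N(0,1)$. Hence, for large n, $\bar{X} \approx N\left(\mu, \frac{\sigma^2}{n}\right)$.

This method is used to make inferences about $\mu$.

PPN: If $g'(\mu) \ne 0$, then $\frac{\sqrt{n}\,\left(g(\bar{X}) - g(\mu)\right)}{\sigma\,|g'(\mu)|} \xrightarrow{d} N(0,1)$, i.e. for large n, $g(\bar{X}) \approx N\left(g(\mu), \frac{\sigma^2 g'(\mu)^2}{n}\right)$.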

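The $X_k$ (independent, with $E(X_k) = 0$ and $Var(X_k) = \sigma_k^2 < \infty$) are said to satisfy the Lindeberg condition if

$\frac{1}{s_n^2} \sum_{k=1}^{n} E\left(X_k^2; |X_k| > t s_n\right) \to 0$ as $n \to \infty$, $\forall t > 0$.

Theorem 9.8.1 (Lindeberg-Feller CLT):

Let $s_n^2 = \sum_{k=1}^{n} \sigma_k^2 = \sum_{k=1}^{n} Var(X_k) = Var(S_n)$. Then the Lindeberg condition implies $\frac{S_n}{s_n} \xrightarrow{d} N(0,1)$.

Liapunov condition: For some $\delta > 0$, $\frac{\sum_{k=1}^{n} E|X_k|^{2+\delta}}{s_n^{2+\delta}} \to 0$.

PPN: Liapunov $\Rightarrow$ Lindeberg

Important relationship: $\sum_{k=1}^{n} \frac{1}{k} \sim \log n$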

Chapter 10: Martingales

10.1: Prelude to Conditional Expectation: The Radon-Nikodym Theorem

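DFN: If $\mu(B) = 0 \Rightarrow \nu(B) = 0$, then $\nu$ is absolutely continuous with respect to $\mu$ (i.e. $\nu \ll \mu$).

Radon-Nikodym Theorem – THM 10.1.2:

If $\nu \ll \mu$ & $\mu$ is $\sigma$-finite, then $\exists$ a measurable function f such that $\nu(B) = \int_B f \, d\mu$ $\forall B$ Borel.

R-N Derivative: $f = \frac{d\nu}{d\mu}$.

Chain Rule: If $\nu \ll \mu \ll \lambda$, then $\nu \ll \lambda$ & $\frac{d\nu}{d\lambda} = \frac{d\nu}{d\mu} \cdot \frac{d\mu}{d\lambda}$.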

10.2: Definition of Conditional Expectation

Conditional Probability:

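Let $(\Omega, \mathcal{B}, P)$ be a probability space & let $\mathcal{G}$ be a sub-$\sigma$-field of $\mathcal{B}$.

Take $A \in \mathcal{B}$. $\exists$ an (a.s.) unique random variable Z, written $Z = P(A \mid \mathcal{G})$, such that

(1) Z is measurable with respect to $\mathcal{G}$

(2) $P(A \cap G) = \int_G Z \, dP$ $\forall G \in \mathcal{G}$.

In general:

Discrete: $P(A \mid B) = \frac{P(A \cap B)}{P(B)}$

Continuous: $f(x \mid y) = \frac{f(x, y)}{f(y)}$

Conditional Expectation:

Let $Z = E[X \mid \mathcal{G}]$ be the conditional expectation of X with respect to $\mathcal{G}$. Then it must fulfill the following:

(1) Z is measurable with respect to $\mathcal{G}$

(2) $\int_G X \, dP = \int_G Z \, dP$ $\forall G \in \mathcal{G}$.

Generally:

o If X is $\mathcal{G}$-measurable, then $E[X \mid \mathcal{G}] = X$.

o If X is independent of $\mathcal{G}$, then $E[X \mid \mathcal{G}] = E[X]$.

o $E[X \mid Y] = \int x f(x \mid Y) \, dx = \int x \frac{f(x, Y)}{f(Y)} \, dx$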

10.3: Properties of Conditional Expectation

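1. Linearity: If $X, Y \in L_1$ and $\alpha, \beta \in \mathbb{R}$, we have $E(\alpha X + \beta Y \mid \mathcal{G}) = \alpha E(X \mid \mathcal{G}) + \beta E(Y \mid \mathcal{G})$ a.s.

2. If $X \in \mathcal{G}$ (i.e. X is $\mathcal{G}$-measurable); $X \in L_1$, then $E(X \mid \mathcal{G}) = X$ a.s.

3. $E(X \mid \{\emptyset, \Omega\}) = E(X)$

4. Monotonicity: If $X \ge 0$ and $X \in L_1$, then $E(X \mid \mathcal{G}) \ge 0$ a.s.

5. Modulus Inequality: If $X \in L_1$, then $|E(X \mid \mathcal{G})| \le E(|X| \mid \mathcal{G})$.

6. Monotone Convergence Theorem: If $X \in L_1$, $0 \le X_n \uparrow X$, then $E(X_n \mid \mathcal{G}) \uparrow E(X \mid \mathcal{G})$.

7. Monotone Convergence implies the Fatou Lemma (for $X_n \ge 0$ in a; for $X_n \le Z \in L_1$ in b):

a. $E(\liminf_n X_n \mid \mathcal{G}) \le \liminf_n E(X_n \mid \mathcal{G})$

b. $\limsup_n E(X_n \mid \mathcal{G}) \le E(\limsup_n X_n \mid \mathcal{G})$

8. Fatou implies Dominated Convergence: If $X_n \in L_1$, $|X_n| \le Z \in L_1$ and $X_n \to X_\infty$, then $E(\lim_n X_n \mid \mathcal{G}) = \lim_n E(X_n \mid \mathcal{G})$ a.s.

9. Product Rule: Let X,Y be random variables satisfying X, YX $\in L_1$. If $Y \in \mathcal{G}$, then $E(XY \mid \mathcal{G}) = Y E(X \mid \mathcal{G})$ a.s.

10. Smoothing: If $\mathcal{G}_1 \subset \mathcal{G}_2$, then for $X \in L_1$,

a. $E(E(X \mid \mathcal{G}_2) \mid \mathcal{G}_1) = E(X \mid \mathcal{G}_1)$

b. $E(E(X \mid \mathcal{G}_1) \mid \mathcal{G}_2) = E(X \mid \mathcal{G}_1)$

NOTE: The smallest $\sigma$-field always wins.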

10.4: Martingales

Martingales:

DFN: {Sn} is called a martingale with respect to {Bn} if

(a) Sn is measurable with respect to Bn $\forall n$

(b) E[Sn+1 | Bn] = Sn

If (b) holds with “$\le$” in place of “=”, then it is a supermartingale. If “$\ge$”, then it is a submartingale.

o Submartingales tend to increase

o Supermartingales tend to decrease

If {Sn} is a martingale, then E[Sn+k | Bn] = Sn for k=1,2,…

If {Sn} is a martingale, then E[Sn] = constant

10.5: Martingales

10.6: Connections between Martingales and Submartingales

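Any submartingale $\{(X_n, \mathcal{B}_n), n \ge 0\}$ can be written in a unique way as the sum of a martingale $\{(M_n, \mathcal{B}_n), n \ge 0\}$ and an increasing process $\{A_n, n \ge 0\}$. i.e. $X_n = M_n + A_n$

10.7: Stopping Times

A mapping $\nu : \Omega \to \{0, 1, 2, \ldots, \infty\}$ is a stopping time if $[\nu = n] \in \mathcal{B}_n$, $n = 0, 1, 2, \ldots$.

To understand this concept, consider a sequence of gambles. Then $\nu$ is the rule for when to stop and $\mathcal{B}_n$ is the information accumulated up to time n. You decide whether or not to stop after the nth gamble based on information available up to and including the nth gamble.

Optional Stopping Theorem: If Sn is a martingale and $\nu_1 \le \nu_2 \le \nu_3 \le \cdots$ are well-behaved stopping times, then the sequence $S_{\nu_1}, S_{\nu_2}, S_{\nu_3}, \ldots$ is a martingale with respect to $\mathcal{B}_{\nu_1}, \mathcal{B}_{\nu_2}, \mathcal{B}_{\nu_3}, \ldots$.

Consequences:

$E(S_{\nu_1}) = E(S_{\nu_2}) = E(S_{\nu_3}) = \cdots$

If we let $\nu_1 = 0$ and $\nu_2 = \nu$, then $E(S_0) = E(S_\nu)$.

$\nu$ is well-behaved if:

(a) $P(\nu < \infty) = 1$

(b) $E|S_\nu| < \infty$

(c) $E(S_n; \nu > n) \to 0$ as $n \to \infty$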

10.9: Examples

Gambler’s Ruin

Basic concept: Imagine you initially have K dollars and you place $1 bet. You continue to bet

$1 after each win/loss. Playing this “game” called Gambler’s ruin, the ultimate question is, will

you hit $0 or $N first?

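Formally written, suppose $\{Y_n\}$ are iid Bernoulli random variables (i.e. win/lose 1 dollar) satisfying $P(Y_n = \pm 1) = \frac{1}{2}$, and let $X_0 = j_0$ (i.e. initial balance), $X_n = \sum_{i=1}^{n} Y_i + j_0$, $n \ge 1$ (i.e. balance after the nth bet). This is a simple random walk starting at $j_0$. Assume $0 \le j_0 \le N$. Will the random walk hit 0 or N first?

Let $\nu$ be the first time the walk hits 0 or N. Then

$X_\nu = 0$ with probability $p = 1 - \frac{j_0}{N}$

$X_\nu = N$ with probability $1 - p = \frac{j_0}{N}$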
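For the fair walk, the classical answer is that N is reached before 0 with probability j0/N. A quick Monte Carlo sketch can be compared against that value; the seed, trial count, and function names below are arbitrary choices for the illustration.

```python
import random

def hits_top_first(j0, top, rng):
    """Run one fair +/-1 random walk from j0 until it hits 0 or `top`."""
    position = j0
    while 0 < position < top:
        position += rng.choice((-1, 1))
    return position == top

def estimate_win_probability(j0, top, trials=20_000, seed=0):
    """Estimate P(walk hits `top` before 0) by simulation."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    wins = sum(hits_top_first(j0, top, rng) for _ in range(trials))
    return wins / trials

j0, top = 3, 10
# Simulated frequency next to the theoretical value j0 / top = 0.3.
print(estimate_win_probability(j0, top), j0 / top)
```

The estimate fluctuates from seed to seed, but with this many trials it should land within a couple of percentage points of 0.3.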

Branching Process

Basic concept: Consider a population of individuals who reproduce independently. Let X be a random variable with offspring distribution $p_k = P(X = k)$, $k = 0, 1, 2, \ldots$ and let m = E[X]. Start with one individual (easiest to think of a single-celled organism) who has children according to the offspring distribution. The children then have children of their own, independently, based on the same distribution. Let $Z_n$ be the number of individuals in the nth generation ($Z_0 = 1$). The process $\{Z_n\}$ is called a simple branching process or Galton-Watson process.

NOTE: $Z_n = \sum_{k=1}^{Z_{n-1}} X_k$, where $X_k$ is the number of children of the kth individual in generation (n-1). The $X_k$ are iid.

$E(Z_n) = m^n$, hence as $n \to \infty$:

$E(Z_n) \to 0$ if m < 1 (extinction)

$E(Z_n) = 1$ if m = 1 (stagnation)

$E(Z_n) \to \infty$ if m > 1 (growth)

Extinction: $E = \bigcup_n \{Z_n = 0\} = \{\lim_n Z_n = 0\}$

Let $p_k = P(X = k)$, $k = 0, 1, 2, \ldots$. The function $\varphi(s) = E(s^X) = \sum_{k=0}^{\infty} s^k p_k$ is called the probability generating function (pgf) of X.

Let q = P(E), then you can solve for q via the equation $s = \varphi(s)$; q is its smallest solution in [0, 1].

m < 1 $\Rightarrow$ q = 1 (subcritical)

m = 1 $\Rightarrow$ q = 1 (critical, assuming $p_1 < 1$)

m > 1 $\Rightarrow$ q < 1 (supercritical)
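The extinction probability can be approximated by iterating the pgf starting from 0, since the iterates equal P(Z_n = 0) and increase to the smallest fixed point. The sketch below uses a made-up offspring distribution (p0 = 1/4, p1 = 1/4, p2 = 1/2); the function names are hypothetical.

```python
def pgf(s, offspring_probs):
    """Probability generating function: sum_k s**k * p_k."""
    return sum(p * s**k for k, p in enumerate(offspring_probs))

def extinction_probability(offspring_probs, iterations=500):
    """Iterate q <- pgf(q) from q = 0; the iterates increase to the smallest fixed point."""
    q = 0.0
    for _ in range(iterations):
        q = pgf(q, offspring_probs)
    return q

# Hypothetical offspring distribution: 0, 1 or 2 children.
offspring = [0.25, 0.25, 0.5]
mean_offspring = sum(k * p for k, p in enumerate(offspring))
print(mean_offspring, extinction_probability(offspring))
```

Here m = 1.25 > 1 and the fixed-point equation s = 1/4 + s/4 + s²/2 has roots 1/2 and 1, so the iteration approaches q = 1/2, in line with the supercritical case.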

Useful identity: Let $X \ge 0$ with mean $\mu$ and variance $\sigma^2$ (finite 2nd moment). Then $P(X > 0) \ge \frac{\mu^2}{E(X^2)} = \frac{\mu^2}{\mu^2 + \sigma^2}$.

THM: Let $W_n = \frac{Z_n}{m^n}$ (actual population size divided by expected population size). Then Wn is a martingale with respect to the $\sigma$-field $\mathcal{B}_n = \sigma(Z_0, \ldots, Z_n)$.

COR: There exists an integrable random variable W such that $W_n \to W$ a.s.

P(W=0) is either 1 or q.

If variance is finite, then P(W=0)=q.

PPN: In a martingale, if 2nd moments are bounded, then $S_n \xrightarrow{L_2} S$.

10.10: Martingale and Submartingale Convergence

Doob’s Theorem: If $\{S_n\}$ is a submartingale such that $\sup_n E(S_n^+) < \infty$, then there exists an integrable random variable S such that $S_n \to S$ a.s.

PPN: Nonnegative martingales always converge.