Abstract - JP InfoTech

advertisement

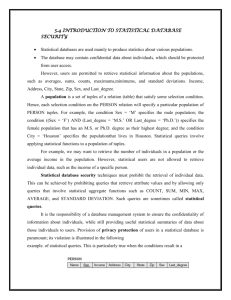

Image Search Reranking With Query-Dependent Click-Based Relevance Feedback

ABSTRACT: Our goal is to boost text-based image search results via image reranking. Images have diverse modalities (features) that we can leverage for reranking; however, the effects of different modalities are query-dependent. The primary challenge we face is how to fuse multiple modalities adaptively for different queries, a problem that previous reranking research has often overlooked. Moreover, multimodality fusion without an understanding of the query is risky and may lead to incorrect judgments in reranking. Therefore, to obtain the best fusion weights for each query, in this paper we leverage click-through data, which can be viewed as “implicit” user feedback and an effective means of understanding the query. A novel reranking algorithm, called click-based relevance feedback, is proposed. This algorithm uses click-through data to identify user search intention, while leveraging a multiple kernel learning algorithm to adaptively learn the query-dependent fusion weights for multiple modalities. We conduct experiments on a real-world dataset with click-through data collected from a commercial search engine. The experimental results demonstrate that the proposed reranking approach improves the NDCG@10 of the initial search results by 11.62% and outperforms several existing approaches for most kinds of queries, including tail, middle, and top queries.

EXISTING SYSTEM: Existing image search re-ranking methods follow two primary approaches: visual pattern mining and multi-modality fusion. The former focuses on mining visual recurrent patterns from the initial ranked list based on two assumptions: 1) the re-ranked list should not change too much from the initial ranked list, and 2) visually similar images should be ranked in close proximity to each other.
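The two visual-pattern-mining assumptions can be made concrete with a small Java sketch. It is a hypothetical illustration, not a method from the paper or from any specific prior work: each image is assumed to have a text-based initial score and a single visual feature vector, similarity is an assumed Gaussian kernel with bandwidth sigma, and the new score blends an image's own initial score with the support it receives from visually similar, highly ranked images. The mixing weight alpha controls how far the list may move from the initial ranking (assumption 1), while the similarity-weighted support gives visually similar images similar boosts and leaves visual outliers behind (assumption 2).

```java
import java.util.Arrays;

/**
 * Hypothetical visual-consensus re-ranker: a sketch of the two visual-pattern-mining
 * assumptions, not the method of any specific paper.
 */
final class VisualConsensusRerank {

    /** Gaussian similarity exp(-||a - b||^2 / (2 sigma^2)); sigma is an assumed bandwidth. */
    static double similarity(double[] a, double[] b, double sigma) {
        double d2 = 0.0;
        for (int k = 0; k < a.length; k++) {
            double diff = a[k] - b[k];
            d2 += diff * diff;
        }
        return Math.exp(-d2 / (2.0 * sigma * sigma));
    }

    /**
     * newScore_i = (1 - alpha) * r0_i + alpha * mean over j != i of sim(i, j) * r0_j.
     * A small alpha keeps the list close to the initial ranking (assumption 1); the
     * similarity-weighted support gives visually similar images similar boosts (assumption 2).
     */
    static double[] rerank(double[][] features, double[] initialScores, double alpha, double sigma) {
        int n = features.length;
        double[] scores = new double[n];
        for (int i = 0; i < n; i++) {
            double support = 0.0;
            for (int j = 0; j < n; j++) {
                if (j != i) {
                    support += similarity(features[i], features[j], sigma) * initialScores[j];
                }
            }
            support /= Math.max(1, n - 1);
            scores[i] = (1.0 - alpha) * initialScores[i] + alpha * support;
        }
        return scores;
    }

    /** Image indices sorted by descending score (stable for ties). */
    static int[] rankOrder(double[] scores) {
        Integer[] idx = new Integer[scores.length];
        for (int i = 0; i < idx.length; i++) {
            idx[i] = i;
        }
        Arrays.sort(idx, (a, b) -> Double.compare(scores[b], scores[a]));
        return Arrays.stream(idx).mapToInt(Integer::intValue).toArray();
    }

    public static void main(String[] args) {
        // Toy colour descriptors: images 0, 2 and 3 look alike; image 1 is a visual outlier.
        double[][] features = { {0.90, 0.10}, {0.10, 0.90}, {0.88, 0.12}, {0.86, 0.14} };
        double[] initial = {1.0, 0.9, 0.8, 0.7}; // text-based scores, initial order 0, 1, 2, 3
        double[] scores = rerank(features, initial, 0.5, 0.3);
        System.out.println(Arrays.toString(rankOrder(scores))); // prints [0, 2, 3, 1]
    }
}
```

With these toy values the visual outlier (image 1) falls from second place to last, and setting alpha to 0 reproduces the initial ranking exactly. Because the sketch uses a single feature vector, it shares the limitation of this category: it has no notion of how to weight several modalities.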
Visual pattern mining treats different modalities independently and seldom deals with the problem of how to fuse multiple modalities, e.g., color, texture, and shape. In contrast, multi-modality fusion aims to learn modality weights, in a linear or non-linear way, that combine the modalities to achieve better re-ranking performance.

DISADVANTAGES OF EXISTING SYSTEM: It is known that the effects of modalities are query-dependent. For instance, for queries like “heart” and “sun,” the color feature may be more useful, while for queries such as “buildings,” the texture feature will be more effective. Learning appropriate modality weights for each query, however, is a nontrivial problem that existing research has often overlooked. Although both directions of image search re-ranking have made great progress, determining whether an image is relevant to the search query remains challenging. Sufficient explicit user feedback is hard to obtain, because users are often reluctant to provide feedback to search engines. Search engines can, however, record the queries issued by users and the corresponding clicked images.

PROPOSED SYSTEM: We use click-through data and multiple kernel learning algorithms simultaneously to boost image search performance. To the best of our knowledge, this is the first attempt to combine click-through data with multiple kernel learning for multi-modality fusion in image search re-ranking. We propose a novel image search re-ranking method, called click-based relevance feedback, which transforms image re-ranking into a classification problem. It uses the clicked images as positive data and images from other queries as negative data to improve classification accuracy, and it automatically learns the fusion weight of each modality for different queries at the feature level.
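The classification view of click-based relevance feedback can be sketched in Java as follows. This is a simplified, hypothetical stand-in rather than the authors' algorithm: instead of solving a multiple kernel learning problem, it weights each modality's Gaussian kernel by its (uncentred) kernel-target alignment with the click labels, where images clicked for the query are labelled +1 and images from other queries -1, and it scores a candidate image by a weighted kernel vote over the labelled images. The class and method names, the shared bandwidth sigma, and the toy colour and texture descriptors are all assumptions; note also that these weights act on per-modality kernels, whereas the text describes learning weights at the feature level.

```java
import java.util.Arrays;

/**
 * Hypothetical sketch of click-based relevance feedback with query-dependent modality
 * weights. Kernel-target alignment is used as a simple stand-in for multiple kernel learning.
 */
final class ClickFeedbackFusion {

    /** Gaussian (RBF) kernel; sigma is an assumed bandwidth shared by all modalities. */
    static double rbf(double[] a, double[] b, double sigma) {
        double d2 = 0.0;
        for (int k = 0; k < a.length; k++) {
            double diff = a[k] - b[k];
            d2 += diff * diff;
        }
        return Math.exp(-d2 / (2.0 * sigma * sigma));
    }

    /**
     * features[m][i] is the modality-m descriptor of training image i; labels[i] is +1 for an
     * image clicked for this query and -1 for an image taken from another query.
     * Returns non-negative weights that sum to 1.
     */
    static double[] modalityWeights(double[][][] features, int[] labels, double sigma) {
        int modalities = features.length;
        int n = labels.length;
        double[] weights = new double[modalities];
        double total = 0.0;
        for (int m = 0; m < modalities; m++) {
            double inner = 0.0;  // <K_m, y y^T>_F
            double normSq = 0.0; // ||K_m||_F^2
            for (int i = 0; i < n; i++) {
                for (int j = 0; j < n; j++) {
                    double k = rbf(features[m][i], features[m][j], sigma);
                    inner += labels[i] * labels[j] * k;
                    normSq += k * k;
                }
            }
            // ||y y^T||_F equals n when every label is +1 or -1.
            double alignment = inner / (Math.sqrt(normSq) * n);
            weights[m] = Math.max(0.0, alignment); // ignore modalities that disagree with the clicks
            total += weights[m];
        }
        for (int m = 0; m < modalities; m++) {
            // Fall back to uniform weights if no modality aligns with the click labels.
            weights[m] = total > 0.0 ? weights[m] / total : 1.0 / modalities;
        }
        return weights;
    }

    /** Relevance score of a candidate: a kernel-weighted vote of the labelled images. */
    static double score(double[][] candidate, double[][][] features, int[] labels,
                        double[] weights, double sigma) {
        double sum = 0.0;
        for (int i = 0; i < labels.length; i++) {
            double fused = 0.0;
            for (int m = 0; m < weights.length; m++) {
                fused += weights[m] * rbf(candidate[m], features[m][i], sigma);
            }
            sum += labels[i] * fused;
        }
        return sum / labels.length;
    }

    public static void main(String[] args) {
        // Modality 0 = colour, modality 1 = texture (toy 2-D descriptors).
        // Images 0 and 1 were clicked for the query; images 2 and 3 come from other queries.
        double[][][] features = {
            { {0.90, 0.10}, {0.85, 0.15}, {0.10, 0.90}, {0.15, 0.85} },
            { {0.20, 0.80}, {0.80, 0.20}, {0.25, 0.75}, {0.75, 0.25} }
        };
        int[] labels = {1, 1, -1, -1};
        double[] weights = modalityWeights(features, labels, 0.3);
        System.out.println("fusion weights (colour, texture) = " + Arrays.toString(weights));

        double[][] sunLike = { {0.88, 0.12}, {0.5, 0.5} };
        double[][] offTopic = { {0.12, 0.88}, {0.5, 0.5} };
        System.out.println("sun-like: " + score(sunLike, features, labels, weights, 0.3));
        System.out.println("off-topic: " + score(offTopic, features, labels, weights, 0.3));
    }
}
```

In the toy data the two clicked images share a colour but not a texture, so almost all of the weight goes to the colour modality; the sun-like candidate then receives a positive score and the off-topic one a negative score, in line with the “sun” example given for the existing system. A query whose clicked images agreed on texture would shift the weight toward the texture kernel instead. A fuller implementation would replace the alignment heuristic with an MKL solver that optimises the weights jointly with the classifier.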
ADVANTAGES OF PROPOSED SYSTEM: We not only report overall performance that validates the effectiveness of the proposed re-ranking approach, click-based relevance feedback, through comparisons with several re-ranking approaches, but also verify the flexibility of our approach on various kinds of queries. Experiments conducted on a real-world dataset demonstrate the usefulness of click-through data, which can be viewed as the footprints of user behavior, in understanding user intention, and they verify the importance of query-dependent fusion weights for multiple modalities.

SYSTEM ARCHITECTURE: [Diagram components: User, Search, Log file, Response]

MODULES:
1) User-Specific Topic Modeling
2) Feedback Sessions
3) Click-Through Data Analysis
4) Evaluation of Different Queries
5) Pseudo Check Server

MODULES DESCRIPTION:

User-Specific Topic Modeling: Users may have different intentions for the same query; e.g., a search for “jaguar” by a car fan has a completely different meaning from the same search by an animal specialist. One solution to this problem is personalized search, where user-specific information is used to distinguish the exact intention behind each user query and to re-rank the result list. Given the large and growing importance of search engines, personalized search has the potential to significantly improve the search experience.

Feedback Sessions: We focus on inferring user search goals for a particular query. Therefore, the single session, which contains only one query, is introduced to distinguish it from the conventional session. The feedback session in this paper is based on a single session, although it can be extended to the whole session. The proposed feedback session consists of both clicked and unclicked URLs and ends with the last URL that was clicked in a single session. The motivation is that, before the last click, all the URLs have been scanned and evaluated by the user.
Therefore, besides the clicked URLs, the unclicked URLs that precede the last click should also be treated as part of the user feedback.

Click-Through Data Analysis: We collected query logs from an image search engine. The query logs are plain-text files that contain one line for each HTTP request served by the Web server. To analyze the click-through bipartite graph, we used all the queries in the log that have at least one click.

Evaluation of Different Queries: After the overall evaluation on all 60 queries in our dataset, we evaluate the proposed re-ranking approaches at the query-category level. We group the 60 queries in our dataset in two ways. First, according to the number of clicked images for a given query, we categorize queries into top queries, middle queries, and tail queries. For tail queries, the performance of click-boosting (CB) is worse than the baseline. Because CB is particularly useful for queries where clicks correlate strongly with relevance, this result is understandable: the number of clicked images for tail queries is extremely small, and some images may have been clicked because of user preferences or interests rather than image relevance.

SYSTEM REQUIREMENTS:

HARDWARE REQUIREMENTS:
System : Pentium IV 2.4 GHz.
Hard Disk : 40 GB.
Floppy Drive : 1.44 MB.
Monitor : 15-inch VGA Colour.
Mouse : Logitech.
RAM : 512 MB.

SOFTWARE REQUIREMENTS:
Operating system : Windows XP/7.
Coding Language : JAVA/J2EE
IDE : Netbeans 7.4
Database : MYSQL

REFERENCE: Yongdong Zhang, Xiaopeng Yang, and Tao Mei, “Image Search Reranking With Query-Dependent Click-Based Relevance Feedback,” IEEE Transactions on Image Processing, vol. 23, no. 10, October 2014.