Warming - Open Evidence Project

Topicality

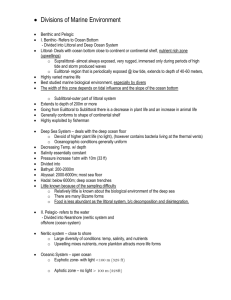

Topicality 1NC- Oceans

A. Interpretation- the Earth’s Oceans are the 5 major oceans

NALMS 14 – North American Lake Management Society, “WATER WORDS GLOSSARY”,

http://www.nalms.org/home/publications/water-words-glossary/O.cmsx

OCEAN

Generally, the whole body of salt water which covers nearly three fourths of the surface of the globe.

The average depth of the ocean is estimated to be about 13,000 feet (3,960 meters); the greatest

reported depth is 34,218 feet (10,430 meters), north of Mindanao in the Western Pacific Ocean. The

ocean bottom is a generally level or gently undulating plain, covered with a fine red or gray clay, or, in

certain regions, with ooze of organic origin. The water, whose composition is fairly constant, contains on

the average 3 percent of dissolved salts; of this solid portion, common salt forms about 78 percent,

magnesium salts 15-16 percent, calcium salts 4 percent, with smaller amounts of various other

substances. The density of ocean water is about 1.026 (relative to distilled water, or pure H2O). The

oceans are divided into the Atlantic, Pacific, Indian, Arctic, and Antarctic Oceans.

And, the federal government is the central government, distinguished from the states

OED 89 (Oxford English Dictionary, 2ed. XIX, p. 795)

b. Of or pertaining to the political unity so constituted, as distinguished from the separate states

composing it.

B. Violation- the IOOS is not limited to USFG action- it includes state, regional, and

private sectors

IOOS report to congress 13 [Official US IOOS report sent to congress. 2013, “U.S. Integrated Ocean Observing System (U.S. IOOS) 2013

Report to Congress,” http://www.ioos.noaa.gov/about/governance/ioos_report_congress2013.pdf //jweideman]

U.S. IOOS works with its eyes on the future. The successes of U.S. IOOS are achieved through cooperation and

coordination among Federal agencies, U.S. IOOS Regional Associations, State and regional agencies, and the private

sector. This cooperation and coordination requires a sound governance and management structure. In 2011 and 2012, program milestones

called for in U.S. IOOS legislation were achieved, laying the groundwork for more success in the future. First, the U.S. IOOS Advisory Committee

was established. Second, the Independent Cost Estimate was delivered to Congress. As part of the estimate, each of the 11 U.S. IOOS Regional

Associations completed 10-year build-out plans, describing services and products to address local user needs and outlining key assets required

to meet the Nation’s greater ocean-observing needs.

And, the IOOS also applies to the Great Lakes

NOS and NOAA 14 [Federal Agency Name(s): National Ocean Service (NOS), National Oceanic and Atmospheric Administration (NOAA), Department

of Commerce Funding Opportunity Title: FY2014 Marine Sensor and Other Advanced Observing Technologies Transition Project. “ANNOUNCEMENT OF FEDERAL

FUNDING OPPORTUNITY EXECUTIVE SUMMARY,” http://www.ioos.noaa.gov/funding/fy14ffo_msi_noaa_nos_ioos_2014_2003854.pdf //jweideman]

1. Marine Sensor Transition Topic: U.S.

IOOS seeks to increase the rate that new or existing marine sensor technologies are

transitioned into operations mode in order to facilitate the efficient collection of ocean, coastal and Great Lakes observations. The

Marine Sensor Transition topic is focused on transitioning marine sensors from research to operations mode to meet the demonstrated

operational needs of end-users. Letters of Intent (LOIs) are being solicited for this topic with particular emphasis on a) projects

comprised of multi-sector teams of partners, b) projects that will meet the demonstrated operational needs of end-users, and c) sensors that

are at or above TRL 6. Applicants with sensors for ocean acidification that are at or above TRL 6 are also eligible to apply to this topic if they

have strong commitments for operational transition

C. Voting issue for fairness and ground- extra topicality forces the neg to waste

time debating T just to get back to square one, and it allows the aff to gain

extra advantages, counterplan answers, and link turns to disads

2NC Impact-Education

Definitions are key to education about IOOS

IOOS report to congress 13 [Official US IOOS report sent to congress. 2013, “U.S. Integrated Ocean Observing System (U.S. IOOS) 2013 Report to

Congress,” http://www.ioos.noaa.gov/about/governance/ioos_report_congress2013.pdf //jweideman]

The use of standard terms or vocabularies to describe ocean observations data is critical to facilitating

broad sharing and integration of data. Working closely with SECOORA and the community-based Marine Metadata Interoperability

Project, U.S. IOOS has published nine recommended vocabularies over the past 12 months for review by the ocean

observing community including lists for platforms, parameters, core variables, and biological terms. These efforts are helping lead the ocean

observing community towards significantly improved levels of consistency via an improved semantic framework through

which users can adopt recommended vocabularies or convert their vocabularies to terms that are

perhaps used more widely.

2NC Cards- Oceans

IOOS includes monitoring the Great Lakes

IOOS report to congress 13 [Official US IOOS report sent to congress. 2013, “U.S. Integrated Ocean Observing System (U.S. IOOS) 2013 Report to

Congress,” http://www.ioos.noaa.gov/about/governance/ioos_report_congress2013.pdf //jweideman]

The IOOC recognizes that U.S. IOOS must be responsive to environmental crises while maintaining the regular

long-term ocean observation infrastructure required to support operational oceanography and climate

research. As a source of our Nation’s ocean data and products, U.S. IOOS often serves as a resource for the

development of targeted applications for a specific location or sector. At the same time, U.S. IOOS organizes

data from across regions and sectors to foster the national and international application of local data and

products broadly across oceans, coasts, and Great Lakes. Events over the last few years, including

Hurricane Sandy and the Deep Water Horizon oil spill have awakened U.S. communities to the value and

necessity of timely ocean information. IOOC commends U.S. IOOS for responsive and capable support to

the Nation in these events in addition to diverse everyday support to the Nation’s maritime economy.

We have much more work to do to build and organize the ocean-observing infrastructure of the Nation and look forward to working with

congress on this continuing challenge.

Ocean exploration is distinct from Great Lakes observation

COR 01 ~ Committee On Resources, “OCEAN EXPLORATION AND COASTAL AND OCEAN OBSERVING

SYSTEMS”, Science Serial No. 107–26 Resources Serial No. 107–47, Accessed 7/3/14 //RJ

On a summer day, our eyes and ears can sense an approaching thunderstorm. Our senses are extended

by radar and satellites to detect advancing storm systems. Our senses are being extended yet again to

anticipate changing states affecting coasts and oceans, our environment, and our climate. To truly

understand the consequences of our actions on the environment and the environment’s impact on

us, data obtained through ocean exploration, coastal observations, and ocean observations will be

critical.

‘‘Coastal observations’’ include observations in the Nation’s ports, bays, estuaries, Great

Lakes, the waters of the EEZ, and adjacent land cover. Some of the properties measured in coastal

zones, such as temperature and currents, are the same as those measured in the larger, basin-scale

ocean observation systems. However, the users and applications of those data can be quite different.

For those properties that are similar, there should be a consistent plan for deployment in the coastal

and open ocean systems so that coastal observations represent a nested hierarchy of observations

collected at higher resolution than those from the open ocean.

“Oceans” are only the 5 major bodies of water – landlocked and adjacent lakes and

rivers are excluded.

Rosenberg 14 ~ Matt Rosenberg, Master's in Geography from CSU, “Names for Water Bodies”,

http://geography.about.com/od/physicalgeography/a/waterbodies.htm, accessed 7/3/14 //RJ

Water bodies are described by a plethora of different names in English - rivers, streams, ponds, bays,

gulfs, and seas, to name a few. Many of these terms' definitions overlap and thus become confusing

when one attempts to pigeon-hole a type of water body. Read on to find out the similarities (and

differences) between terms used to describe water bodies.

We'll begin with the different forms of flowing water. The smallest water channels are often called

brooks but creeks are often larger than brooks but may either be permanent or intermittent. Creeks are

also sometimes known as streams but the word stream is quite a generic term for any body of flowing

water. Streams can be intermittent or permanent and can be on the surface of the earth, underground,

or even within an ocean (such as the Gulf Stream).

A river is a large stream that flows over land. It is often a perennial water body and usually flows in a

specific channel, with a considerable volume of water. The world's shortest river, the D River, in Oregon,

is only 120 feet long and connects Devil's Lake directly to the Pacific Ocean.

A pond is a small lake, most often in a natural depression. Like a stream, the word lake is quite a generic

term - it refers to any accumulation of water surrounded by land - although it is often of a considerable

size. A very large lake that contains salt water, is known as a sea (except the Sea of Galilee, which is

actually a freshwater lake).

A sea can also be attached to, or even part of, an ocean. For example, the Caspian Sea is a large saline

lake surrounded by land, the Mediterranean Sea is attached to the Atlantic Ocean, and the Sargasso Sea

is a portion of the Atlantic Ocean, surrounded by water.

Oceans are the ultimate bodies of water and refers to the five oceans - Atlantic, Pacific, Arctic,

Indian, and Southern. The equator divides the Atlantic Ocean and Pacific Oceans into the North and

South Atlantic Ocean and the North and South Pacific Ocean.

The plan explodes ground- includes the Great Lakes

NOS and NOAA 14 [Federal Agency Name(s): National Ocean Service (NOS), National Oceanic and Atmospheric Administration (NOAA), Department of

Commerce Funding Opportunity Title: FY2014 Marine Sensor and Other Advanced Observing Technologies Transition Project. “ANNOUNCEMENT OF FEDERAL

FUNDING OPPORTUNITY EXECUTIVE SUMMARY,” http://www.ioos.noaa.gov/funding/fy14ffo_msi_noaa_nos_ioos_2014_2003854.pdf //jweideman]

1. Marine Sensor Transition Topic: U.S.

IOOS seeks to increase the rate that new or existing marine sensor technologies are

transitioned into operations mode in order to facilitate the efficient collection of ocean, coastal and Great Lakes observations. The

Marine Sensor Transition topic is focused on transitioning marine sensors from research to operations mode to meet the demonstrated

operational needs of end-users. Letters of Intent (LOIs) are being solicited for this topic with particular emphasis on a) projects

comprised of multi-sector teams of partners, b) projects that will meet the demonstrated operational needs of end-users, and c) sensors that

are at or above TRL 6. Applicants with sensors for ocean acidification that are at or above TRL 6 are also eligible to apply to this topic if they

have strong commitments for operational transition

2NC Cards- USFG

The data sharing components are the critical part of IOOS- they can’t say they just

don’t do the extra-topical parts

IOOS report to congress 13 [Official US IOOS report sent to congress. 2013, “U.S. Integrated Ocean Observing System (U.S. IOOS) 2013

Report to Congress,” http://www.ioos.noaa.gov/about/governance/ioos_report_congress2013.pdf //jweideman]

Observations are of little value if they cannot be found, accessed, and transformed into useful products.

The U.S. IOOS Data Management and Communications subsystem, or “DMAC,” is the central operational

infrastructure for assessing, disseminating, and integrating existing and future ocean observations data.

As a core functional component for U.S. IOOS, establishing DMAC capabilities continues to be a principal

focus for the program and a primary responsibility of the U.S. IOOS Program Office in NOAA. Importance and

Objectives of DMAC Although DMAC implementation remains a work in progress, a fully implemented DMAC

subsystem will be capable of delivering real-time, delayed-mode, and historical data. The data will

include in situ and remotely sensed physical, chemical, and biological observations as well as model-generated outputs, including forecasts, to U.S. IOOS users and of delivering all forms of data to and from

secure archive facilities. Achieving this requires a governance framework for recommending and

promoting standards and policies to be implemented by data providers across the U.S. IOOS enterprise,

to provide seamless long-term preservation and reuse of data across regional and national boundaries and across disciplines. The governance

framework includes tools for data access, distribution, discovery, visualization, and analysis; standards for metadata, vocabularies, and quality

control and quality assurance; and procedures for the entire ocean data life cycle. The DMAC design must be responsive to user needs and it

must, at a minimum, make data and products discoverable and accessible, and provide essential metadata regarding sources, methods, and

quality. The overall DMAC objectives are for U.S. IOOS data providers to develop and maintain capabilities to: • Deliver

accurate and

timely ocean observations and model outputs to a range of consumers; including government,

academic, private sector users, and the general public; using specifications common across all providers

• Deploy the information system components (including infrastructure and relevant personnel) for full

life-cycle management of observations, from collection to product creation, public delivery, system documentation, and

archiving • Establish robust data exchange responsive to variable customer requirements as well as routine feedback, which is not tightly bound

to a specific application of the data or particular end-user decision support tool. U.S.

IOOS data providers therefore are being encouraged to address

the following DMAC-specific objectives: • A standards-based foundation for DMAC capabilities: U.S.

IOOS partners must clearly demonstrate how they will ensure the establishment and maintenance of a

standards-based approach for delivering their ocean observations data and associated products to users through local,

regional and global/international data networks • Exposure of and access to coastal ocean observations: U.S. IOOS partners must describe

how they will ensure coastal ocean observations are exposed to users via a service-oriented architecture

and recommended data services that will ensure increased data interoperability including the use of

improved metadata and uniform quality-control methods • Certification and governance of U.S. IOOS data and products:

U.S. IOOS partners must present a description of how they will participate in establishing an effective U.S. IOOS governance process for data

certification standards and compliance procedures. This objective is part of an overall accreditation process which includes the other U.S. IOOS

subsystems (observing, modeling and analysis, and governance)

“Federal Government” means the United States government

Black’s Law 99 (Dictionary, Seventh Edition, p.703)

The U.S. government—also termed national government

National government, not states or localities

Black’s Law 99 (Dictionary, Seventh Edition, p.703)

A national government that exercises some degree of control over smaller political units that have

surrendered some degree of power in exchange for the right to participate in national political matters

Central government

AHD 92 (American Heritage Dictionary of the English Language, p. 647)

federal—3. Of or relating

to the central government of a federation as distinct from the governments of its member

units.

IOOS includes state, regional, and private sectors

IOOS report to congress 13 [Official US IOOS report sent to congress. 2013, “U.S. Integrated Ocean Observing System (U.S. IOOS) 2013 Report to

Congress,” http://www.ioos.noaa.gov/about/governance/ioos_report_congress2013.pdf //jweideman]

U.S. IOOS works with its eyes on the future. The successes of U.S. IOOS are achieved through cooperation and

coordination among Federal agencies, U.S. IOOS Regional Associations, State and regional agencies, and the private

sector. This cooperation and coordination requires a sound governance and management structure. In 2011 and 2012, program milestones

called for in U.S. IOOS legislation were achieved, laying the groundwork for more success in the future. First, the U.S. IOOS Advisory Committee

was established. Second, the Independent Cost Estimate was delivered to Congress. As part of the estimate, each of the 11 U.S. IOOS Regional

Associations completed 10-year build-out plans, describing services and products to address local user needs and outlining key assets required

to meet the Nation’s greater ocean-observing needs.

Disads

Politics

1NC – Politics Link

Causes fights – anti-environmentalism

Farr 2013

Sam, Member of the House of Representatives (D-CA) Chair of House Oceans Caucus, Review&Forecast

Collaboration Helps to Understand And Adapt to Ocean, Climate Changes http://seatechnology.com/pdf/st_0113.pdf

The past year in Washington, D.C., was rife with infighting between the two political parties. On issue after issue, the opposing sides were unable to reach a compromise on meaningful legislation for the American people. This division was most

noticeable when discussing the fate of our oceans. The widening chasm between the two political

parties resulted in divergent paths for ocean policy: one with President Barack Obama pushing the National

Ocean Policy forward, and the other with U.S. House Republicans undermining those efforts with opposing

votes and funding cuts. Marine, Environmental Policy Regression The 112th Congress was called the "most anti-environmental Congress in history" in a report published by House Democrats and has been credited for undermining the major

environmental legislation of the past 40 years. After the Tea Party landslide in the congressional elections of 2010,

conservatives on Capitol Hill began to flex their muscles to roll back environmental protections. Since taking

power in January 2011, House Republicans held roughly 300 votes to undermine basic environmental protections that have existed for decades.

To put that in perspective, that was almost one in every five votes held in Congress during the past two years. These were votes to allow

additional oil and gas drilling in coastal waters, while simultaneously limiting the environmental review process for offshore drilling sites. There

were repeal attempts to undermine the Clean Water Act and to roll back protections for threatened fish and other marine species. There

were also attempts to block measures to address climate changes, ignoring the consequences of

inaction, such as sea level rise and ocean acidification.

2NC – Obama PC Link

Costs political capital, requires Obama to push

Farr 2013

Sam, Member of the House of Representatives (D-CA) Chair of House Oceans Caucus, Review&Forecast

Collaboration Helps to Understand And Adapt to Ocean, Climate Changes http://seatechnology.com/pdf/st_0113.pdf

But while the tide of sound ocean policy was retreating in Congress, it was rising on the other end of

Pennsylvania Avenue. The National Ocean Policy Understanding the need for a federal policy to address

the oceans after the Deepwater Horizon disaster, President Obama used an executive order in 2010 to

create the National Ocean Policy. Modeled after the Oceans-21 legislation I championed in Congress,

this order meant that the U.S. would have a comprehensive plan to guide our stewardship of the

oceans, coasts and Great Lakes. Despite push back from the House of Representatives, the president

continued implementing his National Ocean Policy this past year through existing law, prioritizing ocean

stewardship and promoting interagency collaboration to address the challenges facing our marine

environment. For instance, the National Oceans Council created the www.data.gov/ocean portal,

which went live just before the start of 2012 and has grown throughout the year to provide access to

data and information about the oceans and coasts. The U.S. Geological Survey and other National Ocean

Council agencies have been working with federal and state partners to identify the data sets needed by

ocean users, such as fisheries and living marine resources data, elevation and shoreline information,

and ocean observations.

2NC – NOAA Link

Congress hates increased funding for NOAA programs---the plan would be a fight

Andrew Jensen 12, Peninsula Clarion, Apr 27 2012, “Congress takes another ax to NOAA budget,”

http://peninsulaclarion.com/news/2012-04-27/congress-takes-another-ax-to-noaa-budget

Frustrated senators from coastal states are wielding the power of the purse to rein in the National Oceanic and

Atmospheric Administration and refocus the agency's priorities on its core missions.¶ During recent appropriations subcommittee hearings April 17, Sen. Lisa Murkowski

ensured no funds would be provided in fiscal year 2013 for coastal marine spatial planning, a key component of

President Barack Obama's National Ocean Policy.¶ Murkowski also pushed for an additional $3 million for regional fishery management councils and secured $15 million for the Pacific Salmon

Treaty that was in line to be cut by NOAA's proposed budget (for $65 million total).¶ On April 24, the full Senate Appropriations Committee approved the Commerce Department budget with

language inserted by Sen. John Kerry, D-Mass., and Sen. Olympia Snowe, R-Maine, into NOAA's budget that would transfer $119 million currently unrestricted funds and require they be used

for stock assessments, surveys and monitoring, cooperative research and fisheries grants.¶ The $119 million is derived from Saltonstall-Kennedy funds, which are levies collected on seafood

imports by the Department of Agriculture. Thirty percent of the import levies are transferred to NOAA annually, and without Kerry's language there are no restrictions on how NOAA may use

the funds.¶

In a Congress defined by fierce partisanship, no federal agency has drawn as much fire from

both parties as NOAA and its Administrator Jane Lubchenco.¶ Sen. Scott Brown, R-Mass., has repeatedly demanded accountability for NOAA Office of Law Enforcement

abuses uncovered by the Commerce Department Inspector General that included the use of fishermen's fines to purchase a luxury boat that was only used for joyriding around Puget Sound.¶

There is currently another Inspector General investigation under way into the regional fishery management council rulemaking process that was requested last August by Massachusetts Reps.

John Tierney and Barney Frank, both Democrats.¶ In July 2010, both Frank and Tierney called for Lubchenco to step down, a remarkable statement for members of Obama's party to make

about one of his top appointments.¶ Frank introduced companion legislation to Kerry's in the House earlier this year, where it should sail through in a body that has repeatedly stripped out

tens of millions in budget requests for catch share programs. Catch share programs are Lubchenco's favored policy for fisheries management and have been widely panned after

implementation in New England in 2010 resulted in massive consolidation of the groundfish catch onto the largest fishing vessels.¶ Another New England crisis this year with Gulf of Maine cod

also drove Kerry's action after a two-year old stock assessment was revised sharply downward and threatened to close down the fishery. Unlike many fisheries in Alaska such as pollock, crab

and halibut, there are not annual stock assessment surveys around the country.¶ Without a new stock assessment for Gulf of Maine cod, the 2013 season will be in jeopardy.¶ "I applaud

Senator Kerry for his leadership on this issue and for making sure that this funding is used for its intended purpose - to help the fishing industry, not to cover NOAA's administrative overhead,"

Frank said in a statement. "We are at a critical juncture at which we absolutely must provide more funding for cooperative fisheries science so we can base management policies on sound

data, and we should make good use of the world-class institutions in the Bay State which have special expertise in this area."¶ Alaska's Sen. Mark Begich and Murkowski, as well as Rep. Don

Young have also denounced the National Ocean Policy as particularly misguided, not only for diverting core funding in a time of tightening budgets but for creating a massive new bureaucracy

that threatens to overlap existing authorities for the regional fishery management councils and local governments.¶ The first 92 pages of the draft policy released Jan. 12 call for more than 50

actions, nine priorities, a new National Ocean Council, nine Regional Planning Bodies tasked with creating Coastal Marine Spatial Plans, several interagency committees and taskforces, pilot

projects, training in ecosystem-based management for federal employees, new water quality standards and the incorporation of the policy into regulatory and permitting decisions.¶ Some of

the action items call for the involvement of as many as 27 federal agencies. Another requires high-quality marine waters to be identified and new or modified water quality and monitoring

protocols to be established.¶ Young hosted a field hearing of the House Natural Resources Committee in Anchorage April 3 where he blasted the administration for refusing to explain exactly

how it is paying for implementing the National Ocean Policy.¶ "This National Ocean Policy is a bad idea," Young said. "It will create more uncertainty for businesses and will limit job growth. It

will also compound the potential for litigation by groups that oppose human activities. To make matters worse, the administration refuses to tell Congress how much money it will be diverting

from other uses to fund this new policy."¶ Natural Resources Committee Chairman Doc Hastings, R-Wash., sent a letter to House Appropriations Committee Chairman Hal Rogers asking that

every appropriations bill expressly prohibit any funds to be used for implementing the National Ocean Policy.¶ Another letter was sent April 12 to Rogers by more than 80 stakeholder groups

from the Gulf of Mexico to the Bering Sea echoing the call to ban all federal funds for use in the policy implementation.¶ "The risk of unintended economic and societal consequences remains

high, due in part to the unprecedented geographic scale under which the policy is to be established," the stakeholder letter states. "Concerns are further heightened because the policy has

already been cited as justification in a federal decision restricting access to certain areas for commercial activity."¶

Congress refused to fund some $27 million in budget requests for NOAA in fiscal year 2012 to implement the National Ocean Policy, but the administration released its draft implementation policy in January anyway.

Russian Oil

Russian Oil DA 1NC

Russia’s economy is at a tipping point- it’s holding on because of oil

The Economist 14 [The Economist is an economic news source. May 3 2014,

“The crisis in Ukraine is hurting an already weakening economy” http://www.economist.com/news/finance-and-economics/21601536-crisis-ukraine-hurtingalready-weakening-economy-tipping-scales //jweideman]

WESTERN measures against Russia—asset freezes and visa restrictions aimed at people and firms close

to Vladimir Putin—may be pinpricks, but the crisis in Ukraine has already taken its toll on Russia’s

economy and financial markets. Capital flight in the first three months of 2014 is thought to exceed $60 billion. The stockmarket is

down by 20% since the start of the year and the rouble has dropped by 8% against the dollar. Worries about the devaluation feeding

through to consumer prices have prompted the central bank to yank up interest rates, from 5.5% at the

start of March to 7.5%. The IMF reckons the economy is in recession; this week it cut its growth forecast for 2014 from 1.3% to 0.2%. Despite

these upsets, Russia appears to hold strong economic as well as military cards. It provides 24% of the

European Union’s gas and 30% of its oil. Its grip on Ukraine’s gas and oil consumption is tighter still. That

makes it hard for the West to design sanctions that do not backfire.

Satellite mapping finds oil- it increases production capabilities

Short 11 [Nicholas M. Short, Sr is a geologist who received degrees in that field from St. Louis University (B.S.), Washington University (M.A.), and the

Massachusetts Institute of Technology (Ph.D.); he also spent a year in graduate studies in the geosciences at The Pennsylvania State University. In his early postgraduate career, he worked for Gulf Research & Development Co., the Lawrence Livermore Laboratory, and the University of Houston. During the 1960s he

specialized in the effects of underground nuclear explosions and asteroidal impacts on rocks (shock metamorphism), and was one of the original Principal

Investigators of the Apollo 11 and 12 moon rocks. 2011, “Finding Oil and Gas from Space” https://apollomapping.com/wpcontent/user_uploads/2011/11/NASA_Remote_Sensing_Tutorial_Oil_and_Gas.pdf //jweideman]

If precious metals are not your forte, then try the petroleum industry. Exploration

for oil and gas has always depended on surface

maps of rock types and structures that point directly to, or at least hint at, subsurface conditions favorable to

accumulating oil and gas. Thus, looking at surfaces from satellites is a practical, cost-effective way to

produce appropriate maps. But verifying the presence of hydrocarbons below surface requires two

essential steps: 1) doing geophysical surveys; and 2) drilling into the subsurface to actually detect and

extract oil or gas or both. This Tutorial website sponsored by the Society of Exploration Geophysicists is a simplified summary of the basics of

hydrocarbon exploration. Oil and gas result from the decay of organisms - mostly marine plants (especially microscopic algae and similar free-floating vegetation)

and small animals such as fish - that are buried in muds that convert to shale. Heating through burial and pressure from the overlying later sediments help in the

process. (Coal forms from decay of buried plants that occur mainly in swamps and lagoons which are eventually buried by younger sediments.). The decaying liquids

and gases from petroleum source beds, dominantly shales after muds convert to hard rock, migrate from their sources to become trapped in a variety of structural

or stratigraphic conditions shown in this illustration: The oil and gas must migrate from deeper source beds into suitable reservoir rocks. These are usually porous

sandstones, but limestones with solution cavities and even fractured igneous or metamorphic rocks can contain openings into which the petroleum products

accumulate. An essential condition: the

reservoir rocks must be surrounded (at least above) by impermeable (refers

to minimal ability to allow flow through any openings - pores or fractures) rock, most commonly shales.

The oil and gas, generally confined under some pressure, will escape to the surface - either naturally when the trap is intersected by downward moving erosional

surfaces or by being penetrated by a drill. If pressure is high the oil and/or gas moves of its own accord to the surface but if pressure is initially low or drops over

time, pumping is required.

Exploration for new petroleum sources begins with a search for surface manifestations

of suitable traps (but many times these are hidden by burial and other factors govern the decision to explore). Mapping of surface

conditions begins with reconnaissance, and if that indicates the presence of hydrocarbons, then detailed

mapping begins. Originally, both of these maps required field work. Often, the mapping job became easier by using aerial photos. After the mapping, much

of the more intensive exploration depends on geophysical methods (principally, seismic) that can give 3-D constructions of subsurface structural and stratigraphic

traps for the hydrocarbons. Then, the potential traps are sampled by exploratory drilling and their properties measured. Remote

sensing from

satellites or aircraft strives to find one or more indicators of surface anomalies. This diagram sets the framework for the

approach used; this is the so-called microseepage model, which leads to specific geochemical anomalies:

New US oil production collapses Russia’s economy

Woodhill 14 [Louis, Contributor at Forbes, entrepreneur and investor. 3/3/14, “It's Time To Drive Russia Bankrupt – Again”

http://www.forbes.com/sites/louiswoodhill/2014/03/03/its-time-to-drive-russia-bankrupt-again/ //jweideman]

The high oil prices of 1980 were not real, and Reagan knew it. They were being caused by the weakness of the U.S. dollar, which had lost 94%

of its value in terms of gold between 1969 and 1980. Reagan immediately decontrolled U.S. oil prices, to unleash the supply

side of the U.S. economy. Even more importantly, Reagan backed Federal Reserve Chairman Paul Volcker’s campaign to strengthen and stabilize the

U.S. dollar. By the end of Reagan’s two terms in office, real oil prices had plunged to $27.88/bbl. As Russia

does today, the old USSR depended upon oil exports for most of its foreign exchange earnings, and

much of its government revenue. The 68% reduction in real oil prices during the Reagan years drove the

USSR bankrupt. In May 1990, Gorbachev called German Chancellor Helmut Kohl and begged him for a loan of $12 billion to stave off financial disaster. Kohl

advanced only $3 billion. By August of 1990, Gorbachev was back, pleading for more loans. In December 1991, the Soviet Union collapsed. President

Bill Clinton’s “strong dollar” policy (implemented via Federal Reserve Vice-Chairman Wayne Angell’s secret commodity price rule system) kept real oil prices low

during the 1990s, despite rising world oil demand. Real crude oil prices during Clinton’s time in office averaged only $27.16/bbl.

At real oil price levels

like this, Russia is financially incapable of causing much trouble. It was George W. Bush and Barack

Obama’s feckless “weak dollar” policy that let the Russian geopolitical genie out of the bottle. From the end of

2000 to the end of 2013, the gold value of the dollar fell by 77%, and real oil prices tripled, to $111.76/bbl. It is these artificially high oil prices that are fueling Putin’s

mischief machine. The

Russian government has approved a 2014 budget calling for revenues of $409.6 billion,

spending of $419.6 billion, and a deficit of $10.0 billion, or 0.4% of expected GDP of $2.5 trillion. Unlike

the U.S., which has deep financial markets and prints the world’s reserve currency, Russia cannot run

large fiscal deficits without creating hyperinflation. Given that Russia expects to get about half of its

revenue from taxes on its oil and gas industry, it is clear that it would not take much of a decline in

world oil prices to create financial difficulties for Russia. Assuming year-end 2013 prices for crude oil ($111.76/bbl) and natural gas

($66.00/FOE* bbl) the total revenue of Russia’s petroleum industry is $662.3 billion (26.5% of GDP), and Russia’s oil and gas export earnings are $362.2 billion, or

14.5% of GDP. Obviously,

a decline in world oil prices would cause the Russian economy and the Russian

government significant financial pain.
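The budget arithmetic in the Woodhill card can be checked with a minimal sketch. The dollar figures are the ones quoted in the card. The helper names and the price-drop scenario are illustrative assumptions, not part of the evidence: the scenario assumes oil and gas taxes are exactly half of revenue and fall one-for-one with the oil price.

```python
# Figures quoted in the Woodhill card, in billions of U.S. dollars.
REVENUE = 409.6
SPENDING = 419.6
GDP = 2500.0               # "expected GDP of $2.5 trillion"
PETROLEUM_REVENUE = 662.3
EXPORT_EARNINGS = 362.2


def share_of_gdp(amount, gdp=GDP):
    """Return `amount` as a percentage of GDP."""
    return 100.0 * amount / gdp


def deficit_after_price_drop(drop_fraction):
    """Hypothetical scenario (an assumption, not from the card):
    oil and gas taxes are half of revenue and scale linearly with price."""
    oil_gas_taxes = 0.5 * REVENUE
    lost_revenue = oil_gas_taxes * drop_fraction
    return SPENDING - (REVENUE - lost_revenue)


deficit = SPENDING - REVENUE
print(f"Deficit: ${deficit:.1f}B, {share_of_gdp(deficit):.1f}% of GDP")
print(f"Petroleum revenue: {share_of_gdp(PETROLEUM_REVENUE):.1f}% of GDP")
print(f"Export earnings: {share_of_gdp(EXPORT_EARNINGS):.1f}% of GDP")
print(f"Deficit after a 10% price drop: ${deficit_after_price_drop(0.10):.2f}B")
```

Under that simplifying assumption, a 10 percent price decline roughly triples the planned deficit, which is the sensitivity the card is pointing at.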

Nuclear war

Filger ‘9 [Sheldon, founder of Global Economic Crisis, The Huffington Post, 5.10.9, http://www.huffingtonpost.com/sheldon-filger/russian-economy-facesdis_b_201147.html // jweideman]

In Russia, historically, economic health and political stability are intertwined to a degree that is rarely

encountered in other major industrialized economies. It was the economic stagnation of the former Soviet Union that

led to its political downfall. Similarly, Medvedev and Putin, both intimately acquainted with their nation's history, are unquestionably

alarmed at the prospect that Russia's economic crisis will endanger the nation's political stability,

achieved at great cost after years of chaos following the demise of the Soviet Union. Already, strikes and protests are occurring among rank and file workers

facing unemployment or non-payment of their salaries. Recent polling demonstrates that the once supreme popularity ratings of Putin and Medvedev are

eroding rapidly. Beyond

the political elites are the financial oligarchs, who have been forced to deleverage,

even unloading their yachts and executive jets in a desperate attempt to raise cash. Should the

Russian economy deteriorate to the point where economic collapse is not out of the question, the

impact will go far beyond the obvious accelerant such an outcome would be for the Global Economic Crisis. There is a

geopolitical dimension that is even more relevant than the economic context. Despite its economic vulnerabilities and perceived

decline from superpower status, Russia remains one of only two nations on earth with a nuclear

arsenal of sufficient scope and capability to destroy the world as we know it. For that reason, it is not only President

Medvedev and Prime Minister Putin who will be lying awake at nights over the prospect that a national economic crisis can transform itself into a virulent and

destabilizing social and political upheaval. It just may be possible that U.S.

UQ: econ

Russia’s economy is weak, but growth is coming

ITAR-TASS 7/3 [Russian news agency. 7/3/14, “Russia’s economic growth to accelerate to over 3% in 2017” http://en.itar-tass.com/economy/738849

//jweideman]

The Russian government expects the national economy to grow, despite the unfavorable economic scenario for this year, Prime

Minister Dmitry Medvedev said on Thursday. Medvedev chaired a government meeting on Thursday to discuss the

federal budget parameters and state program financing until 2017. “This scenario (of Russia’s social and

economic development) unfortunately envisages a general economic deterioration and slower

economic growth this year. Then, the growth is expected to accelerate gradually to 2% next year and to

over 3% in 2017,” Medvedev said. The 2015-2017 federal budget will set aside over 2.7 trillion rubles ($78

billion) for the payment of wages and salaries, the premier said, adding the government’s social obligations were a priority in the

forthcoming budget period. More than a half of federal budget funds are spent through the mechanism of state

programs. In 2015, the government intends to implement 46 targeted state programs to the amount of

over 1 trillion rubles (about $30 billion) in budget financing, the premier said. The same budget financing principle will now

be used by regional and local governments, including Russia’s two new constituent entities - the

Republic of Crimea and the city of Sevastopol, the premier said.

Russia will avoid recession

AFP 7/10 [Agence France-Presse is an international news agency headquartered in Paris. It is the oldest news agency in the world and one of the largest.

7/10/14, “Russia avoids recession in second quarter” https://au.news.yahoo.com/thewest/business/world/a/24425122/russia-avoids-recession-in-second-quarter/

//jweideman]

Moscow (AFP) - Russia

managed to avoid sliding into a recession in the second quarter, its deputy economy

minister said Wednesday, citing preliminary estimates showing zero growth for the three months ending June. The emerging giant was

widely expected to post a technical recession with two consecutive quarters of contraction, after

massive capital flight over the uncertainty caused by the Ukraine crisis. "We were expecting a possible technical recession in

the second quarter," Russia's deputy economy minister Andrei Klepach said, according to Interfax news agency.

But it now "appears that we will avoid recession, our preliminary forecast is one of zero growth after

adjusting for seasonal variations," he said. Official data is due to be published by the statistics institute

during the summer, although no specific date has been given. Russia's growth slowed down sharply in the first quarter as investors, fearing the impact of

Western sanctions over Moscow's annexation of Crimea, withdrew billions worth of their assets. In the first three months of the year, output contracted by 0.3

percent compared to the previous quarter. Klepach

estimated that on an annual basis, Russia's annual growth would

better the 0.5 percent previously forecast. "The situation is unstable, but we think... that the trend

would be positive despite everything," he said, adding however that it would be a "slight recovery rather than solid growth". The

government has until now not issued any quarter to quarter growth forecast. Economy minister Alexei Ulyukayev on Monday issued a year-on-year forecast, saying

output expanded by 1.2 percent in the second quarter of the year.

Russia is experiencing growth

AFP 7/7 [Agence France-Presse is an international news agency headquartered in Paris. It is the oldest news agency in the world and one of the largest. 7/7/14,

“Russian output expands 1.2% in second quarter: economy minister” http://www.globalpost.com/dispatch/news/afp/140707/russian-output-expands-12-secondquarter-economy-minister-0 //jweideman]

Russia's economy minister said Monday that output expanded by 1.2 percent in the second quarter

compared to the same period last year, a preliminary figure that is "slightly better" than expected despite the Ukraine crisis. "The

results are slightly better than we predicted, with the emphasis on 'slightly'," economy minister Alexei Ulyukayev said. He added that the

"refined" official figure by Russia's statistics agency will be released later. The IMF said last month that Russia is already in recession, while the central bank said

growth in 2014 is likely to slow to just 0.4 percent. Ulyukayev

said that second quarter figure, up from 0.9 percent in the

first quarter and 0.2 percent better than his ministry's expectations, was likely bolstered by industrial

growth. "This year industry is becoming the main locomotive of growth," Ulyukayev told President Vladimir Putin in a

meeting, citing industrial growth reaching 2.5 percent, up from 1.8 percent in the first quarter.

UQ AT: sanctions

There won’t be more sanctions

Al Jazeera 14 [Al Jazeera also known as Aljazeera and JSC (Jazeera Satellite Channel), is a Doha-based broadcaster owned by the Al Jazeera Media Network,

June 5 2014. “G7 holds off from further Russia sanctions” http://www.aljazeera.com/news/europe/2014/06/g7-hold-off-from-further-russia-sanctions20146545044590707.html //jweideman]

Leaders of the G7 group of industrial nations have limited themselves to warning Russia of another

round of sanctions as they urged President Vladimir Putin to stop destabilising Ukraine. On the first day of the

group's summit in Brussels on Wednesday, the bloc said that Putin must pull Russian troops back from his country's border with Ukraine and stem the pro-Russian

uprising in the east of the country. "Actions to destabilise eastern Ukraine are unacceptable and must stop," said the group in a joint statement after the meeting.

"We stand ready to intensify targeted sanctions and to implement significant additional restrictive measures to impose further costs on Russia should events so

require." However,

Angela Merkel, the German chancellor, said that further sanctions would take effect

only if there had been "no progress whatsoever". Al Jazeera's James Bays, reporting from Brussels, said: "The G7 leaders are

talking here again about tougher sanctions on Russia. But they are talking about the same tougher sanctions they have been

talking about for months now." Meanwhile, Putin reached out a hand towards dialogue, despite being banned from the

summit following Russia's annexation of Crimea in March, saying that he was ready to meet Ukraine's president-elect Petro Poroshenko and US President Barack

Obama.

U-Production decline

Oil production is declining

Summers 14 [Dave, author for EconoMonitor, July 7 14, “Oil Production Numbers Keep Going Down” http://www.economonitor.com/blog/2014/07/oilproduction-numbers-keep-going-down/ //jweideman]

One problem with defining a peak in global oil production is that it is only really evident sometime after the event, when one can look in the rear view mirror and

see the transition from a

growing oil supply to one that is now declining. Before that relatively absolute point, there

will likely come a time when global supply can no longer match the global demand for oil that exists at

that price. We are beginning to approach the latter of these two conditions, with the former being increasingly probable

in the not-too-distant future. Rising prices continually change this latter condition, and may initially disguise the arrival of the peak, but it is becoming inevitable.

Over the past two years there has been a steady growth in demand, which OPEC expects to continue at around the 1 mbd range, as has been the recent pattern.

The challenge, on a global scale, has been to identify where the matching growth in supply will come from, given the declining production from older oilfields and

the decline rate of most of the horizontal fracked wells in shale. At

present the United States is sitting with folk being relatively

complacent, anticipating that global oil supplies will remain sufficient, and that the availability of enough

oil in the global market to supply that reducing volume of oil that the US cannot produce for itself will

continue to exist. Increasingly over the next couple of years this is going to turn out to have created a false sense of security, and led to decisions on

energy that will not easily be reversed. Consider that the Canadians have now decided to build their Pipeline to the Pacific. The Northern Gateway pipeline that

Enbridge will build from the oil sands to the port of Kitimat.

Oil production is decreasing off the coast

Larino 14 [Jennifer, Staff writer for NOLA.com | The Times-Picayune covering energy, banking/finance and general business news in the greater New Orleans

area. 7/8/14, “Oil, gas production declining in Gulf of Mexico federal waters, report says”

http://www.nola.com/business/index.ssf/2014/07/oil_gas_production_in_federal.html //jweideman]

Oil and gas found off the coast of Louisiana and other Gulf Coast states made up almost one quarter of all fossil

fuel production on federal lands in 2013, reinforcing the region's role as a driving force in the U.S. energy industry, according to updated

government data. But a closer look at the numbers shows the region's oil and gas production has been in

steady decline for much of the past decade. A new U.S. Energy Information Administration report shows

federal waters in the Gulf of Mexico in 2013 accounted for 23 percent of the 16.85 trillion British

thermal units (Btu) of fossil fuels produced on land and water owned by the federal government. That was

more than any other state or region aside from Wyoming, which has seen strong natural gas production in recent years. The report did not include data on oil and

gas production on private lands, which makes up most production in many onshore oil and gas fields, including the Haynesville Shale in northwest Louisiana. But

production in the offshore gulf has also fallen every year since 2003. According to the report, total fossil

fuel production in the region is less than half of what it was a decade ago, down 49 percent from 7.57 trillion Btu in 2003

to 3.86 trillion in 2013. The report notes that the region has seen a sharp decline in natural gas production as older offshore fields dry out and more

companies invest in newer gas finds onshore, where hydraulic fracturing has led to a boom in production. Natural gas production in the offshore gulf was down 74

percent from 2003 to 2013. The region's oil production has declined, though less drastically. The offshore gulf produced about 447 million barrels of oil in 2013,

down from a high of 584 million barrels in 2010. Still, the region accounted for 69 percent of all the crude oil produced on all federal lands and waters last year.
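The percentages in the Larino card follow from the quoted production figures, and a short sketch makes the check explicit. The numbers and units are as quoted in the card. The function name is illustrative, and the oil decline from the 2010 high is a derived figure that the card does not itself state.

```python
def percent_decline(old, new):
    """Percentage drop from `old` to `new`."""
    return 100.0 * (old - new) / old


# Fossil fuel production on federal lands and waters, in trillion Btu as quoted.
TOTAL_FEDERAL_2013 = 16.85
GULF_2003 = 7.57
GULF_2013 = 3.86

# Offshore gulf oil production, in million barrels as quoted.
GULF_OIL_2010 = 584
GULF_OIL_2013 = 447

gulf_decline = percent_decline(GULF_2003, GULF_2013)
gulf_share = 100.0 * GULF_2013 / TOTAL_FEDERAL_2013
oil_decline = percent_decline(GULF_OIL_2010, GULF_OIL_2013)

print(f"Gulf fossil fuel decline, 2003 to 2013: {gulf_decline:.0f}%")
print(f"Gulf share of 2013 federal production: {gulf_share:.0f}%")
print(f"Gulf oil decline from the 2010 high: {oil_decline:.1f}%")
```

The first two results match the card's 49 percent and 23 percent. The third shows that oil fell by roughly a quarter in three years, consistent with the card's description of a decline that is real but less drastic than the 74 percent drop in natural gas.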

Offshore well exploration is ineffective and expensive now

API 14 [The American Petroleum Institute, commonly referred to as API, is the largest U.S trade association for the oil and natural gas industry. January 2014,

“Offshore Access to Oil and Gas” http://www.api.org/policy-and-issues/policy-items/exploration/~/media/Files/Oil-and-Natural-Gas/Offshore/OffshoreAccessprimer-highres.pdf //jweideman]

Sometimes when a lease is not producing, critics claim it is “idle.” Much more often than not, non-producing leases are not idle at all; they are under geological

evaluation or in development and could become an important source of domestic supply. Companies

purchase leases hoping they will

hold enough oil or natural gas to benefit consumers and become economically viable for production.

Companies can spend millions of dollars to purchase a lease and then explore and develop it, only to

find that it does not contain oil and natural gas in commercial quantities. It is not unusual for a company

to spend in excess of $100 million only to drill a dry hole. The reason is that a company usually only has

limited knowledge of resource potential when it buys a lease. Only after the lease is acquired will the company be in a position to

evaluate it, usually with a very costly seismic survey followed by an exploration well. If a company does not find oil or natural gas in commercial quantities, the

company hands the lease back to the government, incurs the loss of invested money and moves on to more promising leases. If a company finds resources in

commercial quantities, it will produce the lease. But there sometimes can be delays — often as long as ten years — for environmental and engineering studies, to

acquire permits, to install production facilities (or platforms for offshore leases) and to build the necessary infrastructure to bring the resources to market.

Litigation, landowner disputes and regulatory hurdles also can delay the process.

Oil is getting harder to find

Spak 11 [Kevin, staff writer for Newser. 2/16/11, “Exxon: Oil Becoming Hard to Find” http://www.newser.com/story/112166/exxon-oil-becoming-hard-tofind.html //jweideman]

Exxon Mobil Corp. is having trouble finding more oil, it revealed yesterday in its earnings call. The

company said it was depleting its reserves, replacing only 95 out of every 100 barrels it pumps. Though it tried

to put a positive spin on things by saying it had made up for the shortfall by bolstering its natural gas supplies, experts tell the Wall Street

Journal that’s probably a shift born of grim necessity. Oil companies across the board are drifting toward

natural gas, because oil is harder and harder to come by, according to the Journal. Most accessible fields are already tapped out,

while new fields are either technically or politically difficult to exploit. “The good old days are gone and not to be repeated,” says one analyst. Natural gas “is not

going to give you the same punch” as oil.

2NC Link Wall

Satellites prevent rig-destroying eddies- empirically solves production

ESA 6 [European space agency, 1 March 2006, “SATELLITE DATA USED TO WARN OIL INDUSTRY OF POTENTIALLY DANGEROUS EDDY”

http://www.esa.int/Our_Activities/Observing_the_Earth/Satellite_data_used_to_warn_oil_industry_of_potentially_dangerous_eddy //jweideman]

Ocean FOCUS began issuing forecasts on 16 February 2006 – just in time to warn oil production

operators of a new warm eddy that has formed in the oil and gas-producing region of the Gulf of

Mexico. These eddies, similar to underwater hurricanes, spin off the Loop Current – an intrusion of warm surface

water that flows northward from the Caribbean Sea through the Yucatan Strait – from the Gulf Stream and can cause extensive and costly

damage to underwater equipment due to the extensive deep water oil production activities in the region. The Ocean

FOCUS service is a unique service that provides ocean current forecasts to the offshore oil production industry to give

prior warning of the arrival of eddies. The service is based on a combination of state-of-the-art ocean

models and satellite measurements. Oil companies require early warning of these eddies in order to

minimise loss of production, optimise deep water drilling activities and prevent damage to critical equipment. The Loop Current and eddies

shedding from it pose two types of problems for underwater production systems: direct force and induced vibrations, which create more stress than direct force

and result in higher levels of fatigue and structural failure. The

impact of these eddies can be very costly in terms of

downtime in production and exploration and damage to sub sea components.

IOOS leads to more effective production

NOAA 13 [U.S. Department of Commerce, National Oceanic and Atmospheric Administration, Integrated Ocean Observing

System (IOOS®) Program, www.ioos.noaa.gov. May 2013, “IOOS®: Partnerships Serving Lives and Livelihoods”

http://www.ioos.noaa.gov/communications/handouts/partnerships_lives_livelihoods_flyer.pdf //jweideman]

IOOS supplies critical information about our Nation's waters. Scientists working to understand climate change, governments

adapting to changes in the Arctic, municipalities monitoring local water quality, industries understanding coastal and marine spatial planning all have the same need:

reliable and timely access to data and information that informs decision making. Improving Lives and Livelihoods IOOS

enhances our economy. Improved information allows offshore oil and gas platform and coastal operators,

municipal planners, and those with interests in the coastal zone to minimize impacts of natural hazards,

sea level rise, and flooding. This information improves marine forecasts so mariners can optimize

shipping routes, saving time and reducing fuel expenses - translating into cost savings for consumers. IOOS

benefits our safety and environment. A network of water quality monitoring buoys on the Great Lakes makes beaches safer by detecting and predicting the

presence of bacteria (E. coli). Mariners use IOOS wave and surface current data to navigate ships safely under bridges and in narrow channels.

Satellites are effective at finding new oil wells

DTU 9 [Technical University of Denmark (DTU) 2/27/9, “New Oil Deposits Can Be Identified Through Satellite Images”

http://www.sciencedaily.com/releases/2009/02/090226110812.htm //jweideman]

A new map of the Earth’s gravitational force based on satellite measurements makes it much less

resource intensive to find new oil deposits. The map will be particularly useful as the ice melts in the oil-rich Arctic regions. Ole

Baltazar, senior scientist at the National Space Institute, Technical University of Denmark (DTU Space),

headed the development of the map. The US company Fugro, one of the world’s leading oil exploration companies, is one of the companies

that have already made use of the gravitational map. The company has now initiated a research partnership with DTU Space. “Ole Baltazar’s gravitational

map is the most precise and has the widest coverage to date,” says Li Xiong, Vice President and Head Geophysicist with Fugro. “On account of its high resolution and

accuracy, the

map is particularly useful in coastal areas, where the majority of the oil is located.” Satellite

measurements result in high precision Ole Baltazar’s map shows variations in gravitational force across the

surface of the Earth and knowledge about these small variations is a valuable tool in oil exploration.

Subterranean oil deposits are encapsulated in relatively light materials such as limestone and clay and

because these materials are light, they have less gravitational force than the surrounding materials. Ole

Baltazar’s map is based on satellite measurements and has a hitherto unseen level of detail and accuracy. With this map in your hands, it is, therefore,

easier to find new deposits of oil underground. Climate change is revealing new sea regions The gravitational map from DTU Space is

unique on account of its resolution of only 2 km and the fact that it covers both land and sea regions. Oil companies use the map in the first phases of oil

exploration. Previously, interesting areas were typically selected using protracted, expensive measurements from planes or ships. The

interesting areas

appear clearly on the map and the companies can, therefore, plan their exploration much more

efficiently. “The map will also be worth its weight in gold when the ice in the Arctic seriously begins to melt, revealing large sea regions where it is suspected

that there are large deposits of oil underground. With our map, the companies can more quickly start to drill for oil in the right places without first having to go

through a resource-intensive exploration process,” explains Ole Baltazar. Based on height measurements instead of direct gravitation measurements The success of

the gravitational map is due in large part to the fact that it is not based on direct gravitation measurements but on observations of the height of the sea, which

reflects the gravitation. “Height

measurements have the advantage that it is possible to determine the

gravitational field very locally and thus make a gravitational map with a resolution of a few km. For

comparison, the resolution of satellite measurements of gravitational force is typically around 200 km. Satellite gravitation measurements are used, for example, to

explore conditions in the deeper strata of the Earth, but are not well suited to our purposes,” Ole Baltazar explains.

IL-Prices k2 Russia’s economy

High oil prices are key to political and economic stability in Russia

Ioffe 12 [Julia, Foreign Policy’s Moscow correspondent. 6/12/12, “What will it take to push Russians over the edge”

http://www.foreignpolicy.com/articles/2012/06/12/powder_keg?page=0,1 //jweideman]

There is also the economic factor to consider. The Russian economy is currently growing at a relatively

healthy 3.5 percent, but it's useful to recall the whopping growth rates Russia was posting just a few

years ago. In 2007, the year before the world financial crisis hit Russia, Russia's GDP growth topped 8 percent. It had been

growing at that pace, buoyed by soaring commodity prices, for almost a decade, and it was not accidental that

this was the decade in which Putin made his pact with the people: You get financial and consumer

comforts, and we get political power. It's hard to maintain such a pact when the goodies stop flowing.

Which brings us to the looming issue of the Russian budget deficit. To keep the people happy and out of politics, the Russian

government has promised a lot of things to a lot of people. (Putin's campaign promises alone are

estimated by the Russian Central Bank to cost at least $170 billion.) To balance its budget with such

magnanimity, Russia needs high oil prices, to the point where last month, the Ministry of Economic

Development announced that an $80 barrel of oil would be a "crisis." Keeping in mind that oil is now about $98 a barrel, and

that Russia used to be able to balance its budgets just fine with oil at a fraction of the price, this doesn't look too good for Putin. Factor in the worsening European

crisis -- Europe is still Russia's biggest energy customer -- and the fact that the state has put off unpopular but increasingly necessary reforms, like raising utility

prices, and you find yourself looking at a powder keg.

Russia’s economy and regime is oil dependent- reverse causal

Schuman 12 [Michael, is an American author and journalist who specializes in Asian economics, politics and history. He is currently the Asia business

correspondent for TIME Magazine. July 5 2012, “Why Vladimir Putin Needs Higher Oil Prices” http://business.time.com/2012/07/05/why-vladimir-putin-needshigher-oil-prices/ //jweideman]

Falling oil prices make just about everyone happy. For strapped consumers in struggling developed nations, lower oil prices mean a smaller

payout at the pump, freeing up room in strained wallets to spend on other things and boosting economic growth. In the developing world, lower oil prices mean

reduced inflationary pressures, which will give central bankers more room to stimulate sagging growth. With the global economy still climbing out of the 2008

financial crisis, policymakers around the world can welcome lower oil prices as a rare piece of helpful news. But

Vladimir Putin is not one of

them. The economy that the Russian President has built not only runs on oil, but runs on oil priced

extremely high. Falling oil prices means rising problems for Russia – both for the strength of its economic

performance, and possibly, the strength of Putin himself. Despite the fact that Russia has been labeled

one of the world’s most promising emerging markets, often mentioned in the same breath as China and

India, the Russian economy is actually quite different from the others. While India gains growth benefits from an expanding

population, Russia, like much of Europe, is aging; while economists fret over China’s excessive dependence

on investment, Russia badly needs more of it. Most of all, Russia is little more than an oil state in

disguise. The country is the largest producer of oil in the world (yes, bigger even than Saudi Arabia), and Russia’s dependence on crude

has been increasing. About a decade ago, oil and gas accounted for less than half of Russia’s exports; in

recent years, that share has risen to two-thirds. Most of all, oil provides more than half of the federal

government’s revenues. What’s more, the economic model Putin has designed in Russia relies heavily not just on oil, but high oil prices. Oil

lubricates the Russian economy by making possible the increases in government largesse that have

fueled Russian consumption. Budget spending reached 23.6% of GDP in the first quarter of 2012, up from 15.2% four years earlier. What that

means is Putin requires a higher oil price to meet his spending requirements today than he did just a few

years ago. Research firm Capital Economics figures that the government budget balanced at an oil price of $55 a barrel in 2008, but that now it balances at

close to $120. Oil prices today have fallen far below that, with Brent near $100 and U.S. crude less than $90. The farther oil prices fall, the more pressure is placed

on Putin’s budget, and the harder it is for him to keep spreading oil wealth to the greater population through the government. With

a large swath of

the populace angered by his re-election to the nation’s presidency in March, and protests erupting on the streets of Moscow,

Putin can ill-afford a significant blow to the economy, or his ability to use government resources to firm up his popularity. That’s why

Putin hasn’t been scaling back even as oil prices fall. His government is earmarking $40 billion to support the economy, if necessary, over the next two years. He

does have financial wiggle room, even with oil prices falling. Moscow has wisely stashed away petrodollars into a

rainy day fund it can tap to fill its budget

needs. But Putin doesn’t have the flexibility he used to have. The fund has shrunk, from almost 8% of GDP

in 2008 to a touch more than 3% today. The package, says Capital Economics, simply highlights the weaknesses of Russia’s economy: This cuts

to the heart of a problem we have highlighted before – namely that Russia is now much more dependent on high and rising oil

prices than in the past… The fact that the share of ‘permanent’ spending (e.g. on salaries and pensions)

has increased…creates additional problems should oil prices drop back (and is also a concern from the perspective of

medium-term growth)…The present growth model looks unsustainable unless oil prices remain at or above $120pb.

IL-Production lowers prices

Production lowers oil prices

Maugeri 12 [Maugeri, Leonardo. “Global Oil Production is Surging: Implications for Prices, Geopolitics, and the Environment.” Policy Brief, Belfer Center for

Science and International Affairs, Harvard Kennedy School, June 2012. http://belfercenter.ksg.harvard.edu/files/maugeri_policybrief.pdf //jweideman]

Oil Prices May Collapse. Contrary to prevailing wisdom that increasing global demand for oil will increase

prices, the report finds oil production capacity is growing at such an unprecedented level that supply

might outpace consumption. When the glut of oil hits the market, it could trigger a collapse in oil prices.

While the age of "cheap oil" may be ending, it is still uncertain what the future level of oil prices might be. Technology may turn today's expensive oil into

tomorrow's cheap oil. The oil market will remain highly volatile until 2015 and prone to extreme movements in

opposite directions, representing a challenge for investors. After 2015, however, most of the oil exploration and development

projects analyzed in the report will advance significantly and contribute to a shoring up of the world's production capacity. This could provoke

overproduction and lead to a significant, steady dip of oil prices, unless oil demand were to grow at a sustained yearly rate of at

least 1.6 percent through 2020. Shifting Market Has Geopolitical Consequences. The United States could conceivably produce up to 65

percent of its oil consumption needs domestically, and import the remainder from North American

sources and thus dramatically affect the debate around dependence on foreign oil. However the reality will not

change much, since there is one global oil market in which all countries are interdependent. A global oil market tempers the meaningfulness of self-sufficiency, and

Canada, Venezuela, and Brazil may decide to export their oil and gas production to non-U.S. markets purely for commercial reasons. However, considering

the recent political focus on U.S. energy security, even the spirit of oil self-sufficiency could have

profound implications for domestic energy policy and foreign policy. While the unique conditions for the shale boom in the

United States cannot be easily replicated in other parts of the world in the short-term, there are unknown and untapped resources around the globe and the results

of future exploration development could be surprising. This combined with China's increasing influence in the Middle East oil realm will continue to alter the

geopolitics of energy landscape for many decades.

New production decreases global prices

Tallett et al 14 [Harry Vidas, Martin Tallett, Tom O’Connor, David Freyman, William Pepper, Briana Adams, Thu Nguyen, Robert Hugman, Alanna Bock, ICF

International, EnSys Energy. March 31, 2014. “The Impacts of U.S. Crude Oil Exports on Domestic Crude Production, GDP, Employment, Trade, and Consumer Costs”

http://www.api.org/~/media/Files/Policy/LNG-Exports/LNG-primer/API-Crude-Exports-Study-by-ICF-3-31-2014.pdf //jweideman]

The increase in U.S. crude production accompanied by a relaxation of crude export constraints would tend to increase the

overall global supply of crude oil, thus putting downward pressure on global oil prices. Although the U.S. is the second

largest oil producer in the world and could soon be the largest by 2015, according to the International Energy Agency (IEA), the price impact of

crude exports is determined by the incremental production, rather than total production. For this study, ICF used

Brent crude as proxy for the global crude price as affected by forces of global crude supply and demand. The impact of lifting crude exports on Brent prices, as

shown in Exhibit 4-19, is relatively small, about $0.05 to $0.60/bbl in the Low-Differential Scenario and about $0.25 to $1.05/bbl in the High-Differential Scenario. It

should be noted that Brent prices are affected by various factors such as emerging supply sources, OPEC responses to

increasing U.S. and Canadian production, and geopolitical events. Changes in any of these factors could mean actual Brent prices would deviate significantly from

our forecasts. However, in general, higher global production leads to lower crude prices, all other factors being

equal. Allowing crude exports results in a Brent average price of $103.85/bbl over the 2015-2035 period, down $0.35/bbl from the Low-Differential Scenario without

exports and down $0.75/bbl from the High-Differential Scenario without exports. While global crude prices drop, domestic crude prices gain strength when exports

are allowed because lifting the restrictions helps relieve the U.S. crude oversupply situation and allows U.S. crudes to fully compete and achieve pricing in

international markets close to those of similar crude types. Exhibit 4-20 shows WTI prices increase to an average of $98.95/bbl over the 2015-2035 period in the

Low-Differential and High-Differential With Exports Cases as opposed to $96.70/bbl in the Low-Differential No Exports Case and $94.95/bbl in the High-Differential

No Exports Case. The range of increase related to allowing exports is $2.25 to $4.00/bbl averaged over 2015 to 2035.

Production decreases prices

Alquist and Guenette 13 [Ron Alquist and Justin-Damien Guénette work for the Bank of Canada. 2013, “A Blessing in Disguise: The Implications of

High Global Oil Prices for the North American Market,” http://www.bankofcanada.ca/wp-content/uploads/2013/07/wp2013-23.pdf //jweideman]

The presence of these unconventional sources of oil throughout the world and the ability to recover them makes a large

expansion in the physical production of oil a possibility. Recent estimates suggest that about 3.2 trillion barrels of unconventional

crude oil, including up to 240 billion barrels of tight oil, are available worldwide (IEA 2012a). By 2035, about 14 per cent of oil production will consist of

unconventional oil, an increase of 9 percentage points.

The potential for unconventional oil extraction around the world has led

some oil industry analysts to describe scenarios in which the world experiences an oil glut and a decline

in oil prices over the medium term (Maugeri 2012).

Impact-Proliferation

Russia’s economy is key to stop proliferation

Bukharin 3 [Oleg, August, he is affiliated with Princeton University and received his Ph.D. in physics from the Moscow Institute of Physics and Technology

“The Future of Russia: The Nuclear Factor”, http://www.princeton.edu/~lisd/publications/wp_russiaseries_bukharin.pdf,//jweideman]

There are presently no definite answers about the future of the nuclear security agenda in Russia. The Russian nuclear legacy – its nuclear forces, the nuclear-weapons production and power-generation

complex, huge stocks of nuclear-useable highly enriched uranium and plutonium, and environmental clean-up problems – is not going to go away anytime soon. What is

clear is that nuclear security and proliferation risks will be high as long as there remain their underlying

causes: the oversized and underfunded nuclear complex, the economic turmoil, and wide-spread crime and corruption. The magnitude of the

problem, however, could vary significantly depending on Russia’s progress in downsizing of its nuclear weapons complex; its ability to maintain core

competence in the nuclear field; availability of funding for the nuclear industry and safeguards and

security programs; political commitment by the Russian government to improve nuclear security; and

international cooperation. Economically-prosperous Russia, the rule of law, and a smaller, safer and more secure nuclear complex would

make nuclear risks manageable. An integration of the Russian nuclear complex into the world’s nuclear industry, increased transparency of nuclear operations, and

cooperative nuclear security relations with the United States and other western countries are also essential to reducing nuclear dangers and preventing catastrophic terrorism.

Proliferation is the worst – it destabilizes hegemony and all other nations and

escalates to nuclear war – deterrence doesn’t account for irrational actors

Maass 10 Richard Maass, working for his Ph.D. in political science at Notre Dame University, and

currently teaches classes there on International Relations. 2010 “Nuclear Proliferation and Declining U.S.

Hegemony” http://www.hamilton.edu/documents//levitt-center/Maass_article.pdf

On August 29, 1949, The Soviet Union successfully tested its first nuclear fission bomb, signaling the end

of U.S. hegemony in the international arena. On September 11th, 2001, the world’s single most

powerful nation watched in awe as the very symbols of its prosperity fell to rubble in the streets of New

York City. The United States undisputedly “has a greater share of world power than any other country in

history” (Brooks and Wolforth, 2008, pg. 2). Yet even a global hegemon is ultimately fallible and vulnerable to rash acts

of violence as it conducts itself in a rational manner and assumes the same from other states. Conventional

strategic thought and military action no longer prevail in an era of increased globalization. Developing states and irrational actors play

increasingly influential roles in the international arena. Beginning with the U.S.S.R. in 1949, nuclear proliferation has

exponentially increased states’ relative military capabilities as well as global levels of political instability.

Through ideas such as nuclear peace theory, liberal political scholars developed several models under

which nuclear weapons not only maintain but increase global tranquility. These philosophies assume

rationality on the part of political actors in an increasingly irrational world plagued by terrorism,

despotic totalitarianism, geo-political instability and failed international institutionalism. Realistically,

“proliferation of nuclear [weapons]…constitutes a threat to international peace and security” (UN Security

Council, 2006, pg. 1). Nuclear security threats arise in four forms: the threat of existing arsenals, the emergence

of new nuclear states, the collapse of international non-proliferation regimes and the rise of nuclear

terrorism. Due to their asymmetric destabilizing and equalizing effects, nuclear weapons erode the

unipolarity of the international system by balancing political actors’ relative military power and security.

In the face of this inevitable nuclear proliferation and its effects on relative power, the United States must accept a position of

declining hegemony. Despite nuclear proliferation’s controversial nature, states continue to develop the technologies requisite for constructing nuclear

weapons. What motivates men to create “the most terrifying weapons ever created by human kind…unique in their destructive power and in their lack of direct

military utility”(Cirincione, 2007, pg. 47)? Why then do states pursue the controversial and costly path of proliferation? To states, nuclear

weapons comprise a symbolic asset of strength and “as a prerequisite for great power status” (Cirincione, 2007, pg. 47). On a

simplistic level, nuclear weapons make states feel more powerful, respected and influential in world politics. When it is in their best interest, states

develop