Microsoft IT: A Case Study on “Hekaton” against RPM – SQL Server 2014 CTP1

SQL Server Technical Article

Summary: This paper shares the approach used to:

Use ‘Hekaton’ in SQL Server 2014 against RPM, including performance analysis.

Understand the specifics involved in migrating to Hekaton.

I/O latch waits can cause session delays that impact application performance. This white paper

describes the procedures and common I/O latch issues when migrating to Hekaton in SQL

Server 2014. It also includes challenges that occurred during the migration and the performance

analysis at different stages.

This content is suitable for developers, architects, and database administrators. It is assumed

that readers of this white paper have basic knowledge of SQL Server 2012/2014 and SQL

Server administration.

Writer: Priyanka Kulkarni, Microsoft | Prabhakaran Sethuraman (PRAB), Microsoft

Technical Reviewer: Prabhakaran Sethuraman (PRAB), Microsoft | Hariharan Sethuraman,

Microsoft | Mandi Ohlinger, Microsoft

Published: May 2014

Applies to: SQL Server 2014 CTP1, RPM

Copyright

This document is provided “as-is”. Information and views expressed in this document, including URL and

other Internet Web site references, can change without notice. You bear the risk of using it.

This document does not provide you with any legal rights to any intellectual property in any Microsoft

product. You can copy and use this document for your internal reference purposes.

© 2014 Microsoft. All rights reserved.

Contents

Introduction
About SQL Server 2014
Understanding Hekaton
What is RPM?
Performance issues in RPM
Hekaton as a solution
RPM to Hekaton Migration Case Study
    Context
    Objectives
    Environments for performance analysis
    Identification of Top 5 scenarios
    Performance analysis after vanilla migration to SQL Server 2014
    AMR Report: Identification of the top candidate table for migration
    Migration to Hekaton
        Procedure
        Challenges
Post Migration Analysis
Conclusion
Appendix
Acknowledgements

Introduction

In-Memory OLTP (formerly code-named “Hekaton”) is a new database engine component fully integrated into SQL Server 2014. It is optimized for OLTP workloads that access memory-resident data. In-Memory OLTP can substantially improve the performance of OLTP workloads and reduce processing time. Tables can be declared as ‘memory optimized’ to take advantage of In-Memory OLTP capabilities.

This paper describes the following:

Using "Hekaton" in SQL Server 2014 against RPM, including performance analysis.

Understanding challenges faced, the solutions drawn, and the improvement in the migration

process.

I/O latch waits can cause session delays that impact application performance. This white paper describes the

procedures and common I/O latch issues when migrating to Hekaton in SQL Server 2014. It also includes

challenges that occurred during the migration and the performance analysis at different stages.

This content is suitable for developers, architects, and database administrators. It is assumed that

readers of this white paper have basic knowledge of SQL Server 2012/2014 and SQL Server

administration.

About SQL Server 2014

At SQL PASS in November 2012, it was announced that In-Memory OLTP (code-named Hekaton) database technology would be built into the next release of SQL Server. The SQL Server 2014 Database Engine introduces new features and enhancements that increase the power and productivity of architects, developers, and administrators who design, develop, and maintain data storage systems. The Database Engine has been enhanced in multiple areas, including:

Database Engine Feature Enhancements

Memory-Optimized Tables

SQL Server Data Files in Windows Azure

Host a SQL Server Database in a Windows Azure Virtual Machine

Backup and Restore Enhancements

New Design for Cardinality Estimation

Delayed Durability

AlwaysOn Enhancements

Partition Switching and Indexing

Managing the Lock Priority of Online Operations

Columnstore Indexes

Buffer Pool Extension

Incremental Statistics

Resource Governor Enhancements for Physical IO Control

Online Index Operation Event Class

Database Compatibility Level

Transact-SQL Enhancements

System View Enhancements

Security Enhancements

Deployment Enhancements

In this paper, the main focus is on memory-optimized tables. “Hekaton” is the code name for In-Memory OLTP. These two terms are used interchangeably in this paper.

Understanding Hekaton

Figure 1: The SQL Server engine includes the In-Memory OLTP component

Memory-optimized tables

When memory-optimized tables are accessed, pages are not read into cache from disk. All the data is

stored in memory, all the time. A set of checkpoint files (used for recovery purposes) is created on

filestream filegroups that track changes to the data. The checkpoint files are append-only.

Operations on memory-optimized tables use the same transaction log used for operations on disk-based

tables. The transaction log is stored on disk. After a system crash or server shutdown, the rows of data in memory-optimized tables can be recreated from the checkpoint files and the transaction log.

In-Memory OLTP provides the option to create a table that is non-durable and not logged using the

SCHEMA_ONLY option. As the option indicates, the table schema is durable, even though the data is not.

[1]
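As a minimal sketch of the two durability options, the following statements create one non-durable and one durable memory-optimized table. The table and column names (dbo.SessionCache, dbo.SessionAudit) are hypothetical and are not part of RPM. The syntax follows the released version of SQL Server 2014 and may differ in CTP1, and the database is assumed to already contain a MEMORY_OPTIMIZED_DATA filegroup.

```sql
-- Hypothetical tables for illustration only; they are not part of the RPM schema.

-- Non-durable: the table definition survives a restart, but the rows do not,
-- and changes to the rows are not written to the transaction log.
CREATE TABLE dbo.SessionCache
(
    SessionID   int             NOT NULL
        PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 131072),
    Payload     varbinary(4000) NULL,
    LastTouched datetime        NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY);
GO

-- Durable: both the definition and the rows are recovered after a restart.
CREATE TABLE dbo.SessionAudit
(
    AuditID   int      NOT NULL
        PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 131072),
    SessionID int      NOT NULL,
    LoggedOn  datetime NOT NULL
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO
```

SCHEMA_ONLY suits data that can be rebuilt or discarded after a restart, such as staging or cache rows. The Schedule table in this case study holds business data, so it uses SCHEMA_AND_DATA.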

Indexes on memory-optimized tables

SQL Server 2014 introduces hash indexes for memory-optimized tables. Hash indexes are very efficient for point lookup operations because they require a simple lookup in a hash table rather than navigating an index tree structure, which is required for traditional clustered and nonclustered indexes. If you are looking for the rows corresponding to a particular index key value, a hash index is the way to go. In CTP1, every memory-optimized table must have at least one hash index. Because of the nature of hash tables, rows appear in the index in random order.

Indexes are never stored on disk and are not reflected in the on-disk checkpoint files. Operations on indexes are never logged. The indexes are maintained automatically during all modification operations on memory-optimized tables, just like B-tree indexes on disk-based tables. When SQL Server restarts, the indexes on the memory-optimized tables are rebuilt as the data is streamed into memory. [1]
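To illustrate which predicates a hash index can serve, consider the Schedule table defined later in this paper, which has a hash index on (BookingID, ScheduleID) and another on ([Date]). The queries below are only a sketch, and the literal key values are made up.

```sql
-- Point lookup: equality on every key column of IX_Schedule_BkId_ScheId
-- (BookingID, ScheduleID), so the hash index can go straight to one bucket.
SELECT ScheduleID, BookingID, ResourceID, [Date], Minutes
FROM dbo.Schedule
WHERE BookingID = 1001       -- made-up value
  AND ScheduleID = 500001;   -- made-up value

-- Range predicate: a hash index keeps no key order, so an inequality on [Date]
-- cannot seek on IX_ScheduleDate and the rows have to be scanned instead.
SELECT ScheduleID, [Date], Minutes
FROM dbo.Schedule
WHERE [Date] >= '20140101'
  AND [Date] <  '20140201';
```

A hash index is only usable for a seek when the query supplies equality predicates for all of its key columns. That is one reason the Schedule table carries a separate single-column index on BookingID in addition to the composite indexes that start with BookingID.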

Concurrency improvements

When accessing memory-optimized tables, SQL Server uses optimistic multiversion concurrency control.

Although SQL Server supports optimistic concurrency control with the snapshot-based isolation levels

introduced in SQL Server 2005, these optimistic methods acquire locks during data modification

operations. For memory-optimized tables, there are no locks acquired, and thus no waiting because of

blocking.

When using memory-optimized tables, it is still possible to experience waiting. There are other wait

types, such as waiting for a log write to complete. However, logging while updating memory-optimized

tables is much more efficient than logging for disk-based tables. Wait times are much shorter. And,

there are no waits for reading data from disk and no waits for locks on data rows. [1]
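Because no locks are taken, two transactions that modify the same row do not block each other; instead, one of them fails with a conflict error and has to be retried. The following is a hedged sketch of such retry logic against the Schedule table defined later in this paper. The retry count and the key and column values are arbitrary assumptions, the error numbers are those documented for the released SQL Server 2014, and RPM's actual error handling is not shown in this paper.

```sql
-- Sketch of retry logic for a write conflict on a memory-optimized table.
DECLARE @RetriesLeft int = 3;    -- arbitrary retry budget

WHILE @RetriesLeft > 0
BEGIN
    BEGIN TRY
        BEGIN TRANSACTION;

        -- WITH (SNAPSHOT) supplies the isolation level that interpreted
        -- Transact-SQL needs inside an explicit transaction.
        UPDATE dbo.Schedule WITH (SNAPSHOT)
        SET Minutes = 480,                    -- made-up value
            LastModifiedOnDate = GETDATE()
        WHERE ScheduleID = 500001;            -- made-up key

        COMMIT TRANSACTION;
        SET @RetriesLeft = 0;                 -- success: leave the loop
    END TRY
    BEGIN CATCH
        IF XACT_STATE() <> 0
            ROLLBACK TRANSACTION;

        SET @RetriesLeft = @RetriesLeft - 1;

        -- 41302 = update conflict, 41305 and 41325 = validation failures,
        -- 41301 = commit dependency failure. Any other error, or an exhausted
        -- retry budget, is re-raised to the caller.
        IF ERROR_NUMBER() NOT IN (41302, 41305, 41325, 41301) OR @RetriesLeft = 0
        BEGIN
            THROW;
        END
    END CATCH
END
```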

Feature Set

The best execution performance is obtained when using natively compiled stored procedures with memory-optimized tables. However, fewer Transact-SQL language constructs are allowed inside natively compiled stored procedures than in interpreted Transact-SQL, which offers the full feature set. In addition, natively compiled stored procedures can only access memory-optimized tables and cannot reference disk-based tables. [1]
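For illustration, a natively compiled stored procedure over the Schedule table defined later in this paper might look like the sketch below. The procedure name is hypothetical and is not taken from RPM, and the required options shown are those of the released SQL Server 2014, which is an assumption with respect to CTP1.

```sql
-- Hypothetical procedure; not part of the RPM code base.
CREATE PROCEDURE dbo.usp_GetScheduleByID_Native
    @ScheduleID int
WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')
    -- Only memory-optimized tables may be referenced here, and
    -- SCHEMABINDING requires two-part names and an explicit column list.
    SELECT ScheduleID, BookingID, ResourceID, [Date], Minutes
    FROM dbo.Schedule
    WHERE ScheduleID = @ScheduleID;
END
GO

-- Usage (made-up key value):
EXEC dbo.usp_GetScheduleByID_Native @ScheduleID = 500001;
```

The migration described in this paper keeps the existing interpreted procedures and adds WITH (SNAPSHOT) hints instead, because those procedures also reference disk-based tables.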

What is RPM?

The Resource and Project Management (RPM) application is a Microsoft IT LOB application. RPM

automates much of the resource management functionality. But it does not replace the level of

communication required among RMs, EMs, and Team Members for Resource Management to be

effective. The RPM application includes the following:

• Gives RMs the visibility needed to meet demand through resource requests. RMs can match a resource to an engagement based on criteria such as Skill and Availability. RMs can view global and local resources and can request global assistance if needed.

• Allows requestors and RMs to provide a clear description of the requirements for an engagement and to view the progress and status of resource requests and bookings.

• Allows PDMs to tailor the training of consultants to the needs of the market by providing supply and demand data.

• Provides various reports that track lead time, how quickly requests are being filled, bench time, and many other factors.

The various systems that interact with RPM include the following:

[Diagram labels: Account Master, Contract System, Downstream Reporting Systems, RPM, Users, Vacation Plan System, Skills Master System]

Figure 2: Interaction of systems used within services business

Performance issues in RPM

In FY13, after performance tuning on the DEV box, there was an average 40-50% improvement in the following interfaces:

Request Get

Bookings Get

Project Search

However, I/O latch waits continued to affect RPM performance. An I/O latch wait occurs when a session needs to wait for a page because of physical I/O. For example, when a page that is not yet in the buffer pool is needed for reading or writing, SQL Server has to retrieve it from disk.

In this situation, sessions experienced delays, hindering RPM performance.
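One way to see how much time sessions spend in these waits is to query the wait statistics DMV. The query below is a generic sketch and is not taken from the RPM analysis; the figures it returns are cumulative since the last instance restart (or since the statistics were last cleared).

```sql
-- Page I/O latch waits accumulated on this instance.
SELECT wait_type,
       waiting_tasks_count,
       wait_time_ms,
       max_wait_time_ms,
       CAST(1.0 * wait_time_ms / NULLIF(waiting_tasks_count, 0)
            AS decimal(18, 2)) AS avg_wait_ms
FROM sys.dm_os_wait_stats
WHERE wait_type LIKE 'PAGEIOLATCH%'
ORDER BY wait_time_ms DESC;
```

Capturing this output before and after a change makes it possible to compare the difference between the two snapshots rather than the raw cumulative totals.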

Hekaton as a solution

After analyzing the performance issues faced by RPM and considering the benefits of Hekaton, Hekaton was explored as a solution to the performance concerns.

Figure 3: Understanding the performance gains

Hekaton is an ideal solution to latch-based performance difficulties for the following reasons:

• Memory-optimized tables (Hekaton) are stored differently than disk-based tables

• Data is accessed and processed more efficiently

• No locks are acquired, and thus there is no waiting because of blocking

• Far less log data is generated, so fewer log writes are needed

RPM to Hekaton Migration Case Study

Context

Resource Management is responsible for matching resources to projects, ensuring that the right consultants are on the right projects at the right time. It is a managerial process that directly affects customer satisfaction, business results, and the motivation, productivity, and professional development of the consulting workforce. The Resource and Project Management (RPM) application automates as much of the functionality of resource management as possible. It does not replace the level of communication required among RMs, EMs, and Team Members for Resource Management to be effective.

Currently, the RPM database server uses SQL Server 2008 R2. Because of I/O latch waits, sessions experience delays, which decreases RPM performance. In this white paper, we migrate to SQL Server 2014 and implement ‘Hekaton’ as a solution. We also share the challenges faced, the solutions, and the improvements observed in the migration process.

Objectives

Perform a proof of concept that migrates the RPM database server from SQL Server 2008 R2 to

SQL Server 2014.

Leverage the ‘Hekaton’ feature of SQL Server 2014 to overcome the performance burden caused

by page I/O latch waits.

Execute extensive performance analysis over the existing and the to-be environments.

Explain the challenges faced and the improvements observed in the migration process.

Environments for performance analysis

The various performance analyses completed throughout the migration process used the following

three environments:

| SQL Server | Server Name | RAM | Secondary Memory | Location |
|---|---|---|---|---|
| 2008 | hyd2rxdssdev02 | 8 GB | 230 GB | hyd |
| 2014 - 1 | hydrpmvm14 | 8 GB | 100 GB | hyd |
| 2014 - 2 | hydrpmvm14 – with Memory optimized Schedule table | 12 GB | 100 GB | hyd |

Table 1: SQL Server versions and complete system configurations under which the performance analyses were done

Identification of Top 5 scenarios

In order to execute the performance analysis of RPM in the existing (SQL Server 2008) and to-be (SQL

Server 2014) environments, five scenarios and their performance are evaluated:

1. Bookings search

2. Requests search

3. Resources search

4. Projects search

5. Project schedule search

The performance analysis in this white paper focuses on these five scenarios.

Performance analysis after vanilla migration to SQL Server 2014

At the first stage of analysis, there is a performance improvement in the five scenarios after a vanilla

migration to SQL Server 2014. Results include:

Bookings Procedure (result in minutes):

| Version | State | Rows returned | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 |
|---|---|---|---|---|---|---|---|
| 2008 | Existing environment | 16854 | 1:31 | 0:19 | 0:19 | 0:19 | 0:20 |
| 2014 - 1 | Vanilla migration to SQL Server 2014 CTP1 | 16890 | 0:46 | 0:18 | 0:19 | 0:18 | 0:16 |

Table 2: Performance (in minutes) for each run of Bookings Procedure in various environments

Requests Procedure (result in minutes):

| Version | State | Rows returned | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 |
|---|---|---|---|---|---|---|---|
| 2008 | Existing environment | 12474 | 0:49 | 0:20 | 0:17 | 0:17 | 0:21 |
| 2014 - 1 | Vanilla migration to SQL Server 2014 CTP1 | 12475 | 0:31 | 0:17 | 0:17 | 0:17 | 0:18 |

Table 3: Performance (in minutes) for each run of Requests Procedure in various environments

Resources Procedure (result in minutes):

| Version | State | Rows returned | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 |
|---|---|---|---|---|---|---|---|
| 2008 | Existing environment | 40906 | 2:24 | 0:34 | 0:28 | 0:26 | 0:31 |
| 2014 - 1 | Vanilla migration to SQL Server 2014 CTP1 | 40916 | 1:44 | 0:15 | 0:14 | 0:14 | 0:12 |

Table 4: Performance (in minutes) for each run of Resources Procedure in various environments

Projects Procedure (result in minutes):

| Version | State | Rows returned | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 |
|---|---|---|---|---|---|---|---|
| 2008 | Existing environment | 1064 | 0:16 | 0:16 | 0:01 | 0:01 | 0:01 |
| 2014 - 1 | Vanilla migration to SQL Server 2014 CTP1 | 1063 | 0:05 | 0:01 | 0:01 | 0:01 | 0:01 |

Table 5: Performance (in minutes) for each run of Projects Procedure in various environments

Project Schedule Procedure (result in minutes):

| Version | State | Rows returned | Run 1 | Run 2 | Run 3 | Run 4 | Run 5 |
|---|---|---|---|---|---|---|---|
| 2008 | Existing environment | 459 | 0:08 | 0:00 | 0:00 | 0:00 | 0:00 |
| 2014 - 1 | Vanilla migration to SQL Server 2014 CTP1 | 459 | 0:05 | 0:00 | 0:00 | 0:00 | 0:00 |

Table 6: Performance (in minutes) for each run of Project Schedule Procedure in various environments

As Tables 2 through 6 show, the first run of every scenario is faster after even a simple vanilla migration, and later runs are equal or faster. This performance improvement is attributed to the improved cardinality estimator in SQL Server 2014.
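The first-run figures from Tables 2 through 6 can be turned into a percentage reduction in elapsed time with a short query. This is only a worked sketch: it assumes the table values are in minutes:seconds (so 1:31 is 91 seconds), and the formula used here, (before - after) / before, is an assumption that is not necessarily the one behind the improvement percentages quoted later in this paper.

```sql
-- First-run elapsed times from Tables 2 to 6, converted to seconds
-- (assumes the m:ss reading of the table values).
SELECT s.Scenario,
       s.Sql2008Seconds,
       s.Sql2014Seconds,
       CAST(100.0 * (s.Sql2008Seconds - s.Sql2014Seconds) / s.Sql2008Seconds
            AS decimal(5, 1)) AS PercentReduction
FROM (VALUES
        ('Bookings',          91,  46),
        ('Requests',          49,  31),
        ('Resources',        144, 104),
        ('Projects',          16,   5),
        ('Project Schedule',   8,   5)
     ) AS s (Scenario, Sql2008Seconds, Sql2014Seconds)
ORDER BY PercentReduction DESC;
```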

AMR Report: Identification of the top candidate table for migration

It can be difficult to determine which targets should take advantage of In-Memory OLTP. SQL Server

2014 CTP1 includes the AMR (Analysis, Migration, and Reporting) Tool to help decide which tables and

stored procedures can move to Hekaton or In-Memory OLTP. This tool is integrated with the Data

Collector.

Consider using the AMR Tool in the following scenarios:

Unsure which tables and stored procedures should migrate to In-Memory OLTP

Seeking validation for the migration plans

Evaluating the work needed to migrate tables and stored procedures

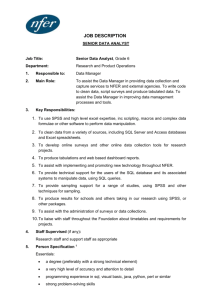

The following AMR report (Table usage) is generated and determines the most suitable candidate for

migration to Hekaton:

Figure 4: RPM AMR Report indicating ‘Schedule’ as the migration candidate

The report shows graphically which tables are the best candidates to convert to In-Memory OLTP. The left axis indicates ‘Gain’, that is, how much you gain if you convert the table. The bottom axis indicates ‘Migration Work’, that is, the work needed to convert it. The best candidates for In-Memory OLTP appear in the top-right of the graph. Based on the report, the ‘Schedule’ table is the best candidate for migration to Hekaton: it requires minimal migration work and offers a high gain after migration.

Migration to Hekaton

Procedure

1) Before creating memory optimized tables, create the file group and declare the database as memory

optimized:

ALTER DATABASE RPM ADD FILEGROUP rpm_mod CONTAINS MEMORY_OPTIMIZED_DATA;

GO

ALTER DATABASE RPM ADD FILE (NAME='rpm_mod', FILENAME='C:\RPMHekatonFilegroup\rpm_mod') TO FILEGROUP rpm_mod;

GO

2) Determine the bucket count and create the memory optimized table:

The Schedule table (the migration candidate in the AMR report) is converted to a memory-optimized Schedule table. In CTP1, only NONCLUSTERED HASH indexes can be used in a memory-optimized table. A hash index consists of an array of pointers. Each element of the array is called a hash bucket. The bucket count is chosen to be equal to or greater than the expected cardinality (the number of unique values) of the index key columns, which makes it more likely that each bucket has only rows with a single value in its chain.
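The sizing rule above can be sketched as a short script that is run against the existing disk-based Schedule table before migration. It counts the distinct values of one index key (BookingID is used here as an example) and rounds the result up to a power of two, because SQL Server rounds BUCKET_COUNT up to the next power of two in any case. The variable names are illustrative, and this is not necessarily how the value used in this paper was derived.

```sql
-- Run against the disk-based Schedule table before it is migrated.
DECLARE @DistinctKeys bigint =
    (SELECT COUNT_BIG(DISTINCT BookingID) FROM dbo.Schedule);

-- Smallest power of two that is >= the number of distinct key values.
DECLARE @BucketCount bigint = 1;
WHILE @BucketCount < @DistinctKeys
    SET @BucketCount = @BucketCount * 2;

SELECT @DistinctKeys                AS DistinctBookingIDs,
       @BucketCount                 AS SuggestedBucketCount,
       @BucketCount * 8 / 1048576.0 AS BucketArrayMB;   -- 8 bytes per bucket
```

Each bucket occupies 8 bytes whether or not it is used, so the bucket count of 33,554,432 (2^25) used below costs 256 MB per index. Oversizing every index therefore adds to the RAM requirement discussed under Challenges.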

The memory optimized schedule table is created using the following:

CREATE TABLE [dbo].[Schedule]
(
    [ScheduleID] [int] NOT NULL,
    [BookingID] [int] NOT NULL,
    [ResourceID] [int] NOT NULL,
    [Date] [datetime] NOT NULL,
    [Minutes] [int] NOT NULL,
    [LastModifiedOnDate] [datetime] NOT NULL,
    [LastModifiedByID] [int] NOT NULL,
    [LastModifiedDate] [datetime] NULL,

    -- All indexes are hash indexes with the same bucket count.
    INDEX [IX_Schedule_BkId_ScheId] NONCLUSTERED HASH ([BookingID], [ScheduleID])
        WITH (BUCKET_COUNT = 33554432),
    INDEX [IX_Schedule_Covering_Index] NONCLUSTERED HASH ([BookingID], [ResourceID])
        WITH (BUCKET_COUNT = 33554432),
    INDEX [IX_ScheduleDate] NONCLUSTERED HASH ([Date])
        WITH (BUCKET_COUNT = 33554432),
    PRIMARY KEY NONCLUSTERED HASH ([ScheduleID])
        WITH (BUCKET_COUNT = 33554432),
    INDEX [ScheduleIX1D] NONCLUSTERED HASH ([ResourceID])
        WITH (BUCKET_COUNT = 33554432),
    INDEX [ScheduleIX2D] NONCLUSTERED HASH ([BookingID])
        WITH (BUCKET_COUNT = 33554432),
    INDEX [UI_DATE_RESOURCE_BOOKING] NONCLUSTERED HASH ([ResourceID], [BookingID], [Date])
        WITH (BUCKET_COUNT = 33554432)
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)
GO

When the new memory-optimized Schedule table is created, data from the disk-based Schedule table is inserted into it. The old Schedule table is renamed (instead of being dropped outright) and can be used as a backup. All further transactions on the Schedule table are performed on the new memory-optimized version of the Schedule table. Because no UNIQUE indexes other than the PRIMARY KEY are allowed, insertions are tracked by using Sequences.
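The data copy and the sequence-based key generation might look roughly like the sketch below. The name Schedule_DiskBased, the sequence name, and the start value are hypothetical, and the paper does not show the actual implementation, so treat this as one possible shape rather than the RPM code. It assumes READ_COMMITTED_SNAPSHOT is OFF for the database, because the INSERT ... SELECT touches both a disk-based and a memory-optimized table.

```sql
-- 1. Keep the disk-based table under a new (hypothetical) name.
EXEC sp_rename N'dbo.Schedule', N'Schedule_DiskBased';
GO

-- 2. Create the memory-optimized dbo.Schedule with the CREATE TABLE
--    statement shown above.

-- 3. Copy the rows. LastModifiedOnDate is now a datetime column, so it is
--    populated with the current time instead of the old row version value.
INSERT INTO dbo.Schedule
    (ScheduleID, BookingID, ResourceID, [Date], Minutes,
     LastModifiedOnDate, LastModifiedByID, LastModifiedDate)
SELECT ScheduleID, BookingID, ResourceID, [Date], Minutes,
       GETDATE(), LastModifiedByID, LastModifiedDate
FROM dbo.Schedule_DiskBased;
GO

-- 4. Hypothetical sequence that replaces the IDENTITY property.
--    START WITH must be a constant; choose a value above MAX(ScheduleID).
CREATE SEQUENCE dbo.ScheduleIDSequence AS int
    START WITH 1000000      -- placeholder value
    INCREMENT BY 1;
GO

-- 5. New rows take their key from the sequence.
DECLARE @NewScheduleID int = NEXT VALUE FOR dbo.ScheduleIDSequence;
```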

Challenges

1) RAM size considerations

When the memory-optimized tables are accessed, pages are not read into cache from disk. All the data is stored in memory, all the time, which is an important difference between memory-optimized tables and disk-based tables. A set of checkpoint files (used only for recovery purposes) is created on filestream filegroups that track changes to the data. The checkpoint files are append-only. Because the whole table must fit in memory, monitor the size of the memory-optimized table against the RAM capacity. To help optimize performance, you can modify the RAM available. If SQL Server resides on a virtual machine, the amount of RAM is configurable. For example, in Table 1 the RAM was increased from 8 GB to 12 GB for the environment with the memory-optimized Schedule table. To determine the new RAM size, use the following steps:

1. Measure the size of the table to be migrated by using sp_spaceused. This stored procedure gives the data size plus the index size.

2. New RAM size = Old RAM + Memory-optimized table size
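The measurement step can be sketched as follows. The first statement is run against the disk-based table before migration. The second query uses a DMV from the released SQL Server 2014 to check the memory actually held after migration; its availability in CTP1 is an assumption.

```sql
-- Before migration: reserved, data, and index size of the disk-based table.
EXEC sp_spaceused N'dbo.Schedule';

-- After migration: memory held by the memory-optimized table and its indexes.
SELECT OBJECT_NAME(object_id) AS table_name,
       memory_allocated_for_table_kb,
       memory_used_by_table_kb,
       memory_allocated_for_indexes_kb,
       memory_used_by_indexes_kb
FROM sys.dm_db_xtp_table_memory_stats
WHERE object_id = OBJECT_ID(N'dbo.Schedule');
```

The sp_spaceused figure is only a starting estimate: hash index bucket arrays and row versions are sized differently in memory than B-tree pages on disk, so it is worth re-checking the actual usage once the table is loaded.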

2) Reference constraints migration

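When creating memory-optimized tables, no FOREIGN KEY or CHECK constraints are allowed. Instead, the equivalent checks are enforced in the stored procedures that modify the memory-optimized tables, as in the following excerpt:

-- Stands in for the reference to Bookings: set the flag if the parent row exists.
IF EXISTS (SELECT 1 FROM BOOKINGS
           WHERE BOOKINGID = @BookingID
             AND RESOURCEID = @ResourceID)
    SELECT @Bookingsflag = 1

-- @MSP_RESOURCES_EXTflag is set elsewhere in the procedure (not shown in this excerpt).
-- Insert only if no matching Schedule row exists and both parent checks passed.
IF NOT EXISTS (SELECT ScheduleID
               FROM Schedule WITH (SNAPSHOT)
               WHERE BookingID = @BookingID
                 AND ResourceID = @ResourceID
                 AND [Date] = @Date)
   AND @Bookingsflag = 1
   AND @MSP_RESOURCES_EXTflag = 1
BEGIN
    -- Excerpt: ScheduleID and LastModifiedOnDate are NOT NULL in the table
    -- definition and must also be supplied; they are not shown here.
    INSERT Schedule (BookingID, ResourceID, [Date], Minutes, LastModifiedByID, LastModifiedDate)
    VALUES (@BookingID, @ResourceID, @Date, ISNULL(@Hours, 0) * 60, @LoggedInUserID, GETUTCDATE())
END
ELSE
BEGIN
    SET @ErrNo = 51104
    Goto Crash   -- error-handling label defined elsewhere in the procedure
END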

3) Indices Migration

When creating memory-optimized tables, no UNIQUE indexes other than the PRIMARY KEY are allowed. Also, range indexes are not available in CTP1 (they become available in CTP2). As a result, all indexes are converted to NONCLUSTERED HASH indexes.

4) Read isolation issue

READ_COMMITTED_SNAPSHOT is supported for memory-optimized tables with autocommit transactions, and only if the query does not access any disk-based tables. When accessing a memory-optimized table from an explicit or implicit transaction that uses interpreted Transact-SQL, an isolation level table hint is required. Therefore, all dependent procedures must include WITH (SNAPSHOT) on their references to the memory-optimized table.
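As a minimal sketch, a read of the memory-optimized Schedule table inside an explicit transaction carries the hint as shown below. The variable value is made up.

```sql
DECLARE @BookingID int = 1001;   -- made-up value

BEGIN TRANSACTION;

-- The table hint gives the memory-optimized table its own isolation level
-- for this statement; without it the statement fails in an explicit transaction.
SELECT s.ScheduleID, s.ResourceID, s.[Date], s.Minutes
FROM dbo.Schedule AS s WITH (SNAPSHOT)
WHERE s.BookingID = @BookingID;

COMMIT TRANSACTION;
```

The same hint appears in the constraint-check excerpt under "Reference constraints migration", where the IF NOT EXISTS query reads Schedule WITH (SNAPSHOT).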

Post Migration Analysis

The post migration analysis is done at two levels: 1) Read level 2) Write level.

The procedures that have no dependencies on the memory optimized Schedule table show no

improvement in performance. Of the five scenarios, the following procedures are dependent on the

Schedule table and have improved performance:

• Bookings Procedure

• Resources Procedure

• Get Project Schedule Procedure

Hekaton Read level performance:

At the read level, the Bookings procedure demonstrated a 187% improvement in the first run:

[Chart: Bookings Procedure. Vertical axis: time in minutes (0:00 to 1:40). Horizontal axis: Run 1 to Run 5. Series: Existing environment (SQL Server 2008); Vanilla migration to SQL Server 2014 CTP1; SQL Server 2014 with Hekaton.]

Figure 5: Performance (in minutes) of the Booking procedure in different environments

The Resources procedure demonstrated a 215% improvement in the first run:

[Chart: Resources Procedure. Vertical axis: time in minutes (0:00 to 2:24). Horizontal axis: Run 1 to Run 5. Series: Existing environment (SQL Server 2008); Vanilla migration to SQL Server 2014 CTP1; SQL Server 2014 with Hekaton.]

Figure 6: Performance (in minutes) of the Resource procedure in different environments

The Get Project Schedule procedure demonstrated a 150% improvement in the first run:

[Chart: Get Project Schedule Procedure. Vertical axis: time in minutes (0:00 to 0:08). Horizontal axis: Run 1 to Run 5. Series: Existing environment (SQL Server 2008); Vanilla migration to SQL Server 2014 CTP1; SQL Server 2014 with Hekaton.]

Figure 7: Performance (in minutes) of the Get Project Schedule procedure in different environments

Hekaton write level constraints, solutions, and task completion

| Constraint | Solution |
|---|---|
| 1. IDENTITY columns not supported | Insertion of Primary Key ScheduleID is accomplished by using the Sequence table to track the IDs during insertion |
| 2. Timestamp constraint / row version constraint not supported | LastModifiedOnDate datatype converted to datetime not null |
| 3. DEFAULT constraint not supported | Ensuring assignment of GETDATE() to LastModifiedOnDate at each stage |

Conclusion

• Using SQL Server In-Memory OLTP, you can create and work with tables that are memory-optimized and efficient to manage. It also provides performance optimization for OLTP workloads despite the various constraints.

• After memory-optimizing the Schedule table, an average first-run gain of 184% was achieved across the three procedures that depend on it (187%, 215%, and 150%).

• Selective and incremental migration to In-Memory OLTP provides predictable low latency and high throughput with linear scaling for database transactions.

Appendix

Acknowledgements

Many thanks for the technical information and input provided by Prabhakaran Sethuraman (PRAB) and

Lakshminarayanan A B. We would like to acknowledge the leadership and support provided by

Hariharan Sethuraman. Special thanks to the Microsoft community on the Internet who have taken

painstaking efforts and provided useful information available anytime, anywhere.

References:

[1] Kalen Delaney, “SQL Server In-Memory OLTP Internals Overview for CTP1,” SQL Server Technical Article

[2] SQL Server team, “SQL Server Blog,” Microsoft TechNet Blogs

For more information:

http://www.microsoft.com/sqlserver/: SQL Server Web site

http://technet.microsoft.com/en-us/sqlserver/: SQL Server TechCenter

http://msdn.microsoft.com/en-us/sqlserver/: SQL Server DevCenter

http://blogs.technet.com/b/dataplatforminsider/archive/2013/11/12/sql-server-2014-in-memory-oltpnonclustered-indexes-for-memory-optimized-tables.aspx
