RUNNING HEAD: Task-constraints facilitate perspective inference

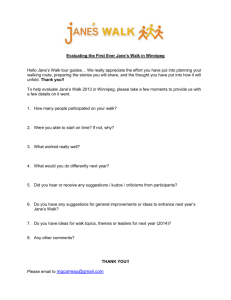

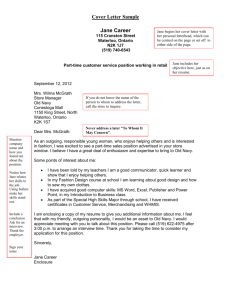
