ttk2013990131s1 - IEEE Computer Society


AUTHOR ET AL.: TITLE

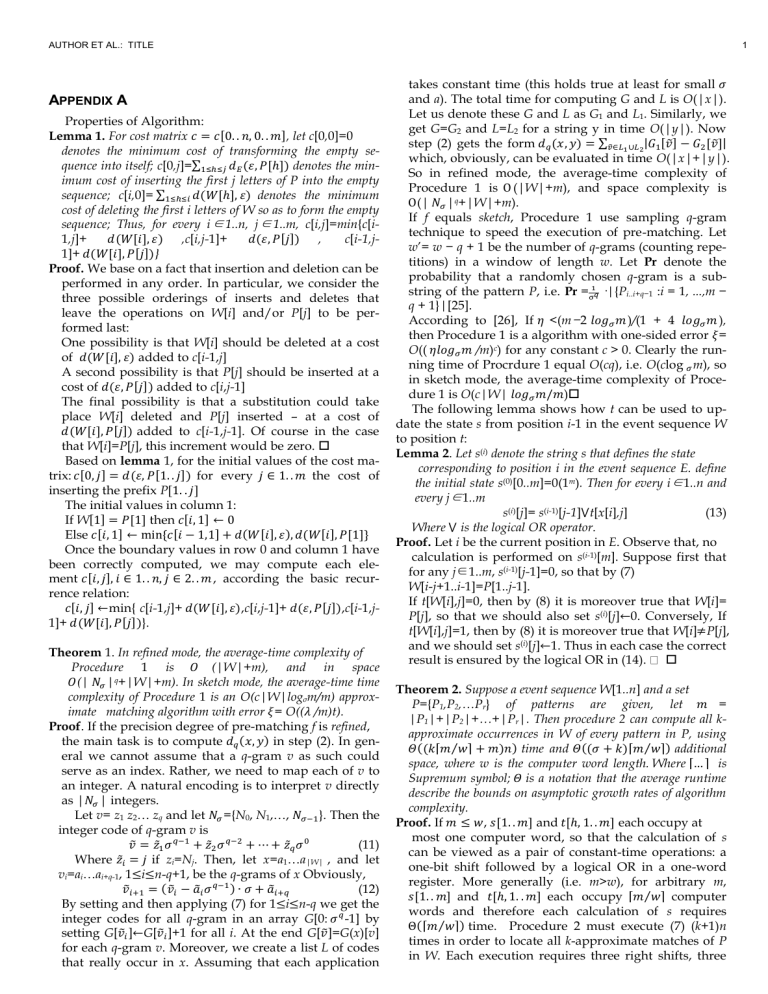

APPENDIX A

Properties of the Algorithms

Lemma 1. For the cost matrix $c = c[0..n, 0..m]$, let $c[0,0] = 0$ denote the minimum cost of transforming the empty sequence into itself; let $c[0,j] = \sum_{1 \le h \le j} d(\varepsilon, P[h])$ denote the minimum cost of inserting the first $j$ letters of $P$ into the empty sequence; and let $c[i,0] = \sum_{1 \le h \le i} d(W[h], \varepsilon)$ denote the minimum cost of deleting the first $i$ letters of $W$ so as to form the empty sequence. Then, for every $i \in 1..n$ and $j \in 1..m$,

$$c[i,j] = \min\{\, c[i-1,j] + d(W[i], \varepsilon),\; c[i,j-1] + d(\varepsilon, P[j]),\; c[i-1,j-1] + d(W[i], P[j]) \,\}.$$

Proof. The proof rests on the fact that insertions and deletions can be performed in any order. In particular, we consider the three possible orderings of insertions and deletions that leave the operations on $W[i]$ and/or $P[j]$ to be performed last:

One possibility is that $W[i]$ is deleted, at a cost of $d(W[i], \varepsilon)$ added to $c[i-1,j]$.

A second possibility is that $P[j]$ is inserted, at a cost of $d(\varepsilon, P[j])$ added to $c[i,j-1]$.

The final possibility is that a substitution takes place ($W[i]$ deleted and $P[j]$ inserted), at a cost of $d(W[i], P[j])$ added to $c[i-1,j-1]$. Of course, when $W[i] = P[j]$, this increment is zero. Since an optimal transformation must end in one of these three ways, $c[i,j]$ is the minimum of the three candidate costs.
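The recurrence of Lemma 1 translates directly into a table-filling routine. The Python sketch below is illustrative only: the cost function `d`, the use of `None` in place of $\varepsilon$, the unit-cost default, and the name `cost_matrix` are assumptions, not part of the paper.

```python
from typing import Callable, Optional, Sequence

# Hypothetical cost model: d(a, b), where None plays the role of the empty symbol ε.
Cost = Callable[[Optional[str], Optional[str]], float]


def unit_cost(a: Optional[str], b: Optional[str]) -> float:
    """0 for a match, 1 for any insertion, deletion or substitution."""
    return 0.0 if a == b else 1.0


def cost_matrix(W: Sequence[str], P: Sequence[str], d: Cost = unit_cost) -> list[list[float]]:
    """Fill c[0..n][0..m] as in Lemma 1 (transforming all of W into all of P)."""
    n, m = len(W), len(P)
    c = [[0.0] * (m + 1) for _ in range(n + 1)]
    for j in range(1, m + 1):              # c[0, j]: insert the first j letters of P
        c[0][j] = c[0][j - 1] + d(None, P[j - 1])
    for i in range(1, n + 1):              # c[i, 0]: delete the first i letters of W
        c[i][0] = c[i - 1][0] + d(W[i - 1], None)
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c[i][j] = min(
                c[i - 1][j] + d(W[i - 1], None),          # delete W[i]
                c[i][j - 1] + d(None, P[j - 1]),          # insert P[j]
                c[i - 1][j - 1] + d(W[i - 1], P[j - 1]),  # substitute (free if equal)
            )
    return c
```

With unit costs this is the ordinary Levenshtein distance; for example, `cost_matrix("kitten", "sitting")[-1][-1]` evaluates to `3.0`.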

By Lemma 1, the initial values of the cost matrix are $c[0,j] = d(\varepsilon, P[1..j])$ for every $j \in 1..m$, that is, the cost of inserting the prefix $P[1..j]$.

The initial values in column 1 are, for every $i \in 1..n$:

If $W[i] = P[1]$ then $c[i,1] \leftarrow 0$

Else $c[i,1] \leftarrow \min\{c[i-1,1] + d(W[i], \varepsilon),\ d(W[i], P[1])\}$

Once the boundary values in row 0 and column 1 have been correctly computed, we may compute each element $c[i,j]$, $i \in 1..n$, $j \in 2..m$, according to the basic recurrence relation:

$$c[i,j] \leftarrow \min\{\, c[i-1,j] + d(W[i], \varepsilon),\; c[i,j-1] + d(\varepsilon, P[j]),\; c[i-1,j-1] + d(W[i], P[j]) \,\}.$$
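The boundary rules and the basic recurrence can be combined into a single routine. This is a minimal sketch under stated assumptions: unit costs, the column-1 condition read as $W[i] = P[1]$, and the hypothetical convention that position $i$ is reported when $c[i,m] \le k$; the text does not say how the finished matrix is consumed, and `approximate_end_positions` is an illustrative name.

```python
from typing import Optional, Sequence


def approximate_end_positions(W: Sequence[str], P: Sequence[str], k: int) -> list[int]:
    """Return 1-based positions i of W with c[i, m] <= k (unit costs assumed, m >= 1)."""

    def d(a: Optional[str], b: Optional[str]) -> int:
        return 0 if a == b else 1             # None stands for ε

    n, m = len(W), len(P)
    c = [[0] * (m + 1) for _ in range(n + 1)]
    for j in range(1, m + 1):                 # row 0: cost of inserting P[1..j]
        c[0][j] = c[0][j - 1] + d(None, P[j - 1])
    for i in range(1, n + 1):                 # column 1, following the rule in the text
        if W[i - 1] == P[0]:
            c[i][1] = 0
        else:
            c[i][1] = min(c[i - 1][1] + d(W[i - 1], None), d(W[i - 1], P[0]))
    for i in range(1, n + 1):                 # basic recurrence for j in 2..m
        for j in range(2, m + 1):
            c[i][j] = min(c[i - 1][j] + d(W[i - 1], None),
                          c[i][j - 1] + d(None, P[j - 1]),
                          c[i - 1][j - 1] + d(W[i - 1], P[j - 1]))
    return [i for i in range(1, n + 1) if c[i][m] <= k]
```

For `W = "abcde"` and `P = "bcd"`, the last column is 3, 2, 1, 0, 1, so `k = 0` yields `[4]` and `k = 1` yields `[3, 4, 5]`.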

Theorem 1. In refined mode, the average time complexity of Procedure 1 is $O(|W| + m)$ and its space complexity is $O(|N_\sigma|^q + |W| + m)$. In sketch mode, Procedure 1 is an approximate matching algorithm with average time complexity $O(c|W|\log_\sigma m / m)$ and error $\xi = O((\lambda/m)^t)$.

Proof. If the precision degree $f$ of pre-matching is refined, the main task is to compute $d_q(x,y)$ in step (2). In general, we cannot assume that a q-gram $v$ as such can serve as an index. Rather, we need to map each $v$ to an integer. A natural encoding is to interpret $v$ directly as a $|N_\sigma|$-ary integer.

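Let $v = z_1 z_2 \cdots z_q$ and let $N_\sigma = \{N_0, N_1, \ldots, N_{\sigma-1}\}$. Then the integer code of the q-gram $v$ is

$$\tilde v = \tilde z_1 \sigma^{q-1} + \tilde z_2 \sigma^{q-2} + \cdots + \tilde z_q \sigma^0 \qquad (11)$$

where $\tilde z_i = j$ if $z_i = N_j$. Now let $x = a_1 \cdots a_{|W|}$, and let $v_i = a_i \cdots a_{i+q-1}$, $1 \le i \le n-q+1$, be the q-grams of $x$. Obviously,

$$\tilde v_{i+1} = (\tilde v_i - \tilde a_i \sigma^{q-1}) \cdot \sigma + \tilde a_{i+q} \qquad (12)$$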

By computing $\tilde v_1$ from (11) and then applying (12) for $1 \le i \le n-q$, we obtain the integer codes of all q-grams of $x$. The q-gram profile is collected in an array $G[0 : \sigma^q - 1]$ by setting $G[\tilde v_i] \leftarrow G[\tilde v_i] + 1$ for all $i$. At the end, $G[\tilde v] = G(x)[v]$ for each q-gram $v$. Moreover, we create a list $L$ of the codes that actually occur in $x$. Assuming that each application of (12) takes constant time (this holds at least for small $\sigma$ and $q$), the total time for computing $G$ and $L$ is $O(|x|)$.
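The profile construction can be sketched as follows, assuming the alphabet $N_\sigma$ is supplied as an ordered sequence of symbols (so that $\tilde z = j$ for the $j$-th symbol); `qgram_profile` is a hypothetical name. The first code comes from (11) in Horner form, and every later code from the rolling update (12), so each q-gram costs O(1) arithmetic.

```python
from typing import Sequence


def qgram_profile(x: Sequence[str], alphabet: Sequence[str], q: int) -> tuple[list[int], list[int]]:
    """Return (G, L): G[code] counts the q-gram with that code in x, and L lists
    each occurring code once, in order of first appearance."""
    sigma = len(alphabet)
    rank = {sym: j for j, sym in enumerate(alphabet)}   # z~ = j if z = N_j
    a = [rank[ch] for ch in x]
    G = [0] * sigma ** q                                # array G[0 : σ^q - 1]
    L: list[int] = []
    if len(a) < q:
        return G, L
    code = 0
    for z in a[:q]:                                     # (11) for v~_1, Horner form
        code = code * sigma + z
    top = sigma ** (q - 1)
    for i in range(len(a) - q + 1):
        if G[code] == 0:
            L.append(code)                              # first occurrence of this code
        G[code] += 1
        if i + q < len(a):                              # (12): roll to the next q-gram
            code = (code - a[i] * top) * sigma + a[i + q]
    return G, L
```

For example, `qgram_profile("ACGTA", "ACGT", 2)` gives `L == [1, 6, 11, 12]`, the codes of AC, CG, GT and TA.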

Let us denote these $G$ and $L$ by $G_1$ and $L_1$. Similarly, we obtain $G = G_2$ and $L = L_2$ for a string $y$ in time $O(|y|)$. Step (2) then takes the form

$$d_q(x,y) = \sum_{\tilde v \in L_1 \cup L_2} |G_1[\tilde v] - G_2[\tilde v]|,$$

which can be evaluated in time $O(|x| + |y|)$, since $|L_1| \le |x|$ and $|L_2| \le |y|$.
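Given two profiles, step (2) reduces to one pass over the occurring codes. The sketch below assumes $G_1, L_1, G_2, L_2$ are laid out as described above (arrays indexed by code and lists of occurring codes); the function name is hypothetical. Taking the union as a set ensures that a code occurring in both strings is counted once.

```python
from typing import Sequence


def qgram_distance(G1: Sequence[int], L1: Sequence[int],
                   G2: Sequence[int], L2: Sequence[int]) -> int:
    """d_q(x, y) = sum over codes in L1 ∪ L2 of |G1[code] - G2[code]|."""
    return sum(abs(G1[v] - G2[v]) for v in set(L1) | set(L2))
```

For $x$ = ACGT and $y$ = ACGA over the alphabet ACGT with $q = 2$, the profiles differ only in GT and GA, so $d_q(x,y) = 2$.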

So, in refined mode, the average time complexity of Procedure 1 is $O(|W| + m)$, and its space complexity is $O(|N_\sigma|^q + |W| + m)$.

If $f$ equals sketch, Procedure 1 uses a q-gram sampling technique to speed up pre-matching. Let $w' = w - q + 1$ be the number of q-grams (counting repetitions) in a window of length $w$. Following [25], let $Pr$ denote the probability that a randomly chosen q-gram is a substring of the pattern $P$, i.e.,

$$Pr = \frac{1}{\sigma^q} \cdot \left|\{P_{i..i+q-1} : i = 1, \ldots, m-q+1\}\right|.$$
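The probability $Pr$ can be computed by collecting the distinct q-grams of $P$. The sketch assumes, as the formula implies, that a random q-gram is drawn uniformly from all $\sigma^q$ possibilities; the function name is hypothetical.

```python
def prob_qgram_in_pattern(P: str, q: int, sigma: int) -> float:
    """Pr = |{distinct q-grams of P}| / sigma**q."""
    distinct = {P[i:i + q] for i in range(len(P) - q + 1)}
    return len(distinct) / sigma ** q
```

For example, for $P$ = abab, $q = 2$ and $\sigma = 2$, the distinct q-grams are ab and ba, so $Pr = 2/4 = 0.5$.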

According to [26], if $\eta < (m - 2\log_\sigma m)/(1 + 4\log_\sigma m)$, then Procedure 1 is an algorithm with one-sided error $\xi = O((\eta \log_\sigma m / m)^c)$ for any constant $c > 0$. Since the work spent on each inspected window is $O(cq)$, i.e., $O(c\log_\sigma m)$, and on average $O(|W|/m)$ windows are inspected, the average time complexity of Procedure 1 in sketch mode is $O(c|W|\log_\sigma m / m)$.
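To make the sampling idea concrete, the sketch below shows a generic q-gram sampling filter: it draws $c$ q-gram positions from a window and counts how many sampled q-grams do not occur in $P$. The acceptance rule (`max_misses`) and all names are hypothetical, since the text does not spell out Procedure 1's exact rule; [26] gives the threshold behind the stated error bound. The per-window work is $c$ lookups of length-$q$ substrings, in line with the $O(cq)$ cost above.

```python
import random


def sampled_window_check(window: str, pattern_qgrams: set[str], q: int, c: int,
                         max_misses: int, rng: random.Random) -> bool:
    """Hypothetical sketch-mode filter: sample c q-grams of the window and keep the
    window as a candidate unless more than max_misses samples are absent from P."""
    positions = len(window) - q + 1           # w' = w - q + 1 q-grams in the window
    if positions <= 0:
        return True                           # too short to sample: keep as candidate
    misses = 0
    for _ in range(c):                        # c samples of length q: O(cq) work
        start = rng.randrange(positions)
        if window[start:start + q] not in pattern_qgrams:
            misses += 1
    return misses <= max_misses
```

A window made only of q-grams of $P$ is always kept, and a window sharing no q-gram with $P$ is rejected whenever `max_misses < c`, regardless of the random seed.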

The following lemma shows how $t$ can be used to update the state $s$ from position $i-1$ to position $i$ in the event sequence $W$:

Lemma 2. Let $s^{(i)}$ denote the string $s$ that defines the state corresponding to position $i$ in the event sequence $W$. Define the initial state $s^{(0)}[0..m] = 01^m$, with $s^{(i)}[0] = 0$ for every $i$. Then, for every $i \in 1..n$ and every $j \in 1..m$,

$$s^{(i)}[j] = s^{(i-1)}[j-1] \lor t[W[i], j] \qquad (13)$$

where $\lor$ is the logical OR operator.

Proof. Let $i$ be the current position in $W$. Observe that no calculation is performed on $s^{(i-1)}[m]$. Suppose first that, for some $j \in 1..m$, $s^{(i-1)}[j-1] = 0$, so that by (7) $W[i-j+1..i-1] = P[1..j-1]$. If $t[W[i], j] = 0$, then by (8) it is moreover true that $W[i] = P[j]$, so we should also set $s^{(i)}[j] \leftarrow 0$. Conversely, if $t[W[i], j] = 1$, then by (8) $W[i] \ne P[j]$, and we should set $s^{(i)}[j] \leftarrow 1$. Finally, if $s^{(i-1)}[j-1] = 1$, then $P[1..j-1]$ does not end at position $i-1$, so $P[1..j]$ cannot end at position $i$ and $s^{(i)}[j]$ must be 1. Thus, in each case the correct result is ensured by the logical OR in (13).
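Lemma 2 is the Shift-Or update for exact matching. The sketch below keeps $s^{(i)}[1..m]$ in a Python integer with $s[j]$ stored in bit $j-1$, so the zero brought in by the left shift plays the role of $s^{(i)}[0] = 0$. The name `shift_or_exact` and the dictionary representation of $t$ are assumptions for illustration; a position is reported when $s^{(i)}[m] = 0$.

```python
def shift_or_exact(W: str, P: str) -> list[int]:
    """Return the 1-based end positions of exact occurrences of P in W (Shift-Or)."""
    m = len(P)
    full = (1 << m) - 1                        # 1^m
    # t[a] has bit j-1 equal to 0 iff P[j] == a; symbols absent from P map to 1^m.
    t: dict[str, int] = {}
    for j, ch in enumerate(P):
        t[ch] = t.get(ch, full) & ~(1 << j)
    s = full                                   # s^(0)[1..m] = 1^m
    ends = []
    for i, ch in enumerate(W, start=1):
        # (13): s^(i)[j] = s^(i-1)[j-1] OR t[W[i], j]; the shift brings in s[0] = 0
        s = ((s << 1) | t.get(ch, full)) & full
        if not (s >> (m - 1)) & 1:             # s^(i)[m] == 0: P ends at position i
            ends.append(i)
    return ends
```

For example, `shift_or_exact("abracadabra", "abra")` returns `[4, 11]`.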

Theorem 2. Suppose an event sequence $W[1..n]$ and a set $P = \{P_1, P_2, \ldots, P_r\}$ of patterns are given, and let $m = |P_1| + |P_2| + \cdots + |P_r|$. Then Procedure 2 can compute all k-approximate occurrences in $W$ of every pattern in $P$ using $\Theta((k\lceil m/w \rceil + m)n)$ time and $\Theta((\sigma + k)\lceil m/w \rceil)$ additional space, where $w$ is the computer word length, $\lceil \cdot \rceil$ denotes the ceiling function, and $\Theta$ denotes an asymptotically tight bound on the average running time.

Proof. If $m \le w$, $s[1..m]$ and $t[h, 1..m]$ each occupy at most one computer word, so the calculation of $s$ can be viewed as a pair of constant-time operations: a one-bit shift followed by a logical OR in a one-word register. More generally, for arbitrary $m$ (i.e., $m > w$), $s[1..m]$ and $t[h, 1..m]$ each occupy $\lceil m/w \rceil$ computer words, and therefore each calculation of $s$ requires $\Theta(\lceil m/w \rceil)$ time. Procedure 2 must execute (7) $(k+1)n$ times in order to locate all k-approximate matches of $P$ in $W$. Each execution requires three right shifts, three AND operations, and one OR operation on each word of each $s_l$, $l = 1, 2, \ldots, k$, hence constant time per word.
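To illustrate the $\Theta(\lceil m/w \rceil)$ cost per update, the sketch below stores a length-$m$ bit vector in $\lceil m/w \rceil$ words and performs the one-bit shift and the OR word by word, carrying the top bit of each word into the next. It is an illustration under assumptions (hypothetical function name; the lowest bit holds $s[1]$, so the shift goes left, whereas with the opposite bit order the same step uses right shifts), not Procedure 2 itself.

```python
def shift_or_step(s: list[int], t_h: list[int], m: int, w: int = 64) -> list[int]:
    """One update s <- (s << 1) | t[h] on an m-bit vector stored in ceil(m/w) words.

    Word 0 holds bits 0..w-1, and bit b holds s[b+1]; the zero shifted into bit 0
    plays the role of s[0] = 0."""
    word_mask = (1 << w) - 1
    out = []
    carry = 0                                    # incoming bit for word 0: s[0] = 0
    for word, tw in zip(s, t_h):                 # one pass over ceil(m/w) words
        out.append((((word << 1) | carry) & word_mask) | tw)
        carry = word >> (w - 1)                  # top bit moves into the next word
    top_bits = m - w * (len(s) - 1)              # valid bits in the last word
    out[-1] &= (1 << top_bits) - 1               # drop the bit shifted past s[m]
    return out
```

With $m = 10$ and $w = 4$, shifting the all-ones vector `[15, 15, 3]` with an all-zero $t$ row gives `[14, 15, 3]`: only $s[1]$ becomes 0, exactly as a single-integer shift would give.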

Observe that the array $t = t[1..\sigma, 1..m]$ can be computed in the pre-matching phase, which initially sets every word to $1^m$ and then resets $t[P[j], j] \leftarrow 0$ for every $j \in 1..m$. Hence the array $t$ can be computed in $\Theta(\sigma\lceil m/w \rceil + m)$ time, which does not count toward the execution time of Procedure 2.
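The pre-matching construction of $t$ can be sketched the same way: every row starts as $1^m$ spread over $\lceil m/w \rceil$ words, and one bit is cleared per pattern position. The alphabet argument, the dict-of-word-lists layout, and the name `build_t` are illustrative assumptions; the alphabet must contain every symbol of $P$.

```python
def build_t(P: str, alphabet: str, w: int = 64) -> dict[str, list[int]]:
    """Rows t[h] as lists of ceil(m/w) words; bit j of the row is 0 iff P[j+1] == h."""
    m = len(P)
    if m == 0:
        raise ValueError("pattern must be non-empty")
    n_words = -(-m // w)                          # ceil(m / w)
    full = [(1 << w) - 1] * n_words
    full[-1] = (1 << (m - w * (n_words - 1))) - 1 # 1^m: keep only the m valid bits
    t = {h: list(full) for h in alphabet}         # Θ(σ⌈m/w⌉) initialisation
    for j, ch in enumerate(P):                    # Θ(m) resets: t[P[j], j] <- 0
        t[ch][j // w] &= ~(1 << (j % w))
    return t
```

For example, `build_t("abca", "abc", 2)` gives `{"a": [2, 1], "b": [1, 3], "c": [3, 2]}`: in row a, bits 0 and 3 (positions 1 and 4 of P) are cleared.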

The additional bit operations required to correct the $s_l^{(i)}$ can be performed in constant time, and since they are all logical operations, they can be performed on whole words. The time complexity of steps (10) to (19) is $\Theta(r \cdot (m/r)) = \Theta(m)$, where $m/r$ denotes the average length of a pattern $P_\mu$. Thus, the time complexity of Procedure 2 is $\Theta((k\lceil m/w \rceil + m)n)$, and the $(k+1)$ bit vectors together with the array $t$ need $\Theta((\sigma + k)\lceil m/w \rceil)$ additional space.
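For the k-approximate case, a standard way to maintain $k+1$ bit vectors is the Wu-Manber recurrence in Shift-Or form, sketched below for a single pattern, with Python integers standing in for multi-word vectors. Each update of $s_l$ with $l \ge 1$ uses three shifts, three ANDs and one OR (plus masking), which agrees with the operation count in the proof above; we nonetheless present it as the textbook technique, not as the authors' Procedure 2, whose steps (10) to (19) are not reproduced here. All names are hypothetical.

```python
def wu_manber_shift_or(W: str, P: str, k: int) -> list[int]:
    """1-based end positions i where P matches a substring of W ending at i
    with at most k insertions, deletions or substitutions (0 bit = active)."""
    m = len(P)
    mask = (1 << m) - 1
    t: dict[str, int] = {}
    for j, ch in enumerate(P):                  # bit j of t[ch] is 0 iff P[j+1] == ch
        t[ch] = t.get(ch, mask) & ~(1 << j)
    # s[l]: bits 0..l-1 start at 0, since P[1..l] is reachable by l deletions.
    s = [mask & ~((1 << l) - 1) for l in range(k + 1)]
    ends = []
    for i, ch in enumerate(W, start=1):
        th = t.get(ch, mask)
        prev_old = s[0]                          # s_0 before this update
        s[0] = ((s[0] << 1) | th) & mask         # exact-matching row, as in (13)
        for l in range(1, k + 1):
            old = s[l]
            s[l] = (((old << 1) | th)            # match
                    & (prev_old << 1)            # substitution
                    & prev_old                   # insertion of a symbol of W
                    & (s[l - 1] << 1)            # deletion of a symbol of P
                    & mask)
            prev_old = old
        if not (s[k] >> (m - 1)) & 1:            # s_k[m] == 0: occurrence ends at i
            ends.append(i)
    return ends
```

For example, `wu_manber_shift_or("abcde", "bcd", 1)` returns `[3, 4, 5]`, and with `k = 0` it reduces to the exact Shift-Or scan and returns `[4]`.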

REFERENCES

[25] E. Ukkonen, “Approximate string-matching with q-grams and maximal matches,” Theoretical Computer Science, vol. 92, no. 1, pp. 191–211, Jan. 6, 1992, doi:10.1016/0304-3975(92)90143-4.

[26] M. Kiwi, G. Navarro, and C. Telha, “On-line approximate string matching with bounded errors,” Theoretical Computer Science, vol. 412, no. 45, pp. 6359–6370, Oct. 21, 2011.