
DISCOVER EXPLOITS AND SOFTWARE VULNERABILITIES USING FUZZING METHODS

Victor VARZĂ¹

Fuzzing is a software testing technique that has gained increasing interest from the security research community. The purpose of fuzzing is to find software errors, bugs and vulnerabilities. The system under test is bombarded with random input data generated by another program, and it is then monitored for any malformation of the results. The principles of fuzzers have not changed over time, but the mechanisms they use have evolved significantly, from dumb fuzzers to modern fuzzers that are able to cover most of the source code of a software application. This paper surveys the concept of fuzzing and presents the state of the art in fuzzing techniques.

Key words: fuzzing, bugs, testing, software vulnerabilities, exploit

¹ Faculty of Automatic Control and Computers, University Politehnica of Bucharest, România, e-mail: victor.varza@cti.pub.ro

VICTOR VARZĂ

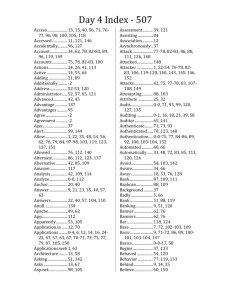

Table of contents

1. Introduction

1.1. Fuzzer anatomy

2. Fuzzer types

2.1. By fuzz generators

2.2. By delivery mechanisms

2.3. By monitoring systems

3. Modern Fuzzers

3.1. SAGE

3.2. Comparison of blackbox and whitebox fuzzing methods

4. Combining whitebox and blackbox fuzzing methods

4.1. Project architecture

4.2. Path predicates collector

4.3. Input data generator

4.4. Delivery mechanism

4.5. Monitoring system

5. Experimental results

6. Conclusion and future work


1. Introduction

Fuzzing is an automated security testing method for discovering software vulnerabilities by providing invalid or random input and monitoring the system under test for exceptions, errors or potential vulnerabilities. Fuzzing was initially used by the black-hat community to find zero-day vulnerabilities. The main idea is to generate test data that is able to crash the target application and to monitor the results. There are also other ways to discover potential software vulnerabilities, such as source code review, static analysis, beta testing or unit testing. Although these alternatives exist, fuzzing has become widely used because it does not require the source code, it is less expensive than manual testing, it does not have the blind spots of human testers, and it offers portability and fewer false positives.

Fuzzers have evolved from simple input data generators that discover flaws during software development into complex systems able to discover bugs and security vulnerabilities. For example, between 2007 and 2010, roughly a third of the Windows 7 bugs found by file fuzzing were discovered by the SAGE fuzzer [4].

This paper presents the current state of the art in automated methods for finding exploits and software vulnerabilities.

The paper is organized as follows: Section 1.1 presents the anatomy of a fuzzer and describes each of its components. Section 2 presents the existing types of fuzzers. Section 3 presents modern fuzzers, detailing the SAGE fuzzer and comparing blackbox and whitebox fuzzing. Section 4 presents a solution that combines whitebox and blackbox fuzzing in a single fuzzer, Section 5 presents the experimental results and, finally, Section 6 presents the open problems, conclusions and future work.

1.1. Fuzzer anatomy

A fuzzer is usually composed of three building blocks: the generator, the delivery mechanism and the monitoring system. Figure 1 presents the interactions between these three components. The generator creates relevant input data that the delivery mechanism sends to the System Under Test (SUT). The monitoring system analyzes the outputs of the SUT and feeds the results back to the generator, improving the testing process.


Figure 1. Fuzzer components: input data generator, delivery mechanism, system under test (SUT) and monitoring system

The fuzz generator creates the inputs used to test the SUT. The generated data should be close to the normal input expected by the SUT. There are several methods for generating test data, such as mutation fuzzing and generative fuzzing, or blackbox, whitebox and graybox fuzzing. Each of these methods is presented in Chapter 2.

The delivery mechanism collects data from the generator and sends it to the SUT. The delivery mechanism differs from case to case, depending on the fuzzer generator and on how the SUT receives its input. For example, delivering input to a SUT that reads a file differs from delivering it to one that is driven by keyboard or mouse interaction.

The monitoring system observes the SUT for errors, crashes or other unexpected results after the input data has been sent by the delivery mechanism. It plays an important role in the fuzzing process because it is responsible for detecting malformations of the SUT results.
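The interaction of the three components can be sketched in a few lines of C. This is a minimal illustration under stated assumptions, not a real fuzzer: the SUT (`toy_sut`), the exhaustive single-byte mutation used as the generator and all function names are hypothetical, delivery is a plain in-process call, and the monitor's feedback is reduced to stopping at the first failure.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical SUT: "crashes" (returns -1) when the input starts with "BUG". */
static int toy_sut(const uint8_t *in, size_t len) {
    return (len >= 3 && memcmp(in, "BUG", 3) == 0) ? -1 : 0;
}

/* Generator: writes the k-th single-byte mutation of the seed into out
 * (byte k / 256 is overwritten with the value k % 256).
 * Returns 0 once all len * 256 mutations have been produced. */
static int generate(const uint8_t *seed, size_t len, size_t k, uint8_t *out) {
    if (k >= len * 256)
        return 0;
    memcpy(out, seed, len);
    out[k / 256] = (uint8_t)(k % 256);
    return 1;
}

/* Delivery mechanism: an in-process call here; a real fuzzer would write a
 * file, send a packet or inject an event instead. */
static int deliver(const uint8_t *in, size_t len) { return toy_sut(in, len); }

/* Monitoring system: any non-zero status from the SUT counts as a failure. */
static int monitor(int status) { return status != 0; }

/* Generate -> deliver -> monitor loop. Copies the first failing input into
 * crash and returns how many inputs were tried, or 0 if none failed. */
size_t fuzz_loop(const uint8_t *seed, size_t len, uint8_t *crash) {
    uint8_t buf[64];
    if (len == 0 || len > sizeof buf)
        return 0;
    for (size_t k = 0; generate(seed, len, k, buf); k++) {
        if (monitor(deliver(buf, len))) {
            memcpy(crash, buf, len);
            return k + 1;
        }
    }
    return 0;
}
```

Starting from the seed "BUX", the loop reaches the failing input "BUG" after 584 attempts (byte 2 set to 'G'); a seed such as "ABC" never fails, because no single-byte change turns it into "BUG".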

2. Fuzzer types

Fuzzers can be classified by the type of their components or by their knowledge of the system being tested. For example, by the characteristics of its data generator, a fuzzer can be mutative or generative. All fuzzer types are described below.

2.1. By fuzz generators

Depending on how input data is generated, fuzzers can be mutative or generative. Mutative fuzzers generate data sets by mutating existing input data, while generative fuzzers create new data sets from their own resources.

Mutative fuzzers, also known as ‘dumb’ fuzzers, take an existing data set and change it, by corrupting or mutating it, in order to obtain new input sets. Mutators are less efficient than generators, and they can be used when the SUT accepts only a particular input structure. Figure 2 shows an example of mutated GIF image data.


Figure 2. Original Gif vs Mutated Gif [2]
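A mutative generator for a sample like the GIF in Figure 2 can be sketched as follows. The function name `mutate_gif`, the xorshift32 PRNG and the choice to leave the 6-byte GIF signature untouched (so the file is still recognised as a GIF and the corruption reaches deeper parsing code) are assumptions of this sketch; real mutators combine many more strategies.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* xorshift32: small deterministic PRNG, so (seed, count) reproduces a mutant. */
static uint32_t xorshift32(uint32_t *s) {
    uint32_t x = *s;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *s = x;
}

/* Overwrites `count` randomly chosen bytes of a GIF sample with random values,
 * keeping the 6-byte signature ("GIF87a" or "GIF89a") intact.
 * Returns 0 if the buffer is too short or does not look like a GIF. */
int mutate_gif(uint8_t *gif, size_t len, unsigned count, uint32_t seed) {
    uint32_t s = seed ? seed : 1u;      /* xorshift state must be non-zero */
    if (len <= 6 || memcmp(gif, "GIF8", 4) != 0)
        return 0;
    for (unsigned i = 0; i < count; i++) {
        size_t pos = 6 + xorshift32(&s) % (len - 6);   /* skip the signature */
        gif[pos] = (uint8_t)xorshift32(&s);
    }
    return 1;
}
```

Because the PRNG is seeded explicitly, a crashing mutant can be regenerated later from its seed instead of being stored.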

Generative fuzzers create new data sets from their own resources. They are more powerful than mutative fuzzers, especially in complex environments where network protocols such as FTP, TFTP or DNS are being tested. When fuzzing FTP, for example, a mutative fuzzer captures network packets and generates a data set based on this captured data, which causes problems if the relevant FTP protocol data is not present in the captured traffic. Generative fuzzers, on the other hand, are more ‘intelligent’: in order to generate valid input data, they need to understand the other protocols used in the communication, such as DNS or SMB and, at a lower level, TCP, keeping track of the TCP connection state and sequence numbers.
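A generative fuzzer builds test cases from a model of the input instead of from captured samples. The sketch below uses a hypothetical mini-grammar of FTP control commands: a verb (USER, PASS, CWD and RETR are real RFC 959 commands) followed by one argument chosen from a few well-formed and hostile variants. The function `ftp_testcase` and the argument set are illustrative only; a real generative fuzzer would also model the session state discussed above.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical mini-grammar: <verb> SP <argument> CRLF. */
static const char *const VERBS[] = {"USER", "PASS", "CWD", "RETR"};
static const char *const ARGS[] = {
    "anonymous",               /* well-formed value */
    "%n%n%n%n",                /* format-string probe */
    "../../../../etc/passwd",  /* path traversal probe */
};
enum { N_VERBS = 4, N_ARGS = 4, LONG_ARG = 256 };

/* Writes argument variant v into arg; variant 3 is an overlong field. */
static void make_arg(int v, char *arg, size_t cap) {
    if (v < 3) {
        snprintf(arg, cap, "%s", ARGS[v]);
    } else {
        size_t n = cap - 1 < (size_t)LONG_ARG ? cap - 1 : (size_t)LONG_ARG;
        memset(arg, 'A', n);
        arg[n] = '\0';
    }
}

/* Builds test case i (0 <= i < N_VERBS * N_ARGS) into out. Returns the
 * length of the generated line, or -1 if i is out of range. */
int ftp_testcase(int i, char *out, size_t cap) {
    char arg[LONG_ARG + 1];
    if (i < 0 || i >= N_VERBS * N_ARGS)
        return -1;
    make_arg(i / N_VERBS, arg, sizeof arg);
    return snprintf(out, cap, "%s %s\r\n", VERBS[i % N_VERBS], arg);
}
```

Enumerating i from 0 to 15 yields every verb/argument combination, for example "USER anonymous\r\n" for i = 0 and a 256-byte RETR argument for i = 15.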

Another criterion is the knowledge the fuzzer has of the SUT. By this criterion, there are blackbox, whitebox and graybox fuzzers.

Blackbox fuzzing was the first technique adopted by the black-hat community to discover software vulnerabilities. Input data is generated randomly by modifying correct input, and no knowledge of the application implementation is required. Fuzzers of this type have a code coverage problem: because they have no details about the process under test, they cannot tell when they keep exercising the same portion of code.

Whitebox fuzzers generate input data with complete knowledge of the application implementation details. They are usually assisted by techniques such as symbolic execution or taint analysis to choose the next action in the testing process.

Graybox fuzzers fall between blackbox and whitebox fuzzers and use minimal knowledge of the application implementation details.

2.2. By delivery mechanisms

The delivery mechanism is responsible for collecting data from the generator and presenting it to the SUT. Depending on the data sent to the SUT, delivery mechanisms may use files, operating system events, environment variables, network transmissions or operating system resources.

File-based fuzzers have the advantage that all data is encapsulated and formatted in a single entity. Fuzzers based on network transmission, such as ProxyFuzz, are quite easy to configure: they act as a proxy between the data source and the SUT and simply modify the packets passing through the proxy. This type of fuzzing is more complicated than file-based fuzzing because a session is defined by multiple parameters, some of which may depend on previous events. This requires a system that monitors the responses of the SUT and changes the delivered data accordingly (see Figure 1).
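The feedback needed for stateful network fuzzing can be sketched as a function that looks at the SUT's last reply and decides what to deliver next. This assumes a hypothetical FTP target and uses RFC 959 reply codes (220 service ready, 331 user name okay, need password, 230 user logged in); the credentials and the name `next_message` are placeholders.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Chooses the next message for a hypothetical FTP session from the SUT's
 * last reply. The fuzzed command is delivered only once the login sequence
 * has succeeded. Returns the message length, or -1 when the reply breaks
 * the expected sequence and the session must be restarted. */
int next_message(const char *reply, const char *fuzz_cmd, char *out, size_t cap) {
    switch (atoi(reply)) {       /* each reply starts with a 3-digit code */
    case 220: return snprintf(out, cap, "USER anonymous\r\n");
    case 331: return snprintf(out, cap, "PASS guest\r\n");
    case 230: return snprintf(out, cap, "%s\r\n", fuzz_cmd);
    default:  return -1;
    }
}
```

A fuzzing session would loop: read the reply from the socket, call `next_message`, send the result, and reconnect whenever the function returns -1.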

2.3. By monitoring systems

The monitoring system is a critical component of a fuzzer because it reports errors, bugs and malformations of the SUT results. It interacts with the fuzzer generator to optimize the input data sets that may crash the SUT. Depending on where it is placed, there are two types of monitoring systems: local and remote.

A local monitoring system is used when the SUT runs on the same system as the fuzzer monitor component. It is more complex and provides a run-time environment that improves the detection of errors and vulnerabilities in the SUT. Microsoft Application Verifier is a relevant example: it monitors the interactions of the SUT with the Windows API to detect programming errors that are normally difficult to identify, including errors caused by heap corruption and by incorrect handle or critical section usage.
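Application Verifier is Windows-specific. As a rough POSIX analogue of the simplest local monitor, the sketch below runs the SUT in a child process and inspects how it terminated; a signal such as SIGSEGV or SIGABRT is reported as a crash. `run_monitored` and `toy_sut` are hypothetical names, and this monitor detects only crashes and error exits, not the heap or handle misuse that Application Verifier reports.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

enum outcome { RUN_OK, RUN_ERROR, RUN_CRASH, RUN_MONITOR_FAILURE };

/* Hypothetical SUT: aborts on inputs starting with 'X' and reports an error
 * (non-zero return) on inputs starting with 'E'. */
static int toy_sut(const char *input) {
    if (input[0] == 'X')
        abort();                          /* simulated memory-safety crash */
    return input[0] == 'E';
}

/* Runs sut(input) in a child process and classifies its termination.
 * When the child is killed by a signal, the signal number goes into *sig. */
enum outcome run_monitored(int (*sut)(const char *), const char *input, int *sig) {
    int status;
    pid_t pid = fork();
    if (pid < 0)
        return RUN_MONITOR_FAILURE;
    if (pid == 0)
        _exit(sut(input) == 0 ? 0 : 1);   /* child: run the SUT and report */
    while (waitpid(pid, &status, 0) < 0)
        if (errno != EINTR)
            return RUN_MONITOR_FAILURE;
    if (WIFSIGNALED(status)) {
        if (sig)
            *sig = WTERMSIG(status);
        return RUN_CRASH;
    }
    return (WIFEXITED(status) && WEXITSTATUS(status) == 0) ? RUN_OK : RUN_ERROR;
}
```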

Remote monitoring systems are used when only the SUT input and output data can be observed. In this case, the SUT and the monitoring system can run on independent systems. Remote monitoring is used especially in network protocol testing, where errors are signaled by TCP reset packets or by timeouts in TCP and UDP communications. As network complexity has increased over time, more advanced remote monitoring systems have appeared. For example, to monitor a web application that runs on a specific web server (e.g. Apache, Tomcat, IIS), the output of the web server has to be monitored in order to examine the web application.
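A remote monitor sees only the traffic, so its main signals are the reply itself, a closed or reset connection, and a timeout. A minimal sketch of that classification on a connected descriptor uses POSIX `poll()` and `read()`; the verdict names and the function `await_reply` are assumptions, and a TCP reset is recognised here by `read()` failing with `ECONNRESET`.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <poll.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Verdicts of a hypothetical remote monitor that only sees the SUT's replies. */
enum verdict { VERDICT_REPLY, VERDICT_CLOSED, VERDICT_RESET, VERDICT_TIMEOUT, VERDICT_IO_ERROR };

/* Waits at most timeout_ms for the SUT to answer on fd and classifies the
 * result. On VERDICT_REPLY, up to cap bytes are stored in buf and *got is set. */
enum verdict await_reply(int fd, int timeout_ms, char *buf, size_t cap, ssize_t *got) {
    struct pollfd p = { .fd = fd, .events = POLLIN };
    int r;
    do {
        r = poll(&p, 1, timeout_ms);     /* restarts the full wait on EINTR */
    } while (r < 0 && errno == EINTR);
    if (r == 0)
        return VERDICT_TIMEOUT;          /* SUT hung or dropped the request */
    if (r < 0)
        return VERDICT_IO_ERROR;
    ssize_t n = read(fd, buf, cap);
    if (n > 0) {
        if (got)
            *got = n;
        return VERDICT_REPLY;
    }
    if (n == 0)
        return VERDICT_CLOSED;           /* orderly shutdown by the peer */
    return errno == ECONNRESET ? VERDICT_RESET : VERDICT_IO_ERROR;
}
```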

3. Modern Fuzzers

Classical fuzzers are based on the blackbox technique, in which the fuzzer has no access to the internal state and structures of the SUT. Modern fuzzers, on the other hand, can look inside the box, have access to the SUT internals and may use monitoring tools that feed information back to the input generator. The limitation of blackbox fuzzing can be shown with the following source code:

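#include <stdio.h>

static void do_something(void) { puts("branch taken"); } // stand-in for the guarded code

int test(int x) { // x is an input
    int y = x + 7;
    if (y == 11) do_something(); // reached only when x == 4
    return 0;
}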

In the above example, if the variable x is chosen at random among the 2^32 values of a 32-bit integer, the chance of executing do_something() is about 1 in 2^32 (roughly 1 in 4.3 billion), because only x = 4 satisfies the condition. This explains why blackbox fuzzing has low code coverage and can miss some security bugs.
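The estimate can be checked empirically. The sketch below fuzzes the branch of the example with random 32-bit inputs, generated by a xorshift32 PRNG (an assumption of this sketch), and counts how many reach `do_something()`. With one million inputs the expected number of hits is 10^6 / 2^32, about 0.0002, so the branch is almost never exercised.

```c
#include <stdint.h>

/* The example's branch condition, computed in 64 bits to avoid overflow. */
static int reaches_branch(int32_t x) { return (int64_t)x + 7 == 11; }

/* xorshift32 visits every non-zero 32-bit value once per period of
 * 2^32 - 1 outputs, so no value repeats within one million draws. */
static uint32_t xorshift32(uint32_t *s) {
    uint32_t x = *s;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *s = x;
}

/* Blackbox fuzzing of the branch: counts how many of n random inputs hit it. */
unsigned long random_hits(unsigned long n, uint32_t seed) {
    uint32_t s = seed ? seed : 1u;       /* xorshift state must be non-zero */
    unsigned long hits = 0;
    for (unsigned long i = 0; i < n; i++)
        hits += (unsigned long)reaches_branch((int32_t)xorshift32(&s));
    return hits;
}
```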

A modern fuzzer may not have access to the source code of the SUT, but it can use other techniques to build the SUT execution graph, such as reverse engineering, debugging, disassembly or static structural analysis. To generate input data, the fuzzer can use mutative, generative or hybrid (a combination of the two) methods. The delivery system is similar to that of classical fuzzers, but it may additionally use memory-based delivery. The advantage of memory-based delivery is that the fuzzer can modify the memory content of the SUT or bypass functions of the system input interface (for example, checksum verification) and send data directly to a function that is normally protected by the checksum verification step.

Starting from a well-formed input, whitebox fuzzing can symbolically execute the SUT, collecting constraints on the input from the conditional branches encountered along the execution. Symbolic execution means that abstract symbols are tracked instead of actual values, producing symbolic formulas over the input data [5]. The collected constraints are negated one at a time, and a constraint solver is used to find input assignments that satisfy the resulting path constraints; these new inputs exercise different program execution paths. For example, in the previous source code, if x is initially 0, the program takes the implicit else branch and generates the path constraint x + 7 != 11. The constraint is then negated and solved, giving x = 4, which makes the program take the then branch of the if statement. In this way all the code of the example is covered, and new errors or bugs can be found.
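The negate-and-solve step of the example can be sketched in C. Here the recorded branch condition is restricted to the linear form a*x + c == k, and the "solver" is direct algebra on that single equation; a real whitebox fuzzer would pass the negated path constraint to an SMT solver instead. All names are hypothetical.

```c
#include <stdint.h>

/* One branch condition a*x + c == k recorded during a concrete run, with the
 * outcome actually observed (taken = 1 if it held). Assumed linear form. */
struct branch_constraint { int64_t a, c, k; int taken; };

/* Concrete execution of the example (y = x + 7; if (y == 11) ...) that also
 * records the symbolic condition of its single branch. */
static struct branch_constraint run_and_record(int64_t x) {
    struct branch_constraint pc = { 1, 7, 11, 0 };
    pc.taken = (pc.a * x + pc.c == pc.k);
    return pc;
}

/* Negates the recorded branch and solves for a new input x. If the branch
 * was not taken, solve a*x + c == k; if it was taken, any x with
 * a*x + c != k works, e.g. cur + 1. Returns 0 when no integer solution exists. */
static int solve_negated(struct branch_constraint pc, int64_t cur, int64_t *x) {
    if (pc.a == 0)
        return 0;                        /* condition does not depend on x */
    if (pc.taken) {
        *x = cur + 1;                    /* a*(cur+1) + c = k + a != k */
        return 1;
    }
    if ((pc.k - pc.c) % pc.a != 0)
        return 0;
    *x = (pc.k - pc.c) / pc.a;
    return 1;
}
```

Running from x = 0 records the unsatisfied condition x + 7 == 11; solving its negation gives x = 4, and re-running with 4 takes the then branch, exactly as described above.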

Although testing with symbolic methods can in theory achieve full code coverage, in practice this is not possible because of the large number of execution paths (which can grow exponentially with the number of branches) and because symbolic execution, constraint generation and constraint solving run into complex program features such as pointer manipulation, external variables or system calls.

3.1. SAGE

Scalable, Automated, Guided Execution (SAGE) was the first implementation of the whitebox fuzzing technique, and it can be used to test large applications. SAGE has had a noteworthy impact at Microsoft, where between 2007 and 2010 roughly a third of the Windows 7 bugs found by file fuzzing were discovered using this tool [4].

SAGE was built on top of other Microsoft tools, such as the iDNA trace recording framework, the TruScan trace analysis engine and the Disolver constraint solver. It implements dynamic symbolic execution at the x86 binary level. TruScan simplifies symbolic execution by decoding instructions, offering an interface to program symbol information and monitoring system I/O calls.

SAGE includes several optimizations that improve the speed and memory usage of constraint generation: symbolic-expression caching (equivalent symbolic terms are mapped to the same physical object), unrelated constraint elimination (constraints that do not share symbolic variables with the negated constraint are removed, which reduces the size of constraint solver queries), local constraint caching (a constraint is not added to the path constraint if it has already been added) and a flip count limit (the maximum number of times a constraint generated by a given program branch can be flipped).
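Unrelated constraint elimination can be sketched by summarising each constraint with the set of input bytes it reads, here a 64-bit mask (a simplifying assumption). Starting from the negated constraint, the sketch keeps every constraint that shares input bytes with it, following shared bytes transitively so that no dropped constraint restricts a byte the solver is allowed to change; everything else is left out of the solver query. The function name is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* vars[i] is the set of input bytes read by path constraint i, as a bitmask
 * over the first 64 input bytes. Marks in keep[] the constraints related to
 * the negated constraint `neg`, directly or through a chain of shared bytes,
 * and returns how many were kept. */
size_t related_constraints(const uint64_t *vars, size_t n, size_t neg, int *keep) {
    uint64_t reach = vars[neg];          /* bytes the solver may change */
    int changed = 1;
    for (size_t i = 0; i < n; i++)
        keep[i] = (i == neg);
    while (changed) {                    /* grow the set to a fixed point */
        changed = 0;
        for (size_t i = 0; i < n; i++) {
            if (!keep[i] && (vars[i] & reach)) {
                keep[i] = 1;
                reach |= vars[i];
                changed = 1;
            }
        }
    }
    size_t kept = 0;
    for (size_t i = 0; i < n; i++)
        kept += (size_t)keep[i];
    return kept;
}
```

For masks {0x1, 0x6, 0x3, 0x10} and negated constraint 0, constraint 2 is kept because it shares byte 0, constraint 1 is then kept through byte 1, and constraint 3 (byte 4 only) is dropped.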

The architecture of SAGE is presented in Figure 3. First, SAGE runs the SUT on the initial input, Input0, under AppVerifier, to check whether this input causes an error in the SUT.


Figure 3. SAGE architecture [4]

If this first step does not reveal malformations in the SUT results, SAGE records the list of unique program instructions executed during this run. SAGE then executes the SUT symbolically on the same input and generates the path constraint that characterizes the current program execution. Next, the constraints in the path constraint are negated one by one and solved by a constraint solver (the Z3 SMT solver). The satisfiable constraints are mapped to N new inputs, which are then tested and ranked according to the instruction coverage they achieve.

For example, if Input2 discovers 20 new instructions, it gets a rank of 20. Next, the new input with the highest rank is used in the symbolic execution task and the cycle is repeated. Note that all SAGE tasks are executed in parallel on a multicore architecture.
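The ranking step can be sketched with coverage bitmaps: each child input's rank is the number of instructions it executes that no earlier run has covered, and the highest-ranked child is expanded next. The 256-instruction bitmap and the function names are assumptions of this sketch, not SAGE's data structures.

```c
#include <stddef.h>
#include <stdint.h>

/* Coverage of one run as a bitmap over 256 instrumented instructions
 * (bit i set = instruction i executed). The size is an arbitrary choice. */
enum { COV_WORDS = 4 };
typedef struct { uint64_t w[COV_WORDS]; } coverage;

static int popcount64(uint64_t v) {
    int c = 0;
    while (v) {
        v &= v - 1;                      /* clear the lowest set bit */
        c++;
    }
    return c;
}

/* Rank = number of instructions covered by `run` that are not yet in `seen`. */
int coverage_rank(const coverage *run, const coverage *seen) {
    int r = 0;
    for (int i = 0; i < COV_WORDS; i++)
        r += popcount64(run->w[i] & ~seen->w[i]);
    return r;
}

/* Returns the index of the child input with the highest rank (the one to
 * expand next), or -1 if no child reaches new instructions. */
int best_child(const coverage *kids, size_t n, const coverage *seen, int *rank) {
    int best = -1, best_rank = 0;
    for (size_t i = 0; i < n; i++) {
        int r = coverage_rank(&kids[i], seen);
        if (r > best_rank) {
            best = (int)i;
            best_rank = r;
        }
    }
    if (rank)
        *rank = best_rank;
    return best;
}
```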

3.2. Comparison of blackbox and whitebox fuzzing methods

To compare blackbox and whitebox fuzzing, two tools were used: Zzuf and Catchconv. The tests were carried out by the Team for Research in Ubiquitous Secure Technology (TRUST) [9]. Zzuf is a blackbox fuzzer that finds vulnerabilities in applications by modifying random bits of valid data. To create test cases, it uses a fuzzing ratio (also called the corruption ratio) and a range of seeds. Catchconv, on the other hand, starts from valid input data, knows the execution details of the SUT and collects path conditions to create test cases.
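The idea of a corruption ratio combined with a seed can be illustrated with a sketch that flips each bit of a valid input independently with probability `ratio`, using a PRNG initialised from `seed`, so the same pair always reproduces the same test case. This illustrates the concept only and is not Zzuf's actual algorithm; `flip_bits` and the xorshift32 PRNG are assumptions.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

static uint32_t xorshift32(uint32_t *s) {
    uint32_t x = *s;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *s = x;
}

/* Flips each bit of buf independently with probability ratio (0.0 to 1.0).
 * The PRNG is initialised from seed, so (seed, ratio) reproduces a test case.
 * Returns the number of bits flipped. */
size_t flip_bits(uint8_t *buf, size_t len, double ratio, uint32_t seed) {
    uint32_t s = seed ? seed : 1u;             /* xorshift state must be non-zero */
    double threshold = ratio * 4294967296.0;   /* ratio scaled to the 2^32 range */
    size_t flipped = 0;
    for (size_t i = 0; i < len; i++) {
        for (int b = 0; b < 8; b++) {
            if (xorshift32(&s) < threshold) {
                buf[i] ^= (uint8_t)(1u << b);
                flipped++;
            }
        }
    }
    return flipped;
}
```

Sweeping a range of seeds at a fixed ratio produces a reproducible family of test cases from one valid file.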

To observe the behavior of the SUT, a specialized tool was used: Valgrind. This tool detects memory management errors and reports the errors that occur in the program, so it finds more errors than segmentation faults alone would reveal. It can report errors such as invalid writes, invalid reads, uses of uninitialized values and memory leaks. The methodology of the fuzzing test is presented in Figure 4.


Figure 4. Methodology of the fuzzing test [9]
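Counting error types as in the comparison below requires mapping Valgrind reports to categories. A minimal sketch, assuming the log has already been split into lines, matches substrings of the messages printed by Valgrind's Memcheck tool (for example "Invalid write of size 4" or "Conditional jump or move depends on uninitialised value(s)"). The category names and functions are hypothetical, and lines that belong to a stack trace fall into `VG_NONE`.

```c
#include <stddef.h>
#include <string.h>

/* Error classes used in the comparison of the two fuzzers. */
enum vg_error { VG_NONE, VG_INVALID_WRITE, VG_INVALID_READ, VG_UNINIT, VG_LEAK, VG_CLASSES };

/* Maps one line of Memcheck output to an error class by substring match. */
enum vg_error classify_memcheck_line(const char *line) {
    if (strstr(line, "Invalid write of size"))
        return VG_INVALID_WRITE;
    if (strstr(line, "Invalid read of size"))
        return VG_INVALID_READ;
    if (strstr(line, "uninitialised value"))
        return VG_UNINIT;
    if (strstr(line, "definitely lost"))
        return VG_LEAK;
    return VG_NONE;
}

/* Tallies error classes over a log held in memory, one line per entry. */
void tally_memcheck(const char *const *lines, size_t n, unsigned counts[VG_CLASSES]) {
    for (int i = 0; i < VG_CLASSES; i++)
        counts[i] = 0;
    for (size_t i = 0; i < n; i++)
        counts[classify_memcheck_line(lines[i])]++;
}
```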

The target applications used in the tests were MPlayer, ImageMagick Convert, Adobe Flash Player and Antiword. More than 1.2 million test cases were generated. The tests ran on the UC Berkeley PSI cluster, which consisted of 81 machines with 270 processors. In total, 89 unique bugs were reported.

To compare fuzzer performance, the following metrics were used: number of test cases, number of unique test cases, total bugs found and total unique bugs found. Zzuf generated 962,402 test cases and found 1,066,000 errors (456 unique), while Catchconv generated 279,953 test cases and found 304,936 errors (157 unique).
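How [9] decided which errors were unique is not described here. A common approach, shown in this sketch under that assumption, is to bucket reports by error kind plus the top frames of the stack trace, hashing the key with 64-bit FNV-1a; hash collisions are ignored, and the struct and function names are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* One error report: its kind and the top frames of its stack trace. */
struct error_report { const char *kind; const char *frames[3]; };

/* FNV-1a over a NUL-terminated string, continuing from hash h. */
static uint64_t fnv1a(uint64_t h, const char *s) {
    for (; *s; s++) {
        h ^= (unsigned char)*s;
        h *= 1099511628211ULL;           /* 64-bit FNV prime */
    }
    return h;
}

/* Bucket key: error kind plus the top three frames (NULL frames skipped). */
static uint64_t report_key(const struct error_report *r) {
    uint64_t h = fnv1a(14695981039346656037ULL, r->kind);   /* FNV offset basis */
    for (int i = 0; i < 3; i++)
        if (r->frames[i])
            h = fnv1a(fnv1a(h, "|"), r->frames[i]);
    return h;
}

/* Counts distinct buckets among n reports (quadratic scan, fine for a sketch). */
size_t count_unique(const struct error_report *reps, size_t n) {
    size_t unique = 0;
    for (size_t i = 0; i < n; i++) {
        uint64_t k = report_key(&reps[i]);
        int seen = 0;
        for (size_t j = 0; j < i && !seen; j++)
            seen = (report_key(&reps[j]) == k);
        unique += !seen;
    }
    return unique;
}
```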

To differentiate between the two fuzzers, their performance was first compared by the average number of unique errors found per 100 test cases: Zzuf had a coefficient of 2.69 and Catchconv (CC) 2.63 (Figure 5).

Figure 5. Unique bugs/100 bugs [9]

Next, the fuzzers were compared by the percentage of unique errors among the total errors found: Zzuf had 0.5% and CC 22% (Figure 6).


Figure 6. Unique bugs as % of total errors [9]

Finally, both fuzzers were analyzed by the types of errors they found. Zzuf was better at finding invalid writes, the most important bug type among those reported.

Figure 7. Type of errors for each fuzzer [9]

From these tests, the following conclusions can be drawn: with a lower budget and enough time, blackbox fuzzing is the solution. Once the ‘easy’ bugs have been found, a smarter fuzzer should be used, and a whitebox fuzzer is a good choice in this situation. In practice, however, using both methods is recommended.


4. Combining whitebox and blackbox fuzzing methods

Whitebox and blackbox fuzzing are two fuzzing techniques that differ in how input data is generated. Whitebox fuzzers use symbolic execution and constraint solving techniques with complete knowledge of the SUT (for the tools used in this chapter, the fuzzer must have access to the source code of the SUT), while blackbox fuzzers generate input data randomly by modifying correct input and do not require knowledge of the SUT implementation. From chapter 3 we concluded that, to get the best performance in terms of bugs and vulnerabilities found, both methods have to be used. But what happens if both methods are combined in a single fuzzer? This chapter presents a solution that combines whitebox and blackbox fuzzing in a single fuzzer, using open-source tools such as KLEE, STP, the Parma Polyhedra Library (PPL) and Zzuf.

4.1. Project architecture

Figure 8. Architecture overview. Components: path predicates collector (symbolic execution), input data generator, delivery mechanism, monitoring system and system under test; tools shown: KLEE, STP, PPL and Zzuf.


4.2. Path predicates collector

Symbolic execution


4.3. Input data generator

Generate input data

After generating the path predicates through symbolic execution, we obtain constraints for our system. These constraints are solved in two ways: with a constraint solver and with a numerical abstraction method based on convex polyhedra. For the first method we used STP and for the second we used the Parma Polyhedra Library (PPL). The difference between these two methods is that STP gives one solution for a given constraint, while the second method creates an input space from which input data can be chosen at random. Another difference is that STP can solve complex constraints, while PPL can only handle linear constraints over first-order symbolic variables. For example, a_symb + b_symb < c is a linear formula, whereas a_symb * b_symb < c is not.

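Convex polyhedra

A convex polyhedron is the set of solutions of a system of m linear inequalities in the variables $x_1, \ldots, x_n$:

$$
\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &\le b_1\\
&\vdots\\
a_{m1}x_1 + a_{m2}x_2 + \cdots + a_{mn}x_n &\le b_m
\end{aligned}
\tag{1}
$$

or, in matrix form, $P = \{x \in \mathbb{R}^n : Ax \le b\}$. The result is the intersection of m half-spaces with normal vectors $a_i = (a_{i1}, a_{i2}, \ldots, a_{in})$, $a_i \neq 0$.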

Figure 9. Convex polyhedron bounded by five half-spaces with normal vectors a1, ..., a5
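Choosing inputs at random from the polyhedron of Equation 1 can be sketched without PPL: test membership by checking every inequality, and draw candidate points from a bounding box until one satisfies all of them (rejection sampling). This plain-C sketch does not use the PPL API; the integer coefficients, the box [lo, hi] and the function names are assumptions, and the modulo-based draw is only approximately uniform.

```c
#include <stddef.h>
#include <stdint.h>

static uint32_t xorshift32(uint32_t *s) {
    uint32_t x = *s;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return *s = x;
}

/* Returns 1 if x satisfies every row of A x <= b (A is m x n, row-major). */
int in_polyhedron(const long *A, const long *b, size_t m, size_t n, const long *x) {
    for (size_t i = 0; i < m; i++) {
        long lhs = 0;
        for (size_t j = 0; j < n; j++)
            lhs += A[i * n + j] * x[j];
        if (lhs > b[i])
            return 0;                    /* outside the half-space of row i */
    }
    return 1;
}

/* Rejection sampling: draws integer points from the box [lo, hi]^n until one
 * lies inside the polyhedron, giving up after max_tries. Returns 1 on success
 * with the point stored in x. */
int sample_polyhedron(const long *A, const long *b, size_t m, size_t n,
                      long lo, long hi, uint32_t seed, int max_tries, long *x) {
    uint32_t s = seed ? seed : 1u;       /* xorshift state must be non-zero */
    if (hi < lo)
        return 0;
    uint32_t width = (uint32_t)(hi - lo + 1);
    for (int t = 0; t < max_tries; t++) {
        for (size_t j = 0; j < n; j++)
            x[j] = lo + (long)(xorshift32(&s) % width);
        if (in_polyhedron(A, b, m, n, x))
            return 1;
    }
    return 0;
}
```

For example, the triangle x ≥ 0, y ≥ 0, x + y ≤ 10 is encoded as the rows (-1, 0) ≤ 0, (0, -1) ≤ 0 and (1, 1) ≤ 10, and every sampled point is a valid input for the constraints it represents.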


4.4. Delivery mechanism

4.5. Monitoring system


5. Experimental results


6. Conclusion and future work


BIBLIOGRAPHY

[1] Richard McNally, Ken Yiu, Duncan Grove and Damien Gerhardy, “Fuzzing: The State of the Art”, Command, Control, Communications and Intelligence Division, Defence Science and Technology Organisation, Australia, February 2012, http://www.dtic.mil/cgi-bin/GetTRDoc?AD=ADA558209

[2] Brian S. Pak, “Hybrid Fuzz Testing: Discovering Software Bugs via Fuzzing and Symbolic Execution”, Master thesis, School of Computer Science, Carnegie Mellon University, Pittsburgh, May 2012

[3] Sofia Bekrar, Chaouki Bekrar, Roland Groz and Laurent Mounier, “Finding Software Vulnerabilities by Smart Fuzzing”, 2011 IEEE Fourth International Conference on Software Testing, Verification and Validation (ICST), March 2011, pages 427-430

[4] Patrice Godefroid, Michael Y. Levin and David Molnar, “SAGE: Whitebox Fuzzing for Security Testing”, Communications of the ACM, vol. 55, no. 3, March 2012

[5] James C. King, “Symbolic execution and program testing”, Communications of the ACM, vol. 19, no. 7, July 1976, pages 385-394

[6] P. Tsankov, M. T. Dashti and D. Basin, “SECFUZZ: Fuzz-testing security protocols”, 2012 7th International Workshop on Automation of Software Test (AST), June 2012, pages 1-7

[7] HyoungChun Kim, YoungHan Choi, DoHoon Lee and Donghoon Lee, “Advanced Communication Technology, 2008. ICACT 2008. 10th International Conference”, February 2008, pages 1304-1307

[8] Adrian Furtuna, “Proactive cyber security by red teaming”, PhD thesis, Military Technical Academy, 2011

[9] Marjan Aslani, Nga Chung, Jason Doherty, Nichole Stockman and William Quach, “Comparison of Blackbox and Whitebox Fuzzers in Finding Software Bugs”, talk or presentation, Summer Undergraduate Program in Engineering Research at Berkeley, 2008, www.truststc.org/pubs/493.html