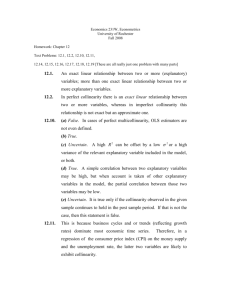

Gujarati Chapter 6-- Statistical Inference and Hypothesis Testing

UNC-Wilmington
Department of Economics and Finance
ECN 377
Dr. Chris Dumas

Regression Analysis--The Multicollinearity Problem
(When X Variables are Linearly Correlated with Other X Variables)

Multicollinearity exists among the X variables in your regression equation when two (or more) of your X variables are LINEARLY related with one another. Recall that one of the assumptions of the OLS method is that the X variables in a regression equation are NOT linearly correlated with one another, so multicollinearity is a violation of one of the assumptions of the OLS method. (Strictly, the OLS math requires only that no X variable be an exact linear function of the other X variables; weaker linear relationships leave OLS usable but cause the practical problems described below.) When this assumption is violated, serious consequences can occur for regression analysis.

Now, in theory, multicollinearity should never arise, because we are supposed to choose X variables for our model that are not linearly related with one another. But, in practice, multicollinearity occurs to some extent almost all the time. How do we determine whether X variables are linearly related and, if they are, what can be done about it?

Perfect Multicollinearity

In rare situations, some of the X variables in the regression equation can be perfectly linearly correlated with each other; that is, if you graph one X variable against another, the dots lie exactly along a straight line. In the case of perfect correlation, the math behind the OLS method fails: no unique set of 𝛽̂'s can be calculated. In this case, you will definitely need to try one of the “Remedies” for multicollinearity described below.

Perfect Multicollinearity is rare, except in situations where the researcher creates some new X variables based on other X variables in the dataset. If a linear relationship is used to create the new X variables from the other X variables, then Perfect Multicollinearity can result. This commonly occurs when a new X variable is created by adding a constant to an existing X variable or multiplying an existing X variable by a constant.

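To make the failure concrete, here is a minimal sketch in Python with NumPy (not the SAS used elsewhere in this handout). It checks the rank of the design matrix when a new X variable is built from an existing one. The data values and the names `x` and `design_rank` are hypothetical, chosen only for illustration. A design matrix with three columns needs rank 3 for OLS to produce a unique set of 𝛽̂'s.

```python
import numpy as np

# Hypothetical values of an existing X variable (illustrative only).
x = np.array([10.0, 12.0, 15.0, 11.0, 18.0, 20.0])


def design_rank(new_x):
    """Rank of the design matrix [intercept, x, new_x]."""
    X = np.column_stack([np.ones_like(x), x, new_x])
    return np.linalg.matrix_rank(X)


# New X variables created from x by a linear rule: perfectly collinear.
rank_scaled = design_rank(4.0 * x)    # multiply x by a constant
rank_shifted = design_rank(x + 5.0)   # add a constant (collinear with intercept and x)

# For comparison, a nonlinear function of x keeps all three columns independent.
rank_squared = design_rank(x ** 2)

print(rank_scaled, rank_shifted, rank_squared)
```

In the first two cases the rank is 2 rather than 3, so one column carries no new information and the OLS equations have no unique solution. Squaring `x` is a nonlinear rule, so that design matrix keeps full rank.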
Example: Suppose a researcher is building a regression equation to forecast the cost per month of cars produced by an auto manufacturer. Suppose that cost depends on the number of auto bodies used in producing the cars as well as the number of wheels. The researcher has data on the number of car bodies used by the manufacturer each month, so, to estimate the number of wheels used, the researcher simply multiplies the number of car bodies by 4, because four wheels are used per car body. Now, if the researcher includes both Xbodies, the number of car bodies used per month, and Xwheels, the number of wheels used per month, in the regression equation that is used to predict cost, the regression will fail, because Xbodies is perfectly correlated with Xwheels. If we graphed Xwheels against Xbodies, we would have a perfectly straight line with a slope of 4. This perfectly straight relationship between Xwheels and Xbodies is a cause of Perfect Multicollinearity.

Strong (but not Perfect) Multicollinearity

More commonly, some of the X variables in the regression equation are strongly, but imperfectly, linearly correlated with other X variables in the equation. This situation is what researchers typically mean when they refer to the “Multicollinearity Problem.” Multicollinearity exists to some degree in almost all data samples. Therefore, we do not test for it; we simply assume it is always there. However, it does not cause major problems unless it is strong / severe / present to a large degree. So, we typically try to assess the severity of the multicollinearity problem in several ways.

Assessing the Severity of Multicollinearity

Multicollinearity may be strong / severe / present in your data sample to a large degree when:

1. R² is very large but many t-values are insignificant.
2. When one X variable is dropped from the model, the 𝛽̂ coefficients of the X variables that remain in the model change substantially in size, sign and/or significance.
3. The Pearson correlation coefficient "r" between two X variables in your model is large in absolute value. Recall that the Pearson correlation coefficient is a measure of the LINEAR relationship between two variables. You should check the correlation between every pair of X variables in your model. (Remember that “PROC CORR” in SAS will easily produce a Correlation Matrix, which shows the correlation between every pair of X variables in your model.) If the correlation between two X variables is very large, say over 0.70 in absolute value, then you may need to take some action to reduce the multicollinearity (see below).

SAS Code for Calculating Correlation Coefficients

In SAS, PROC CORR can be used to easily calculate Pearson correlation coefficients for every pair of X variables in the dataset. For example, if your dataset is named "dataset01" and contains four X variables named X1, X2, X3 and X4, the following SAS code will calculate correlation coefficients for each pair of X variables:

    proc corr data=dataset01;
      var X1 X2 X3 X4;
    run;

Consequences of Strong (but not Perfect) Multicollinearity

When multicollinearity is strong / severe / present to a large degree, the OLS method is still BLUE (which is good), but recall that BLUE means that the estimates of the β's (the 𝛽̂'s) will be unbiased and have minimum variance on average over repeated samples. In a particular sample of data, multicollinearity can cause the following problems:

1. R² values appear to be very high when in reality they shouldn’t be.
2. Some of the 𝛽̂'s may appear to have the “wrong” signs.
3. The s.e.’s of the 𝛽̂'s are very large. This can cause the t-statistics of some 𝛽̂'s to be insignificant when in reality they should be significant, which means that some X variables may appear to have no effect on Y when in fact they do.
4. If you drop an X variable from the regression, the t-values of some of the remaining X variables change from insignificant to significant, so it becomes difficult to tell which X variables are truly significant.
5. The 𝛽̂'s and their s.e.’s are very sensitive to small changes/errors in the data.

Remedies for Multicollinearity

1. Transform the X variables used in the model. For example, you may need to use the log of X, or X², in your regression equation instead of the original X variable. If you use the log of X, or X², instead of X, then you “break” the linear relationship between that X variable and other X variables in the model, and the multicollinearity problem may go away or at least become much smaller. Another possible transformation is adjusting X variables for inflation. For example, if you have more than one X variable measured in "dollar" units or other "money" units, the “nominal” (not adjusted for inflation) forms of these variables may be highly correlated with one another, whereas the “real” (inflation-adjusted) forms of these variables may not be, so you may need to adjust any "money" variables for inflation in order to reduce multicollinearity among the "money" X variables in the model.
2. Drop one of the collinear X variables from the model. However, if the dropped variable actually has a significant effect on Y, then dropping it from the model will cause the problems associated with Omitting a Relevant Variable (as discussed in the Model Specification handout), such as biasing the 𝛽̂'s for the X variables that remain in the model (yikes!). But, if the bias is small, it may be better to accept a little bias in order to eliminate a lot of multicollinearity.
3. Obtain additional data or a new sample. With luck, there will be less multicollinearity among the X variables in the augmented / new sample. This can work when the multicollinearity in the original data sample was not due to any real linear relationship among the X variables, but was simply due to an unlucky random sample that happened, by pure chance, to have two X variables whose values lined up with one another.

A Situation in which Multicollinearity is NOT a Problem: Forecasting/Predicting Y

Multicollinearity makes it difficult to separate the effects of the different X variables on Y, and it makes it difficult to determine which of the X variables are actually significant. But, if you only want to predict Y, and you don’t care about separating the effects of the individual X variables, then multicollinearity is not much of a problem, even when it exists, as long as the collinearity among the X variables that was present in the sample continues to hold during the forecast period. However, if the X variables are correlated in your sample of data but are not correlated out there in the real world (i.e., in the population), then your model will not necessarily predict Y very well. So, if multicollinearity is present in a model, and you decide to use the model to predict Y, then the predictions are made under the assumption that any correlations among the X variables in the sample also exist among the X variables in the population.
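
The forecasting point can be illustrated with the car-cost example in its limiting case of perfect collinearity, where the arithmetic is exact. With strong but imperfect collinearity, the agreement would be approximate rather than exact. The sketch below is in Python (not the handout's SAS). The two coefficient sets, the body counts, and the changed wheels-per-body ratio are hypothetical values chosen for illustration, not estimates from any data.

```python
import numpy as np


def predict(coefs, bodies, wheels):
    """Predicted monthly cost from (intercept, bodies coefficient, wheels coefficient)."""
    b0, b_bodies, b_wheels = coefs
    return b0 + b_bodies * bodies + b_wheels * wheels


# Two hypothetical coefficient sets that split the same total effect differently.
coefs_a = (100.0, 10.0, 0.0)  # whole effect loaded on bodies
coefs_b = (100.0, 2.0, 2.0)   # effect shared: 2 + 2 * 4 = 10 per body

bodies = np.array([50.0, 60.0, 70.0])

# Forecast period in which the sample relationship (wheels = 4 * bodies) still holds.
wheels_same = 4.0 * bodies
gap_same = np.max(np.abs(predict(coefs_a, bodies, wheels_same)
                         - predict(coefs_b, bodies, wheels_same)))

# Forecast period in which the relationship has changed (hypothetical: 3 wheels per body).
wheels_changed = 3.0 * bodies
gap_changed = np.max(np.abs(predict(coefs_a, bodies, wheels_changed)
                            - predict(coefs_b, bodies, wheels_changed)))

print(gap_same, gap_changed)
```

While wheels = 4 × bodies, both coefficient sets give identical forecasts, so it does not matter how the effect is split between the two X variables. Once the ratio changes, the forecasts differ by as much as 140, and the original sample gives no way to tell which split is right.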