
Phase Vocoder Report for Audio Signal Processing

Gerald Leung

V00659924

Table of Contents

1. Windowed Overlapping Segments
2. Verifying Windowed Overlapping Segments
   a. Plotting a 3D spectrogram
3. Phase Response
   a. Matlab Implementation
4. Effects of Cyclic Shift
5. Implementation of Phase Vocoder and Audio Effects
   a. Time Stretch
   b. Pitch Shift
   c. Robotize
   d. Whisperization
   e. Stable and unstable transients
   f. De-noising
6. Audio Compression
   a. Frequency Threshold
   b. Retaining strongest N Frequencies

1. Windowed Overlapping Segments

This project builds on the implementation of windowed overlapping segments from Assignment 5.

2. Verifying Windowed Overlapping Segments

The Fast Fourier Transform (FFT) is an algorithm that computes the magnitude and phase of a signal's frequency components. Fourier's theorem states that any periodic signal can be reconstructed from a weighted sum of sinusoids, and the magnitude and phase spectra are the amplitudes and phases of those sinusoids. In audio signal processing it is common practice to process the data one segment at a time, especially in real-time applications. A segment cut from an audio signal is most likely not periodic within the segment, so its FFT does not accurately represent the true frequency content: energy from each component spreads into neighbouring bins. This error is known as leakage.
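The leakage described above can be reproduced with a short sketch. It compares the FFT of a cosine that completes a whole number of cycles in the segment with one that does not. The segment length `N` and the cycle counts are illustrative values, not taken from the project code.

```matlab
% Leakage sketch: integer vs. non-integer number of cycles in one segment.
N = 64;                          % segment length (illustrative)
n = 0:N-1;
xPeriodic = cos(2*pi*8*n/N);     % exactly 8 cycles: periodic within the segment
xLeaky    = cos(2*pi*8.5*n/N);   % 8.5 cycles: discontinuity at the segment edge

magPeriodic = abs(fft(xPeriodic));
magLeaky    = abs(fft(xLeaky));

% Count the bins that hold more than 1% of the peak magnitude.
binsPeriodic = sum(magPeriodic > 0.01*max(magPeriodic));
binsLeaky    = sum(magLeaky    > 0.01*max(magLeaky));
fprintf('significant bins: %d (periodic) vs %d (leaky)\n', binsPeriodic, binsLeaky);
```

The periodic cosine occupies only its own bin and the mirrored negative-frequency bin, while the non-periodic one spreads into many more bins.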

a. Plotting a 3D spectrogram

A 3D spectrogram displays the time-frequency content of a signal. It is produced by taking the Short-Time Fourier Transform (STFT), that is, the FFT of each window segment, and plotting the resulting spectrum for each segment. Each window segment corresponds to one line along the time axis of the 3D graph.

The following graph is a spectrogram of a simple cosine that is not periodic within the segment, computed with no window overlapping; it illustrates the effect of leakage.

Ideally, we would expect a single clean spike at the frequency of the cosine wave.

To rectify this problem, each segment is multiplied by a window function and the segments are overlapped. The window tapers both ends of the segment to zero, so that the segment's periodic extension is continuous. The following figures show six test cases used to verify that the leakage effect is rectified.

A cosine of 1 Hz was used to construct the window. From the above graphs it can clearly be seen that the leakage effect has been rectified except for the 0.75 Hz cosine signal, because the FFT resolution is not sufficient to resolve that signal. This is a consequence of computing in discrete time and frequency. Notice that there are also artifacts in the 200 Hz and 1 Hz signals. These are most likely also due to the limited frequency resolution of the window function.
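One way to check the windowed overlapping segments numerically is to overlap-add the window alone. With the raised-cosine window formula used in the listings of this report and a hop of half the window length, the shifted windows should sum to a constant, so windowing followed by overlap-add returns the input unchanged. The sketch below uses a small illustrative window length and frame count.

```matlab
% Overlap-add of the window itself with 50% overlap.
windowSize = 16;                          % illustrative, the report uses 2048
hopSize = windowSize/2;
n = 1:windowSize;
w = 0.5*(1 - cos(2*pi*n/windowSize));     % same formula as Y2 in the listings

numFrames = 6;
acc = zeros(1, hopSize*(numFrames + 1));
for d = 1:numFrames
    idx = (d-1)*hopSize + (1:windowSize);
    acc(idx) = acc(idx) + w;
end

% Skip the first and last half-window, where only one window contributes.
interior = acc(hopSize+1 : end-hopSize);
maxDeviation = max(abs(interior - 1));
fprintf('largest deviation from 1: %g\n', maxDeviation);
```

A deviation at the level of floating-point round-off indicates that the window and hop size pair reconstructs the signal without amplitude modulation.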

3. Phase Response

The phase spectrogram was constructed by taking the angle of each FFT bin and plotting the values against time.

The following graph is the resulting 3D phase spectrogram for a 200 Hz cosine wave.

The FFT returns a real and an imaginary value for each bin, from which the magnitude and phase at that frequency are obtained. The FFT time origin is at the left edge of the window. Consequently, a pulse in the middle of the window has a phase of 0, pi, 0, -pi ... across successive bins, since the components of the cosine signal are 0, +1, 0, -1 ... This causes the phase to unwrap in opposite directions for even and odd FFT bins.

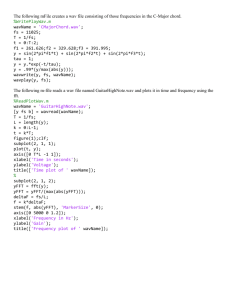

a. Matlab Implementation

The following Matlab code plots the 3D magnitude and phase spectrograms. The first listing produces the 3D magnitude spectrogram.

% Magnitude spectrogram of flute.wav from windowed, 50% overlapping segments
clear all;
close all;
%---------------------- Input -----------------------%
% wavread was removed in newer MATLAB releases; audioread is the replacement
A = wavread('flute.wav');
A = A(:,1)';                           % first channel as a row vector
% Fixed window size = 2048
windowSize = 2048;
halfSignal = 1:windowSize;
Y2 = .5*(1-cos(2*pi*halfSignal/windowSize));   % raised-cosine window
hopSize = windowSize/2;
numFrames = floor(length(A)/hopSize) - 1;
out = zeros(1,length(A));
specMag = zeros(windowSize, numFrames);
for d = 1:numFrames
    idx = (d-1)*hopSize+1 : (d-1)*hopSize+windowSize;
    %In time domain
    wX = Y2.*A(idx);
    %In frequency domain
    Wx = fft(wX);
    %Back in time domain: overlap add to rebuild the signal
    out(idx) = out(idx) + real(ifft(Wx));
    %spectrogram magnitude in dB for this window segment (eps avoids log10(0))
    specMag(:,d) = 20*log10(abs(Wx) + eps);
end
%set the spectrogram axis
X = 1:numFrames;
Y = 1:windowSize/2;
Z = specMag(1:windowSize/2, 1:numFrames);
%plot it
figure(1)
surf(X, Y, Z);
title('3D spectrogram');

Similar to the magnitude spectrogram, the phase spectrogram is plotted by taking the angle of every FFT bin in each window segment. Each window corresponds to one line on the spectrogram. The following Matlab code produces the 3D phase spectrogram using flute.wav as a simple test input file.

% Magnitude and phase spectrograms of flute.wav
clear all;
close all;
%---------------------- Input -----------------------%
A = wavread('flute.wav');
A = A(:,1)';                           % first channel as a row vector
% Fixed window size = 2048
windowSize = 2048;
halfSignal = 1:windowSize;
Y2 = .5*(1-cos(2*pi*halfSignal/windowSize));   % raised-cosine window
hopSize = windowSize/2;
numFrames = floor(length(A)/hopSize) - 1;
out = zeros(1,length(A));
specMag = zeros(windowSize, numFrames);
specPhase = zeros(windowSize, numFrames);
for d = 1:numFrames
    idx = (d-1)*hopSize+1 : (d-1)*hopSize+windowSize;
    %In time domain
    wX = Y2.*A(idx);
    %In frequency domain
    Wx = fft(wX);
    %Back in time domain: overlap add to rebuild the signal
    out(idx) = out(idx) + real(ifft(Wx));
    %spectrogram magnitude (dB) and phase for this window segment
    specMag(:,d) = 20*log10(abs(Wx) + eps);
    specPhase(:,d) = angle(Wx);
end
%set the magnitude spectrogram axis
X = 1:numFrames;
Y = 1:windowSize/2;
Z = specMag(1:windowSize/2, 1:numFrames);
%plot it
figure(1)
surf(X, Y, Z);
title('3D spectrogram');
Z = specPhase(1:windowSize/2, 1:numFrames);
figure(2)
surf(X, Y, Z);
title('3D Phase Spectrogram');

4. Effects of Cyclic Shift

As described in Section 3, the FFT time origin is at the left edge of the window, so a pulse in the middle of the window produces a phase that alternates from bin to bin and unwraps in opposite directions for even and odd FFT bins.

A simpler phase relationship is obtained by performing a circular shift before taking the FFT, which moves the centre of the window to the time origin. The following graph illustrates the effect on phase with and without the circular shift.
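The effect of the circular shift can also be checked numerically with a unit pulse placed at the centre of the window. The sketch below is illustrative only; the window length `N` is an arbitrary small value, and `fftshift` is used as the circular shift, as in the later listings.

```matlab
% Phase of a centred pulse with and without a circular shift.
N = 16;
pulse = zeros(N, 1);
pulse(N/2 + 1) = 1;                           % pulse at the centre of the window

phaseNoShift = angle(fft(pulse));             % alternates between 0 and +/-pi
phaseShifted = angle(fft(fftshift(pulse)));   % pulse moved to the first sample

disp([phaseNoShift phaseShifted]);
```

Without the shift, the phase flips by pi between neighbouring bins. With the shift, the pulse sits at the time origin and every bin has zero phase.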

5. Implementation of Phase Vocoder and Audio Effects

The implementation of the phase vocoder was verified using Tom_diner.wav, a .wav file provided by the DAFX web source. Each audio grain was multiplied by the user-defined window function and its FFT was taken. The IFFT and overlap-add were then performed to reconstruct the original audio signal.

Phase unwrapping is needed to find the phase difference between the same FFT bin in successive windows. Suppose the phase is represented in the range 0 to 360 degrees. If the phase advances from 330 degrees to 30 degrees (that is, to 390 degrees), a direct subtraction gives 30 - 330 = -300 degrees, which is negative and incorrect. To rectify this, the phase must be unwrapped from 30 degrees to 390 degrees. This gives the correct phase difference of 390 - 330 = 60 degrees.

The following figure illustrates the effect of phase unwrapping:
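The listings later in this report call a helper named `princarg`, which is not a Matlab built-in and whose definition is not shown. A common definition, assumed here, wraps any phase in radians into the interval (-pi, pi]. The sketch below applies it to the 330 to 30 degree example.

```matlab
% Principal argument: wrap a phase (radians) into the interval (-pi, pi].
% This definition is an assumption; the report does not show its own helper.
princarg = @(phase) mod(phase + pi, -2*pi) + pi;

prevPhase = 330*pi/180;
currPhase = 30*pi/180;

rawDiffDeg     = (currPhase - prevPhase)*180/pi;            % -300 degrees
wrappedDiffDeg = princarg(currPhase - prevPhase)*180/pi;    % 60 degrees
fprintf('raw: %.1f deg, wrapped: %.1f deg\n', rawDiffDeg, wrappedDiffDeg);
```

Saved as a function file `princarg.m` with the same body, this helper would satisfy the calls in the time stretch, pitch shift and stable/unstable listings.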

a. Time Stretch

Time stretching is performed in the spectral-manipulation stage of the phase vocoder. The FFT magnitude and phase are first computed for each window segment. The magnitude increment and phase increment relative to the previous segment are then calculated and linearly interpolated according to the time stretch ratio. Because the FFT represents the segment as a sum of sinusoids, the time-stretched audio signal can be reconstructed in the time domain by summing sinusoids generated from the interpolated magnitudes and phases. This procedure is repeated for all the windows in the audio signal and overlap-add is performed.
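The phase increment step can be illustrated on a single sinusoid. The sketch below estimates the frequency of a test tone from the phase of one FFT bin in two successive windows, using the same window length and hop size as the time stretch listing. The test frequency `f0` and the inline `princarg` definition are assumptions made for this example.

```matlab
% Frequency estimate of one bin from the phase increment between two windows.
princarg = @(phase) mod(phase + pi, -2*pi) + pi;   % assumed helper, wraps to (-pi, pi]

fs = 44100; WLen = 2048; n1 = 256;   % sizes as in the time stretch listing
f0 = 1000;                           % illustrative test tone in Hz
t = (0:WLen + n1 - 1)'/fs;
x = cos(2*pi*f0*t);
w = 0.5*(1 - cos(2*pi*(1:WLen)'/WLen));            % raised-cosine window

F0 = fft(x(1:WLen).*w);                            % window at pin = 0
F1 = fft(x(n1+1:n1+WLen).*w);                      % window one hop later

k = round(f0*WLen/fs);                             % nearest bin (0-based)
omega = 2*pi*n1*k/WLen;                            % nominal phase advance per hop
delta_phi = omega + princarg(angle(F1(k+1)) - angle(F0(k+1)) - omega);
f_est = delta_phi/(2*pi*n1)*fs;                    % estimated frequency in Hz
fprintf('estimated frequency: %.3f Hz\n', f_est);
```

Dividing `delta_phi` by the hop size gives the per-sample phase increment that the listing accumulates in `psi` to drive each cosine.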

The following Matlab code is an implementation of time stretch using a phase vocoder. Refer to time_stretch.wav for results.

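function [ output_args ] = time_stretch( input_args )
%TIME_STRETCH Time-stretch redwheel.wav with a phase vocoder.
%   n1 is the analysis hop and n2 the synthesis hop; writes time_stretch.wav.
%   Requires the helper princarg, which wraps a phase into (-pi, pi].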

%window grain size

n1 = 256;

%time stretched window grain size

n2 = 3*1024;

%window length

WLen = 2048;

w1 = hanning(WLen);

w2 = w1;

[in_signal, FS] = wavread('redwheel.wav');

L = length(in_signal);

in_signal = [zeros(WLen, 1);

in_signal;

zeros(WLen-mod(L, n1),1)] / max(abs(in_signal));

stretch_factor = n2/n1;

out_signal = zeros(WLen + ceil(length(in_signal)*stretch_factor), 1);

grain = zeros(WLen, 1);

ll = WLen/2;

omega = 2*pi*n1*[0:ll-1]'/WLen;

phi0 = zeros(ll, 1);

r0 = zeros(ll, 1);

psi = zeros(ll, 1);

res = zeros(n2, 1);

pin = 0;

pout = 0;

pend = length(in_signal)-WLen;

%iterate through the audio file

while pin<pend

%multiply audio segment with window function

grain = in_signal(pin+1:pin+WLen).*w1;

%take the fft

fc = fft(fftshift(grain));

%get the magnitude and phase

f = fc(1:ll);

r = abs(f);

phi = angle(f);

%phase unwrap

delta_phi = omega + princarg(phi - phi0-omega);

delta_r = (r-r0)/n2;

delta_psi = delta_phi/n1;

%reconstruct the audio signal from interpolated phase and mag.

for k = 1:n2

r0 = r0 + delta_r;

psi = psi + delta_psi;

res(k) = r0'*cos(psi);

end

phi0 = phi;

r0 = r;

psi = princarg(psi);

out_signal(pout+1:pout + n2) = res;

pin = pin + n1;

pout = pout + n2;

end

%Normalize by the largest absolute sample so negative peaks cannot clip
out_signal = out_signal./max(abs(out_signal));
soundsc(out_signal, FS);
wavwrite(out_signal, FS, 'time_stretch.wav');

b. Pitch Shift

Like time stretch, pitch shift is performed in the spectral-manipulation stage of the phase vocoder. The FFT magnitude and phase are first computed for each window segment. The phase increment of each FFT bin is then computed and multiplied by the pitch shift ratio, which scales the frequency of every sinusoidal component. The audio signal is reconstructed from the modified phase of each FFT bin. The final result is an output whose pitch is shifted by the pitch shift ratio.

The following Matlab code is an implementation of pitch shift using a phase vocoder. Refer to pitch_shift.wav for results.

function [ output_args ] = pitch_shift( input_args )
%PITCH_SHIFT Pitch-shift redwheel.wav with a phase vocoder.
%   Requires the helper princarg, which wraps a phase into (-pi, pi].

n1 = 256;

%pitch shift ratio

pit_ratio = 1.4;

WLen = 2048;

w1 = hanning(WLen);

w2 = w1;

[in_signal, FS] = wavread('redwheel.wav');

soundsc(in_signal, FS);

L = length(in_signal);

in_signal = [zeros(WLen, 1);

in_signal;

zeros(WLen-mod(L, n1),1)] / max(abs(in_signal));

out_signal = zeros(length(in_signal), 1);

grain = zeros(WLen, 1);

ll = WLen/2;

omega = 2*pi*n1*[0:ll-1]'/WLen;

phi0 = zeros(ll, 1);

r0 = zeros(ll, 1);

psi = zeros(ll, 1);

res = zeros(n1, 1);

pin = 0;

pout = 0;

pend = length(in_signal)-WLen;

while pin<pend

%multiply audio segment with window function

grain = in_signal(pin+1:pin+WLen).*w1;

%compute magnitude and phase of FFT

fc = fft(fftshift(grain));

f = fc(1:ll);

r = abs(f);

phi = angle(f);

%unwrap phase to get phase increment

delta_phi = omega + princarg(phi - phi0-omega);

delta_r = (r-r0)/n1;

%multiply the phase with the pitch ratio factor

delta_psi = pit_ratio*delta_phi/n1;

%reconstruct the audio signal

for k = 1:n1

r0 = r0 + delta_r;

psi = psi + delta_psi;

res(k) = r0'*cos(psi);

end

phi0 = phi;

r0 = r;

psi = princarg(psi);

out_signal(pout+1:pout+n1) = out_signal(pout+1:pout+n1)+res;

pin = pin + n1;

pout = pout + n1;

end

%Normalize output signal
%out_signal = out_signal(WLen/2 + n1 + 1:length(out_signal))/max(abs(out_signal));
out_signal = out_signal./max(abs(out_signal));
soundsc(out_signal, FS);
wavwrite(out_signal, FS, 'pitch_shift.wav');

c. Robotize

To create a robotize effect, the phase of every FFT bin in each window grain is set to zero. The IFFT is then performed on the magnitude of the original FFT, and overlap-add is performed on all the window segments to reconstruct the audio signal. The window length must be chosen carefully, because the window length and hop size determine the pitch of the robotic voice.

The following Matlab code is an implementation of the robotize effect using a phase vocoder. Refer to robotize.wav for results.

function [ output_args ] = robotize( input_args )
%ROBOTIZE Robotize redwheel.wav by setting the phase of every FFT bin to zero.
clear all;
close all;
%---------------------- Input -----------------------%
[A fs] = wavread('redwheel.wav');
A=A';
% Fixed window size = 256
windowSize = 256;
halfSignal =1:windowSize;
%define window function over the full window length
Y2 = .5*(1-cos(2*pi*halfSignal*1/(windowSize)));

%initialization

output2 = A(1:windowSize).*Y2;

hopSize = windowSize/2;

out=zeros(1,length(A));

for d=1 : length(A)/hopSize-1

%In time domain

wA = A((d-1)*hopSize+1: (d-1)*hopSize+windowSize);

wX = Y2.*wA;

%wX = wA;

specSegment = wX;

%In frequency domain

Wx=fft(wX);

%take ifft of the magnitude only, i.e. with the phase of every bin set to zero
YY = real(ifft(abs(Wx)));
%overlap add to reconstruct the audio signal
out((d-1)*hopSize+1: (d-1)*hopSize+windowSize)=YY+out((d-1)*hopSize+1: (d-1)*hopSize+windowSize);
end
%normalize
out = out ./ max(abs(out));
soundsc(out, fs);
wavwrite(out, fs, 'robotize.wav');

d. Whisperization

As with the robotize effect, the whisperization effect modifies only the phase: the phase of each FFT bin is set to a random number in the range 0 to 2pi for each window grain. The IFFT is then performed for each window grain and overlap-add is performed to reconstruct the whisperized audio signal.
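One possible refinement, not part of the report's listing, is to draw the random phase only for the positive-frequency bins and mirror it with opposite sign onto the negative-frequency bins. The spectrum then stays conjugate symmetric, so the IFFT is real and the original magnitudes are preserved exactly. The grain below is random test data and all names are illustrative.

```matlab
% Random phase with conjugate symmetry for one grain (even window length).
WLen = 128;
grain = randn(WLen, 1);                  % stand-in for one windowed grain
r = abs(fft(grain));                     % magnitudes to keep

half = WLen/2;
phi = zeros(WLen, 1);                    % DC and Nyquist bins keep zero phase
phi(2:half) = 2*pi*rand(half - 1, 1);    % random phase, positive frequencies
phi(half+2:end) = -phi(half:-1:2);       % mirrored and negated, negative frequencies

y = ifft(r.*exp(1i*phi));
maxImag = max(abs(imag(y)));             % round-off only
magError = max(abs(abs(fft(real(y))) - r));
fprintf('max imaginary part: %g, magnitude error: %g\n', maxImag, magError);
```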

The following Matlab code is an implementation of the whisperization effect using a phase vocoder. Refer to whisperize.wav for results.

function [ output_args ] = phase_vocoder( input_args )
%PHASE_VOCODER Whisperize redwheel.wav by randomizing the phase of every FFT bin.

clear all;

close all;

%---------------------- Input -----------------------%

[A fs] = wavread('redwheel.wav');

A=A';

% Fixed window size

windowSize = 128;

halfSignal =1:windowSize;

Y2 = .5*(1-cos(2*pi*halfSignal*1/(windowSize)));

output2 = A(1:windowSize).*Y2;

hopSize = windowSize/2;

out=zeros(1,length(A));

for d=1 : length(A)/hopSize-1

%In time domain

wA = A((d-1)*hopSize+1: (d-1)*hopSize+windowSize);

wX = Y2.*wA;

%In frequency domain

Wx=fft(wX);

r = abs(Wx);

phi = 2*pi*rand(1,length(Wx));

%rebuild the spectrum from the original magnitude and the random phase
Wx = r.*exp(1i*phi);
YY = real(ifft(Wx));
out((d-1)*hopSize+1: (d-1)*hopSize+windowSize)=YY+out((d-1)*hopSize+1: (d-1)*hopSize+windowSize);
end
%Normalizing
out = out ./ max(abs(out));
soundsc(out, fs);
wavwrite(out, fs, 'whisperize.wav');

e. Stable and unstable transients

The stable and unstable components of a signal can be separated by examining, for each FFT bin, how its phase increment changes between successive windows. For the stable components, only the bins whose phase increment stays nearly constant from one window to the next (deviation below a threshold) are kept. The opposite condition applies for the unstable components. Any FFT bins that violate the condition are set to 0. The signal is then reconstructed from the modified FFT.

The following Matlab code is an implementation. Refer to stable.wav and unstable.wav for results.

%===== this program extracts the stable components of a signal
%===== w1 and w2: windows (analysis and synthesis)
%===== windowLength: is the length of the windows
%===== n1 and n2: steps (in samples) for the analysis and synthesis
%===== requires the helper princarg, which wraps a phase into (-pi, pi]
close all;
clear all;
%------------ INPUT ------------
[INPUT,fs] = wavread('gavot.wav');
test = 1; % 0.5 % 1
n1 = 200; %256
n2 = n1;
windowLength = 2048;
halfSignal = 1:windowLength;
w1 = .5*(1 - cos(2*pi*(halfSignal)'/(windowLength)));
w2 = w1;
inputLength = length(INPUT);
INPUT = [zeros(windowLength, 1); INPUT; zeros(windowLength-mod(inputLength,n1),1)] / max(abs(INPUT));
%----- initializations -----
ratio = n2/n1
OUTPUT = zeros(windowLength+ceil(length(INPUT)*ratio),1);
pin = 0;
pout = 0;
pend = length(INPUT)-windowLength;
count = 0;
devcent = 2*pi*n1/windowLength;
vtest = test*devcent
grain = zeros(windowLength,1);
theta1 = zeros(windowLength,1);
theta2 = zeros(windowLength,1);
while pin < pend
    newPin = pin+windowLength;
    %segment multiplied by window
    segment = INPUT(pin+1:newPin).* w1;
    % circular shifting by fftshift
    f = fft(fftshift(segment));
    r = abs(f);
    theta = angle(f);
    %deviation of the phase increment over the last three windows
    dev = princarg(theta-2*theta1+theta2);
    %keep the stable bins only; use abs(dev)>=vtest for the unstable part
    ft = f.*(abs(dev)<vtest);
    % circular shifting by fftshift and back in time domain
    grain = fftshift(real(ifft(ft))).*w2;
    theta2 = theta1;
    theta1 = theta;
    %overlap add the masked grain
    newPout = pout+windowLength;
    OUTPUT(pout+1:newPout) = OUTPUT(pout+1:newPout) + grain;
    pin = pin + n1;
    pout = pout + n2;
    count = count + 1;
    %magnitude (dB) of the output grain for the optional spectrogram plot
    smallMag(1:windowLength, count) = 20*log10(abs(fft(grain)) + eps);
end

%---------------------------- OutputPLOT ----------------------------%

% %OUTPUT PLOT

% X = 1:count;

% Y = 1:max(n2,n1);

% Z = smallMag(Y,X);

% surf(X,Y,Z);

% shading interp;

%

% %INPUT PLOT

% figure(2);

% [S,F,T,P] = spectrogram(INPUT,2048);

% surfc(T,F,20*log10(P));

% shading interp

%------------------------------ Output -------------------------------%

OUTPUT = OUTPUT(windowLength+1:length(OUTPUT))/max(abs(OUTPUT));

soundsc(OUTPUT, fs);

wavwrite(OUTPUT, fs, 'stable.wav');

f. De-noising

De-noising an audio signal emphasizes or maintains specific areas of the frequency spectrum while lowering the noise within the sound. This can be accomplished by non-linear spectral subtraction. In practical terms, a signal is de-noised by keeping the phase of the FFT and modifying the magnitude so that the higher levels are maintained and the lower levels are attenuated. One of the following non-linear functions is applied in the implementation of the de-noise algorithm:

$$f(x) = \frac{x^2}{x + c} \quad \text{or} \quad f(x) = \frac{x}{x + c}$$

The constant c adjusts the sharpness of the attenuation curve. The smaller the value, the sharper the attenuation curve will be.
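As a small numeric illustration, the sketch below evaluates both functions for a few magnitudes with c = 0.01, reading x/(x+c) as a gain and x^2/(x+c) as the resulting attenuated magnitude. The sample magnitudes in `x` are arbitrary illustrative values.

```matlab
% Evaluate the non-linear attenuation functions for c = 0.01.
c = 0.01;
x = [0.001 0.01 0.1 1];          % illustrative input magnitudes
gain = x./(x + c);               % f(x) = x/(x+c), read as a gain
gain_dB = 20*log10(gain);
attenuated = x.^2./(x + c);      % f(x) = x^2/(x+c), the attenuated magnitude

disp([x; gain; gain_dB; attenuated]);
```

A magnitude equal to c is halved (about -6 dB), magnitudes well above c pass almost unchanged, and magnitudes well below c are strongly attenuated.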

The following graph shows the non-linear function used to attenuate any signal below a given threshold, with c = 0.01.

The following graph illustrates the attenuation of the audio signal, both as linear gain and in dB:

The following is the matlab code for implementing the de-noise algorithm.

function [ output_args ] = denoise( input_args )
%DENOISE De-noise denoise_input.wav by non-linear attenuation of the FFT magnitudes.

n1 = 512;

n2 = n1;

WLen = 2048;

w1 = hanning(WLen);

w2 = w1;

[in_signal, FS] = wavread('denoise_input.wav');

in_signal = in_signal(1:length(in_signal), 1);   % keep the first channel only

L = length(in_signal);

in_signal = [zeros(WLen, 1);

in_signal;

zeros(WLen-mod(L, n1),1)] / max(abs(in_signal));

WLen2 = WLen/2;

coef = 0.01;

freq = (0:1:299)/WLen*FS;

out_signal = zeros(length(in_signal), 1);

pin = 0;

pout = 0;

pend = length(in_signal)-WLen;

while pin<pend

grain = in_signal(pin+1:pin+WLen).*w1;

f = fft(grain);

r = abs(f);

ft = f.*r.^2./(r+coef);

grain = real(ifft(ft)).*w2;

out_signal(pout+1:pout + WLen) = out_signal(pout+1:pout + WLen) + grain;

pin = pin + n1;

pout = pout + n2;

end

%Normalize output signal
%out_signal = out_signal(WLen/2 + n1 + 1:length(out_signal))/max(abs(out_signal));
out_signal = out_signal./max(abs(out_signal));
soundsc(out_signal, FS);
wavwrite(out_signal, FS, 'denoise.wav');

6. Audio Compression

a. Frequency Threshold

This experiment sets all frequency components below a threshold to zero. The higher the threshold, the fewer frequency components are retained. An instrumental sound clip and a voice sound clip were used to experiment with different frequency thresholds. The effect dampens the sound, and the higher the frequency threshold, the more damping is applied. The result is particularly sensitive to the threshold when it is close to the window length.
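For a real-valued grain, every frequency component appears twice in the FFT: once in the lower half of the bins and once, mirrored, in the upper half. The sketch below, assuming the intent is to remove all content below a cutoff, zeroes both members of each pair. The sizes, the test signal and the names `cutoffBin` and `binFreq` are illustrative and not taken from the report.

```matlab
% Zero every component below a cutoff, including the mirrored bins.
WLen = 64;
cutoffBin = 8;                               % cutoff as a 0-based bin number
n = (0:WLen-1)';
grain = cos(2*pi*3*n/WLen) + cos(2*pi*20*n/WLen);

f = fft(grain);
k = (0:WLen-1)';
binFreq = min(k, WLen - k);                  % same value for a bin and its mirror
f(binFreq < cutoffBin) = 0;
highpassed = real(ifft(f));

residual = max(abs(highpassed - cos(2*pi*20*n/WLen)));
fprintf('residual after removing the low component: %g\n', residual);
```

Only the component at bin 20 survives, because the component at bin 3 is removed from both halves of the spectrum.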

The following is the Matlab code for implementing audio compression:

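% The hop sizes n1 and n2 are used below but not defined in this listing;
% the values from the listing in part b are assumed here.
n1 = 512;
n2 = n1;
WLen = 2048;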

w1 = hanning(WLen);

w2 = w1;

freq_threshold = 1090;

[in_signal, FS] = wavread('redwheel.wav');

in_signal = in_signal(1:length(in_signal), 1);

L = length(in_signal);

max_sample = max(in_signal);

in_signal = [zeros(WLen, 1);

in_signal;

zeros(WLen-mod(L, n1),1)] / max(abs(in_signal));

WLen2 = WLen/2;

coef = 0.01;

freq = (0:1:299)/WLen*FS;

out_signal = zeros(length(in_signal), 1);

pin = 0;

pout = 0;

pend = length(in_signal)-WLen;

max_mag = max(abs(fft(in_signal)));

while pin<pend

grain = in_signal(pin+1:pin+WLen).*w1;

f = fft(grain);

r = abs(f);

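%zero bins 1..freq_threshold; bins above WLen/2 mirror the negative
%frequencies and are only affected once freq_threshold exceeds WLen/2
f(1:freq_threshold) = 0;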

grain = real(ifft(f)).*w2;

out_signal(pout+1:pout + WLen) = out_signal(pout+1:pout + WLen) + grain;

pin = pin + n1;

pout = pout + n2;

end

%Normalize output signal
%out_signal = out_signal(WLen/2 + n1 + 1:length(out_signal))/max(abs(out_signal));
% soundsc(in_signal, FS);
out_signal = out_signal./max(abs(out_signal));
soundsc(out_signal, FS);
wavwrite(out_signal, FS, 'compression.wav');

Refer to the compression.wav sound file for results.

b. Retaining strongest N Frequencies

This audio effect keeps the strongest frequency components and sets all others to zero. It was implemented by first determining the maximum FFT magnitude over all the window segments. A second pass then sets to 0 every frequency component whose magnitude is below a percentage threshold of that maximum. For example, if the maximum FFT magnitude is 100, a threshold of 80% sets all frequency components with a magnitude below 80 to 0.

This has close to the same effect as setting a frequency threshold. The sound begins to dampen when the percentage threshold is set to 5%. However, setting all FFT components below 1% of the maximum FFT magnitude to zero gives an audibly identical result. Setting the percentage threshold very high leaves only the strongest sinusoid in the FFT. As a result, only a “beep” is heard, which is what a pure sinusoidal signal sounds like.
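The listing in this section selects components by a percentage of the maximum magnitude. As an alternative sketch, not taken from the report, a fixed number of strongest bins can be kept in a single grain by sorting the magnitudes. The grain, its length and the value of `N` are illustrative; for a real signal `N` counts both bins of each mirrored pair.

```matlab
% Keep the N strongest FFT bins of one grain and zero the rest.
N = 4;                                   % bins to keep, counting mirrored pairs
WLen = 64;
n = (0:WLen-1)';
grain = cos(2*pi*5*n/WLen) + 0.5*cos(2*pi*12*n/WLen) + 0.01*cos(2*pi*20*n/WLen);

f = fft(grain);
[~, order] = sort(abs(f), 'descend');    % bin indices, strongest first
keep = false(WLen, 1);
keep(order(1:N)) = true;
f(~keep) = 0;
approx = real(ifft(f));

maxError = max(abs(approx - grain));
fprintf('largest reconstruction error: %g\n', maxError);
```

Here the two strong cosines are retained and only the weak third component is discarded, so the reconstruction error stays at the amplitude of that component.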

The following matlab code is the implementation of retaining the strongest N frequencies.

n1 = 512;

n2 = n1;

WLen = 2048;

w1 = hanning(WLen);

w2 = w1;

freq_threshold = 1090;

%400hz threshold

[in_signal, FS] = wavread('redwheel.wav');

in_signal = in_signal(1:length(in_signal), 1);

L = length(in_signal);

max_sample = max(in_signal);

in_signal = [zeros(WLen, 1);

in_signal;

zeros(WLen-mod(L, n1),1)] / max(abs(in_signal));

WLen2 = WLen/2;

coef = 0.01;

freq = (0:1:299)/WLen*FS;

out_signal = zeros(length(in_signal), 1);

pin = 0;

pout = 0;

pend = length(in_signal)-WLen;

max_mag = 0;

while pin<pend

grain = in_signal(pin+1:pin+WLen).*w1;

f = fft(grain);

r = abs(f);

%find the maximum FFT magnitude

if(max_mag < max(r))

max_mag = max(r);

end

grain = real(ifft(f)).*w2;

pin = pin + n1;

pout = pout + n2;

end

%now set all FFT components below a threshold to 0

pin = 0;

pout = 0;

pend = length(in_signal)-WLen;

while pin<pend

grain = in_signal(pin+1:pin+WLen).*w1;

f = fft(grain);

r = abs(f);

%zero every bin whose magnitude is below 1% of the global maximum
f(r < max_mag*0.01) = 0;

grain = real(ifft(f)).*w2;

out_signal(pout+1:pout + WLen) = out_signal(pout+1:pout + WLen) + grain;

pin = pin + n1;

pout = pout + n2;

end

plot(20*log10(abs(fft(out_signal))));
%Normalize by the largest absolute sample so negative peaks cannot clip
out_signal = out_signal./max(abs(out_signal));
soundsc(out_signal, FS);
wavwrite(out_signal, FS, 'compression.wav');