Redundancy and Suppression in Trivariate Regression Analysis

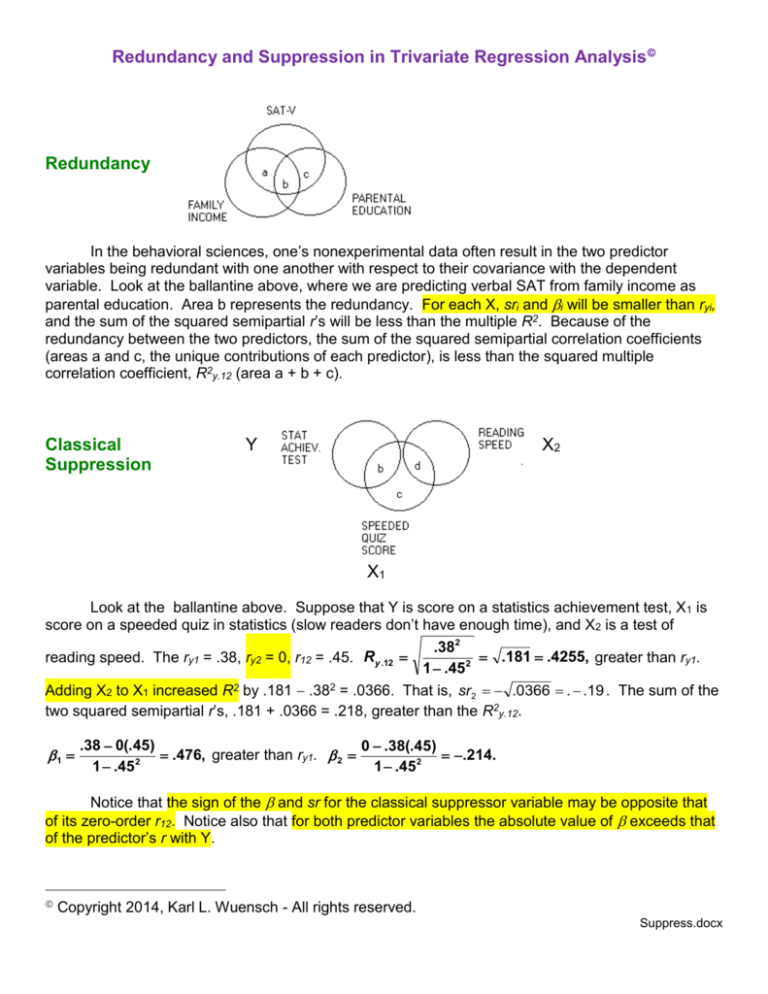

Redundancy

In the behavioral sciences, one’s nonexperimental data often result in the two predictor variables being redundant with one another with respect to their covariance with the dependent variable. Look at the ballantine above, where we are predicting verbal SAT from family income and parental education. Area b represents the redundancy. For each X, $sr_i$ and $\beta_i$ will be smaller than $r_{yi}$. Because of the redundancy between the two predictors, the sum of the squared semipartial correlation coefficients (areas a and c, the unique contributions of each predictor) is less than the squared multiple correlation coefficient, $R^2_{y.12}$ (areas a + b + c).
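The redundancy pattern can be checked numerically. The sketch below is a minimal Python illustration that assumes the standard two-predictor formulas for standardized variables. The correlations (.50, .40, .50) are hypothetical values chosen only for illustration, not the SAT data pictured above, and `trivariate` is a helper name made up for this sketch.

```python
import math

def trivariate(ry1, ry2, r12):
    """Beta weights, semipartials, and R^2 for two standardized predictors."""
    denom = 1 - r12 ** 2
    beta1 = (ry1 - ry2 * r12) / denom
    beta2 = (ry2 - ry1 * r12) / denom
    sr1 = (ry1 - ry2 * r12) / math.sqrt(denom)
    sr2 = (ry2 - ry1 * r12) / math.sqrt(denom)
    r2 = ry1 * beta1 + ry2 * beta2  # R^2_y.12
    return beta1, beta2, sr1, sr2, r2

# Hypothetical, illustrative correlations (not from the handout)
ry1, ry2, r12 = 0.50, 0.40, 0.50
beta1, beta2, sr1, sr2, r2 = trivariate(ry1, ry2, r12)

# Area b: variance in Y shared by both predictors
area_b = r2 - sr1 ** 2 - sr2 ** 2
print(f"beta1={beta1:.3f} beta2={beta2:.3f} sr1={sr1:.3f} sr2={sr2:.3f}")
print(f"R^2={r2:.3f} sum of sr^2={sr1**2 + sr2**2:.3f} area b={area_b:.3f}")
```

With these made-up values both β's and both sr's come out smaller than the corresponding r, and the leftover $R^2 - sr_1^2 - sr_2^2$ (area b) is positive.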

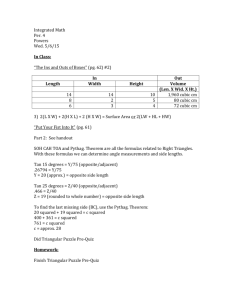

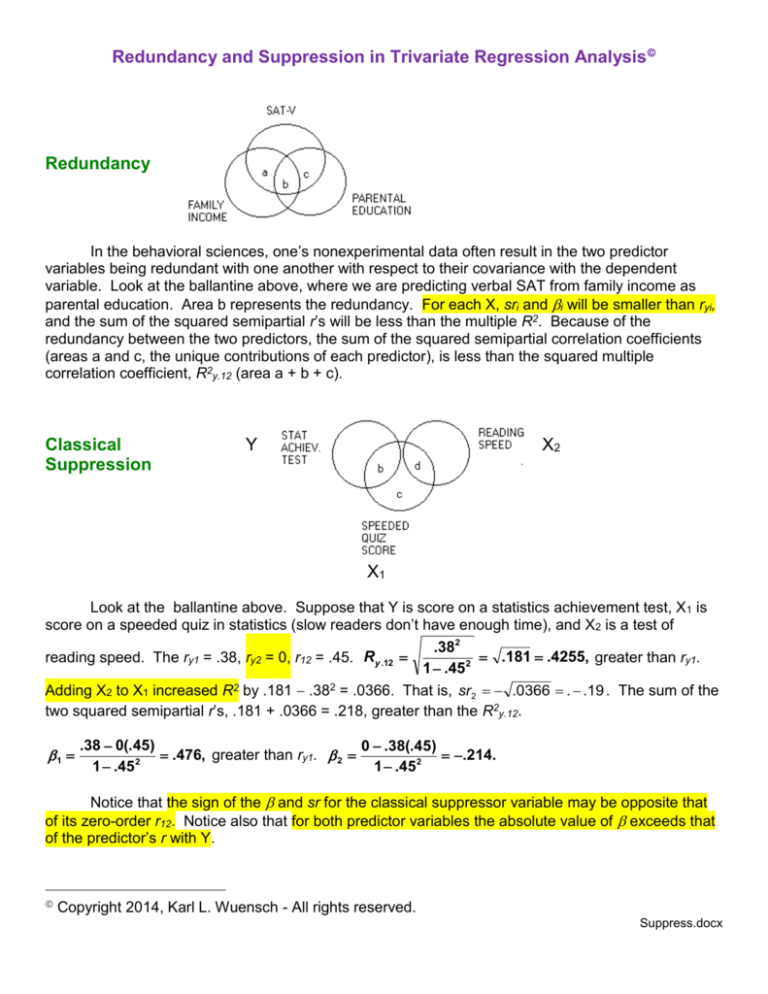

Classical Suppression

Look at the ballantine above. Suppose that Y is score on a statistics achievement test, X1 is score on a speeded quiz in statistics (slow readers don’t have enough time), and X2 is a test of reading speed. The $r_{y1}$ = .38, $r_{y2}$ = 0, $r_{12}$ = .45.

$$R_{y.12} = \sqrt{\frac{.38^2}{1 - .45^2}} = \sqrt{.181} = .4255,$$

greater than $r_{y1}$.

$$\beta_1 = \frac{.38 - 0(.45)}{1 - .45^2} = .476,$$

greater than $r_{y1}$.

$$\beta_2 = \frac{0 - .38(.45)}{1 - .45^2} = -.214.$$

Adding X2 to X1 increased $R^2$ by $.181 - .38^2 = .0366$. That is, $sr_2^2 = .0366$ and $sr_2 = -\sqrt{.0366} = -.19$. The sum of the two squared semipartial r’s, $sr_1^2 + sr_2^2$ = .181 + .0366 = .218, is greater than $R^2_{y.12}$ = .181.

Notice that the sign of the $\beta$ and sr for the classical suppressor variable may be opposite that of its zero-order correlation with the other predictor, $r_{12}$ (here $\beta_2$ = −.214 while $r_{12}$ = .45). Notice also that for both predictor variables the absolute value of $\beta$ exceeds that of the predictor’s r with Y.
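A short Python sketch that recomputes the classical suppression numbers from the three correlations, assuming the usual two-predictor formulas for standardized variables. `trivariate` is a hypothetical helper, not part of any library. Because the sketch does not round $R^2$ before subtracting, the gain from adding X2 prints as about .0367 rather than the .0366 obtained from the rounded .181.

```python
import math

def trivariate(ry1, ry2, r12):
    """Beta weights, semipartials, and R^2 for two standardized predictors."""
    denom = 1 - r12 ** 2
    beta1 = (ry1 - ry2 * r12) / denom
    beta2 = (ry2 - ry1 * r12) / denom
    sr1 = (ry1 - ry2 * r12) / math.sqrt(denom)
    sr2 = (ry2 - ry1 * r12) / math.sqrt(denom)
    r2 = ry1 * beta1 + ry2 * beta2  # R^2_y.12
    return beta1, beta2, sr1, sr2, r2

# Statistics test example: X1 = speeded quiz, X2 = reading speed
ry1, ry2, r12 = 0.38, 0.0, 0.45
beta1, beta2, sr1, sr2, r2 = trivariate(ry1, ry2, r12)

print(f"R^2 = {r2:.3f}, R = {math.sqrt(r2):.4f}")
print(f"beta1 = {beta1:.3f}, beta2 = {beta2:.3f}")
print(f"gain from adding X2 = {r2 - ry1**2:.4f}, sr2 = {sr2:.2f}")
print(f"sum of squared semipartials = {sr1**2 + sr2**2:.3f}")
```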

Copyright 2014, Karl L. Wuensch - All rights reserved.


How can we understand the fact that adding a predictor that is uncorrelated with Y (for practical purposes, one whose r with Y is close to zero) can increase our ability to predict Y? Look at the ballantine. X2 suppresses the variance in X1 that is irrelevant to Y (area d). Mathematically,

$$R^2_{y.12} = r^2_{y2} + r^2_{y(1.2)} = 0 + r^2_{y(1.2)}$$

$r^2_{y(1.2)}$, the squared semipartial for predicting Y from X1 ($sr_1^2$), is the $r^2$ between Y and the residual $(X_1 - \hat{X}_{1.2})$. It is increased (relative to $r^2_{y1}$) by removing from X1 the irrelevant variance due to X2: what variance is left in $(X_1 - \hat{X}_{1.2})$ is more correlated with Y than is unpartialled X1. Taking the total variance of X1 (areas b + c + d) as 1,

$$r^2_{y1} = \frac{b}{b+c+d} = b = .38^2 = .144,$$

which is less than

$$r^2_{y(1.2)} = \frac{b}{b+c} = \frac{b}{1-d} = \frac{b}{1-r^2_{12}} = \frac{.144}{1-.45^2} = .181 = R^2_{y.12}.$$
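The residual interpretation can be verified directly on data. This sketch assumes NumPy and builds a synthetic sample: random numbers re-shaped so that their sample correlations equal exactly .38, 0, and .45 (no real data are involved). It then residualizes X1 on X2 and compares the squared correlation of Y with X1 to the squared correlation of Y with the residual. `sample_with_correlations` is a helper written only for this illustration.

```python
import numpy as np

def sample_with_correlations(R, n=200, seed=1):
    """Return an n x k data matrix whose sample correlation matrix equals R."""
    rng = np.random.default_rng(seed)
    Z = rng.standard_normal((n, R.shape[0]))
    Z -= Z.mean(axis=0)
    # Whiten: make the sample covariance the identity matrix
    L = np.linalg.cholesky(np.cov(Z, rowvar=False))
    W = Z @ np.linalg.inv(L).T
    # Color: impose the target correlation structure
    return W @ np.linalg.cholesky(R).T

# Column order: Y, X1, X2 (classical suppression example)
R = np.array([[1.00, 0.38, 0.00],
              [0.38, 1.00, 0.45],
              [0.00, 0.45, 1.00]])
y, x1, x2 = sample_with_correlations(R).T

# Residual X1 - X1hat(from X2); the data are centered, so no intercept is needed
slope = np.cov(x1, x2)[0, 1] / np.var(x2, ddof=1)
resid = x1 - slope * x2

r2_y1 = np.corrcoef(y, x1)[0, 1] ** 2
r2_y_resid = np.corrcoef(y, resid)[0, 1] ** 2
print(f"r^2(Y, X1)         = {r2_y1:.3f}")
print(f"r^2(Y, X1 - X1hat) = {r2_y_resid:.3f}")
```

Removing the X2 part of X1 raises the squared correlation with Y from .144 to .181, the same value the ballantine areas give.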

Velicer (see Smith et al., 1992) wrote that suppression exists when a predictor’s “usefulness” is greater than its squared zero-order correlation with the criterion variable. “Usefulness” is the squared semipartial correlation for the predictor.

Our X1 has $r^2_{y1}$ = .144: all by itself it explains 14.4% of the variance in Y. When added to a model that already contains X2, X1 increases $R^2$ by .181; that is, $sr_1^2$ = .181. X1 is more useful in the multiple regression than all by itself. Likewise, $sr_2^2$ = .0366 > $r^2_{y2}$ = 0. That is, X2 is more useful in the multiple regression than all by itself.
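Velicer’s criterion translates into one comparison per predictor. The following Python sketch is illustrative only: `usefulness` and `velicer_suppressors` are hypothetical names, and the squared semipartials are computed from the standard two-predictor formula.

```python
def usefulness(ry1, ry2, r12):
    """Squared semipartial ("usefulness") of each of two predictors."""
    denom = 1 - r12 ** 2
    sr1_sq = (ry1 - ry2 * r12) ** 2 / denom
    sr2_sq = (ry2 - ry1 * r12) ** 2 / denom
    return sr1_sq, sr2_sq

def velicer_suppressors(ry1, ry2, r12):
    """Predictors whose usefulness exceeds their squared zero-order r with Y."""
    sr1_sq, sr2_sq = usefulness(ry1, ry2, r12)
    flagged = []
    if sr1_sq > ry1 ** 2:
        flagged.append("X1")
    if sr2_sq > ry2 ** 2:
        flagged.append("X2")
    return flagged

# Classical suppression example: both predictors should be flagged
print(velicer_suppressors(0.38, 0.0, 0.45))
```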

Net Suppression

[Figure: ballantine for X1 and X2]

Look at the ballantine above. Suppose Y is the amount of damage done to a building by a fire. X1 is the severity of the fire. X2 is the number of fire fighters sent to extinguish the fire. The $r_{y1}$ = .65, $r_{y2}$ = .25, and $r_{12}$ = .70.

$$\beta_1 = \frac{.65 - .25(.70)}{1 - .70^2} = .93 > r_{y1}.$$

$$\beta_2 = \frac{.25 - .65(.70)}{1 - .70^2} = -.40.$$

Note that $\beta_2$ has a sign opposite that of $r_{y2}$. It is always the X with the smaller $r_{yi}$ that ends up with a $\beta$ of opposite sign. Each $\beta$ falls outside the range from 0 to $r_{yi}$, which is always true with any sort of suppression.

Again, the sum of the two squared semipartials is greater than the squared multiple correlation coefficient:

$$R^2_{y.12} = .505, \quad sr_1^2 = .505 - r^2_{y2} = .4425, \quad sr_2^2 = .505 - r^2_{y1} = .0825, \quad .4425 + .0825 = .525 > .505.$$


Again, each predictor is more useful in the context of the other predictor than all by itself: $sr_1^2$ = .4425 > $r^2_{y1}$ = .4225 and $sr_2^2$ = .0825 > $r^2_{y2}$ = .0625.
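The fire example can be reproduced with the same two-predictor formulas. In this minimal Python sketch (the helper `trivariate` is hypothetical), nothing is rounded along the way, so $sr_1^2$ and $sr_2^2$ print as about .4424 and .0824; the .4425 and .0825 in the text come from subtracting from the rounded $R^2$ of .505.

```python
import math

def trivariate(ry1, ry2, r12):
    """Beta weights, semipartials, and R^2 for two standardized predictors."""
    denom = 1 - r12 ** 2
    beta1 = (ry1 - ry2 * r12) / denom
    beta2 = (ry2 - ry1 * r12) / denom
    sr1 = (ry1 - ry2 * r12) / math.sqrt(denom)
    sr2 = (ry2 - ry1 * r12) / math.sqrt(denom)
    r2 = ry1 * beta1 + ry2 * beta2  # R^2_y.12
    return beta1, beta2, sr1, sr2, r2

# Fire example: X1 = severity of fire, X2 = number of fire fighters
ry1, ry2, r12 = 0.65, 0.25, 0.70
beta1, beta2, sr1, sr2, r2 = trivariate(ry1, ry2, r12)

print(f"beta1 = {beta1:.2f}, beta2 = {beta2:.2f}")
print(f"R^2 = {r2:.3f}")
print(f"sr1^2 = {sr1**2:.4f} vs r_y1^2 = {ry1**2:.4f}")
print(f"sr2^2 = {sr2**2:.4f} vs r_y2^2 = {ry2**2:.4f}")
print(f"sum of squared semipartials = {sr1**2 + sr2**2:.3f}")
```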

For our example, number of fire fighters, although slightly positively correlated with amount of damage, functions in the multiple regression primarily as a suppressor of variance in X1 that is irrelevant to Y (the shaded area in the Venn diagram). Removing that irrelevant variance increases the $\beta$ for X1.

Looking at it another way, treating severity of fire as the covariate, when we control for severity of fire, the more fire fighters we send, the less the amount of damage suffered in the fire. That is, for the conditional distributions where severity of fire is held constant at some set value, sending more fire fighters reduces the amount of damage. Please note that this is an example of a reversal paradox, where the sign of the correlation between two variables in aggregated data (ignoring a third variable) is opposite the sign of that correlation in segregated data (within each level of the third variable). I suggest that you (re)read the article on this phenomenon by Messick and van de Geer [Psychological Bulletin, 90, 582-593].
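The reversal shows up when the zero-order correlation between damage and number of fire fighters is compared with their partial correlation holding severity constant. This Python sketch uses the standard first-order partial correlation formula; `partial_r_y2_given_1` is a hypothetical helper name.

```python
import math

def partial_r_y2_given_1(ry1, ry2, r12):
    """Partial correlation of Y with X2, holding X1 constant."""
    return (ry2 - ry1 * r12) / math.sqrt((1 - ry1 ** 2) * (1 - r12 ** 2))

# X1 = severity of fire, X2 = number of fire fighters, Y = damage
ry1, ry2, r12 = 0.65, 0.25, 0.70
pr2 = partial_r_y2_given_1(ry1, ry2, r12)

print(f"zero-order r(Y, X2)    = {ry2:+.2f}")
print(f"partial r(Y, X2 | X1)  = {pr2:+.3f}")
```

The zero-order correlation is positive while the partial correlation (about −.38) is negative, which is the sign reversal described above.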

Cooperative Suppression

$R^2$ will be maximally enhanced when the two X’s correlate negatively with one another but positively with Y (or positively with one another but with opposite signs with Y), so that when each X is partialled from the other its remaining variance is more correlated with Y: both predictors’ $\beta$, pr, and sr increase in absolute magnitude (and retain the same sign as $r_{yi}$). Each predictor suppresses variance in the other that is irrelevant to Y.

Consider this contrived example: We seek variables that predict how much the students in an introductory psychology class will learn (Y). Our teachers are all graduate students. X1 is a measure of the graduate student’s level of mastery of general psychology. X2 is a measure of how strongly the students agree with statements such as “This instructor presents simple, easy to understand explanations of the material,” “This instructor uses language that I comprehend with little difficulty,” etc. Suppose that $r_{y1}$ = .30, $r_{y2}$ = .25, and $r_{12}$ = −.35.

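$$\beta_1 = \frac{.30 - .25(-.35)}{1 - .35^2} = .442, \qquad sr_1 = \frac{.30 - .25(-.35)}{\sqrt{1 - .35^2}} = .414.$$

$$\beta_2 = \frac{.25 - .30(-.35)}{1 - .35^2} = .405, \qquad sr_2 = \frac{.25 - .30(-.35)}{\sqrt{1 - .35^2}} = .379.$$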

$R^2_{y.12} = \sum r_{yi}\beta_i = .30(.442) + .25(.405) = .234$. Note that the sum of the squared semipartials, $.414^2 + .379^2 = .171 + .144 = .315$, exceeds the squared multiple correlation coefficient, .234.

Again, each predictor is more useful in the context of the other predictor than all by itself: $sr_1^2$ = .171 > $r^2_{y1}$ = .09 and $sr_2^2$ = .144 > $r^2_{y2}$ = .0625.
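A minimal Python check of the cooperative suppression numbers, assuming $r_{12}$ = −.35 (the value that reproduces the .442, .405, .414, and .379 reported for this example) and the standard two-predictor formulas. `trivariate` is a hypothetical helper.

```python
import math

def trivariate(ry1, ry2, r12):
    """Beta weights, semipartials, and R^2 for two standardized predictors."""
    denom = 1 - r12 ** 2
    beta1 = (ry1 - ry2 * r12) / denom
    beta2 = (ry2 - ry1 * r12) / denom
    sr1 = (ry1 - ry2 * r12) / math.sqrt(denom)
    sr2 = (ry2 - ry1 * r12) / math.sqrt(denom)
    r2 = ry1 * beta1 + ry2 * beta2  # R^2_y.12
    return beta1, beta2, sr1, sr2, r2

# Teaching example: X1 = instructor mastery, X2 = rated clarity
ry1, ry2, r12 = 0.30, 0.25, -0.35
beta1, beta2, sr1, sr2, r2 = trivariate(ry1, ry2, r12)

print(f"beta1 = {beta1:.3f}, sr1 = {sr1:.3f}")
print(f"beta2 = {beta2:.3f}, sr2 = {sr2:.3f}")
print(f"R^2 = {r2:.3f}, sum of sr^2 = {sr1**2 + sr2**2:.3f}")
```

Both β’s exceed their zero-order r’s while keeping the same sign, the signature of cooperative suppression.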


Summary

When $\beta_i$ falls outside the range from 0 to $r_{yi}$, suppression is taking place. This is Cohen & Cohen’s definition of suppression. As noted above, Velicer defined suppression in terms of a predictor having a squared semipartial correlation coefficient that is greater than its squared zero-order correlation with the criterion variable.

If one $r_{yi}$ is zero or close to zero, it is classical suppression, and the sign of the $\beta$ for the X with a nearly zero $r_{yi}$ may be opposite the sign of $r_{12}$.

When neither X has $r_{yi}$ close to zero but one has a $\beta$ opposite in sign from its $r_{yi}$ and the other a $\beta$ greater in absolute magnitude but of the same sign as its $r_{yi}$, net suppression is taking place.

If both X’s have $|\beta_i| > |r_{yi}|$, but of the same sign as $r_{yi}$, then cooperative suppression is taking place.

Although unusual, beta weights can even exceed one when cooperative suppression is present.
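The decision rules in this summary can be written as a small classifier. This Python sketch is one possible encoding, not a procedure from Cohen & Cohen: `classify` is a hypothetical name, and its `near_zero` cutoff for an $r_{yi}$ “close to zero” (.05 here) is an assumption, since no numeric threshold is given above. The (.50, .40, .50) case is a made-up redundancy example.

```python
def betas(ry1, ry2, r12):
    """Standardized regression weights for two predictors."""
    denom = 1 - r12 ** 2
    return (ry1 - ry2 * r12) / denom, (ry2 - ry1 * r12) / denom

def within(beta, r):
    """True if beta lies in the closed interval between 0 and r."""
    return min(0.0, r) <= beta <= max(0.0, r)

def classify(ry1, ry2, r12, near_zero=0.05):
    """Label the pattern using the beta-range rules (near_zero is hypothetical)."""
    b = betas(ry1, ry2, r12)
    r = (ry1, ry2)
    if all(within(bi, ri) for bi, ri in zip(b, r)):
        return "no suppression"
    if any(abs(ri) < near_zero for ri in r):
        return "classical suppression"
    if any(bi * ri < 0 for bi, ri in zip(b, r)):
        return "net suppression"
    if all(abs(bi) > abs(ri) for bi, ri in zip(b, r)):
        return "cooperative suppression"
    return "suppression (pattern not covered above)"

examples = [("statistics test", (0.38, 0.0, 0.45)),
            ("fire damage", (0.65, 0.25, 0.70)),
            ("teaching", (0.30, 0.25, -0.35)),
            ("hypothetical redundancy", (0.50, 0.40, 0.50))]
for label, rs in examples:
    print(f"{label}: {classify(*rs)}")
```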

References

Cohen, J., & Cohen, P. (1975). Applied multiple regression/correlation for the behavioral sciences. New York, NY: Wiley. [This handout has drawn heavily from Cohen & Cohen.]

Smith, R. L., Ager, J. W., Jr., & Williams, D. L. (1992). Suppressor variables in multiple regression/correlation. Educational and Psychological Measurement, 52, 17-29. doi:10.1177/001316449205200102

Wuensch, K. L. (2008). Beta weights greater than one! http://core.ecu.edu/psyc/wuenschk/MV/multReg/Suppress-BetaGT1.doc

Example of net suppression and reversal paradox, with data and SAS output.

