Advanced Computer Architecture Homework 1

Homework 1, Nov. 2, 2015

1.

(1) Explain the differences between architecture, implementation, and realization. Explain how each of these relates to processor performance as expressed in Equation (1.1).

A processor's performance is measured in terms of how long it takes to execute a particular program (time/program).

Ans:

Architecture specifies the interface between the hardware and the programmer and affects the number of instructions that need to be executed for a particular program or algorithm. Implementation specifies the microarchitectural organization of the design, and determines the instruction execution rate (measured in instructions per cycle, the reciprocal of cycles per instruction) for that particular design. The realization actually maps the implementation to silicon, and determines the cycle time. The product of the three terms (instruction count, cycles per instruction, and cycle time) gives the execution time, and therefore determines performance.
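Equation (1.1) is not reproduced in this homework. Assuming it has the usual form of the processor performance equation, the mapping described in the answer can be sketched as follows (the underbrace labels are added here for illustration):

```latex
\frac{1}{\text{Performance}}
  = \frac{\text{time}}{\text{program}}
  = \underbrace{\frac{\text{instructions}}{\text{program}}}_{\text{architecture}}
    \times
    \underbrace{\frac{\text{cycles}}{\text{instruction}}}_{\text{implementation}}
    \times
    \underbrace{\frac{\text{time}}{\text{cycle}}}_{\text{realization}}
```

The labels name the level with the most direct influence on each term. In practice the terms are not independent: for example, a deeper pipeline (an implementation choice) also shortens the cycle time.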

(2) As silicon technology evolves, implementation constraints and tradeoffs change, which can affect the placement and definition of the dynamic-static interface (DSI). Explain why architecting a branch delay slot (as in the MIPS architecture) was a reasonable thing to do when that architecture was introduced, but is less attractive today.

Ans:

When the MIPS ISA was created, a single-chip processor could reasonably contain a straightforward pipelined implementation like the MIPS R2000. There was no room on chip for branch predictors or decoupled fetch pipelines that could be used to mask branch latency. At the same time, processors were already fast enough to tolerate some additional complexity in the compilation process for finding independent instructions to place in the delay slot. Hence, a branch delay slot made sense, since it reduced the penalty due to branch instructions without negatively affecting implementation or realization. However, as silicon technology enabled more advanced pipelines, including superscalar fetch, other techniques for masking the branch latency became available and more attractive. Once this had occurred, the branch delay slot became an archaic special case that only complicated implementation and realization. Hence, more recent ISAs like PowerPC and Alpha omitted the branch delay slot.

2.

(1) Using Amdahl's law, compute speedups for a program that is 85% vectorizable for a system with 4, 8, 16, and 32 processors. What would be a reasonable number of processors to build into a system for running such an application?

Ans:

$S_{n,p} = \dfrac{1}{p/n + (1-p)}$, with $p = 0.85$ and $n = 4, 8, 16, 32$:

$S_{4,0.85} = 2.76$, $S_{8,0.85} = 3.90$, $S_{16,0.85} = 4.92$, $S_{32,0.85} = 5.66$

Of these, the 4-processor system achieves the highest parallel efficiency (2.76/4 = 0.69), so that might be a reasonable number.
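The arithmetic above can be checked with a short script. This is a minimal sketch; the function names `amdahl_speedup` and `parallel_efficiency` are chosen here for illustration and do not come from the assignment.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's law: speedup on n processors when a fraction p is vectorizable."""
    return 1.0 / (p / n + (1.0 - p))


def parallel_efficiency(p: float, n: int) -> float:
    """Speedup per processor; 1.0 would mean perfect scaling."""
    return amdahl_speedup(p, n) / n


# Tabulate speedup and efficiency for the 85% vectorizable program.
for n in (4, 8, 16, 32):
    s = amdahl_speedup(0.85, n)
    e = parallel_efficiency(0.85, n)
    print(f"n={n:2d}  speedup={s:.2f}  efficiency={e:.2f}")
```

Efficiency falls as processors are added because the 15% serial portion does not shrink, which is the basis for preferring the smaller configuration.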

(2) Using Amdahl's law, compute speedups for a program that is 98% vectorizable for a system with 16, 64, 256, and 1024 processors. What would be a reasonable number of processors to build into a system for running such an application?

Ans:

$S_{n,p} = \dfrac{1}{p/n + (1-p)}$, with $p = 0.98$ and $n = 16, 64, 256, 1024$:

$S_{16,0.98} = 12.3$, $S_{64,0.98} = 28.3$, $S_{256,0.98} = 42.0$, $S_{1024,0.98} = 47.7$

Of these, the 16-processor system achieves the highest parallel efficiency (12.3/16 = 0.77), so that might be a reasonable number. However, the 256-processor system still gains substantial speedup, fairly close to the ideal case of 50 (the limit as the number of processors n goes to infinity).
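The ideal value of 50 follows from letting the processor count grow without bound in Amdahl's law. A short worked derivation, together with the efficiencies implied by the speedups above:

```latex
\lim_{n \to \infty} S_{n,p}
  = \lim_{n \to \infty} \frac{1}{p/n + (1-p)}
  = \frac{1}{1-p}
  = \frac{1}{0.02}
  = 50

\frac{S_{16,0.98}}{16} \approx 0.77, \qquad
\frac{S_{64,0.98}}{64} \approx 0.44, \qquad
\frac{S_{256,0.98}}{256} \approx 0.16, \qquad
\frac{S_{1024,0.98}}{1024} \approx 0.047
```

The choice is therefore a tradeoff: 256 processors reach about 84% of the limiting speedup (42.0/50), but at roughly 16% efficiency.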

3.

(1) Consider adding a load-update instruction with register + immediate and postupdate addressing mode. In this addressing mode, the effective address for the load is computed as register + immediate, and the resulting address is written back into the base register. That is, lwu r3,8(r4) performs r3 ← MEM[r4+8]; r4 ← r4+8. Describe the additional pipeline resources needed to support such an instruction in the TYP pipeline.

Ans:

This instruction performs two register writes. It can either be underpipelined, by forcing the second write to stall the pipeline, or, to maintain a fully pipelined implementation, a second write port must be added to the register file. In addition, the hazard detection and bypass network must be augmented to handle this special case of a second register write.
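To illustrate why the hazard detection logic changes, here is a minimal sketch of a read-after-write check in which an instruction may have more than one destination register. The `Instr` class and `raw_hazards` function are hypothetical names used only for this illustration; they model the register comparison, not the TYP pipeline's timing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    """Toy instruction record: the registers it writes and the registers it reads."""
    name: str
    dests: tuple = ()
    srcs: tuple = ()


def raw_hazards(producer: Instr, consumer: Instr) -> list:
    """Registers the consumer reads that the producer writes (RAW dependences)."""
    return sorted(set(producer.dests) & set(consumer.srcs))


# The load-update instruction writes both the loaded value (r3)
# and the updated base register (r4).
lwu = Instr("lwu r3,8(r4)", dests=("r3", "r4"), srcs=("r4",))
add = Instr("add r5,r3,r4", dests=("r5",), srcs=("r3", "r4"))

print(raw_hazards(lwu, add))   # both r3 and r4 must be checked
print(len(lwu.dests))          # register writes this instruction needs
```

With a single-destination instruction set, each source comparison involves one destination per in-flight instruction. Here every comparator and bypass path has to consider a second destination as well.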

(2) Given the change outlined in Problem (1), redraw the pipeline interlock hardware shown in Figure 2.20 to correctly handle the load-update instruction.

Ans:

The figure should be modified to include the changes described above.

4.

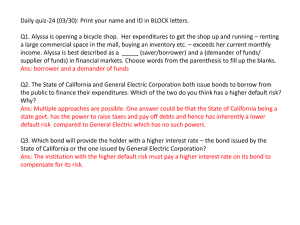

(1) The IBM study of pipelined processor performance assumed an instruction mix based on popular C programs in use in the 1980s. Since then, object-oriented languages like C++ and Java have become much more common. One of the effects of these languages is that object inheritance and polymorphism can be used to replace conditional branches with virtual function calls. Given the IBM instruction mix and CPI shown in the following table, perform the following transformations to reflect the use of C++ and Java, and recompute the overall CPI and speedup or slowdown due to this change:

• Replace 50% of taken conditional branches with a load instruction followed by a jump register instruction (the load and jump register implement a virtual function call).

• Replace 25% of not-taken branches with a load instruction followed by a jump register instruction.

Ans:
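The table values are not reproduced here, so no numeric answer is given. The method, however, can be sketched as below. Everything in `PLACEHOLDER_MIX` and `PLACEHOLDER_CPI` is a hypothetical stand-in, not the IBM data, and the function name is chosen for illustration. The sketch assumes each replaced branch becomes two instructions (one load plus one jump register), so the dynamic instruction count grows.

```python
def apply_virtual_calls(mix, cpi, frac_taken=0.50, frac_not_taken=0.25):
    """Replace a share of conditional branches with load + jump-register pairs.

    mix: instruction class -> fraction of the ORIGINAL dynamic instruction count
    cpi: instruction class -> cycles per instruction
    Returns (new_cpi, speedup), where speedup = old_cycles / new_cycles.
    """
    moved_taken = frac_taken * mix["branch_taken"]
    moved_not_taken = frac_not_taken * mix["branch_not_taken"]
    replaced = moved_taken + moved_not_taken

    new_mix = dict(mix)
    new_mix["branch_taken"] -= moved_taken
    new_mix["branch_not_taken"] -= moved_not_taken
    new_mix["load"] += replaced                      # one load per replaced branch
    new_mix["jump_register"] = new_mix.get("jump_register", 0.0) + replaced

    old_cycles = sum(mix[k] * cpi[k] for k in mix)
    new_cycles = sum(new_mix[k] * cpi[k] for k in new_mix)
    new_count = sum(new_mix.values())                # > 1.0: more instructions now
    return new_cycles / new_count, old_cycles / new_cycles


# HYPOTHETICAL placeholder values; substitute the numbers from the table.
PLACEHOLDER_MIX = {"alu": 0.5, "load": 0.2, "store": 0.1,
                   "branch_taken": 0.1, "branch_not_taken": 0.1,
                   "jump_register": 0.0}
PLACEHOLDER_CPI = {"alu": 1, "load": 2, "store": 2,
                   "branch_taken": 3, "branch_not_taken": 2,
                   "jump_register": 3}

new_cpi, speedup = apply_virtual_calls(PLACEHOLDER_MIX, PLACEHOLDER_CPI)
print(f"new CPI = {new_cpi:.3f}, speedup = {speedup:.3f}")
```

A speedup below 1 indicates a slowdown. Because the instruction count changes, the speedup is computed from total cycles rather than from the ratio of the two CPI values.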

5.

(1) Generate a pipelined implementation of the simple processor outlined in the figure that minimizes internal fragmentation. Each subblock in the diagram is a primitive unit that cannot be further partitioned into smaller ones. The original functionality must be maintained in the pipelined implementation. Show the diagram of your pipelined implementation. Pipeline registers have the following timing requirements:

• 0.5-ns setup time

• 1-ns delay time (from clock to output)

Ans:

(2) Compute the latencies (in nanoseconds) of the instruction cycle of the nonpipelined and the pipelined implementations.

Ans:

Non-pipelined: PC delay (1 ns) + ICache (6 ns) + Itype Decode (3.5 ns) + Src. Decode (2.5 ns) + Reg. Read (4 ns) + Mux (1 ns) + ALU (6 ns) + Reg. Write (4 ns) = 28 ns, or

Non-pipelined: Add (2 ns) + PC setup (0.5 ns) + PC delay (1 ns) + ICache (6 ns) + Itype Decode (3.5 ns) + Src. Decode (2.5 ns) + Reg. Read (4 ns) + Mux (1 ns) + ALU (6 ns) + Reg. Write (4 ns) = 30.5 ns.

Pipelined: 5 stages * 7.5ns cycle time = 37.5ns
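The sums above can be reproduced with a few lines of code. This is a minimal sketch that uses only the delays quoted in the answer; the variable names are chosen here for illustration.

```python
# Delays (ns) of the blocks on the instruction path, as listed in the answer.
path_delays_ns = {
    "PC delay": 1.0,
    "ICache": 6.0,
    "Itype Decode": 3.5,
    "Src. Decode": 2.5,
    "Reg. Read": 4.0,
    "Mux": 1.0,
    "ALU": 6.0,
    "Reg. Write": 4.0,
}

# Extra delays when the PC update (adder plus PC setup) is counted as well.
pc_update_ns = {"Add": 2.0, "PC setup": 0.5}

nonpipelined_ns = sum(path_delays_ns.values())
with_pc_update_ns = nonpipelined_ns + sum(pc_update_ns.values())

# Pipelined instruction latency: every stage takes one full machine cycle.
stages = 5
cycle_ns = 7.5
pipelined_ns = stages * cycle_ns

print(nonpipelined_ns, with_pc_update_ns, pipelined_ns)
```

Pipelining lengthens the latency of a single instruction, since each stage is padded to the full cycle time and pays the pipeline register overhead. The benefit shows up in throughput instead.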

(3) Compute the machine cycle times (in nanoseconds) of the nonpipelined and the pipelined implementations.

Ans:

Non-pipelined: machine cycle = instruction cycle = 28ns.

Pipelined cycle time: 7.5ns

(4) Compute the (potential) speedup of the pipelined implementation in Problems (1)-(3) over the original nonpipelined implementation.

Ans:

Potential speedup is not 5x, because of additional overhead from the pipeline registers. The nonpipelined solution finishes an instruction once every 28 ns. The pipelined solution finishes an instruction once every 7.5 ns. The speedup is 28/7.5 = 3.73.
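As a rough check on where the shortfall from 5x comes from, the sketch below compares the achieved speedup with the stage count. It assumes the 7.5 ns cycle is a 6 ns primitive block (the ICache or the ALU) plus the 0.5 ns setup and 1 ns clock-to-output delay of a pipeline register; that breakdown is an inference from the numbers given, not something stated in the answer.

```python
nonpipelined_ns = 28.0           # one instruction per 28 ns without pipelining
stages = 5

longest_block_ns = 6.0           # assumed critical block (ICache or ALU)
register_overhead_ns = 0.5 + 1.0 # setup time + clock-to-output delay
cycle_ns = longest_block_ns + register_overhead_ns

speedup = nonpipelined_ns / cycle_ns
fraction_of_ideal = speedup / stages

print(f"cycle = {cycle_ns} ns, speedup = {speedup:.2f}, "
      f"fraction of ideal = {fraction_of_ideal:.2f}")
```

Under this assumption, 1.5 ns of every 7.5 ns cycle is register overhead, and stages shorter than the critical one leave some of the cycle unused (internal fragmentation). Both effects keep the speedup below the stage count.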