
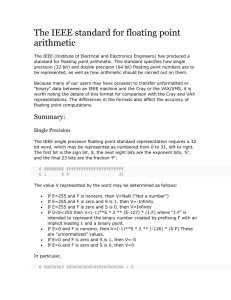

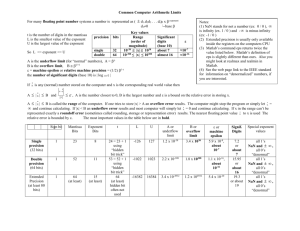

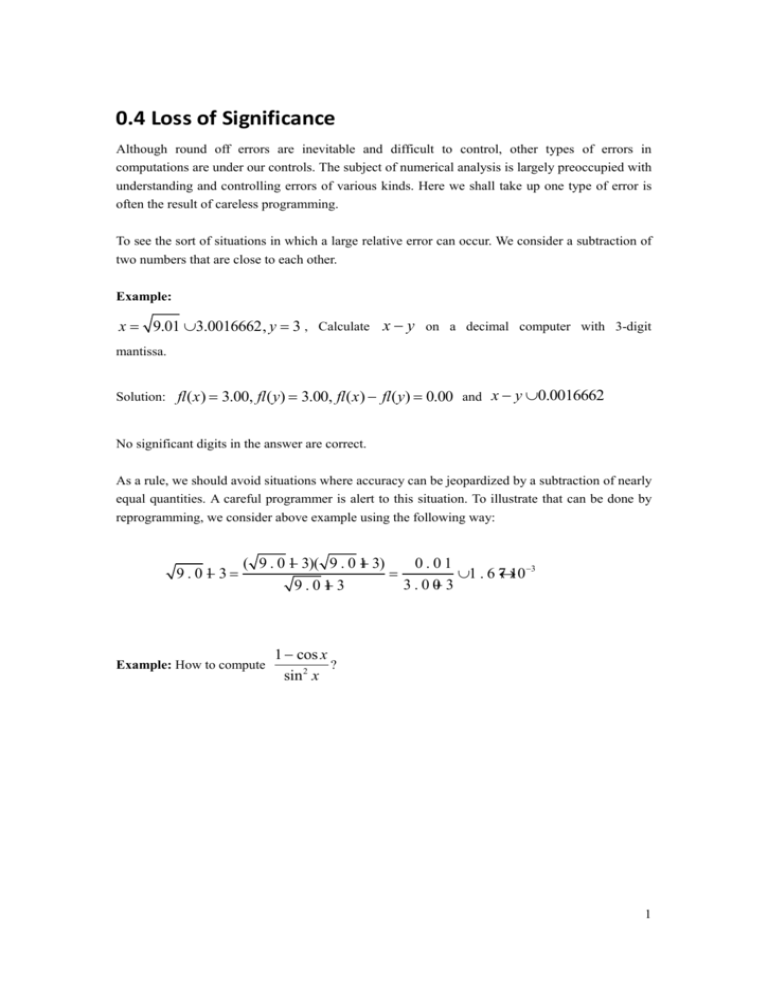

0.4 Loss of Significance

Although round-off errors are inevitable and difficult to control, other types of errors in computations are under our control. The subject of numerical analysis is largely preoccupied with understanding and controlling errors of various kinds. Here we take up one type of error that is often the result of careless programming.

To see the sort of situation in which a large relative error can occur, consider the subtraction of two numbers that are close to each other.

Example: Let $x = \sqrt{9.01} \approx 3.0016662$ and $y = 3$. Calculate $x - y$ on a decimal computer with a 3-digit mantissa.

Solution: $fl(x) = 3.00$ and $fl(y) = 3.00$, so $fl(x) - fl(y) = 0.00$, whereas the true value is $x - y \approx 0.0016662$. None of the significant digits in the computed answer is correct.

As a rule, we should avoid situations where accuracy can be jeopardized by the subtraction of nearly equal quantities. A careful programmer is alert to this situation. To illustrate what can be done by reprogramming, we redo the example above as follows:

$$\sqrt{9.01} - 3 = \frac{(\sqrt{9.01} - 3)(\sqrt{9.01} + 3)}{\sqrt{9.01} + 3} = \frac{0.01}{\sqrt{9.01} + 3} \approx \frac{0.01}{3.00 + 3} \approx 1.67 \times 10^{-3}$$

This is the true value $0.0016662$ correctly rounded to three significant digits.

Example: How should $1 - \cos x$ be computed when $x$ is close to 0? There $\cos x \approx 1$, so the direct subtraction loses significance. Multiplying and dividing by $1 + \cos x$ gives

$$1 - \cos x = \frac{\sin^2 x}{1 + \cos x},$$

which involves no subtraction of nearly equal quantities.

Further Reading: Theorem on Loss of Precision

An interesting question is: exactly how many significant binary bits are lost in the subtraction $x - y$ when $x$ is close to $y$? The precise answer depends on the particular values of $x$ and $y$. However, we can obtain bounds in terms of the quantity $|1 - y/x|$, which is a convenient measure of the closeness of $x$ and $y$. The following theorem contains useful upper and lower bounds.

Theorem (Loss of Precision). If $x$ and $y$ are positive normalized floating-point binary machine numbers such that $x > y$ and

$$2^{-q} \le 1 - \frac{y}{x} \le 2^{-p}, \qquad q \ge p,$$

then at most $q$ and at least $p$ significant binary bits are lost in the subtraction $x - y$.

Proof. We shall prove the lower bound and leave the upper bound as an exercise.
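The normalized binary floating-point forms of $x$ and $y$ are

$$x = r \times 2^{n} \quad (1 \le r < 2), \qquad y = s \times 2^{m} \quad (1 \le s < 2).$$

Since $x$ is larger than $y$, the computer may have to shift $y$ so that it has the same exponent as $x$ before subtracting $y$ from $x$. Hence we write $y$ as

$$y = (s \times 2^{m-n}) \times 2^{n},$$

and then

$$x - y = (r - s \times 2^{m-n}) \times 2^{n}.$$

The mantissa of this result satisfies

$$r - s \times 2^{m-n} = r\left(1 - \frac{s \times 2^{m}}{r \times 2^{n}}\right) = r\left(1 - \frac{y}{x}\right) < 2 \times 2^{-p} = 2^{1-p}.$$

Since this mantissa is less than $2^{1-p}$, normalizing the computer representation of $x - y$ requires a shift of at least $p$ bits to the left to bring the mantissa back into $[1, 2)$. Then at least $p$ spurious zeros are attached to the right end of the mantissa, which means that at least $p$ bits of precision have been lost. A similar argument, using $r \ge 1$ and $1 - y/x \ge 2^{-q}$, shows that at most $q$ bits of precision are lost.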
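The theorem can be checked numerically on IEEE-754 double-precision numbers, which are positive normalized binary machine numbers in the sense used above. The Python sketch below is illustrative only: the helper names `closeness_bounds` and `bits_lost` are hypothetical, and it assumes, as in the proof, that the number of bits lost equals the left shift needed to renormalize $x - y$. It finds the tightest integers $p$ and $q$ exactly with `fractions.Fraction` and measures the shift by comparing the exponents returned by `math.frexp`.

```python
import math
from fractions import Fraction


def closeness_bounds(x: float, y: float) -> tuple[int, int]:
    """Tightest integers p <= q with 2**-q <= 1 - y/x <= 2**-p.

    Uses exact rational arithmetic, so evaluating 1 - y/x does not
    itself suffer the loss of significance being measured."""
    if not (x > y > 0):
        raise ValueError("the theorem assumes x > y > 0")
    t = 1 - Fraction(y) / Fraction(x)  # exact; 0 < t < 1
    p = 0
    while t <= Fraction(1, 2 ** (p + 1)):  # largest p with t <= 2**-p
        p += 1
    q = p
    while Fraction(1, 2 ** q) > t:  # smallest q with 2**-q <= t
        q += 1
    return p, q


def bits_lost(x: float, y: float) -> int:
    """Hypothetical helper: left shift needed to renormalize x - y,
    i.e. the exponent of x minus the exponent of x - y. math.frexp uses
    mantissas in [0.5, 1) rather than [1, 2); the offset cancels."""
    d = x - y  # exact (Sterbenz's lemma) whenever y >= x / 2
    _, ex = math.frexp(x)
    _, ed = math.frexp(d)
    return ex - ed


for x, y in [(1.0, 0.999), (math.sqrt(9.01), 3.0), (1.0, 1.0 - 2.0 ** -40)]:
    p, q = closeness_bounds(x, y)
    k = bits_lost(x, y)
    print(f"x={x!r}, y={y!r}: p={p}, bits lost={k}, q={q}")
```

For $x = 1$ and $y = 1 - 2^{-40}$ the closeness $1 - y/x$ is an exact power of two, so $p = q$ and the theorem pins down the loss exactly.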
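The two examples at the start of this section can be reproduced in a short Python sketch. It assumes that Python's `decimal` module with precision 3 is an adequate stand-in for the 3-digit decimal computer (its default rounding is round-half-even), and it uses ordinary double-precision floats for $1 - \cos x$. The function names are illustrative, not taken from any library.

```python
import math
from decimal import Decimal, getcontext

# Emulate a decimal computer with a 3-digit mantissa: every arithmetic
# result is rounded to 3 significant digits (round-half-even assumed).
getcontext().prec = 3


def naive_diff(a: str) -> Decimal:
    """sqrt(a) - 3 evaluated directly: the two terms nearly cancel."""
    return Decimal(a).sqrt() - 3


def rationalized_diff(a: str) -> Decimal:
    """The same quantity rewritten as (a - 9) / (sqrt(a) + 3)."""
    return (Decimal(a) - 9) / (Decimal(a).sqrt() + 3)


def one_minus_cos_naive(x: float) -> float:
    """Direct formula; cancels badly when x is near 0."""
    return 1.0 - math.cos(x)


def one_minus_cos_stable(x: float) -> float:
    """Uses 1 - cos x = sin^2 x / (1 + cos x): no close subtraction."""
    s = math.sin(x)
    return s * s / (1.0 + math.cos(x))


print(naive_diff("9.01"), rationalized_diff("9.01"))  # 0.00 0.00167
x = 1e-8
print(one_minus_cos_naive(x), one_minus_cos_stable(x))
```

For $x = 10^{-8}$, $\cos x$ typically rounds to exactly 1 in double precision, so the direct form returns 0, while the rewritten form stays close to the true value $x^2/2 = 5 \times 10^{-17}$.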