Data Loading

SQL Server Technical Article

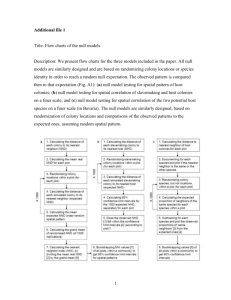

Summary: The APS architecture provides Massively Parallel Processing (MPP) scalability that can overcome many of the performance limitations generally associated with loading data into a traditional SQL Server database. This whitepaper examines a number of concepts and considerations relevant to loading data into the Analytics Platform System (APS) appliance for optimal performance.

Writer: Andy Isley, Solution Architect

Technical Reviewer: Charles Feddersen, Mary Long, Brian Mitchell

Published: June 2015

Applies to: Analytics Platform System

Copyright

This document is provided “as-is”. Information and views expressed in this document, including URL and other Internet Web site references, may change without notice. You bear the risk of using it.

Some examples depicted herein are provided for illustration only and are fictitious. No real association or connection is intended or should be inferred.

This document does not provide you with any legal rights to any intellectual property in any Microsoft product. You may copy and use this document for your internal, reference purposes.

© 2014 Microsoft. All rights reserved.


Introduction

In this whitepaper, we present a number of concepts and considerations relevant to loading data into the Analytics Platform System (APS) appliance. The APS architecture provides Massively Parallel Processing (MPP) scalability that can overcome many of the performance limitations generally associated with loading data into a traditional SQL Server database.

All of the tests described in this paper were performed on a single rack appliance running APS AU3 RTM (v10.0.3181.0) of PDW. No assumption should be made that the results are accurate for, or reflect the performance of, any previous or future version of PDW, or any smaller or larger compute rack configuration.

Objectives

Explain key concepts relating to the Data Movement Service

Provide examples to demonstrate load performance and how it is affected by database design

Demonstrate different causes of bottlenecks in the load process

Discuss the various load methods (SSIS/DwLoader) and load rates based on each method

Discuss load rates based on table geometry and index strategy.

Data Loading

Importance of Load Rate

The rate at which the Parallel Data Warehouse (PDW) Region of an APS system can load data is generally one of the most important questions asked by a potential customer, either during a Proof of Concept (POC) or during an implementation. It is a simple and tangible metric that customers can use to make comparisons against their existing implementations or against competing vendors they may also be evaluating. Given the performance limitations of loading data into a single table in a traditional Symmetric Multiprocessing (SMP) database, via either BCP or SSIS, it is important that the load results for an APS demonstrate multiple-factor gains over the existing solution.

PDW Table Architecture

A database in PDW implements two different types of tables to achieve MPP scalability across multiple physical servers. These types are called Replicated and Distributed. In the case of a replicated table, an entire copy of the table exists on each physical compute node, so there is always a 1:1 relationship between compute nodes and the physical instances of a replicated table.

Distributed tables are represented as 8 physical tables on each compute node. A single rack appliance contains 8 - 9 compute nodes depending on the manufacturer of the APS. Therefore 64 - 72 physical tables are created across these nodes for each distributed table. Data is distributed amongst these physical tables by applying a multi-byte hashing algorithm to a single column of data in that distributed table. All 8 physical tables on a compute node are stored in a single database within the named instance of that compute node. The following diagram explains the physical table layout for a distributed table.

[Figure: physical table layout for the logical table lineitem, showing distributions 1 - 80 spread across Compute Nodes 1 - 10]

Load speed will vary depending on the type of distribution used. This will be covered in more detail later in the document.

The Data Movement Service

The APS appliance implements the Data Movement Service (DMS) for transporting data between compute nodes during load and query operations. Whilst this paper will focus primarily on the loading of data into the PDW, some of the information regarding DMS buffer management is also applicable to optimizing the query process.

The DMS uses two different buffer sizes when loading data into the appliance. When moving data between the Loading Server and the Compute nodes, a 256 KB buffer is used to move the data. At this stage of the load the data is still raw and unparsed. Once the data is received by each Compute Node, converted to the applicable data type and the hash applied to the distribution key column, the DMS then uses 32 KB buffers (32768 bytes) to transfer data between the Compute Nodes (shuffle) to complete the load process. The details below will focus on the 32 KB buffers as these are affected by destination DDL whereas the 256 KB buffer will not be.


Each 32 KB buffer is also transmitted with additional header information; the header does not form part of the 32768 bytes permitted for data use. (The 256 KB buffer header holds only the first, second and fifth items from the list below.) Whilst a detailed look at the buffer header is beyond the scope of this document, the following bullets summarize the data stored in the header.

The Command Type. This is either a WriteCommand (0x02) or a CloseCommand (0x03). Every buffer except the last in a load contains the WriteCommand. Only the final buffer in a load contains the CloseCommand. The final buffer also contains no data.

The PlanId. This is a unique identifier that identifies the DMS plan that is associated to the buffer

The Distribution. An integer that defines the target distribution for a buffer

The SourceStepIndex.

The SizeOfData. This represents the actual size of data stored in the buffer in bytes

Within each 32 KB buffer the DMS effectively creates fixed width columns to store the data from the source file. This is a very important concept to understand when optimizing the load performance during a POC or implementation. The width specified for each column is determined by the ODBC C data structures that support each SQL type. More information on these data types can be found in the ODBC documentation under C Data Types.

The byte size of each data type supported in PDW generally aligns with the max_length defined in the SQL Server sys.types catalog view; however, there are some exceptions. Examples of this are provided later in this document. A simple example of the fixed width columns can be made using a varchar(100) data type in the destination table. The following diagram shows how 3 strings of different lengths are stored in the DMS buffer.

[Figure: the strings "Microsoft", "Parallel Data Warehouse" and "PDW" each occupy a 101 byte slot in the DMS buffer, for a total of 3 x 101 = 303 bytes. Actual bytes from source: 9 + 23 + 3 + (3) = 38]

This example is quite extreme to demonstrate unused space in the buffer. You will notice that the column is described as having 101 bytes of data, even though the column DDL definition was for 100 bytes. This is because an extra byte is required in the C language to represent the end of string null terminator. The rule for this extra data applies only to char, varchar, nchar and nvarchar data types. In the case of nchar or nvarchar, an extra 2 bytes is required for this character as they store double byte data.
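The arithmetic in the diagram can be reproduced with a short Python sketch. The function name `string_slot_usage` and its parameters are illustrative only and are not part of any PDW tooling; the sketch assumes exactly the sizing described above (the full declared width plus one terminator character per value) and ignores the 8 byte indicator discussed next.

```python
def string_slot_usage(declared_length, values, wide=False):
    """Return (slot_bytes, reserved_bytes, actual_bytes) for a string column.

    declared_length: n from char(n)/varchar(n), or nchar(n)/nvarchar(n) when wide=True.
    Assumption (from the text): each value reserves the full declared width
    plus one terminator character, regardless of the real string length.
    """
    char_size = 2 if wide else 1                      # nchar/nvarchar store 2 bytes per character
    slot = (declared_length + 1) * char_size          # fixed slot reserved for every value
    reserved = slot * len(values)                     # bytes consumed in the buffer
    actual = sum((len(v) + 1) * char_size for v in values)  # real data plus terminators
    return slot, reserved, actual


slot, reserved, actual = string_slot_usage(
    100, ["Microsoft", "Parallel Data Warehouse", "PDW"]
)
print(slot, reserved, actual)  # 101 303 38
```

Only 38 of the 303 reserved bytes carry data from the source file, which is why the declared width of a string column matters far more than the length of the values actually loaded.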

In addition to the actual size of the data being stored, there is overhead in the buffer caused by the data type as well as the NULL status of the destination column. If a column in the destination table is allowed to be NULL, or if it is a string data type (char, varchar, nchar, nvarchar), then an 8 byte indicator is added to that column in the buffer. The indicator specifies the length of the variable length column as well as whether the value in the field is NULL. The indicator is added once per column: a column that is both nullable and of a string type still carries a single 8 byte indicator, not 16 bytes. The only way to avoid the 8 byte indicator is to use a fixed width data type (int, bigint, datetime etc.) that is defined as NOT NULL in the destination database table.

The following table summarizes whether or not a column has an 8 byte indicator:

| Column Type | 8 Byte Indicator |
|---|---|
| Fixed Width Data Type NOT NULL | No |
| Fixed Width Data Type NULL | Yes (8 Bytes) |
| Variable Width Data Type NULL / NOT NULL | Yes (8 Bytes) |

As a result of this behavior, a subtle change to table DDL may have a significant impact on how efficiently rows from a source file are packed into each buffer during a load. The same effect would occur when performing a CTAS into a new table where an existing string data type is CAST to an unnecessarily large size. Here are two examples of similar table DDL with their corresponding data sizing.

    create table Example1 (
        id int not null
        , name char(10) not null
        , code smallint not null
        , address varchar(8) not null)

| Column | Indicator Bytes | Data Bytes | Total |
|---|---|---|---|
| id | 0 | 4 | 4 |
| name | 0 | 10 + 1 | 11 |
| code | 0 | 2 | 2 |
| address | 8 | 8 + 1 | 17 |

Total: 34

    create table Example2 (
        id bigint null
        , name char(10) null
        , code char(5) not null
        , address varchar(8) null)

| Column | Indicator Bytes | Data Bytes | Total |
|---|---|---|---|
| id | 8 | 8 | 16 |
| name | 8 | 10 + 1 | 19 |
| code | 8 | 5 + 1 | 14 |
| address | 8 | 8 + 1 | 17 |

Total: 66

This example demonstrates that 2 variations of the same table, either of which could have been used to store the same data, have a significantly different effect on the number of rows per buffer in the DMS.

The best data loading efficiency is achieved by loading as many rows into each buffer as possible. It is common for a customer to supply DDL with excessive data types as part of a POC scope. Generally these are oversized string types, but it may also be the case that BIGINT is applied where a smaller numeric type would be more suitable.

Calculating the number of rows in each buffer is important for understanding the efficiency of the load process. As mentioned above the data sizing for each field used in the buffer is based on the ODBC C data type definitions. The following query can be used to identify the number of rows that can be packed into a DMS buffer when loading a file to the database. The same query can be used to identify buffer utilization for data movements during queries. As the data type size does not completely align with the sys.types max_length field for types, some adjustments have been made to match the sizes with the ODBC C data types used.

    select
        CAST(32768. / SUM(CASE
                WHEN NullValue = 1 OR VariableLength = 1 THEN 8
                ELSE 0
            END + [DataLength]) AS INT) AS RowsPerBuffer
        ,SUM(CASE
                WHEN NullValue = 1 OR VariableLength = 1 THEN 8
                ELSE 0
            END + [DataLength]) AS RowSize
        ,32768. % SUM(CASE
                WHEN NullValue = 1 OR VariableLength = 1 THEN 8
                ELSE 0
            END + [DataLength]) AS BufferFreeBytes
        ,CAST(((32768. % SUM(CASE
                WHEN NullValue = 1 OR VariableLength = 1 THEN 8
                ELSE 0
            END + [DataLength])) / 32768) * 100 AS DECIMAL(8,5)) AS [BufferFreePercent]
    from (
        select
            c.name
            ,c.is_nullable AS [NullValue]
            ,CASE
                WHEN ty.name IN ('char','varchar','nchar','nvarchar') THEN 1
                ELSE 0
            END AS [VariableLength]
            ,CASE
                WHEN ty.name IN('char','varchar') THEN c.max_length + 1
                WHEN ty.name IN('nchar','nvarchar') THEN c.max_length + 2
                WHEN ty.name IN('binary','varbinary') THEN c.max_length
                ELSE ty.max_length
            END AS [DataLength]
        from sys.tables t
        inner join sys.columns c on t.object_id = c.object_id
        inner join (
            select
                name
                ,system_type_id
                ,user_type_id
                ,CASE
                    WHEN name = 'time' THEN 12
                    WHEN name in ('date','datetime','datetime2','datetimeoffset') then max_length * 2
                    WHEN name = 'smalldatetime' then 16
                    WHEN name = 'decimal' then 19
                    ELSE max_length
                END as max_length
            from sys.types t) ty
            on c.system_type_id = ty.system_type_id
            and c.user_type_id = ty.user_type_id
        where t.name = 'si') z
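For readers who want to check a candidate DDL before creating it on the appliance, the same sizing rules can be sketched offline in Python. This is only an approximation of the query above under stated assumptions: the `FIXED_WIDTH` table covers just a handful of types, its widths are taken from the adjustments in the query, and the helper names are hypothetical.

```python
BUFFER_BYTES = 32768  # data bytes available in one 32 KB DMS buffer

# Buffer width of some fixed width types, following the adjustments in the query
# (for example date = 3 * 2, datetime = 8 * 2). Assumption: list is not exhaustive.
FIXED_WIDTH = {
    "tinyint": 1, "smallint": 2, "int": 4, "bigint": 8,
    "real": 4, "float": 8, "date": 6, "datetime": 16,
    "smalldatetime": 16, "time": 12, "decimal": 19,
}
# Bytes per character for the string types that always carry the 8 byte indicator.
STRING_TYPES = {"char": 1, "varchar": 1, "nchar": 2, "nvarchar": 2}


def column_bytes(type_name, length=None, nullable=False):
    """Bytes one column occupies in the buffer: indicator plus fixed data width."""
    if type_name in STRING_TYPES:
        data = (length + 1) * STRING_TYPES[type_name]  # declared width + terminator
        indicator = 8                                  # string types always get it
    else:
        data = FIXED_WIDTH[type_name]
        indicator = 8 if nullable else 0               # fixed width: only when nullable
    return indicator + data


def rows_per_buffer(columns):
    """Return (row_size, rows_per_buffer, free_bytes) for (type, length, nullable) tuples."""
    row_size = sum(column_bytes(*col) for col in columns)
    rows = BUFFER_BYTES // row_size
    return row_size, rows, BUFFER_BYTES - rows * row_size


example2 = [
    ("bigint", None, True),   # id bigint null
    ("char", 10, True),       # name char(10) null
    ("char", 5, False),       # code char(5) not null
    ("varchar", 8, True),     # address varchar(8) null
]
print(rows_per_buffer(example2))  # (66, 496, 32)
```

The 66 byte row size agrees with the Example2 sizing table earlier in this section.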

To emphasize the impact that oversize DDL will have on the load performance, 2 loads of the line item table were run with the same source file. The first load was based on the standard DDL for the line item table, and the second was into altered DDL where the l_comment column type was set to varchar(8000). The DDL for each of these tables is as follows.

CREATE TABLE [TPC_H].[dbo].[lineitem_h]

(

[l_orderkey] BIGINT NOT NULL,

[l_partkey] BIGINT NOT NULL,

[l_suppkey] BIGINT NOT NULL,

[l_linenumber] BIGINT NOT NULL,

[l_quantity] FLOAT NOT NULL,

[l_extendedprice] FLOAT NOT NULL,

[l_discount] FLOAT NOT NULL,

[l_tax] FLOAT NOT NULL,

[l_returnflag] CHAR(1) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_linestatus] CHAR(1) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_shipdate] DATE NOT NULL,

[l_commitdate] DATE NOT NULL,

[l_receiptdate] DATE NOT NULL,

[l_shipinstruct] CHAR(25) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_shipmode] CHAR(10) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_comment] VARCHAR(44) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL

)

WITH (DISTRIBUTION = HASH ([l_orderkey]));

CREATE TABLE [TPC_H].[dbo].[lineitem_h]

(

[l_orderkey] BIGINT NOT NULL,

[l_partkey] BIGINT NOT NULL,

[l_suppkey] BIGINT NOT NULL,

[l_linenumber] BIGINT NOT NULL,

[l_quantity] FLOAT NOT NULL,

[l_extendedprice] FLOAT NOT NULL,

[l_discount] FLOAT NOT NULL,

[l_tax] FLOAT NOT NULL,

[l_returnflag] CHAR(1) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_linestatus] CHAR(1) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_shipdate] DATE NOT NULL,

[l_commitdate] DATE NOT NULL,

[l_receiptdate] DATE NOT NULL,

[l_shipinstruct] CHAR(25) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_shipmode] CHAR(10) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,

[l_comment] VARCHAR(8000) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL

)

WITH (DISTRIBUTION = HASH ([l_orderkey]));

This might seem an extreme case; however, a customer has in the past supplied DDL for a POC where 3 of the text columns in the fact table were set to varchar(8000) when the maximum length actually required was 30 characters, and additional text columns were set to varchar(500), which was again well in excess of what was required. The load times for these 2 data loads were as follows.

| DDL | Load Time |
|---|---|
| Original DDL | 000:15:21:566 |
| Oversize DDL | 002:08:36:026 |

[Chart: load time in seconds by l_comment column data type: varchar(44) 921, varchar(8000) 7716]
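A rough Python sketch can relate these timings to buffer packing. It assumes the sizing rules used by the row size query earlier in this section apply to the line item table (8 bytes for bigint and float, 6 bytes for date, declared width + 1 terminator + 8 byte indicator for char and varchar); the helper names are illustrative.

```python
BUFFER_BYTES = 32768  # data bytes available in one 32 KB DMS buffer


def string_bytes(n):
    """char(n)/varchar(n): n data bytes + 1 terminator + 8 byte indicator."""
    return n + 1 + 8


# 4 bigint + 4 float columns at 8 bytes each, 3 date columns at 6 bytes each (NOT NULL).
fixed_part = 4 * 8 + 4 * 8 + 3 * 6
# l_returnflag, l_linestatus (char(1)), l_shipinstruct (char(25)), l_shipmode (char(10)).
short_strings = 2 * string_bytes(1) + string_bytes(25) + string_bytes(10)


def row_size(comment_length):
    """Row size in the buffer for a given declared length of l_comment."""
    return fixed_part + short_strings + string_bytes(comment_length)


for declared in (44, 8000):
    size = row_size(declared)
    print(declared, size, BUFFER_BYTES // size)
# 44 208 157
# 8000 8164 4

# Load times from the table above, converted to seconds.
original_seconds = 15 * 60 + 21             # 000:15:21 -> 921
oversize_seconds = 2 * 3600 + 8 * 60 + 36   # 002:08:36 -> 7716
print(round(oversize_seconds / original_seconds, 1))  # 8.4
```

Under these assumptions the oversize DDL packs 4 rows into each buffer instead of 157, while the measured load time grows by a factor of roughly 8.4, so buffer density is a major factor in load time but clearly not the only one.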

Hashing Algorithm

PDW uses a multi-byte hashing algorithm applied to a single column of data in a distributed table to determine the physical table in which to store each row. The hashing process occurs after the data type conversions have been applied on the Compute Node during load. As a result of this process, the same value (for example the number 1) may be stored in a different distribution depending on the SQL type assigned to it. To demonstrate this, the following table lists the distributions that will store the value 1 depending on the data type defined for that column in the table DDL. Note that these are the distributions on a single rack appliance and will not reflect the behavior of smaller or larger rack configurations.

    create table HashExample ( id <COLUMN DATA TYPE> not null ) with (distribution = hash(id))

Data Type tinyint smallint int bigint float decimal(4,2) decimal(5,3) money smallmoney real

49

11

23

56

10

71

50

Distribution

76

63

42

The decimal data type is represented twice in this table with 2 arbitrary precision and scale values. This is to show that the value set for these values in the type definition will have an effect on the distribution location for that value.
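The reason the data type matters can be illustrated with a small Python sketch. It shows that the value 1 has a different byte representation in each type, so any hash computed over bytes can land in a different bucket. Two things here are assumptions made purely for illustration: the little-endian layouts produced by `struct` are not claimed to be the internal PDW representation, and CRC32 is a stand-in hash, not the PDW hashing algorithm.

```python
import struct
import zlib

DISTRIBUTIONS = 80  # illustrative distribution count, not tied to a specific appliance


def toy_distribution(raw_bytes):
    """Map bytes to a bucket 1..DISTRIBUTIONS with a stand-in hash (NOT PDW's)."""
    return zlib.crc32(raw_bytes) % DISTRIBUTIONS + 1


# The same logical value 1, encoded with the width of several SQL types.
encodings = {
    "smallint": struct.pack("<h", 1),   # 2 bytes
    "int": struct.pack("<i", 1),        # 4 bytes
    "bigint": struct.pack("<q", 1),     # 8 bytes
    "real": struct.pack("<f", 1.0),     # 4 byte float
    "float": struct.pack("<d", 1.0),    # 8 byte float
}

for type_name, raw in encodings.items():
    print(f"{type_name:8} {raw.hex():16} -> bucket {toy_distribution(raw)}")
```

Each encoding is a distinct byte string, which is enough for a byte based hash to place the "same" value in different distributions; the actual distribution numbers on an appliance come only from PDW itself.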

There are many variables to consider when selecting a distribution column. A starting point is to select a column that has high cardinality and is often used in joins. In theory this should distribute the data evenly across the appliance, but that is not always the case: a table can be skewed purely because of the values being hashed. Data types also affect how the data is hashed; changing the data type from numeric to INT or BIGINT will produce a different hash result. Loading data into a well-balanced distribution will yield far higher throughput than loading a table with skew (often called a hot spot or unbalanced distribution).

Customers often wonder if it is possible to determine the quality of the distribution in advance before loading the data onto the appliance. Unfortunately, this is not possible as the location of the data is dependent on the size of the appliance.

To get a better feel for how PDW distributes data, the following table shows that even when distribution is based on a series of INT values starting at 1 and incremented by 1, the minimum value stored on each of 80 distributions does not represent the values 1 - 80.

Compute Nodes: Minimum INT value for each distribution

| Dist | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 09 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| A (1) | 132 | 136 | 6 | 41 | 75 | 10 | 156 | 49 | 37 | 71 |
| B (2) | 123 | 131 | 131 | 25 | 21 | 1 | 64 | 336 | 46 | 34 |
| C (3) | 86 | 97 | 97 | 15 | 109 | 52 | 153 | 23 | 3 | 44 |
| D (4) | 8 | 39 | 39 | 28 | 169 | 73 | 69 | 4 | 43 | 158 |
| E (5) | 195 | 77 | 77 | 110 | 325 | 35 | 89 | 130 | 12 | 24 |
| F (6) | 82 | 48 | 7 | 19 | 36 | 40 | 31 | 125 | 113 | 11 |
| G (7) | 92 | 5 | 9 | 29 | 50 | 72 | 407 | 62 | 17 | 159 |
| H (8) | 79 | 53 | 53 | 45 | 26 | 217 | 2 | 14 | 87 | 67 |

You can see from this table that the largest value is 407, or basically 5 times greater than the total number of distributions. Additionally, the NULL status of a column does not affect the outcome of hashing a value. For example, inserting the value 1 into a NULL or NOT NULL column will always result in the same distribution being determined.

From this table, you might conclude that the hash algorithm places the data randomly across the appliance. In fact, the data placement is not random. There are 7 rules that can be used to understand how data is distributed.

1. A distributed table will always contain the same values in the column of a distribution where that column is defined as the distribution key in the CREATE TABLE statement.
    a. This means that, for example, where a table is distributed on the column ID (… DISTRIBUTION = HASH(Id) ... ) the same values in the ID column will always be physically stored in the same distribution.
    b. All rows with the value 23 in the column ID will be stored in the same physical location.
    c. The statement DBCC PDW_SHOWSPACEUSED(<TableName>) is useful for identifying the location a row is added to:

        INSERT INTO DistributedTable VALUES (23)
        INSERT INTO DistributedTable VALUES (23)

       Second row: same value, same location.

2. Different values for the distribution key can exist in the same distribution.
    a. This is different from Rule 1.
    b. Rule 1 stated that all rows with the same distribution key value (for example, 23) would be stored in the same distribution.
    c. Rule 2 means that values other than 23 (used in the example) may exist in the same distribution as value 23.
    d. Example: rows with values 23, 50, and 80 in the distribution key may all be stored in the same distribution.
    e. The following basic test demonstrates this:
        i. Count the unique values in the distribution key column:

            SELECT COUNT(DISTINCT(OrderDateKey)) FROM FactInternetSales

           RESULT: 1,124
        ii. But we know we only have one distribution in this appliance.

3. The hash algorithm is consistent across all tables for the entire appliance based on data type.
    a. This means, for example, that the value 23 of type INT in Table_A will be stored in the same physical location as the value 23 of type INT in Table_B.
    b. Based on the previous example, had a second table been created in a different database and distributed on an INT column (of any name), the value 23 would have been stored in the same location.
    c. The only way that an INT value would be stored in a different location would be when a different size appliance is used (either fewer or more compute nodes).

4. The hash algorithm is based on bytes, not the actual value. The same value stored in a different data type will return a different hash result and be stored in a different location.
    a. This means that distributing a table on column ID of type INT will yield a different result from distributing the same table on column ID of type BIGINT.

5. The value NULL will always be stored on Compute Node 1, Distribution A.
    a. Best practice is to ensure that the distribution key column for a table does not contain any NULL values and is defined as NOT NULL in the table definition statement.

6. The distribution key column is not updateable.
    a. Attempting to alter an existing value in the distribution key column will return an error.
    b. A best practice is to ensure the column chosen for distribution is fixed and not expected to change during load processes.

7. Only a single column can be defined as the distribution key.
    a. This is allowed:

        CREATE TABLE <TableName> WITH (DISTRIBUTION = HASH( Col1 )) AS SELECT Col1, Col2 FROM…

    b. This is not allowed:

        CREATE TABLE <TableName> WITH (DISTRIBUTION = HASH( Col1, Col2 )) AS SELECT Col1, Col2 FROM…

    c. However, if you need to combine columns it can be done as follows:

        CREATE TABLE <TableName> WITH (DISTRIBUTION = HASH( Col3 )) AS SELECT Col1 + Col2 AS Col3 FROM…

Finally, as the hashing algorithm is based on the bytes used to store a data type, the performance of applying the hash to each row is affected by the size of the data type. Hashing a 4 byte integer will resolve faster than hashing a 100 byte varchar column. Therefore, where possible, it is best to distribute data on the smallest data type (by bytes) possible, with one exception. The data type tinyint will not distribute well on a full rack appliance, as it has a very limited number of values that can be stored (0-255). As a general rule, a column should have 10X as many distinct values as the appliance has distributions. In a full appliance a column may not be a good candidate for distribution if the number of distinct values is less than 720 (Dell/Quanta) or 640 (HP).
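The 10X guideline is simple arithmetic and can be sketched in Python as below. The function names are hypothetical, and the node counts (9 and 8) are inferred from the 720 and 640 thresholds quoted above together with the 8 distributions per compute node described earlier.

```python
def min_distinct_values(compute_nodes, distributions_per_node=8, factor=10):
    """Smallest distinct value count suggested by the 10X rule of thumb."""
    return compute_nodes * distributions_per_node * factor


def is_distribution_candidate(distinct_values, compute_nodes):
    """True when a column has at least 10X as many distinct values as distributions."""
    return distinct_values >= min_distinct_values(compute_nodes)


print(min_distinct_values(9))  # 720 (9 compute nodes x 8 distributions x 10)
print(min_distinct_values(8))  # 640 (8 compute nodes x 8 distributions x 10)

# tinyint can hold at most 256 distinct values (0-255), so it falls short in both cases.
print(is_distribution_candidate(256, 8))  # False
```

The rule is only a first filter: a column can pass it and still skew badly if a few values dominate the row count.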

APPEND -m vs. FASTAPPEND -m + CTAS

PDW supports 4 modes of loading data into a database table. The mode chosen is dependent on the type of table being loaded and the circumstances under which the data is being refreshed. The different modes supported are:

APPEND - Automatically stages data prior to finally loading destination table

FASTAPPEND - Directly loads to the destination table

RELOAD - Truncates the destination table and then loads data in the same manner as APPEND

UPSERT - Performs a merge of existing and new data based on a key column

It is important to understand the differences between APPEND and FASTAPPEND. The APPEND mode loads to a staging table in the first step, and the staged rows are then inserted into the final target table in the second step. The second step ensures that any fragmentation that occurred in writing to the staging table is corrected. The FASTAPPEND mode writes the rows directly to the final target table as they become ready, using multiple parallel writers, which causes fragmentation in the final target table for any table other than a CCI table.

This section will focus on 2 approaches for loading data from a file to a final distributed destination table that also has a Clustered Index (CI). Loading into a CI on a fact table adds additional performance overhead for ordering the data. It can also lead to fragmentation where the column values are not always ascending and inserting a record mid table will cause a page split to occur.


Depending on the data being loaded, there may be advantages in using the APPEND mode to automatically handle staging and inserts; however, an alternative is to FASTAPPEND to a heap staging table and then CTAS and partition switch into the destination table to achieve the same goal. The examples provided here do not use partitions but instead recreate the entire table on each load; the principles remain the same. The generic TPC-H line item DDL (below) will be used for all examples in this section.

CREATE TABLE lineitem

( l_orderkey bigint not null,

l_partkey bigint not null,

l_suppkey bigint not null,

l_linenumber bigint not null,

l_quantity float not null,

l_extendedprice float not null,

l_discount float not null,

l_tax float not null,

l_returnflag char(1) not null,

l_linestatus char(1) not null,

l_shipdate date not null,

l_commitdate date not null,

l_receiptdate date not null,

l_shipinstruct char(25) not null,

l_shipmode char(10) not null,

l_comment varchar(44) not null)

WITH

(DISTRIBUTION = HASH (l_orderkey),

CLUSTERED INDEX (l_shipdate))

The APPEND mode was reviewed first for loading data. When PDW loads data using the APPEND mode, the process creates a temp table that matches the geometry of the final destination table. This means that the Clustered Index defined for the destination table is also created for the staging table. The following code shows the index creation statement for the staging table, taken from the Admin Console.

    CREATE CLUSTERED INDEX [Idx_0e4a1b0b3e08424990ef815d246e01a3]
    ON [DB_69c0fcf082cc412298d59a34ae290d02].[dbo].[Table_c56e471888f144458dce7a89f87cf23f_A] ([l_shipdate])
    WITH (DATA_COMPRESSION = PAGE, PAD_INDEX = OFF, SORT_IN_TEMPDB = ON, IGNORE_DUP_KEY = OFF,
          STATISTICS_NORECOMPUTE = OFF, DROP_EXISTING = OFF, ONLINE = ON, ALLOW_ROW_LOCKS = ON,
          ALLOW_PAGE_LOCKS = ON);

The loading times for this approach are as follows:

| Step | Load Time |
|---|---|
| Staging Table Load | 000:23:17:243 |
| Destination Table Load | 000:12:30:123 |
| Overall Time | 000:35:47:726 |

During the APPEND data loading process, the index fragmentation information on Compute Node 1, Distribution A was reviewed to identify the fragmentation in the Clustered Indexes. The page splits / sec perfmon counter was also captured. The screenshot below shows the page split activity on Compute Node 1 of the appliance.

This shows that the database server is consistently incurring approximately 2000 page splits per second. The page split operation in SQL Server, especially at the leaf level of the index, is a relatively expensive process. Fundamentally, this process allocates a new page and then moves half of the existing rows from the split page to the new page. It then writes the new incoming record to either the new or the existing page or, if there is still not enough room, performs a second page split operation, and so on until the new record can be written.
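The effect of key order on page splits can be illustrated with a deliberately simplified Python model. It is a toy and not a description of the SQL Server storage engine: pages hold a fixed number of keys (`page_capacity` is an arbitrary illustrative value), a full page splits in half on a mid table insert, and an insert past the end of the last page simply starts a new page.

```python
import bisect
import random


def simulate_inserts(keys, page_capacity=100):
    """Insert keys into a toy clustered index; return (splits, page_count, avg_fill)."""
    pages = [[]]      # pages in key order, each a sorted list of keys
    low_keys = []     # low_keys[i] is the smallest key placed on pages[i + 1] at creation
    splits = 0
    for key in keys:
        idx = bisect.bisect_right(low_keys, key)   # page whose key range covers the key
        page = pages[idx]
        if len(page) >= page_capacity:
            if idx == len(pages) - 1 and key > page[-1]:
                # Appending past the end of the index: allocate a new page, no split.
                pages.append([key])
                low_keys.append(key)
                continue
            # Mid table insert into a full page: move the upper half to a new page.
            half = len(page) // 2
            new_page = page[half:]
            del page[half:]
            pages.insert(idx + 1, new_page)
            low_keys.insert(idx, new_page[0])
            splits += 1
            if key >= new_page[0]:
                page = new_page
        bisect.insort(page, key)
    fill = sum(len(p) for p in pages) / (len(pages) * page_capacity)
    return splits, len(pages), fill


ordered = list(range(10000))
shuffled = ordered[:]
random.seed(0)
random.shuffle(shuffled)

print("ordered :", simulate_inserts(ordered))
print("shuffled:", simulate_inserts(shuffled))
```

In this model ascending keys never split a page and leave every page full, while shuffled keys split repeatedly and leave pages partly empty. That is the same pattern the fragmentation and page utilization figures in this section show for partially ordered versus effectively random clustering columns.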

The table below shows information from the sys.dm_db_index_physical_stats DMV that was used to understand the status of the staging table after the initial part of the APPEND load was complete. The figure that stands out here is the fragment_count. It's very high compared to the page count. A fragment is a collection of pages in sequence. Ideally this figure should be as low as possible but given that the load inserted into a CI this behavior is expected.

| Index Level | avg_fragmentation_in_percent | fragment_count | page_count | avg_page_space_used_in_percent | record_count |
|---|---|---|---|---|---|
| 0 | 98.4345616 | 370631 | 370631 | 98.97777366 | 37507272 |
| 1 | 99.91023339 | 1114 | 1114 | 69.75905609 | 370631 |
| 2 | 50 | 4 | 4 | 58.06770447 | 1114 |
| 3 | 0 | 1 | 1 | 0.753644675 | 4 |

Total page_count: 371750

This table shows the same information for the final destination table after the insert from the above staging table was complete.

| Index Level | avg_fragmentation_in_percent | fragment_count | page_count | avg_page_space_used_in_percent | record_count |
|---|---|---|---|---|---|
| 0 | 0.01 | 49 | 368192 | 99.52845318 | 37507272 |
| 1 | 0 | 4 | 774 | 99.83723746 | 368192 |
| 2 | 0 | 2 | 2 | 81.20830245 | 774 |
| 3 | 0 | 1 | 1 | 0.370644922 | 2 |

Total page_count: 368969

As expected, the state of the final destination table is much better. The fragment_count and avg_fragmentation_in_percent are now significantly lower, as the staging to destination table insert process has moved pre-sorted data directly into the destination table. The following screen captures show the typical appliance CPU and Landing Zone Disk Read Bytes / sec utilization during the load.

The data generated by the TPC-H generator is not completely random, however, so the l_shipdate column that we are clustering on will be partially ordered. While supplying ordered data to PDW does not produce the same benefits as it does in a traditional SQL Server instance, it is likely that many of the inserted records added new pages to the table rather than splitting existing ones. To prove that a different clustering column on the same table and same data could yield different load results, the line item table was recreated with a clustered index on the l_comment field. This has limited practical use in the real world but works well for demonstration purposes. The load time and fragmentation status for the staging and destination tables are as follows:

| Step | Load Time | Previously |
|---|---|---|
| Staging Table Load | 000:39:02:846 | 000:23:17:243 |
| Destination Table Load | 000:12:20:723 | 000:12:30:123 |
| Overall Time | 000:51:23:833 | 000:35:47:726 |

Staging table:

| Index Level | avg_fragmentation_in_percent | fragment_count | page_count | avg_page_space_used_in_percent | record_count |
|---|---|---|---|---|---|
| 0 | 99.21058813 | 421452 | 421453 | 71.2618977 | 37507272 |
| 1 | 100 | 3342 | 3342 | 69.92252286 | 421453 |
| 2 | 100 | 29 | 29 | 66.36376328 | 3342 |
| 3 | 0 | 1 | 1 | 16.48134421 | 29 |

Total page_count: 424825

Destination table:

| Index Level | avg_fragmentation_in_percent | fragment_count | page_count | avg_page_space_used_in_percent | record_count |
|---|---|---|---|---|---|
| 0 | 0.01 | 41 | 287731 | 99.61156412 | 37507272 |
| 1 | 0.123915737 | 14 | 1614 | 99.44738078 | 287731 |
| 2 | 20 | 10 | 10 | 91.42698295 | 1614 |
| 3 | 0 | 1 | 1 | 5.769705955 | 10 |

Total page_count: 289356

The first difference to notice is the overall load time. While the second stage, the load into the destination table, takes roughly the same time, the initial load to the Clustered Index staging table has increased by almost 16 minutes, which increased the overall load time from 35 minutes 47 seconds to over 51 minutes.

The next key difference is the page_count for the staging and destination tables. The increase of ~ 50,000 pages for the staging table is due to page splits occurring in a more random manner (a high ratio of page splits mid table vs. adding to the end of the table) and therefore lower average page utilization. The record count, as expected, remains exactly the same. The page_count for the destination table is a lot lower than previously. This is due to the page compression in SQL Server, which applies what are known as prefix and dictionary compression methods to the column values.

By sorting the string l_comment field alphabetically, near identical strings are positioned on the same page, which provides the opportunity to replace a lot of characters (which use a lot of space) with a single key value when compressed. In an example of this from a previous POC, where the local clustering column was actually a non-unique GUID, the storage requirement for the table as a heap was 61 GB. This was reduced to 44 GB when the Clustered Index was applied. This, in turn, reduces the scan time on the disks and increases the storage capacity of the appliance.

Ultimately though, loading the data with either clustering column yielded near identical fragmentation for both destination tables which is ideal for querying.

The second approach is to use the FASTAPPEND mode to load data into a heap staging table and then apply a CTAS command for the final transformation. This approach requires additional development time as the staging table needs to be created by the user, and ideally the load is partition friendly as the CTAS operation will need to create a new table. In the case that a clean SWITCH in cannot be made, a UNION ALL will be required between the most recently stored partition and the new data; finally, the existing partition would be switched out and the new table switched in to replace it. The example provided here loads the table entirely and does not demonstrate additional partition switching. The DDL used for the staging heap table in this example is as follows.

CREATE TABLE [TPC_H].[dbo].[lineitem_h]
(
    [l_orderkey] BIGINT NOT NULL,
    [l_partkey] BIGINT NOT NULL,
    [l_suppkey] BIGINT NOT NULL,
    [l_linenumber] BIGINT NOT NULL,
    [l_quantity] FLOAT NOT NULL,
    [l_extendedprice] FLOAT NOT NULL,
    [l_discount] FLOAT NOT NULL,
    [l_tax] FLOAT NOT NULL,
    [l_returnflag] CHAR(1) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,
    [l_linestatus] CHAR(1) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,
    [l_shipdate] DATE NOT NULL,
    [l_commitdate] DATE NOT NULL,
    [l_receiptdate] DATE NOT NULL,
    [l_shipinstruct] CHAR(25) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,
    [l_shipmode] CHAR(10) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL,
    [l_comment] VARCHAR(44) COLLATE Latin1_General_100_CI_AS_KS_WS NOT NULL
)
WITH (DISTRIBUTION = HASH ([l_orderkey]));
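
The CTAS statement that follows the FASTAPPEND load is not listed in this paper. A minimal sketch is shown below, assuming a hypothetical destination table named lineitem_ci and assuming l_comment as the clustering column (the column discussed in the compression example above). Keeping the same distribution key as the staging heap should avoid moving rows between distributions during the CTAS.

```sql
-- Sketch only: lineitem_ci is a hypothetical name and the clustering
-- column is an assumption; substitute the real destination definition.
CREATE TABLE [TPC_H].[dbo].[lineitem_ci]
WITH
(
    DISTRIBUTION = HASH ([l_orderkey]),   -- same key as the staging heap
    CLUSTERED INDEX ([l_comment])
)
AS
SELECT *
FROM [TPC_H].[dbo].[lineitem_h];
```

Because CTAS always creates a new table, the destination name must not already exist when the statement runs.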

The load times for the same TPC-H line item file, loaded via FASTAPPEND into the heap staging table and then inserted into the final table with a clustered index, are as follows.

| Staging Table Load | Destination Table Load | Overall Time |
| --- | --- | --- |
| 000:15:21:566 | 000:14:13:867 | 000:29:35:433 (same DDL APPEND 000:35:47:726) |

This approach saves about 6 minutes (roughly 17%) of the overall load time. During the same POC mentioned above, where the clustered index was applied to a uniqueidentifier, the APPEND method took 20 minutes 37 seconds for the full file to destination table load, whereas the FASTAPPEND + CTAS took only 9 minutes and 57 seconds, with the resulting fragmentation actually better for the FA + CTAS method. The data being loaded will drive the performance of the approach, so while in both instances the FA + CTAS approach is quicker overall, the proportional improvement will vary. The following tables show the fragmentation state of the heap staging table and the Clustered Index destination table using the FA + CTAS approach on the TPC-H data.

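| Index Level | avg fragmentation in percent | fragment_count | page_count | avg_page space used in percent | record_count |
| --- | --- | --- | --- | --- | --- |
| 0 (HEAP) | 7.783018868 | 3797 | 372131 | 99.23099827 | 37507272 |
| Total (as reported) | | | 371750 | | |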

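| Index Level | avg fragmentation in percent | fragment_count | page_count | avg_page space used in percent | record_count |
| --- | --- | --- | --- | --- | --- |
| 0 | 0.01 | 3781 | 368374 | 99.52815666 | 37507272 |
| 1 | 0 | 17 | 774 | 99.88589078 | 368374 |
| 2 | 0 | 2 | 2 | 81.20830245 | 774 |
| 3 | 0 | 1 | 1 | 0.370644922 | 2 |
| Total | | | 369151 | | |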

Looking at the typical appliance CPU and Landing Zone Disk Read Bytes/sec utilization during the loading process into the heap staging table, we can see very different characteristics. The Landing Zone bytes read from the disk are a lot higher, as is the CPU utilization on the compute nodes. Basically, the appliance is consuming data faster.

The CTAS into the final destination took longer than the APPEND method, but the significant saving in loading the staging table more than accounted for this difference.

Linear Scaling of Loads

Linear scale describes how load performance is affected as larger files are loaded into the system or as multiple files are loaded across the different table structures.

To test linear scale, 3 loads were performed using TPC-H generated line item data. The dbgen64.exe data generation tool was used to create 100 through 300 GB sizes of data. These were then loaded into the various table structures (Heap, CI and CCI) using the FastAppend, Append and Reload modes. The following section summarizes the results and demonstrates that loading a single file of up to 300 GB remains linear across all table structures and loading methods.

Note: File size is based on passing 100GB, 200GB and 300GB as a parameter into the TPC-H file generator. Actual file size is less than the expected size.

Single File using DWLoader

Results show that no matter the table type (Heap, Clustered Index or Clustered Columnstore), loads scale linearly as the size of the file increases. The fastest method for loading a file is the FastAppend mode into a heap table.

Overall, it can be seen that each method is scalable as the size of the file increases. While the overall load time increases, the duration is proportional to the increase in file size.

| TPC-H Size Generated (GB) | Size on Disk (GB) | Size on Disk (KBytes) | Total Rows |
| --- | --- | --- | --- |
| 100 | 74.6 | 78,300,520 | 600,037,902 |
| 200 | 150 | 157,873,851 | 1,200,018,434 |
| 300 | 226 | 243,544,621 | 1,799,989,091 |

Scaling of APS by adding Scale Units

Equally important is the ability for APS to scale out by adding Scale Units. For this section, DWLoader was used to measure the load times of a single 100GB file or a single 5GB file. The 100GB file was loaded into a distributed table, whereas the 5GB file was loaded into a replicated table. This test started with a ¼ rack (a single scale unit, 2 compute nodes), and additional scale units were added until a full rack was established (four scale units, 8 compute nodes).

Replicated Table Scaling (5GB):

Replicated table loads are less affected by scaling than loads into distributed tables for CCI and Heap tables. However, CI replicated tables can see a decrease in load performance when scaling. For this reason, we suggest loading replicated tables into a Heap or CCI. CCI replicated tables take 1.4x longer to load, whereas a CI table takes 8.5x longer than loading data into a Heap.

The decision to load into a Heap/CCI/CI should be determined based on the final table size and how the table is queried.

| Row Labels | GB per Hr | Minutes |
| --- | --- | --- |
| 2 Nodes | 57.5 | 4 |
| 4 Nodes | 58.4 | 4 |
| 6 Nodes | 56.7 | 4 |
| 8 Nodes | 57.7 | 4 |

| Row Labels | GB per Hr | Minutes |
| --- | --- | --- |
| 2 Nodes | 9.7 | 22 |
| 4 Nodes | 6.5 | 33 |
| 6 Nodes | 5.4 | 40 |
| 8 Nodes | 5.2 | 42 |

| Row Labels | GB per Hr | Minutes |
| --- | --- | --- |
| 2 Nodes | 39.1 | 6 |
| 4 Nodes | 40.1 | 5 |
| 6 Nodes | 40.8 | 5 |
| 8 Nodes | 39.8 | 5 |

Distributed Table Scaling:

Distributed table loads are affected by scaling more than replicated tables. Heap tables can scale from 637 GB/Hr to 1.8 TB/Hr (8 nodes). One of the greatest improvements to the overall load times can be seen when loading CCI tables. While the overall speed of loading a CCI is slower than loading a heap, the overall % increase in speed is greater when adding each scale unit. Scaling from 2 nodes to 4 nodes saw an approximately 100% increase in speed for both the Heap and CCI, and the CI achieved a 125% speed increase. When scaling from 4 nodes to 6 nodes, the CI and CCI saw a 77-78% increase in load speed, whereas the Heap only achieved a 29% increase. Going from 6 nodes to 8 nodes, the CCI achieved 44%, the CI 23% and the Heap 8%.

Often Heap tables are used in the staging database to decrease the duration of the load from source to PDW. This process alone can significantly improve the overall ETL processing time.

| Row Labels | GB per Hr | Sum of GB/Hr/Node | Minutes | % Diff GB/Hr |
| --- | --- | --- | --- | --- |
| 2 Nodes | 638 | 319 | 41 | 0% |
| 4 Nodes | 1312 | 328 | 19 | 106% |
| 6 Nodes | 1691 | 282 | 13 | 29% |
| 8 Nodes | 1818 | 227 | 11 | 8% |

| Row Labels | GB per Hr | Sum of GB/Hr/Node | Minutes | % Diff GB/Hr |
| --- | --- | --- | --- | --- |
| 2 Nodes | 213 | 107 | 164 | 0% |
| 4 Nodes | 418 | 105 | 86 | 96% |
| 6 Nodes | 742 | 124 | 51 | 77% |
| 8 Nodes | 1071 | 134 | 37 | 44% |

| Row Labels | GB per Hr | Sum of GB/Hr/Node | Minutes | % Diff GB/Hr |
| --- | --- | --- | --- | --- |
| 2 Nodes | 78 | 39 | 255 | 0% |
| 4 Nodes | 175 | 44 | 104 | 125% |
| 6 Nodes | 312 | 52 | 62 | 78% |
| 8 Nodes | 384 | 48 | 50 | 23% |

Multiple File loading

ETL processes are rarely single threaded. When determining an integration approach (either ETL or ELT), the process needs to take into account the impact of loading multiple files at the same time. The next set of tests was developed to give some insight into the impact of loading multiple files using either DWLoader or SSIS. Loading started with a single 100GB file loaded into one table and was scaled up to eight 100GB files loaded into eight tables. The test was repeated for the Heap, CCI and CI tables, and only for distributed tables.

The results show that SSIS scales better when loading multiple files in parallel. However, the overall throughput of DWLoader is greater than that of SSIS. SSIS also had more consistent load times across the table geometries. As more files were loaded in parallel with DWLoader, the duration increased 150% to 700%, whereas SSIS saw only a 67% increase in duration.

Multiple Files Using DWLoader

DWLoader will try to utilize as much of the load throughput as possible. By adding a second file to the process, the throughput begins to be split between each of the loads. Most ETL processes require multiple files to be loaded. When this happens, there is some overhead in starting up a DWLoader process. Therefore, when loading small files, having multiple loads running simultaneously can improve the overall load times, as one file starts while another is ending.

A running DWLoader process will take advantage of the time between the end of one load and the start of another.

Keep in mind that DWLoader only works when the source is a flat file. When loading data from a DB, the data will need to be extracted into a flat file first then loaded. While our testing did not take this into account, the overall processing time should include this duration.

When loading into a heap, the duration increases 78% between 1 file and 2 files. The total GB/Hr continues to increase until 6 files are loaded in parallel, at which point the throughput begins to decrease.

Loading a Clustered Index table with DWLoader tends to be more scalable than loading a heap. The GB/Hr continues to increase through each parallel test.

Loading a Clustered Columnstore table with DWLoader is scalable in terms of GB/Hr until you reach 4 parallel files, at which point the GB/Hr becomes more consistent, falling off when the seventh file starts to load. In testing, a CCI tends to be more scalable even in duration, as adding the second and third files showed minimal increases in duration. However, the overall GB/Hr is still slower than loading into a Heap.

Multiple Files Using SSIS

The objective of any ETL tool is to remove the business logic from the DB and to have a scale out solution. Testing shows that table structure has little effect on the overall load times when loading with SSIS. All three table structures complete within 2.5% of each other’s time.

While our testing only included a single SSIS server, having multiple servers could produce better throughput results. Additionally, during the testing we were experiencing IO issues on the SSIS server as the tests were scaled from 1-8 Files. With a better disk subsystem, better performance would have been seen.

SSIS has the ability to pull data from any source, including a DB. While SSIS is slower than DWLoader, SSIS removes the added step of creating a flat file that DWLoader requires.

Possible Causes Of Slow Load Speeds

There are many items that can contribute to slow load speeds. This section will point out the most common issues.

Data

The sort order of the data presented to APS can cause bottlenecks. Let’s suppose we have created a data warehouse for a retail business. Their load process imports detail data for each store and loads it into APS. The source table is sorted by store number. It has been determined that StoreNum is a good distribution column, as the business has more than 10,000 stores and store sales are nearly the same across all stores.

CREATE TABLE FactSales (
    StoreNum int not null
  , DateID int not null
  , CustomerID int not null
  , ProductID int not null
  , SaleAmount decimal(18,3) not null)
WITH (
    Distribution = HASH(StoreNum)
);

Because the source is sorted by store number, long runs of rows with the same StoreNum are passed to all compute nodes to be hashed and are then rerouted to the single compute node that stores that value. This causes a hot spot on a single node for writing. In this case it would be best to load the data in a random order of StoreNum, which allows all of the nodes to write data to disk at the same time.
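
To separate a hot spot caused by arrival order from one caused by an uneven distribution key, the row counts can be checked after a load. The following is a minimal sketch against the FactSales table defined above; it is not part of the tests described in this paper.

```sql
-- Returns one row per distribution with its row count and space used.
-- Similar row counts across distributions suggest the distribution key
-- itself is not the cause of a write hot spot.
DBCC PDW_SHOWSPACEUSED ("dbo.FactSales");

-- Rows per store, largest first, to confirm the assumption that
-- sales volumes are nearly the same across all stores.
SELECT StoreNum, COUNT(*) AS row_count
FROM dbo.FactSales
GROUP BY StoreNum
ORDER BY row_count DESC;
```

If both checks look even, the remaining suspect is the order in which the rows are presented to the loader.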

Infrastructure

The overall architecture of a data warehouse system is very important to get correct. Otherwise, performance issues can arise during data loads. While an APS appliance has the capacity to connect servers to APS through the InfiniBand network, data loads can still have performance issues. This is a result of a bottleneck in the upstream data flow process. Each component that is responsible for passing data to an APS appliance should be reviewed. An ETL process will only be as fast as its slowest component.

Network

The network section of the diagram below represents a possible performance issue. If the source DB or file provider can only sustain a maximum 10Gb/sec connection (with no other workload) to the ETL server, the overall throughput will be 10Gb/sec (9.76 MB/sec or 34 GB/Hr). With additional workloads applied to the source/network, the overall throughput will be even less. As shown in section 4.6 above, the APS was capable of sustaining a throughput of 1.8 TB/Hr.
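
The conversion behind the 34 GB/Hr figure can be reproduced as a small sketch, starting from the 9.76 MB/sec rate quoted above and assuming 1 GB = 1024 MB and 1 TB = 1024 GB. The second column compares that rate with the 1.8 TB/Hr the appliance sustained.

```sql
-- Convert the quoted 9.76 MB/sec to GB/Hr and compare it with 1.8 TB/Hr.
SELECT
    9.76 * 3600 / 1024                      AS source_gb_per_hr,     -- about 34.3
    (1.8 * 1024) / (9.76 * 3600 / 1024)     AS aps_to_source_ratio;  -- about 54
```

Under these assumptions the appliance could absorb data roughly 54 times faster than such a source could supply it.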

In comparison, if we used the Ethernet IP instead of the InfiniBand IP of the control node in DWLoader, the throughput of a load would be greatly affected.

SAN

The SAN/disk subsystem infrastructure is another possible area of bottlenecks when dealing with high speed data movement. If the disk subsystem of the source systems, ETL servers or flat file storage can’t keep up with the throughput demand, the overall ETL process will slow down.

Source Servers/ETL Servers

These systems can also be the cause of slow data movement to APS. These can be difficult to troubleshoot as there are many components that can contribute to throughput issues. Memory, disk, CPU, NIC configuration, server configuration, DB configuration and HBA configuration can all have an impact on data throughput.

Design

We have briefly covered the different table design (geometry) options available in APS. Selecting the correct geometry (distributed/replicated) can have a big impact on a system's overall performance. One important item to remember is that table geometry can change over time. In the beginning, a table may be very small and can be replicated; however, over time the table may continue to grow until it needs to be distributed.

[Figure: Optimal Connectivity Between Servers. Diagram labels: Source System, SAN/Servers, Network, SSIS/ETL/Loading Server, Ethernet, InfiniBand, APS PDW Region, SSAS, SSRS, Table Geometry.]

Real World Scenarios

Like the DMS buffer, SQL Server Integration Services (SSIS) also implements fixed width buffers for the data flow pipeline. The key difference though is that the width (and more specifically the data type) is defined at the data flow source, not inherited from the destination. It is therefore common to see the DDL of the destination table defined with overly generous data type sizes. It makes no difference to the SSIS buffer utilization and developers will often use this approach as a defensive move against big types (or they are just lazy).

As a result of this, it has sometimes been the case during either Proof of Concept (POC) executions or customer delivery sessions that the table DDL provided has contained excessive column widths. These tables have traditionally performed well with their existing SSIS implementation on SMP SQL Server; however, when tested with dwloader the results have been less than satisfactory. This is almost always a result of oversized column definitions creating underutilized buffers in the DMS.

As mentioned in the previous section, a customer supplied actual DDL from their production environment during a POC that contained 3 columns of type varchar(8000), however the actual max length of the data in each of the fields was less than 30 characters. While this is an extreme case, it highlights the need to review and tighten the DDL to ensure the best buffer utilization for both loads as well as data movement during queries. Tactics that can be applied for tightening the data types are described in the Conclusions section at the end of this document.
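
One way to find oversized columns is to measure the widest value actually stored and then rebuild the table with tighter types. The following is a minimal sketch; the table dbo.StageCustomer, its columns, the distribution key and the chosen widths are all hypothetical and used for illustration only.

```sql
-- Step 1: measure the widest value actually stored in each suspect column.
-- Note that LEN ignores trailing spaces.
SELECT
    MAX(LEN(CustomerNote)) AS max_len_customer_note,
    MAX(LEN(SourceCode))   AS max_len_source_code
FROM dbo.StageCustomer;

-- Step 2: rebuild the table with tighter types via CTAS.
-- The widths are assumptions; leave headroom above the measured maximum.
CREATE TABLE dbo.StageCustomer_tight
WITH (DISTRIBUTION = HASH (CustomerID))
AS
SELECT
    CustomerID,
    CAST(CustomerNote AS VARCHAR(50)) AS CustomerNote,
    CAST(SourceCode   AS VARCHAR(50)) AS SourceCode
FROM dbo.StageCustomer;
```

The measured maximum only reflects the data loaded so far, so the new widths should also be checked against the source system definitions.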

Best Practice

CTAS vs Upsert

DWLoader/Insert compared to CTAS

The DWLoader upsert mode allows an incoming flat file to function as input to a “merge” procedure. In this case, each incoming row is either inserted, if not found in the target table, or used as an update row, if found in the target table. This process works very well and quickly.

The alternate method of performing a “merge” with incoming data is to use the CTAS functionality. This is not recommended as a best practice except in certain circumstances. One of these circumstances would be if the incoming data contains a great number of rows and is known to be more updates than inserts. In this case the recommended process is:

Step 1: Create a new distributed table with all incoming rows from the flat file (Table_A).

Using the DWLoader FastAppend mode, load the data into a distributed heap table (Table_A) that is distributed on the same distribution key as the target table (Table_T).

Step 2: Create a new target table (Table_T2) with whatever indexing scheme is present for the existing target table by combining the unchanged rows in the target table (Table_T) with the new rows from Table_A and the existing rows to be updated from Table_A.

Using a CTAS statement, create the new target table (Table_T2) by joining the old target table (Table_T) with the new table (Table_A) to gather the unchanged rows, followed by a union of the new rows from Table_A and a union of the rows to be updated from Table_A.

Example: (assuming a final target table with a CCI index)

CREATE TABLE Table_T2
WITH (DISTRIBUTION = HASH(DistributedColumn), CLUSTERED COLUMNSTORE INDEX)
/* gather rows from the target table that have no match in the incoming flat file rows */
/* anycolumn must be a NOT NULL column so that IS NULL identifies unmatched rows */
AS SELECT T.* FROM Table_T T
LEFT OUTER JOIN Table_A A
ON T.distributedcolumn = A.distributedcolumn
WHERE A.anycolumn IS NULL
UNION
/* gather rows from the incoming table that are new rows to be inserted */
SELECT A.* FROM Table_A A
LEFT OUTER JOIN Table_T T
ON A.distributedcolumn = T.distributedcolumn
WHERE T.anycolumn IS NULL
UNION
/* gather rows from the incoming table that match the target table to be updated */
SELECT A.* FROM Table_A A
JOIN Table_T T
ON A.distributedcolumn = T.distributedcolumn;
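
The steps above stop once Table_T2 has been created. A possible final step, sketched here as an assumption rather than as part of the documented process, is to swap the new table in under the original name. Table_T_old is a hypothetical name.

```sql
-- Validate Table_T2 (for example, compare row counts) before swapping.
RENAME OBJECT Table_T TO Table_T_old;
RENAME OBJECT Table_T2 TO Table_T;

-- Once the new table has been verified, the old copy and the
-- staging table can be removed.
DROP TABLE Table_T_old;
DROP TABLE Table_A;
```

Keeping Table_T_old until verification is complete leaves a simple way back if the merged result is not as expected.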

Conclusions

Some of the approaches for loading data defined in this paper are quite clear cut, whereas others are more dependent on the type of data and the customer circumstances. Based on the current implementation of DMS buffers, it is possible to confirm the following best practices for destination table DDL when loading data.

DWLoader is the most efficient method for loading flat files into APS/PDW.

Heap tables are the most efficient table geometry to load and should be used for DW staging tables.

Upsert should be considered when inserting/modifying a medium set of records.

SSIS can be a viable ETL tool when loading into CI or CCI tables or when parallel loads are required.

Data types should be sized according to the data/source system. Oversized data types can have an impact on load performance.

While DWLoader is faster at loading data into APS/PDW, consider the total time of generating the flat file and loading the data. SSIS may be a better alternative.

Of the two different approaches to loading data into a distributed table (FASTAPPEND + CTAS or APPEND), it may come down to a particular pain point and there is value in testing both approaches before settling on one.
