08. Machine scheduling

CHAPTER 8 MACHINE SCHEDULING

8.1 Basic Concepts of Machine Scheduling

Machine scheduling deals with the allocation of jobs (or tasks) \(T_1, T_2, \ldots, T_n\) to the processors (or machines) \(P_1, P_2, \ldots, P_m\) on which they are to be performed. The allocation is to be made so as to optimize some objective.

Machine scheduling problems include sequencing, which determines the order in which tasks are processed, and scheduling, which determines at what time the processing of a task begins and ends.

We distinguish between single machine scheduling, parallel machine scheduling, and dedicated machine scheduling. The three dedicated machine models are:

open shop, in which each task must be processed by all machines, but there is no specific order in which processing has to take place;

flow shop, in which each job must be processed on all machines, and each task is processed by the machines in the same specified order;

job shop, in which each job must be processed on a specific set of machines, and the processing order is also job-specific.

The models involve a number of parameters:

processing time \(p_{ij}\), the processing time of task \(T_j\) on machine \(P_i\) (if there is only a single machine, or if all machines have the same processing time for a job, we simplify the notation to \(p_j\));

ready time (also referred to as arrival time or release time) \(r_j\), the time at which task \(T_j\) is ready for processing; in the simplest case, \(r_j = 0\);

due time \(d_j\), the time at which task \(T_j\) should be finished.

They also involve a number of variables:

completion time \(c_j\) of task \(T_j\), the time at which task \(T_j\) is completely finished;

flow time \(f_j\) of task \(T_j\), defined as \(f_j = c_j - r_j\), the time that a job is in the system, either waiting to be processed or being processed;

lateness \(\ell_j = c_j - d_j\) of task \(T_j\), and tardiness \(t_j = \max\{\ell_j, 0\}\).

There are a number of alternative performance criteria; three important criteria are:

makespan (or schedule length) \(C_{\max} = \max_j \{c_j\}\), the time at which the last of the tasks has been completed;

mean flow time \(F = \frac{1}{n}(f_1 + f_2 + \cdots + f_n)\), the unweighted average time that a job is in the system, either waiting to be processed or being processed; it differs from the mean completion time \(\frac{1}{n}(c_1 + c_2 + \cdots + c_n)\) only by the constant \(\frac{1}{n}(r_1 + r_2 + \cdots + r_n)\);

maximal lateness \(L_{\max} = \max_j \{\ell_j\}\), the longest lateness among any of the jobs.
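As an illustration, the quantities defined above can be computed for one fixed sequence of jobs on a single machine. This is a minimal sketch under our own assumptions: a job starts as soon as the machine is free and the job is ready, and the names `Job` and `evaluate` are hypothetical, not taken from the text.

```python
import math
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    p: float              # processing time p_j
    r: float = 0.0        # ready time r_j
    d: float = math.inf   # due time d_j (none given: never late)

def evaluate(sequence):
    """Process the jobs on one machine in the given order and compute
    c_j, f_j, l_j, t_j per job plus C_max, F and L_max for the schedule."""
    time = 0.0
    rows = []
    for job in sequence:
        start = max(time, job.r)        # wait until the machine is free and the job is ready
        c = start + job.p               # completion time c_j
        f = c - job.r                   # flow time f_j = c_j - r_j
        lateness = c - job.d            # lateness l_j = c_j - d_j
        tardiness = max(lateness, 0.0)  # tardiness t_j = max{l_j, 0}
        rows.append((job.name, c, f, lateness, tardiness))
        time = c
    return {
        "jobs": rows,
        "C_max": max(row[1] for row in rows),
        "F": sum(row[2] for row in rows) / len(rows),
        "L_max": max(row[3] for row in rows),
    }

result = evaluate([Job("A", 3, 0, 4), Job("B", 2, 1, 4)])
print(result["C_max"], result["F"], result["L_max"])
```

For the two made-up jobs A (p = 3, r = 0, d = 4) and B (p = 2, r = 1, d = 4) processed in the order A, B, job B completes at 5, so its flow time is 4 and its lateness and tardiness are both 1; the schedule has \(C_{\max} = 5\), \(F = 3.5\), and \(L_{\max} = 1\).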

8.2 Single Machine Scheduling

Minimizing the makespan is not meaningful: with all ready times \(r_j = 0\), each sequence of tasks results in the same value of \(C_{\max}\), which equals the sum of the processing times of all tasks.

Minimizing the mean flow time: the simple Shortest Processing Time (SPT) algorithm solves the problem optimally:

SPT Algorithm: Schedule the task with the shortest processing time first. Delete it and repeat until all tasks have been scheduled.
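Since repeatedly picking the shortest remaining task is the same as sorting, the SPT rule can be sketched in a few lines. This is a minimal sketch assuming all ready times are 0; the helper names are our own, and the usage line borrows the service times of Example 1 with the weights ignored.

```python
def spt_order(p):
    """Indices of the tasks in Shortest Processing Time order (ties: lower index first)."""
    return sorted(range(len(p)), key=lambda j: p[j])

def mean_flow_time(order, p):
    """Mean flow time on one machine when all ready times are 0."""
    t = total = 0
    for j in order:
        t += p[j]        # completion time of task j
        total += t       # equals its flow time because r_j = 0
    return total / len(order)

p = [30, 25, 40, 50, 45, 60, 35]   # service times of Example 1, weights ignored
order = spt_order(p)
print([f"T{j + 1}" for j in order], mean_flow_time(order, p))
```

Ignoring the weights, the SPT sequence for these times is \(T_2, T_1, T_7, T_3, T_5, T_4, T_6\), with mean flow time 985/7 ≈ 140.7 minutes.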

An extension of the rule considers not only the processing times \(p_j\), but also weights \(w_j\) associated with the tasks. The objective is then to minimize the average weighted flow time, where the weighted flow time of task \(T_j\) is \(w_j f_j\). The weighted generalization of the SPT algorithm is:

WSPT Algorithm (Smith’s Ratio Rule): Schedule the task with the shortest weighted processing time \(p_j/w_j\) first. Delete it and repeat until all tasks have been scheduled.

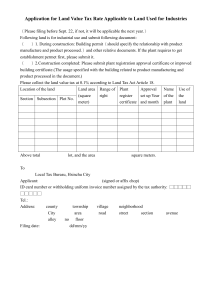

Example 1: There are seven machines in a manufacturing unit. Maintenance has to be performed periodically. Costs are incurred for downtime, regardless of whether a machine waits for service or is being served. Processing (service) times of the machines, downtime costs (the weights \(w_j\)), and weighted processing times are given in the table below.

Job #                                  T1    T2    T3    T4     T5    T6    T7
Service time (minutes)                 30    25    40    50     45    60    35
Downtime cost ($ per minute)            2     3     6     9      4     8     3
Weighted processing time \(p_j/w_j\)   15    8⅓    6⅔    5 5/9  11¼   7½    11⅔

Applying WSPT, we first schedule \(T_4\) (with the lowest weighted processing time of 5 5/9), followed by \(T_3\) with the next-lowest weighted processing time of 6⅔, followed by \(T_6\), \(T_2\), \(T_5\), \(T_7\), and \(T_1\). The schedule is shown in the Gantt chart (named after the American engineer Henry L. Gantt (1861–1919), who developed these charts in 1917).

Tasks \(T_4, T_3, T_6, \ldots, T_1\) now have waiting times (i.e., start times) of 0, 50, 90, 150, 175, 220, and 255. Adding the processing times results in the completion times (= flow times) 50, 90, 150, 175, 220, 255, and 285. Multiplying by the individual per-minute costs and adding results in a total of 9(50) + 6(90) + 8(150) + 3(175) + 4(220) + 3(255) + 2(285) = 450 + 540 + 1,200 + 525 + 880 + 765 + 570 = $4,930.
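The arithmetic of Example 1 can be checked with a short sketch of Smith's ratio rule. Using `Fraction` keeps the ratios \(p_j/w_j\) exact, so ties would be detected reliably; the function names are our own.

```python
from fractions import Fraction

def wspt_order(p, w):
    """Smith's ratio rule: nondecreasing p_j / w_j (exact fractions, ties by index)."""
    return sorted(range(len(p)), key=lambda j: Fraction(p[j], w[j]))

def weighted_flow_time(order, p, w):
    """Total weighted flow time sum_j w_j f_j on one machine with all r_j = 0."""
    t = total = 0
    for j in order:
        t += p[j]              # completion time = flow time of task j
        total += w[j] * t
    return total

p = [30, 25, 40, 50, 45, 60, 35]   # service times (minutes), Example 1
w = [2, 3, 6, 9, 4, 8, 3]          # downtime cost ($ per minute)
order = wspt_order(p, w)
print([f"T{j + 1}" for j in order], weighted_flow_time(order, p, w))
```

It reproduces the sequence \(T_4, T_3, T_6, T_2, T_5, T_7, T_1\) and the total downtime cost of $4,930.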

Minimizing the maximal lateness \(L_{\max}\) is done optimally by the earliest due date algorithm (or EDD algorithm, or Jackson’s rule):

EDD Algorithm: Schedule the task with the earliest due date first. Delete it and repeat until all tasks have been scheduled.

Example 2: A firm processes book-keeping jobs. Eleven tasks are to be completed by a single team, one after another. Processing times and due dates are given in the table below.

Job #                     T1   T2   T3   T4   T5   T6   T7   T8   T9   T10   T11
Processing time (hours)    6    9    4   11    7    5    5    3   14     8     4
Due date                  25   15   32   70   55   10   45   30   30    80    58

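The EDD rule schedules task \(T_6\) first (its due date of 10 is the earliest of all), followed by \(T_2\), \(T_1\), \(T_8\), \(T_9\), \(T_3\), \(T_7\), \(T_5\), \(T_{11}\), \(T_4\), and \(T_{10}\). The Gantt chart for the schedule is shown below (the tie between \(T_8\) and \(T_9\), which have the same due date, is broken arbitrarily).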

Tasks \(T_6\), \(T_2\), \(T_1\), and \(T_8\) are finished before their due dates; \(T_9\) is late by 7 hours, \(T_3\) by 9, and \(T_7\) by 1; \(T_5\), \(T_{11}\), \(T_4\), and \(T_{10}\) are again finished before they are due. The maximal lateness occurs for job \(T_3\) (completed at time 41, due at 32), so that \(L_{\max} = 9\).

There are other optimal schedules.
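A sketch of the EDD rule applied to Example 2 (helper names are our own). Python's `sorted` is stable, so the tie between \(T_8\) and \(T_9\) is broken by task index, which is one of the arbitrary choices the text allows.

```python
def edd_order(d):
    """Earliest Due Date order; sorted() is stable, so equal due dates keep index order."""
    return sorted(range(len(d)), key=lambda j: d[j])

def lateness(order, p, d):
    """Lateness c_j - d_j of every task on one machine, all ready times 0."""
    t, late = 0, {}
    for j in order:
        t += p[j]              # completion time c_j
        late[j] = t - d[j]
    return late

p = [6, 9, 4, 11, 7, 5, 5, 3, 14, 8, 4]            # processing times (hours), Example 2
d = [25, 15, 32, 70, 55, 10, 45, 30, 30, 80, 58]   # due dates
order = edd_order(d)
late = lateness(order, p, d)
print([f"T{j + 1}" for j in order], "L_max =", max(late.values()))
```

It yields the order \(T_6, T_2, T_1, T_8, T_9, T_3, T_7, T_5, T_{11}, T_4, T_{10}\) and \(L_{\max} = 9\), attained by \(T_3\).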

8.3 Parallel Machine Scheduling

Minimizing the makespan is quite hard computationally (NP-hard), even for just two machines, so we need heuristic methods; one of them is the longest processing time first (LPT) algorithm.

LPT is a list scheduling method: it makes a priority list of jobs, starting with the highest priority. Jobs are then assigned one at a time to the first available machine.

LPT Algorithm: Put the tasks in order of nonincreasing processing times. Assign the first task to the first available machine. Repeat until all tasks have been scheduled.
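The LPT rule can be sketched with a priority queue of machine release times. The heap is our own implementation choice and the names are hypothetical; ties among equally early machines go to the lowest-numbered machine.

```python
import heapq

def lpt(p, m):
    """LPT list scheduling on m identical machines.
    Returns (C_max, schedule) where schedule[i] lists (job, start, end) on machine P_{i+1}."""
    order = sorted(range(len(p)), key=lambda j: p[j], reverse=True)   # nonincreasing p_j
    free = [(0, i) for i in range(m)]          # heap of (time machine becomes free, index)
    schedule = [[] for _ in range(m)]
    for j in order:
        t, i = heapq.heappop(free)             # first available machine, lowest index on ties
        schedule[i].append((j, t, t + p[j]))
        heapq.heappush(free, (t + p[j], i))
    return max(t for t, _ in free), schedule

c_max, schedule = lpt([30, 25, 40, 50, 45, 60, 35], 3)
print(c_max)
```

For the service times of Example 1 on three machines (Example 3) it gives \(C_{\max} = 110\); for the times 3, 3, 2, 2, 2 on two machines (Example 4) it gives 7.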

Example 3: We use Example 1 again, but now three servicemen are available to perform the tasks.

With the seven tasks in order of processing time, starting with the longest, we obtain the sequence \(T_6\), \(T_4\), \(T_5\), \(T_3\), \(T_7\), \(T_1\), and \(T_2\), with processing times of 60, 50, 45, 40, 35, 30, and 25 minutes. In the beginning, all three machines are available, so we first assign the longest task, \(T_6\), to machine \(P_1\) (\(P_1\) will be available again at time 60, when \(T_6\) is completely processed).

Next, \(T_4\) goes to \(P_2\), which is now available (\(P_2\) will be available again at time 50, when \(T_4\) is completely processed). Next, \(T_5\) is assigned to \(P_3\), which will be available again at time 45. The next task to be scheduled is \(T_3\). The three machines become available again at 60, 50, and 45, so \(T_3\) is scheduled on \(P_3\), and so on. (Shaded areas in the Gantt chart indicate idle time.)

The schedule length is \(C_{\max} = 110\). This schedule is not optimal, which is no surprise, since the LPT algorithm is not an exact algorithm but only a heuristic. (The optimal solution schedules \(T_6\) and \(T_7\) on \(P_1\), \(T_4\) and \(T_5\) on \(P_2\), and \(T_3\), \(T_1\), and \(T_2\) on \(P_3\). This schedule has no idle time and finishes at time 95. Note that optimality does not require that there is no idle time. On the other hand, if there is no idle time, the schedule must be optimal, because all machines then finish together at \(\frac{1}{m}\sum_{j} p_j\), and no schedule can finish earlier.)

The LPT algorithm is not guaranteed to find an optimal solution that truly minimizes the schedule length \(C_{\max}\). Instead, we may try to estimate how far from an optimal solution the heuristic solution found by the LPT algorithm might be. The performance ratio is

\[ R = \frac{C_{\max}(\text{heuristic})}{C_{\max}(\text{optimum})}, \]

where \(C_{\max}(\text{heuristic})\) is the schedule length obtained by the heuristic method and \(C_{\max}(\text{optimum})\) is the true minimal schedule length. Since \(C_{\max}\) is a minimization objective, \(C_{\max}(\text{optimum}) \le C_{\max}(\text{heuristic})\), so that \(R \ge 1\), and the smaller the value of \(R\), the closer the obtained schedule is to the true minimum. The performance ratio \(R_{\text{LPT}}\) for the LPT algorithm applied to an \(n\)-job, \(m\)-machine problem satisfies

\[ R_{\text{LPT}} \le \frac{4}{3} - \frac{1}{3m}. \]

For a two-machine (\(m = 2\)) problem, the bound is \(R_{\text{LPT}} \le \frac{4}{3} - \frac{1}{3(2)} = \frac{7}{6} \approx 1.167\), meaning that in the worst case, the LPT algorithm will find a schedule that is 16.7% longer than optimal. This bound is actually tight, as the following example shows.

Example 4: Let a two-machine, five-job scheduling problem have the task processing times 3, 3, 2, 2, and 2, respectively. Applying the LPT algorithm to this problem results in schedule (a), whereas an optimal schedule is shown in (b). With \(C_{\max} = 7\) for the LPT schedule in (a) (one machine processes 3, 2, 2, the other 3, 2) and \(C_{\max} = 6\) for the optimal schedule in (b) (3, 3 on one machine and 2, 2, 2 on the other), we see that the worst-case bound \(R_{\text{LPT}} = 7/6\) is achieved.
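To check the optimal values quoted in Examples 3 and 4, a brute-force sketch can enumerate every assignment of jobs to machines. This is our own verification aid, not a method from the text; with \(m^n\) assignments it is usable only for tiny instances.

```python
from itertools import product

def optimal_makespan(p, m):
    """Minimum makespan on m identical machines by exhaustive search (m**n assignments).
    Without preemption or ready times, a machine finishes at the sum of its jobs' times."""
    best = sum(p)                                  # all jobs on one machine
    for assignment in product(range(m), repeat=len(p)):
        loads = [0] * m
        for j, i in enumerate(assignment):
            loads[i] += p[j]
        best = min(best, max(loads))
    return best

print(optimal_makespan([3, 3, 2, 2, 2], 2))                 # Example 4
print(optimal_makespan([30, 25, 40, 50, 45, 60, 35], 3))    # Example 3
```

It returns 6 and 95, so the LPT ratio is 7/6 for Example 4 and 110/95 ≈ 1.158 for Example 3, within the three-machine bound of 11/9 ≈ 1.222.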

Having shown that the worst case can occur for the performance ratio bound \(R_{\text{LPT}} \le \frac{4}{3} - \frac{1}{3m}\), we can look at the positive side and conclude that for a two-machine problem an LPT schedule can never be more than 16.7% above the optimal value. For a three-machine problem, this becomes slightly worse with \(R_{\text{LPT}} \le \frac{4}{3} - \frac{1}{3(3)} = \frac{11}{9} \approx 1.222\), i.e., \(C_{\max}\) is no more than 22.2% longer than its optimal value. For four machines, we obtain 25%, and for five machines 26.7%; for a large number of machines, the value approaches 33⅓%.
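The percentages above follow from the bound by exact arithmetic; a small sketch (the function name is our own):

```python
from fractions import Fraction

def lpt_bound(m):
    """Worst-case bound 4/3 - 1/(3m) on C_max(LPT) / C_max(optimum) for m machines."""
    return Fraction(4, 3) - Fraction(1, 3 * m)

for m in (2, 3, 4, 5, 1000):
    bound = lpt_bound(m)
    print(f"m = {m}: bound {bound}, at most {float(bound - 1):.1%} above optimum")
```

The bounds are 7/6, 11/9, 5/4, and 19/15 for two to five machines, and they approach 4/3 as \(m\) grows.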

When scheduling tasks on several parallel processors, it is sometimes possible to allow preemption, whereby a task may be preempted, i.e., stopped, and resumed later on any (possibly another) processor. This makes the problem of minimizing \(C_{\max}\) on parallel machines easy. The so-called Wrap-Around Rule of McNaughton finds an optimal schedule when preemption is permitted. Specifically, assume that there are \(m\) parallel processors on which \(n\) tasks are to be performed, with processing times \(p_j\), \(j = 1, 2, \ldots, n\).

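Clearly, no schedule can have a makespan \(C_{\max}\) shorter than the longest of the processing times, \(\max_{1 \le j \le n}\{p_j\}\). Also, \(C_{\max}\) cannot be shorter than the total processing time of all jobs divided among the \(m\) machines, i.e., \(\frac{1}{m}\sum_{j=1}^{n} p_j\). Therefore

\[ C_{\max} \ge \max\left\{ \max_{1 \le j \le n}\{p_j\},\ \frac{1}{m}\sum_{j=1}^{n} p_j \right\}. \]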

The algorithm can be described as follows.

McNaughton’s Wrap-Around Rule: First sequence the tasks in arbitrary order, obtaining a sequence of length \(p_1 + p_2 + \cdots + p_n\) time units. Then compute

\[ C^*_{\max} = \max\left\{ \max_{1 \le j \le n}\{p_j\},\ \frac{1}{m}\sum_{j=1}^{n} p_j \right\} \]

and break the time sequence at the points \(iC^*_{\max}\), \(i = 1, 2, \ldots, m-1\). Schedule all tasks in the interval \([(i-1)C^*_{\max},\, iC^*_{\max}]\) on processor \(P_i\), \(i = 1, 2, \ldots, m\), noting that preempted tasks may be at the beginning and/or the end of each processor schedule. Finally, any task that is preempted and processed on two different processors \(P_i\) and \(P_{i+1}\) will have to be processed first on \(P_{i+1}\), then preempted, and finished on \(P_i\). (Since no task is longer than \(C^*_{\max}\), its two pieces never overlap in time.)

It is clear that there will be no idle time on any of the processors, unless some job has a processing time longer than \(\frac{1}{m}\sum_{j=1}^{n} p_j\).

Example 5: Consider the processing times for the eleven tasks in Example 2, but ignore the due dates.

With two processors, we first compute

\[ C^*_{\max} = \max\left\{ \max_{1 \le j \le 11}\{p_j\},\ \tfrac{1}{2}\sum_{j=1}^{11} p_j \right\} = \max\{p_9,\ \tfrac{1}{2}(76)\} = \max\{14, 38\} = 38. \]

The Wrap-Around Rule will then produce the following optimal schedule:

In a practical application of this optimal schedule, job \(T_6\) would start on processor \(P_2\) at time \(t = 0\) and be preempted at \(t = 4\), then continue on \(P_1\) at \(t = 37\) and be finished at \(t = 38\).

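Assume now that three processors (or teams) are available, and that the processing time of \(T_9\) increases from \(p_9 = 14\) to \(p_9 = 34\), so that the total processing time becomes 96. Then

\[ C^*_{\max} = \max\{34,\ \tfrac{1}{3}(96)\} = \max\{34, 32\} = 34. \]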

Some idle time is now inevitable due to the long processing time \(p_9\). Using the Wrap-Around Rule, we obtain the following schedule:

In this optimal schedule, the preempted job \(T_5\) starts at \(t = 0\) on \(P_2\), is preempted at \(t = 3\), and continues on \(P_1\) at \(t = 30\) until it is finished at \(t = 34\). Job \(T_9\) starts on \(P_3\) at \(t = 0\) and is processed without interruption until it is finished at \(t = 34\). Job \(T_{10}\) is processed on \(P_2\) from \(t = 16\) to 24, immediately followed by \(T_{11}\) from \(t = 24\) to 28, after which \(P_2\) is idle until the end of the schedule at \(t = 34\).
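A minimal sketch of the wrap-around rule, keeping the tasks in index order (one of the arbitrary orders the rule allows). It uses `Fraction` so that break points at a non-integer average load are handled exactly; the names are our own.

```python
from fractions import Fraction

def mcnaughton(p, m):
    """McNaughton's wrap-around rule with preemption on m identical processors.
    Returns (C*_max, pieces) with pieces[i] = list of (job, start, end) on P_{i+1}."""
    c_star = max(Fraction(max(p)), Fraction(sum(p), m))   # the lower bound, attained
    pieces = [[] for _ in range(m)]
    i, t = 0, Fraction(0)                                 # current processor, time on it
    for j, pj in enumerate(p):                            # tasks in the given order
        remaining = Fraction(pj)
        while remaining > 0:
            chunk = min(remaining, c_star - t)            # fill up to the break point C*
            pieces[i].append((j, t, t + chunk))
            t += chunk
            remaining -= chunk
            if t == c_star:                               # wrap around to the next processor
                i, t = i + 1, Fraction(0)
    return c_star, pieces

c_star, pieces = mcnaughton([6, 9, 4, 11, 7, 5, 5, 3, 14, 8, 4], 2)   # Example 5
print(c_star)
```

For Example 5 the sketch returns \(C^*_{\max} = 38\) and splits \(T_6\) into the pieces 37 to 38 on \(P_1\) and 0 to 4 on \(P_2\), as in the text. For the three-processor variant with \(p_9 = 34\) it also returns 34, but with the tasks kept in their original order it splits \(T_9\) (0 to 16 on \(P_3\), 16 to 34 on \(P_2\)) and places \(T_{10}\) and \(T_{11}\) on \(P_3\), so its schedule differs from the one described above while having the same makespan.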

Now we minimize the mean flow time \(F\). For identical parallel processors, preemption is not profitable, so we will only consider nonpreemptive schedules. The problem can then be solved to optimality by means of a simple technique:

Algorithm for minimizing mean flow time: Sort the jobs in order of nondecreasing processing time (ties are broken arbitrarily), and renumber them as \(T'_1, T'_2, \ldots, T'_n\). Then assign the tasks \(T'_1, T'_{m+1}, T'_{2m+1}, \ldots\) to machine \(P_1\), the tasks \(T'_2, T'_{m+2}, T'_{2m+2}, \ldots\) to \(P_2\), the tasks \(T'_3, T'_{m+3}, T'_{2m+3}, \ldots\) to \(P_3\), and so on. Tasks are processed in the order they are assigned.

Consider again Example 1 above. The reordered sequence of tasks is \((T'_1, T'_2, \ldots, T'_7) = (T_2, T_1, T_7, T_3, T_5, T_4, T_6)\) with processing times (25, 30, 35, 40, 45, 50, 60). With three machines, assign to \(P_1\) the tasks \(T'_1\), \(T'_4\), and \(T'_7\) (i.e., in the original numbering, \(T_2\), \(T_3\), and \(T_6\)); \(P_2\) is assigned the jobs \(T'_2\) and \(T'_5\) (i.e., \(T_1\) and \(T_5\)), and \(P_3\) will process \(T'_3\) and \(T'_6\) (i.e., \(T_7\) and \(T_4\)). The completion times are 25, 65, and 125 on \(P_1\), 30 and 75 on \(P_2\), and 35 and 85 on \(P_3\). Since all tasks are ready at time 0, the mean flow time equals the mean completion time:

\[ F = \tfrac{1}{7}[25 + 65 + 125 + 30 + 75 + 35 + 85] = 440/7 \approx 62.8571. \]
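The assignment rule amounts to dealing the SPT-sorted tasks round-robin to the machines, which Python's slice notation expresses directly. A minimal sketch assuming all ready times are 0; the function name is our own.

```python
def spt_round_robin(p, m):
    """Minimize mean flow time on m identical machines (all ready times 0):
    sort by nondecreasing p_j, then deal the tasks to P1, ..., Pm, P1, ... in turn."""
    order = sorted(range(len(p)), key=lambda j: p[j])      # T'_1, T'_2, ..., T'_n
    machines = [order[i::m] for i in range(m)]             # T'_i, T'_{m+i}, T'_{2m+i}, ...
    total = 0
    for jobs in machines:
        t = 0
        for j in jobs:                                     # processed in assignment order
            t += p[j]
            total += t                                     # flow time = completion time here
    return total / len(p), machines

F, machines = spt_round_robin([30, 25, 40, 50, 45, 60, 35], 3)
print(F, [[f"T{j + 1}" for j in jobs] for jobs in machines])
```

For the data of Example 1 on three machines, it assigns \((T_2, T_3, T_6)\), \((T_1, T_5)\), and \((T_7, T_4)\) and returns \(F = 440/7 \approx 62.86\).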

Minimization of the maximal lateness turns out to be difficult, and we leave its discussion to specialized books, such as Eiselt and Sandblom (2004).

8.4 Dedicated Machine Scheduling

In an open shop, each task must be processed on each of a number of different machines. The sequence of machines is immaterial. We deal only with the case of two machines, which happens to be easy, while problems with more machines are difficult.

Minimizing the schedule length (makespan) \(C_{\max}\) is easy. Optimal schedules can be found by the Longest Alternate Processing Time (LAPT) algorithm:

LAPT Algorithm: Whenever a machine becomes idle, schedule the task on it with the longest processing time on the other machine, if it has not yet been processed on that machine and is available at that time. If a task is not available, the task with the next-longest processing time on the other machine is scheduled. Ties are broken arbitrarily.

Example 6: In an assembly shop, each semi-finished product goes through two phases, assembly of its components and checking. The sequence of these tasks is immaterial. The times (in minutes) to assemble and check the six products are:

Job #                    T1   T2   T3   T4   T5   T6
Processing time on P1    30   15   40   30   10   25
Processing time on P2    35   20   40   20    5   30

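Using LAPT, we begin by scheduling a task on machine \(P_1\). The task with the longest processing time on \(P_2\) is \(T_3\), so it is scheduled first on \(P_1\). Next we schedule a task on \(P_2\), which is still idle. The task with the longest processing time on \(P_1\) is again \(T_3\), but it is not available now, so we schedule the task with the next-longest processing time on \(P_1\). This is \(T_1\) or \(T_4\); we choose \(T_1\). With \(T_3\) and \(T_1\) being processed on the two machines, \(T_1\) is the first to finish, at time 35, and \(P_2\) is idle again. Now the available task with the next-longest processing time on \(P_1\) is \(T_4\), which is scheduled next on \(P_2\). Continuing in this way, we obtain the schedule with \(C_{\max} = 155\) shown in the Gantt chart.

This schedule is not optimal, however. Each machine carries 150 minutes of work and no product needs more than 80 minutes in total, so \(C_{\max} \ge 150\), and 150 can be attained. The rule as stated above omits a refinement of the classical LAPT rule: a task that has already been processed on the other machine receives the lowest priority. With this refinement, \(P_1\) takes \(T_6\) rather than \(T_1\) at \(t = 40\), and the resulting schedule, with job orders \((T_3, T_6, T_5, T_1, T_2, T_4)\) on \(P_1\) and \((T_1, T_4, T_2, T_3, T_6, T_5)\) on \(P_2\), finishes at time 150.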

Minimizing mean completion time and maximal lateness are computationally difficult, even for two machines.
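A sketch of the LAPT rule as an event-driven simulation. Two details are our own assumptions beyond the wording of the rule given earlier: following the classical statement of LAPT, a task that has already been processed on the other machine gets the lowest priority, and remaining ties go to the lower job index (and to \(P_1\) when both machines are free). The names are hypothetical.

```python
def lapt(p1, p2):
    """Two-machine open shop, LAPT rule; returns (C_max, schedule).
    schedule[m] lists (job, start, end) on machine m (0 = P1, 1 = P2)."""
    p = (p1, p2)
    n = len(p1)
    need = [{0, 1} for _ in range(n)]      # machines each job still has to visit
    busy_until = [0] * n                   # a job cannot be on both machines at once
    free = [0, 0]                          # time at which each machine becomes idle
    schedule = ([], [])
    while any(need):
        # decide for the machine that becomes idle first (P1 first on ties)
        m = min((k for k in (0, 1) if any(k in s for s in need)),
                key=lambda k: (free[k], k))
        t, other = free[m], 1 - m
        ready = [j for j in range(n) if m in need[j] and busy_until[j] <= t]
        if not ready:                      # its jobs are all on the other machine: wait
            free[m] = min(busy_until[j] for j in range(n) if m in need[j])
            continue
        # untouched jobs first, longest time on the other machine first;
        # jobs already done on the other machine get the lowest priority
        j = max(ready, key=lambda k: (other in need[k], p[other][k], -k))
        schedule[m].append((j, t, t + p[m][j]))
        need[j].discard(m)
        busy_until[j] = free[m] = t + p[m][j]
    return max(free), schedule

p1 = [30, 15, 40, 30, 10, 25]   # times on P1, Example 6
p2 = [35, 20, 40, 20, 5, 30]    # times on P2
c_max, schedule = lapt(p1, p2)
print(c_max, [[f"T{j + 1}" for j, _, _ in ops] for ops in schedule])
```

On the data of Example 6 this version returns \(C_{\max} = 150\) (\(P_1\): \(T_3, T_6, T_5, T_1, T_2, T_4\); \(P_2\): \(T_1, T_4, T_2, T_3, T_6, T_5\)), which equals the lower bound \(\max\{\sum_j p_{1j}, \sum_j p_{2j}, \max_j (p_{1j} + p_{2j})\} = \max\{150, 150, 80\} = 150\).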

Now consider the flow shop model, in which each task has to be processed by all machines, but in the same, prespecified order. The schedule above does not satisfy this condition, since \(T_3\) is processed first on \(P_1\) and later on \(P_2\), while \(T_1\) is processed first on \(P_2\) and then on \(P_1\). Consider two machines, on which the makespan is to be minimized. The famous Johnson’s rule, first described in the early 1950s, does this. All jobs have to be processed first on \(P_1\) and then on \(P_2\), and the processing time of task \(T_j\) is \(p_{1j}\) on machine \(P_1\) and \(p_{2j}\) on machine \(P_2\).

Johnson’s Algorithm: For all jobs whose processing time on \(P_1\) is the same as or less than their processing time on \(P_2\) (i.e., \(p_{1j} \le p_{2j}\)), find the subschedule \(S_1\) with the tasks in nondecreasing order of their \(p_{1j}\) values. For all other jobs (i.e., those with \(p_{1j} > p_{2j}\)), determine the subschedule \(S_2\) with the tasks in nonincreasing order of their \(p_{2j}\) values. The sequence of jobs is then \((S_1, S_2)\).

Consider Example 6, where now each job has to be assembled first and then checked, i.e., processed on machine \(P_1\) first and then on \(P_2\). Tasks for which the condition \(p_{1j} \le p_{2j}\) holds are \(T_1\), \(T_2\), \(T_3\), and \(T_6\); those with \(p_{1j} > p_{2j}\) are \(T_4\) and \(T_5\). With the former four tasks in nondecreasing order of processing times on \(P_1\), we get the subsequence \(S_1 = (T_2, T_6, T_1, T_3)\), and with the latter two in nonincreasing order of processing times on \(P_2\), we get the subsequence \(S_2 = (T_4, T_5)\). The result is \((T_2, T_6, T_1, T_3, T_4, T_5)\), and the schedule is shown in the Gantt chart below:

The schedule length is \(C_{\max} = 175\). Since a flow shop is more restrictive than an open shop, the increase in the schedule length from 155 to 175 minutes is not surprising.
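Johnson's rule and the resulting flow shop makespan can be sketched as follows (names are our own). In a two-machine flow shop, \(P_1\) processes the jobs back to back, and \(P_2\) starts each job once both the machine and the job are ready.

```python
def johnson(jobs, a, b):
    """Johnson's rule: a[j], b[j] are the times of job j on the first and second machine."""
    first = sorted((j for j in jobs if a[j] <= b[j]), key=lambda j: a[j])                 # S1
    second = sorted((j for j in jobs if a[j] > b[j]), key=lambda j: b[j], reverse=True)   # S2
    return first + second

def flow_shop_makespan(seq, a, b):
    """Makespan of a two-machine flow shop processing the jobs in order seq."""
    t1 = t2 = 0
    for j in seq:
        t1 += a[j]                  # first machine never waits
        t2 = max(t2, t1) + b[j]     # second machine waits for itself and for the job
    return t2

p1 = [30, 15, 40, 30, 10, 25]   # assembly times, Example 6
p2 = [35, 20, 40, 20, 5, 30]    # checking times
seq = johnson(range(len(p1)), p1, p2)
print([f"T{j + 1}" for j in seq], flow_shop_makespan(seq, p1, p2))
```

It reproduces the sequence \((T_2, T_6, T_1, T_3, T_4, T_5)\) and \(C_{\max} = 175\). Python's sort stays stable with `reverse=True`, so ties within \(S_2\) keep index order.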

The last model is a job shop: not all tasks need to be performed on all machines, and the sequence in which a job is processed on the machines is job-specific. We will only consider two machines and minimize the makespan. Jackson described an exact algorithm for this in 1955. It uses Johnson’s (flow shop) algorithm as a subroutine.

Jackson’s Job Shop Algorithm: Divide the set of jobs into four categories:

\(J_1\) includes all jobs requiring processing only on \(P_1\),

\(J_2\) includes all jobs requiring processing only on \(P_2\),

\(J_{12}\) includes all jobs requiring processing first on \(P_1\) and then on \(P_2\), and

\(J_{21}\) includes all jobs requiring processing first on \(P_2\) and then on \(P_1\).

Apply Johnson’s rule to the jobs in \(J_{12}\), resulting in the sequence \(S_{12}\). Then apply the rule to the jobs in the set \(J_{21}\), but with \(p_{1j}\) and \(p_{2j}\) swapped; the result is the subsequence \(S_{21}\).

Jobs in \(J_1\) and \(J_2\) are sequenced in arbitrary order; denote their sequences by \(S_1\) and \(S_2\).

The job order on \(P_1\) is \((S_{12}, S_1, S_{21})\), and the job order on \(P_2\) is \((S_{21}, S_2, S_{12})\).

We modify Example 6 as displayed in the table:

Job #                    T1       T2       T3    T4    T5       T6
Processing time on P1    30       15       –     30    10       25
Processing time on P2    35       20       40    –     5        30
Processing sequence      P2, P1   P1, P2   P2    P1    P1, P2   P2, P1

We have \(J_1 = \{T_4\}\), \(J_2 = \{T_3\}\), \(J_{12} = \{T_2, T_5\}\), and \(J_{21} = \{T_1, T_6\}\). Since \(J_1\) and \(J_2\) have only one job each, the subsequences are \(S_1 = (T_4)\) and \(S_2 = (T_3)\). Applying Johnson’s algorithm to \(J_{12}\), we obtain the sequence \(S_{12} = (T_2, T_5)\). For \(J_{21}\), applying Johnson’s rule to \(T_1\) and \(T_6\) with the processing times \(p_{1j}\) and \(p_{2j}\) switched, we get \(S_{21} = (T_1, T_6)\) (with the times switched, both jobs fall into the second group and are ordered by nonincreasing time on \(P_1\), 30 before 25). The overall sequence on \(P_1\) is then \((S_{12}, S_1, S_{21}) = (T_2, T_5, T_4, T_1, T_6)\), while the overall sequence on \(P_2\) is \((S_{21}, S_2, S_{12}) = (T_1, T_6, T_3, T_2, T_5)\). The resulting schedule has an overall schedule length of \(C_{\max} = 130\) minutes:
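A sketch of Jackson's rule for the modified Example 6, together with a small evaluator that starts every operation as early as its machine and its job allow. The route encoding, the use of 0 for operations a job does not have, and all names are our own assumptions.

```python
def johnson(jobs, a, b):
    """Johnson's rule for a two-machine flow shop; a[j], b[j] = times on first/second machine."""
    first = sorted((j for j in jobs if a[j] <= b[j]), key=lambda j: a[j])
    second = sorted((j for j in jobs if a[j] > b[j]), key=lambda j: b[j], reverse=True)
    return first + second

def jackson(p1, p2, route):
    """Jackson's rule for a two-machine job shop.
    route[j] is (1,), (2,), (1, 2) or (2, 1); returns the job orders on P1 and P2."""
    jobs = range(len(route))
    s1 = [j for j in jobs if route[j] == (1,)]                        # arbitrary order
    s2 = [j for j in jobs if route[j] == (2,)]
    s12 = johnson([j for j in jobs if route[j] == (1, 2)], p1, p2)
    s21 = johnson([j for j in jobs if route[j] == (2, 1)], p2, p1)    # times swapped
    return s12 + s1 + s21, s21 + s2 + s12

def makespan(orders, p, route):
    """Start every operation as early as its machine and its job allow."""
    free = {1: 0, 2: 0}
    pos = {1: 0, 2: 0}
    finish = {}                                           # (job, machine) -> finish time
    while any(pos[m] < len(orders[m]) for m in (1, 2)):
        progressed = False
        for m in (1, 2):
            if pos[m] == len(orders[m]):
                continue
            j = orders[m][pos[m]]
            k = route[j].index(m)
            if k > 0 and (j, route[j][k - 1]) not in finish:
                continue                                  # earlier operation not placed yet
            ready = finish[(j, route[j][k - 1])] if k > 0 else 0
            finish[(j, m)] = free[m] = max(free[m], ready) + p[m][j]
            pos[m] += 1
            progressed = True
        if not progressed:
            raise ValueError("machine orders conflict with the job routes")
    return max(free.values())

p1 = [30, 15, 0, 30, 10, 25]      # 0 marks "not processed on P1"
p2 = [35, 20, 40, 0, 5, 30]       # 0 marks "not processed on P2"
route = [(2, 1), (1, 2), (2,), (1,), (1, 2), (2, 1)]
on_p1, on_p2 = jackson(p1, p2, route)
orders = {1: on_p1, 2: on_p2}
print(on_p1, on_p2, makespan(orders, {1: p1, 2: p2}, route))
```

It returns the orders \((T_2, T_5, T_4, T_1, T_6)\) on \(P_1\) and \((T_1, T_6, T_3, T_2, T_5)\) on \(P_2\) (printed as 0-based indices) and a makespan of 130 minutes.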