Draft

Face Recognition with Local Binary Pattern and Partial Matching

1.

Introduction

1.1 Motivation

1.2 Problem and Proposed Solution

1.3 Thesis Organization

2.

Related Work

2.1 Face Recognition

2.2 Local Binary Pattern

2.3 Partial Matching

3.

Implementation

3.1 Local Binary Pattern

3.2 Local Derivative Pattern

3.3 Partial Matching

3.4 Clustering

3.5 Multi-thread

4.

Experiment

4.1 Data Sets

4.2 Supervised Learning

4.3 Unsupervised Learning

5.

Conclusion

5.1 Discussion

5.2 Future Works

6.

Reference

Chapter 1

Introduction

1.1 Motivation

Face recognition has been one of the most popular topics in computer vision for more than three decades. Many researchers study how to improve accuracy in restricted environments, such as frontal faces under indoor lighting. Others focus on achieving high accuracy in uncontrolled environments, such as outdoor lighting or non-frontal faces. We belong to the latter group, and we focus on photos taken by everyday people.

When people go on vacation, they usually take a lot of pictures. We want to design a system that makes it easy for them to find out who is in the pictures, or who often appears in the same photos. In this case, we are dealing with photos taken by everyday people, which we call “Home Photos”. These home photos may contain a lot of noise or occlusion, the people in them may not be looking at the camera, and the luminance may not be consistent.

1.2 Problem and Proposed Solution

Given the home photos, we first use a “Face Detection” algorithm to obtain the face images. Then we use a “Face Alignment” method to crop and warp the faces so that each pair of eyes is at the same position and each face has the same size. We use only the gray value of each pixel.

We use Local Binary Pattern (LBP) and Local Derivative Pattern (LDP) to represent a face. These two methods encode each pixel as an integer that carries information about the gray values of the pixel and its neighborhood. We then define some regions and compute the histogram of those integers in each region. The histograms are the final representation of each face image. These methods are not easily affected by global changes of illumination or slight rotation of the face.

After we get the representation of each face, we cluster similar faces. We use the “Complete-Link Clustering” method to group faces. Complete-link clustering treats each face as its own cluster in the beginning, and at each step merges two clusters if the similarity of every pair of components across the two clusters is greater than a threshold. In this way, we can ensure that the components in the same cluster are similar enough.

We test LDP and LBP on three datasets: the AR dataset and two sets of home photos taken by ourselves. The accuracy of LDP is not worse than that of LBP on any of the three datasets.

Fig. 1 (a)-(e). The preparation steps of our system. First, given a home photo such as (a), we use face detection to get the face image, (b). Then we use face alignment to locate the eyes and other features; the result is shown in (c). We then rotate the face image according to the location of the eyes, as in (d). Finally, we crop the face images to the same size.

Fig. 2 (a)-(d). The process of our system. First, we take the normalized face images produced by the preparation steps, for instance (a). We divide each face image into overlapping patches, (b). In each patch, we use (c) as a descriptor of the image features. Finally, we get the matrix that describes the image, such as (d).

1.3 Thesis Organization

The remaining parts of the thesis are organized as follows: Section 2 reviews related work on face recognition. Section 3 presents the algorithms of our system. Section 4 shows our experimental results on three datasets and those of other algorithms. Section 5 is the conclusion.

Chapter 2

Related Works

In this chapter, we introduce related work on the following topics separately: face recognition methods other than Local Binary Patterns, LBP and its extensions, and partial matching.

2.1 Face Recognition

Because face recognition has been studied for several decades, the algorithms have changed again and again. H. Moon et al. [] use a PCA-based method to analyze face images. The PCA model extracts the most distinguishing parameters of the face images, so it can be used to reduce the dimension of the face images and build eigenfaces. These can then be used to recognize which subject an image belongs to.

Yi Ma et al. [] develop a series of Sparse Representation and Classification algorithms to deal with the face recognition problem. In this method, each face image is treated as a vector, and the key idea is that each face image vector can be written as a linear combination of other image vectors plus some error. To classify a face image, Sparse Representation and Classification first builds a matrix A from all the training image vectors and denotes the vector to classify as y. It then solves the linear system min ||Ax-y||; x can be taken as the weight of each training vector, so the larger xi is, the more likely y and the i-th training image Ii belong to the same subject. The same group extends the Sparse Representation system to uncontrolled environments []. In that paper, they define a warping parameter τ, which is some kind of transformation, so that each image vector y0 yields a warped vector y = y0 ∘ τ. Sparse Representation and Classification is one of the most robust face recognition algorithms; however, it takes a lot of time to solve the sparse system, and the more training data there is, the more time it needs.

Xiujuan Chai et al. [] develop another approach, called Local Linear Regression (LLR). They use one frontal face image and some non-frontal face images of specific poses as training data, and learn the translation or warping parameters that transform a non-frontal face image into a frontal one. Then, given testing images that include frontal and non-frontal faces, they can warp the non-frontal images into frontal images, which makes face recognition easier. In their study, the warping result for the upper part of the face is better than for the lower part, and the warping leads to some ghost effects.

2.2 Local Binary Pattern

Local Binary Pattern (LBP) is one of the most popular methods for face recognition. It was originally used in pattern analysis, but () [] use it in the face recognition area. It encodes each pixel of a gray-value image into a meaningful label by using the pixel's gray value as a threshold to analyze the relationship with its neighbors. The authors divide the input image into several regions, calculate a local histogram of the labels for each region, and combine the local histograms into one large spatial histogram. To compare any two images, they need only calculate the similarity of the spatial histograms using the weighted chi-square distance. The execution time of this algorithm is very short, and its accuracy on the AR dataset can be over 95%.

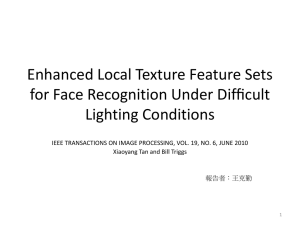

Xiaoyang Tan and Bill Triggs [] extend LBP in another way. They focus on the problems under difficult lighting conditions. Although Local Binary Patterns are robust to monotonic changes of illumination, lighting concentrated on part of the face affects the performance. X. Tan et al. develop a generalization of LBP, called Local Ternary Pattern (LTP), which is less sensitive to noise. LBP considers only the difference between a neighboring pixel and the middle pixel: if the difference is positive, it is labeled ‘1’, otherwise ‘0’. LTP has more choices. LTP defines a threshold, and if the absolute value of the difference is smaller than the threshold, it is labeled with a third choice, ‘2’. LTP then generates two labels for each pixel. The first one treats label ‘2’ as ‘1’ and generates a label like LBP. The other one treats label ‘2’ as ‘0’ and generates another label. Moreover, X. Tan et al. use gamma correction, a Difference of Gaussian (DoG) filter, masking, and contrast equalization, which improve the performance considerably.

Baochang Zhang et al. [] develop an extension of LBP in another way. They argue that LBP fails to extract more detailed information contained in the input object. B. Zhang et al. introduce a general framework to encode directional pattern features based on local derivative variations, called Local Derivative Pattern (LDP). It labels each pixel according to the gradient of this pixel and its neighbors. Using different derivative orders and different directions leads to different labels. The authors found that the second-order derivative with four specific directions performs best. This method can also be applied to Gabor filter results, called G_LDP. The performance of LDP is better than that of LBP. However, the dimension of LDP and G_LDP is much higher than that of LBP. The authors suggest that this may be solved by using LDA to reduce the dimension.

2.3 Partial Matching

Gang Hua et al. [] present a robust elastic and partial matching metric for face recognition. Recognizing faces under different poses, different facial expressions, and partial occlusion has always been a problem. The authors develop a system that divides each input image into N overlapping patches. When calculating the distance or similarity of any two images, they first calculate the minimum distance between each patch in one image and the corresponding patches (the patch in the same position and its neighbors) in the other image. However, they do not use the distances of all patches, but only the distance at one predefined rank. In this way, they ignore occluded parts and patches that differ strongly because of different expressions or poses.

Fig. The procedure of partial matching, quoted from []. G. Hua et al. use eye detection to identify the location of the eyes. The vector f is the 36-dimensional descriptor of each patch, which consists of 4 gradient values in each of 9 regions, as (f) shows.

3.

Implementation

In this section, we introduce the algorithms we used and experimented with. First, we introduce Local Binary Pattern (LBP) and Local Derivative Pattern (LDP). Then we describe partial matching and how to combine LBP and LDP with partial matching.

3.1 Local Binary Pattern

This method was originally used for texture description. It is computationally efficient and robust to monotonic gray-level changes. It has proven to be one of the best performing texture descriptors. T. Ahonen et al. [] use it for face recognition.

3.1.1 Local Binary Pattern and Its Extension

The LBP operator assigns a label to every pixel of a gray-level image. The label of a pixel is determined by the relationship between this pixel and its eight neighbors. Let I be the gray-level image and Z0 one pixel in this image. We can define the operator as a function of Z0 and its neighbors Z1, …, Z8 (see Fig. 1), which can be written as:

T = t(Z0, Z0-Z1, Z0-Z2, …, Z0-Z8).

However, the LBP operator is not directly affected by the gray value of Z0, so we can redefine the function as follows:

T ≈ t(Z0-Z1, Z0-Z2, …, Z0-Z8).

To simplify the function and ignore the scaling of the gray level, we use only the sign of each element instead of the exact value. The operator function becomes:

T ≈ t(s(Z0-Z1), s(Z0-Z2), …, s(Z0-Z8)),

where s(·) is a binary function defined as follows:

$$s(x) = \begin{cases} 1, & \text{if } x \ge 0 \\ 0, & \text{otherwise.} \end{cases}$$

We get the LBP result from the following function:

$$\mathrm{LBP} = \sum_{p=1}^{8} s(Z_0 - Z_p)\, 2^{p}.$$

To summarize the LBP operator: it takes the gray value of the center pixel as a threshold and compares each of its eight neighbors with it. Following the definition of s above, a neighbor whose gray value does not exceed the threshold is assigned ‘1’; otherwise, it is assigned ‘0’. We thus get eight bits, which we consider as the label of this pixel. The histogram of the labels can then be taken as the descriptor of the gray-level image.
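The labeling step above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not our actual implementation. It assumes that the neighbors Z1, …, Z8 are ordered clockwise starting from the top-left pixel (an ordering inferred from the derivative definitions in Sec. 3.2.1), and it uses the weight 2^(p-1) for neighbor Zp so that every label fits in 8 bits, which differs from the formula above by a constant factor of two. The names `NEIGHBOR_OFFSETS` and `lbp_labels` are hypothetical.

```python
import numpy as np

# (row, col) offsets of Z1..Z8, assumed clockwise from the top-left neighbor.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_labels(image):
    """Return the LBP label of every interior pixel of a 2-D gray image."""
    img = np.asarray(image, dtype=np.int32)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    labels = np.zeros_like(center)
    for p, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
        neighbor = img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        # s(Z0 - Zp) is 1 when the center is not darker than the neighbor.
        bit = (center - neighbor >= 0).astype(np.int32)
        labels += bit << p  # assumed weight 2^(p-1) for Zp, so labels are 0..255
    return labels
```

Border pixels are skipped because they do not have all eight neighbors; padding the image first would be an alternative.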

Fig. 1.

8-neighborhood around Z0

In order to deal with images of different sizes, T. Ojala et al. [] develop an extension of LBP that uses neighborhoods of different sizes. They define the notation (P, R), which means P samples on a circle of radius R. See Figure 2 for an example of circular neighborhoods. The LBP operator can be rewritten in a general form:

$$\mathrm{LBP}_{P,R} = \sum_{p=1}^{P} s(Z_0 - Z_p)\, 2^{p},$$

where Z1, …, ZP are the samples we take around Z0.

Fig. 2. An example of circular neighborhoods. (P, R) is (8, 1) in (a), (16, 2) in (b), and (8, 2) in (c).

Fig. 3. An example of an LBP code. (a) is the original gray value. (b) is the difference between each neighbor and the middle pixel. (c) keeps only the sign of (b). The final label of the middle pixel is “11010011”.

Another extension of the original LBP operator is called “uniform patterns”. A local binary pattern is called uniform if the binary string of its label contains at most two bitwise transitions from 0 to 1 or vice versa when the binary string is considered circular. For example, the patterns 11111111 (0 transitions) and 00001110 (2 transitions) are uniform, but the patterns 01010101 (8 transitions) and 01100110 (4 transitions) are not. In most cases, uniform patterns occur much more often than non-uniform patterns. Counting each non-uniform pattern in a separate bin decreases the performance, so we put all the non-uniform patterns in the same bin when calculating the histogram.
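The uniformity test can be checked with a short sketch. It counts circular transitions by XOR-ing a label with itself rotated by one bit, and then builds a lookup table that sends every non-uniform label to one shared bin. The function names are hypothetical and the code is only meant to illustrate the definition above.

```python
def circular_transitions(code, bits=8):
    """Count 0/1 transitions in `code` read as a circular bit string."""
    mask = (1 << bits) - 1
    rotated = ((code >> 1) | (code << (bits - 1))) & mask
    # A set bit in the XOR marks a position where adjacent bits differ.
    return bin(code ^ rotated).count("1")

def is_uniform(code, bits=8):
    """A pattern is uniform if it has at most two circular transitions."""
    return circular_transitions(code, bits) <= 2

def uniform_bin_table(bits=8):
    """Map each label to a bin; all non-uniform labels share the last bin."""
    uniform = [c for c in range(1 << bits) if is_uniform(c, bits)]
    table = {c: i for i, c in enumerate(uniform)}
    shared = len(uniform)
    return [table.get(c, shared) for c in range(1 << bits)]
```

For 8-bit labels the table uses 58 bins for the uniform patterns plus one shared bin, which gives the 59 bins mentioned in Sec. 3.1.2.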

Another variation of the original LBP operator is called “rotation”. It is defined as:

$$\mathrm{LBP}^{ri}_{P,R} = \min\{\mathrm{ROR}(\mathrm{LBP}_{P,R}, i) \mid i = 0, \ldots, P-1\},$$

where ROR(c, i) rotates c by i bits. This operator treats the binary string as a ring, and all the rotations of this ring are put in the same bin. For instance, the patterns 00110000 and 00001100 are considered the same, and the patterns 00101000 and 10100000 are also the same. However, the patterns 00110000 and 00101000 are not in the same bin. When implementing this operator, we rotate the binary string and return the minimal decimal value as the result. If two patterns return the same minimal value, they are treated as the same pattern. Otherwise, they are put in different bins.
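A minimal sketch of this operator, assuming 8-bit labels stored as Python integers (the helper names `ror` and `rotation_invariant` are hypothetical):

```python
def ror(code, i, bits=8):
    """Rotate the `bits`-wide integer `code` to the right by i positions."""
    mask = (1 << bits) - 1
    i %= bits
    return ((code >> i) | (code << (bits - i))) & mask

def rotation_invariant(code, bits=8):
    """Return the smallest value among all circular rotations of `code`."""
    return min(ror(code, i, bits) for i in range(bits))
```

Counting the distinct return values over all 256 labels gives 36, the number of bins used for rotation patterns in Sec. 3.1.2.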

3.1.2 Face Description with LBP

The LBP method provides a descriptor of the image. It computes a histogram of the LBP labels as follows:

$$H_i = \sum_{x,y} I\{\mathrm{LBP}(x, y) = i\}, \quad i = 0, \ldots, n-1,$$

where I{A} is 1 if A is true and 0 otherwise.

n is the number of bins. If we use the uniform patterns, n will be 59. If we use the

rotation patterns, n will be 36. And if we use both the uniform and rotation patterns, n

will be 9.

However, face images are different from typical texture images. The subregions in different parts of the face, such as the eyes, nose, or lips, are totally different from each other. If we ignore those differences and use only one descriptor to represent a face, it tends to average over the whole image area, so the performance drops. In addition, local features are more robust against variations in pose or illumination. For these reasons, we divide the face image into local regions and extract LBP descriptors from each region independently. The local regions can be rectangles or circles, and they can overlap. See Figure 4 for examples of face images divided into rectangular regions.

If we divide the face image into m local regions, denoted R0, R1, …, Rm-1, we can calculate the histogram separately in each region. The enhanced histogram, composed of the histograms of R0, R1, …, Rm-1, has size m × n. The histogram is therefore modified as follows:

$$H_{i,j} = \sum_{x,y} I\{\mathrm{LBP}(x, y) = i \ \text{and}\ (x, y) \in R_j\},$$

where I{A} is 1 if A is true and 0 otherwise.

We can summarize the LBP system briefly: the pixel values in the face image determine the nearby LBP labels, the labels make up the histogram of each local region, and the histograms of all the local regions form the spatially enhanced histogram, which is the descriptor of the face image.
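As a rough illustration of the spatially enhanced histogram, the sketch below splits a label image into a grid of non-overlapping rectangular regions and counts the labels in each one. The regular grid, the function name `spatial_histogram`, and its parameters are assumptions made for this example; the regions in our system may overlap and differ in size. Labels are assumed to be integers in the range 0 to n_bins - 1.

```python
import numpy as np

def spatial_histogram(labels, grid=(2, 2), n_bins=256):
    """Return an (m, n_bins) array with one label histogram per grid region."""
    labels = np.asarray(labels)
    row_groups = np.array_split(np.arange(labels.shape[0]), grid[0])
    col_groups = np.array_split(np.arange(labels.shape[1]), grid[1])
    hist = np.zeros((grid[0] * grid[1], n_bins), dtype=np.int64)
    for a, rows in enumerate(row_groups):
        for b, cols in enumerate(col_groups):
            region = labels[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
            # Row j of `hist` holds the counts H_{i,j} for region R_j.
            counts = np.bincount(region.ravel(), minlength=n_bins)
            hist[a * grid[1] + b] = counts[:n_bins]
    return hist
```

Flattening the returned array row by row gives the concatenated descriptor of length m × n.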

Fig. 4 Examples of local regions. The local regions do not need to be the same size; for example, the lowest local regions in (c) are smaller than the upper regions.

Fig. 5 An example of a spatially enhanced histogram. Each green box in (a) is a local region. We calculate the histogram of each local region independently; the histograms are shown in (b). All the local histograms are concatenated to form the spatially enhanced histogram.

Fig. 6 Examples of the weights used in the weighted chi-square distance. The regions in the red box are the border regions of the face images, and we give them a lower weight. The regions in the green box are in the middle of the face images, so they are more reliable and we give them a higher weight.

3.1.3 Similarity

After we have the descriptors of all face images, we need to evaluate the similarity of any two face images. In the LBP system, we use the weighted chi-square distance:

$$\chi^2_\omega(A, B) = \sum_{j=0}^{m-1} \omega_j \left[ \sum_{i=0}^{n-1} \frac{(A_{i,j} - B_{i,j})^2}{A_{i,j} + B_{i,j}} \right],$$

where A and B are the spatially enhanced histograms of two face images, and ωj is the weight of local region j. In our system, we set the weight of the local regions on the border of the image to ‘1’ and the weight of the other regions to ‘2’, because the face images cropped from the original home photos easily contain some background. Lowering the weight of the border regions reduces the influence of the background.
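The weighted chi-square distance can be sketched as follows. The histograms are assumed to be stored as arrays of shape (m, n), one row per local region, which is the transpose of the index order used in the formula. Bins that are empty in both histograms are skipped to avoid division by zero; this handling and the function name are assumptions of the sketch.

```python
import numpy as np

def weighted_chi_square(A, B, weights):
    """Weighted chi-square distance between two (m, n) region histograms."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    num = (A - B) ** 2
    den = A + B
    # Bins that are empty in both histograms contribute nothing.
    terms = np.divide(num, den, out=np.zeros_like(num), where=den > 0)
    return float(np.dot(np.asarray(weights, dtype=float), terms.sum(axis=1)))
```

For example, with a border region of weight 1 and a central region of weight 2, `weighted_chi_square([[1, 0], [2, 2]], [[0, 1], [2, 0]], [1, 2])` sums 1 × 2 and 2 × 2.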

3.2 Local Derivative Pattern

Local Derivative Pattern is a general framework to encode directional pattern features from local derivative variations. The (n-1)th-order local derivative variations are encoded into the nth-order LDP. Under this view, LBP can be considered as a first-order local derivative pattern in all directions. Compared to LBP, LDP can store more information about the gray-level image.

3.2.1 Second-order Local Derivative Pattern

As described above, the nth-order LDP is encoded from the (n-1)th-order local derivative variations, so calculating the second-order LDP requires the first-order derivatives. Given an image I(Z), we calculate the first-order derivatives along the 0°, 45°, 90° and 135° directions, denoted as I’α(Z) where α = 0°, 45°, 90° and 135°. Let Z0 be one point in I(Z), and let Zi, i = 1, …, 8, be the 8 neighboring points around Z0 (see Fig. 1). The four first-order derivatives at Z = Z0 are

I’0°(Z0) = I(Z0) – I(Z4)

I’45°(Z0) = I(Z0) – I(Z3)

I’90°(Z0) = I(Z0) – I(Z2)

I’135°(Z0) = I(Z0) – I(Z1)

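The second-order directional LDP can be defined as

$$\mathrm{LDP}^2_\alpha(Z_0) = \{ f(I'_\alpha(Z_0), I'_\alpha(Z_1)),\ f(I'_\alpha(Z_0), I'_\alpha(Z_2)),\ \ldots,\ f(I'_\alpha(Z_0), I'_\alpha(Z_8)) \}, \quad \alpha = 0^\circ, 45^\circ, 90^\circ, 135^\circ,$$

where f(·, ·) is a binary function defined below:

$$f(a, b) = \begin{cases} 0, & \text{if } a \cdot b > 0 \\ 1, & \text{if } a \cdot b \le 0. \end{cases}$$

The second-order LDP, LDP2(Z), is defined as a 32-bit sequence, which is the concatenation of the four 8-bit directional LDPs:

$$\mathrm{LDP}^2(Z) = \{ \mathrm{LDP}^2_\alpha(Z) \mid \alpha = 0^\circ, 45^\circ, 90^\circ, 135^\circ \}.$$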
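The second-order directional LDP can be sketched with NumPy as shown below. This is an illustrative sketch under stated assumptions: the neighbors Z1, …, Z8 are taken clockwise from the top-left pixel (consistent with the derivative definitions above, where Z4 is the right neighbor and Z2 the upper neighbor), and the bit for neighbor Zp is stored at bit position p-1 of an integer code. All names in the code are hypothetical.

```python
import numpy as np

# (row, col) offsets of Z1..Z8, assumed clockwise from the top-left neighbor.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]
# Neighbor subtracted in each direction: Z4 (0), Z3 (45), Z2 (90), Z1 (135).
DIRECTION_OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def shifted(a, dr, dc):
    """Interior of `a` displaced by (dr, dc); the result has shape (h-2, w-2)."""
    h, w = a.shape
    return a[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]

def first_derivative(img, angle):
    """First-order derivative I'(Z0) = I(Z0) - I(neighbor in direction angle)."""
    dr, dc = DIRECTION_OFFSETS[angle]
    return shifted(img, 0, 0) - shifted(img, dr, dc)

def ldp2_direction(image, angle):
    """8-bit second-order LDP code in one direction for every valid pixel."""
    img = np.asarray(image, dtype=np.int64)
    d = first_derivative(img, angle)      # loses a 1-pixel border
    center = shifted(d, 0, 0)             # loses one more border pixel
    code = np.zeros_like(center)
    for p, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
        # f(a, b) = 1 when the two derivatives do not share the same sign.
        bit = (center * shifted(d, dr, dc) <= 0).astype(np.int64)
        code |= bit << p
    return code
```

Calling `ldp2_direction` for the four angles and keeping the four codes side by side gives the 32-bit second-order LDP of each pixel.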

Fig. 7. Meanings of “0” and “1” for the second-order LDP. ref. 1 is Z0, and ref. 2 is one of the 8 neighbors of Z0. The arrows indicate the gradient at each point. (a) The result in both cases is “0”. (b) The result in both cases is “1”.

Figure 7 illustrates the transition from gray-scale images to binary codes. If the local pattern is a “gradient turning” pattern (Fig. 7(b)), it is labeled “1”. Otherwise, if the gradient is monotonically increasing (Fig. 7(a-2)) or decreasing (Fig. 7(a-1)) at both Z0 and its neighbor, the result is labeled “0”.

Figure 8 demonstrates the second-order LDP in the 0° direction. First we calculate the first derivative at each pixel. Then we calculate the product of the first derivatives of the operating pixel and each of its neighbors. In 0°, we get LDP0° = “01010011”. In the same way, we get LDP45° = “10001101”, LDP90° = “11010010” and LDP135° = “01000010”.

Fig. 8. An example of the 0° second-order LDP. (a) is the original gray value in a local pattern. (b) is the first derivative of each pixel in 0°. (c) is the product of I’0°(Z0) and I’0°(Zi) for each neighbor Zi. So we get LDP0° = “01010011”.

3.2.2 Nth-order Directional Local Derivative Pattern

Like the second-order LDP, the third-order LDP is easy to calculate. We first need to calculate the second derivatives of the image, which we define as follows:

I”0°(Z0) = 2*I(Z0) – I(Z4) – I(Z8)

I”45°(Z0) = 2*I(Z0) – I(Z3) – I(Z7)

I”90°(Z0) = 2*I(Z0) – I(Z2) – I(Z6)

I”135°(Z0) = 2*I(Z0) – I(Z1) – I(Z5).

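The LDP operator becomes:

$$\mathrm{LDP}^3_\alpha(Z_0) = \{ f(I''_\alpha(Z_0), I''_\alpha(Z_1)),\ f(I''_\alpha(Z_0), I''_\alpha(Z_2)),\ \ldots,\ f(I''_\alpha(Z_0), I''_\alpha(Z_8)) \}, \quad \alpha = 0^\circ, 45^\circ, 90^\circ, 135^\circ,$$

$$\mathrm{LDP}^3(Z) = \{ \mathrm{LDP}^3_\alpha(Z) \mid \alpha = 0^\circ, 45^\circ, 90^\circ, 135^\circ \}.$$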

Figure 9 shows the same example as for the second-order LDP. We calculate the second derivative of each pixel and then the third-order LDP. Figure 9 shows the third-order LDP in 0°. In the same way, we get the third-order LDP in 45°, 90° and 135°; they are “00100000”, “11010010”, and “00101100”.

Fig. 9. The third-order LDP. (a) shows the same example as Fig. 8. (b) shows the second-order derivatives at the middle nine pixels. Using the function (), we get the result in (c). So the third-order LDP in 0° is “01011011”.

As with the second-order and third-order LDP, to calculate the nth-order LDP we need the (n-1)th-order derivatives along the 0°, 45°, 90° and 135° directions, denoted as I(n-1)α(Z), α = 0°, 45°, 90° and 135°. The nth-order LDP in direction α at Z = Z0, LDPnα(Z0), is defined as

$$\mathrm{LDP}^n_\alpha(Z_0) = \{ f(I^{(n-1)}_\alpha(Z_0), I^{(n-1)}_\alpha(Z_1)),\ f(I^{(n-1)}_\alpha(Z_0), I^{(n-1)}_\alpha(Z_2)),\ \ldots,\ f(I^{(n-1)}_\alpha(Z_0), I^{(n-1)}_\alpha(Z_8)) \},$$

and the nth-order LDP is a local pattern string defined as

$$\mathrm{LDP}^n(Z) = \{ \mathrm{LDP}^n_\alpha(Z) \mid \alpha = 0^\circ, 45^\circ, 90^\circ, 135^\circ \}.$$

Even though [] states that the function () is not easily affected by noise, in our experiments, if the noise is too large, the performance of LDP is even worse than that of LBP. To decrease the influence of this noise, we first apply a bilateral filter to smooth it. We have also tried Gaussian smoothing; its performance is better than on the noisy images.

3.2.3 Histogram

We calculate one histogram for each direction, so there are four histograms for each image. We use the rotation pattern described in Sec. 3.1.1, so the number of bins in each direction is 36.

We also use the spatially enhanced histogram, just as with the LBP operator.

3.2.4 Comparison with LBP

The advantages of the high-order LDP over LBP can be briefly summarized below.

1. LDP can provide a more detailed description of a face by encoding the high-order derivatives, whereas LBP describes only the pattern of gray-scale values, not the gradient.

2. LBP encodes only the relationship between the central point and its neighbors, whereas LDP encodes the various distinctive spatial relationships in a local region and therefore contains more spatial information.

3.3 Partial Matching

We have described the representation of face images above. However, face images of the same subject are sometimes not very similar because of occlusion or noise. We therefore look for an algorithm that can ignore the occlusion. Partial matching is one such algorithm.

3.3.1 Partial Matching

If we sample a local region every s pixels and obtain N = K × K local regions in total for one face image, we have a descriptor for the whole image:

$$F = [\vec{f}_{mn}], \quad 1 \le m, n \le K,$$

where $\vec{f}_{mn}$ corresponds to the descriptor extracted from the local region located at (m·s, n·s).

Now, given two images I(1) and I(2), we first calculate the distance between each local descriptor $\vec{f}_{ij}$ in I(1) and its neighbors in I(2) as follows:

$$d(f^{(1)}_{ij}) = \min_{k,l:\ |i \cdot s - k \cdot s| \le r,\ |j \cdot s - l \cdot s| \le r} \left\| f^{(1)}_{ij} - f^{(2)}_{kl} \right\|_1,$$

where $f^{(1)}_{ij}$ and $f^{(2)}_{kl}$ are the local descriptors in images I(1) and I(2), and r controls how many neighbors we allow each local region to match. Then we sort the results:

$$[d_1, d_2, \ldots, d_{\alpha N}, \ldots, d_N] = \mathrm{Sort}\left\{ d(f^{(1)}_{ij}) \right\}_{i,j=1}^{K}.$$

We define

$$d(I^{(1)} \to I^{(2)}) = d_{\alpha N}$$

as the directional distance from I(1) to I(2), where α is a control parameter for partial matching. In this way, partial matching can find out which regions are similar and which are different, and we can change α to control which ranked distance is used. Similarly, we can define the distance from I(2) to I(1). Often, d(I(1)→I(2)) is different from d(I(2)→I(1)). To make the measure symmetric, we define the distance between two images as

$$D(I^{(1)}, I^{(2)}) = \max\{ d(I^{(1)} \to I^{(2)}),\ d(I^{(2)} \to I^{(1)}) \}.$$
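The partial matching distance can be sketched as follows. The descriptors of one image are assumed to be stored in an array of shape (K, K, D), local distances use the L1 norm, and the rank αN is rounded up to the next integer; the rounding rule and all function names are assumptions of this sketch rather than details of our implementation.

```python
import math
import numpy as np

def directional_distance(F1, F2, s, r, alpha):
    """Directional distance d(I1 -> I2) for descriptor grids of shape (K, K, D)."""
    K = F1.shape[0]
    reach = r // s  # largest index offset with |i*s - k*s| <= r
    dists = []
    for i in range(K):
        for j in range(K):
            window = F2[max(0, i - reach):i + reach + 1,
                        max(0, j - reach):j + reach + 1]
            # L1 distance to every allowed neighbor; keep the best match.
            l1 = np.abs(window - F1[i, j]).sum(axis=-1)
            dists.append(float(l1.min()))
    dists.sort()
    rank = min(len(dists), max(1, math.ceil(alpha * len(dists))))
    return dists[rank - 1]

def partial_matching_distance(F1, F2, s, r, alpha):
    """Symmetric distance D(I1, I2): the larger of the two directional distances."""
    F1 = np.asarray(F1, dtype=float)
    F2 = np.asarray(F2, dtype=float)
    return max(directional_distance(F1, F2, s, r, alpha),
               directional_distance(F2, F1, s, r, alpha))
```

With α = 0.5, a single badly matching patch (for example an occluded one) among four patches does not affect the result, while α = 1.0 uses the worst patch distance.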

Fig. Example of local regions. If the width of a local region is n and we sample a local region every s pixels, we get the first two local regions as the image shows. The green box shows the first local region; moving to the right, the red box shows the second region.

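$$\begin{bmatrix} f_{11} & f_{12} & \cdots & f_{1K} \\ f_{21} & f_{22} & \cdots & f_{2K} \\ \vdots & \vdots & \ddots & \vdots \\ f_{K1} & f_{K2} & \cdots & f_{KK} \end{bmatrix}$$

Fig. Example of the representation of a face image. Every local region yields a descriptor, and we combine those descriptors to form a descriptor for the face image.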

Fig. Example of partial matching for a local region. The green boxes in the two images are in the same location. To match the green box in (a), we search some neighbors of the green box in (b), such as the yellow boxes and the green box in (b). We can use the value of r to control the size of the red box in (b). We then get the distance between the local region in (a) and the most similar local region in (b).

3.3.2 Partial Matching with LBP

As described in Sec. 3.1, the LBP operator computes a histogram to represent each local region, so it is easy to combine LBP and partial matching. When implementing these two methods together, we first calculate the LBP label of each pixel. Then, in each local region, we compute a histogram independently and take it as the vector fij. The remaining procedure is the same as in Sec. 3.3.1. For each local region in I(1), we find the most similar region in I(2). We then sort the distances of all local regions and set the distance from I(1) to I(2) to the distance at rank αN.

3.3.3 Advantage of Partial Matching

One of the most common problems when dealing with home photos is occlusion. People sometimes wear sunglasses or hats, which may cover the eyes of the subjects. In traditional LBP and LTP, the features around the eyes and eyebrows are the most important. Partial matching, in contrast, looks for similar local regions, so it can reduce the influence of the occlusion.

3.4 Clustering

Once we have the similarity of any two images, we can divide the images into several clusters. We have tried nearest neighbor, kNN, and complete-link clustering. We introduce each method in this section.

3.4.1 Nearest Neighbor

In this algorithm, we process the images sequentially. Initially, there are no clusters. When the first image comes in, we put it into the first cluster. When the second image comes, we calculate the similarity between the first cluster and the second image. If they are similar enough, that is, the similarity is larger than a threshold, we add the second image to the first cluster. If they are not similar enough, we build a new cluster and add the second image to it. In the same way, the nth image is compared to the existing clusters. If the similarity between an existing cluster and the nth image is larger than the threshold, we add the nth image to that cluster. Otherwise, we build a new cluster that contains only one component, the nth image. Note that we take the representation of a cluster to be the average of its components, so that we can calculate the similarity between an image and a cluster just like the similarity between two images.

The advantage of this algorithm is that it is the fastest algorithm in our tests. It does not need the similarity of every pair of images, only the similarity between each image and the existing clusters. However, the accuracy of this method may be affected by the order of the face images.
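A minimal sketch of this sequential procedure is given below. It assumes that descriptors are numeric vectors, that `similarity` is any function returning larger values for more similar inputs, and that an image matching several clusters joins the most similar one; the last point is an assumption, since the description above does not say how ties between clusters are resolved. The function name is hypothetical.

```python
import numpy as np

def sequential_clustering(descriptors, similarity, threshold):
    """Assign each descriptor, in order, to an existing cluster or a new one."""
    clusters = []  # lists of member indices
    means = []     # running average descriptor of each cluster
    for idx, d in enumerate(descriptors):
        d = np.asarray(d, dtype=float)
        best, best_sim = None, threshold
        for c, mean in enumerate(means):
            sim = similarity(mean, d)
            if sim > best_sim:
                best, best_sim = c, sim
        if best is None:
            # No existing cluster is similar enough: start a new one.
            clusters.append([idx])
            means.append(d.copy())
        else:
            clusters[best].append(idx)
            # Update the cluster average incrementally.
            means[best] += (d - means[best]) / len(clusters[best])
    return clusters
```

Because each image is compared only with the cluster averages seen so far, feeding the same images in a different order can produce different clusters.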

3.4.2 KNN

KNN is one of the most popular clustering algorithms. In this algorithm, we need to give the system the value of k. In the initial step, we randomly select k of the N images as the seeds of kNN. Then we calculate the similarity between all images and the seeds. If the similarity between the ith image and the jth seed is the largest among all seeds, we assign the ith image to the jth cluster. In this way, every image is assigned to a cluster. We then take the mean of each cluster as the new seed of this cluster and calculate the similarity between images and seeds again. We repeat this procedure until the cluster assignment no longer changes.

The advantage of this algorithm is that calculating the similarity between seeds and images is quick in each iteration. However, it might need many iterations to converge. Even worse, it might reach a local minimum of the system rather than the optimal result. In addition, the result may be influenced by the randomly selected images in the initial step.
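The iteration described above, which follows the same scheme as what is commonly called k-means, can be sketched as follows. To keep the example reproducible, the initial seeds are passed in explicitly instead of being drawn at random; this, the iteration cap, and the function name are assumptions of the sketch.

```python
import numpy as np

def seeded_clustering(descriptors, seed_indices, similarity, max_iter=100):
    """Alternate between assigning images to seeds and re-averaging the seeds."""
    X = np.asarray(descriptors, dtype=float)
    seeds = X[list(seed_indices)].copy()
    assignment = None
    for _ in range(max_iter):
        # Assign every image to the most similar seed.
        new_assignment = np.array(
            [int(np.argmax([similarity(x, s) for s in seeds])) for x in X])
        if assignment is not None and np.array_equal(new_assignment, assignment):
            break  # the cluster assignment no longer changes
        assignment = new_assignment
        # Replace each seed by the mean of its current members.
        for c in range(len(seeds)):
            members = X[assignment == c]
            if len(members) > 0:
                seeds[c] = members.mean(axis=0)
    return assignment.tolist()
```

Running the function with different `seed_indices` on the same data is a simple way to observe the dependence on the initial step.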

Fig. The disadvantage of kNN. If we sample a and f as the initial seeds, we get the optimal clustering result, as in (b). However, if we sample a and d as the initial seeds, we get only a local minimum, as in (c).

3.4.3 Complete-Link Clustering

The clustering algorithms described above are not perfect, so we tried hierarchical clustering algorithms. We use complete-link hierarchical clustering instead of single-link hierarchical clustering, because single-link clustering too easily merges all the subjects into the same cluster. Complete-link clustering, in contrast, ensures that all the components in the same cluster have a strong relationship with each other. The algorithm is described in the following paragraph.

The main point of complete-link clustering is to build a tree according to the pairwise relationships between the components of any two clusters. In the initial step, we calculate the similarity between every two identities in the dataset and sort the similarities. We also take each identity as a cluster and put it in a leaf node. In the first step, we check the most similar pair of identities. Because each of their clusters contains only one component, the identity itself, all the components in these two clusters are similar enough, and we can merge the two clusters. In the tree structure, we build a parent node for these two leaf nodes. In the second step, we check the second most similar pair of identities. If all other pairs between their two clusters have already been checked, which means their similarities are larger than that of the pair we are processing now, we merge these two clusters and build a new parent node for the two cluster nodes. Otherwise, we do nothing. We repeat the second step until all pairs are processed. We then get a tree whose root represents all the identities in one cluster, and whose other nodes each represent some identities in one cluster.

The worst-case complexity of complete-link clustering is O(n² log n) for n identities, because it needs to sort n² pairs. Despite the high complexity, complete-link clustering performs better than kNN and nearest neighbor.
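The pair-by-pair procedure can be sketched as shown below. Instead of building the whole tree, this sketch stops at a similarity threshold and returns the resulting flat clusters. The function name and the similarity values in the usage note are made up for illustration.

```python
def complete_link_clusters(similarity, threshold):
    """Merge clusters pair by pair, in order of decreasing similarity."""
    n = len(similarity)
    cluster_of = list(range(n))
    members = {i: {i} for i in range(n)}
    pairs = sorted(((similarity[i][j], i, j)
                    for i in range(n) for j in range(i + 1, n)), reverse=True)
    processed = set()
    for sim, i, j in pairs:
        if sim <= threshold:
            break  # the remaining pairs are not similar enough
        processed.add((i, j))
        a, b = cluster_of[i], cluster_of[j]
        if a == b:
            continue
        cross = [(min(x, y), max(x, y)) for x in members[a] for y in members[b]]
        # Merge only if every cross pair has already been processed.
        if all(pair in processed for pair in cross):
            members[a] |= members[b]
            for y in members[b]:
                cluster_of[y] = a
            del members[b]
    return sorted(sorted(m) for m in members.values())
```

With four identities A, B, C, D indexed 0 to 3, hypothetical similarities AD = 9, BD = 8, AB = 7, CD = 4, BC = 3, AC = 2, and a threshold of 5, the pairs are processed in the order AD, BD, AB, so A, B, and D end up in one cluster and C stays alone.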

Fig. Example of complete-link clustering. (a) shows the similarities of four identities. (b) shows the sorted similarities. (c) shows the initial tree nodes. (d)-(i) are the processing steps of complete-link clustering. First, we deal with the AD pair. Because A and D each form a cluster by themselves, we can merge them into the same cluster and build a new node (the red one). Second, we deal with BD. However, A and D are in the same cluster, so we need to consider the similarity of AB, too. AB has not been handled yet, which means its similarity is smaller than that of BD, so we do nothing in this step. Third, we deal with the AB pair. In this step, we check the BD pair, and we know that the similarity of BD is larger than that of AB, so we can merge the AD cluster and the B cluster, as (f) shows. The following steps are the same as the second and third steps. In the sixth step, we get the final result tree.

Fig. If we set a threshold of 5, which means that only pairs whose similarity is
larger than 5 are accepted into the same cluster, we get the result shown in (a):
A, B, and D are in the same cluster, and C forms a cluster by itself.
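The complete-link procedure described in this section can be sketched in Python as follows. This is only a minimal illustration, not our actual implementation: the function name `complete_link`, the dictionary-based input, and the similarity values in the usage note are assumptions chosen so that the processing order matches the four-identity example (AD, BD, AB, and so on). The sketch favors clarity over the stated complexity, since it re-checks all cross-cluster pairs at every step.

```python
def complete_link(similarity, threshold=None):
    """Complete-link clustering over a sorted list of pairwise similarities.

    similarity: dict mapping frozenset({a, b}) -> similarity score.
    threshold:  if given, only pairs with similarity larger than it are used.
    Returns (clusters, merges); merges lists (merged_cluster, score) in order.
    """
    items = {x for pair in similarity for x in pair}
    # Initially, every identity is its own cluster (a leaf node).
    cluster_of = {x: frozenset([x]) for x in items}
    seen = set()    # pairs that have already been processed
    merges = []     # internal tree nodes, in creation order

    ordered = sorted(similarity.items(), key=lambda kv: kv[1], reverse=True)
    for pair, score in ordered:
        if threshold is not None and score <= threshold:
            break   # all remaining pairs are not similar enough
        seen.add(pair)
        a, b = tuple(pair)
        ca, cb = cluster_of[a], cluster_of[b]
        if ca == cb:
            continue
        # Merge only if every cross pair between the two clusters has
        # already been processed, i.e. is at least as similar as this pair.
        if all(frozenset((x, y)) in seen for x in ca for y in cb):
            merged = ca | cb
            for x in merged:
                cluster_of[x] = merged
            merges.append((merged, score))
    return set(cluster_of.values()), merges
```

With hypothetical similarities AD=9, BD=8, AB=7, CD=4, AC=3, BC=2, calling `complete_link(sim)` merges everything into one root after the sixth pair, while `complete_link(sim, threshold=5)` stops early and leaves the clusters {A, B, D} and {C}.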

3.5 Multi-thread

All the steps in our system can be summarized as follows.
The first three steps are performed for each face image, and their results are
independent of other images. The fourth step, calculating the similarity of two
images, is likewise independent of all images other than those two. We can therefore
use multiple threads to perform these steps in parallel. In our implementation, we
use four threads on a quad-core system, which gives a speedup of more than two times.
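The structure of this parallelization can be sketched with Python's standard `concurrent.futures` module. The sketch is illustrative only: `extract_features` and `similarity` are hypothetical stand-ins (a 4-bin gray-value histogram and a histogram intersection) for the per-image steps and the pairwise step of our system. Note also that in CPython, threads do not speed up pure-Python CPU-bound work because of the global interpreter lock, so the code shows the task decomposition rather than the measured speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import combinations


def extract_features(image):
    """Hypothetical per-image step: a 4-bin histogram of gray values 0..255."""
    hist = [0, 0, 0, 0]
    for value in image:
        hist[value // 64] += 1
    return hist


def similarity(f1, f2):
    """Hypothetical pairwise step: histogram intersection of two features."""
    return sum(min(a, b) for a, b in zip(f1, f2))


def pairwise_similarities(images, workers=4):
    """Run the per-image and per-pair steps on a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each image is processed independently of all other images.
        features = list(pool.map(extract_features, images))
        # Each pair depends only on the features of its two images.
        pairs = list(combinations(range(len(images)), 2))
        scores = pool.map(
            lambda p: similarity(features[p[0]], features[p[1]]), pairs
        )
        return dict(zip(pairs, scores))
```

Because every task reads only its own inputs, no locking is needed; the same decomposition applies to any worker pool.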

4.

Experiment

4.1 Data sets

In our experiments, we mainly use three data sets. The first is the AR data set. We
use 7 or 14 images per subject, with different expressions and different lighting. In
total, we use 881 images of 120 individual subjects. Although the subjects may have
different expressions, they still face the camera and are at almost the same
position. The other two data sets were provided by members of our laboratory. We
asked them to provide photos they took on vacation with friends, so there may be
several subjects in one photo. As mentioned before, we call these photos “Home
Photos”. In these home photos, subjects may appear in different parts of the image,
may not face the camera, may have different poses, and may be under different
lighting. To deal with these problems, we first detect where the human faces are. We
then apply face alignment to each detected face, cropping and warping each face
image until the eyes are located at almost the same position and all face images have
the same size. The first home-photo data set (Home Photos I) contains 309 images of
5 subjects, 2 males and 3 females. The second home-photo data set (Home Photos II)
contains 838 images of 8 subjects, 4 males and 4 females. In most of the experiments,
we focus on the two home-photo data sets.

Fig. Examples of the AR data set. Panels: different expression, different lighting.

Fig. Examples of the first home-photo data set. Panels: non-frontal image, different
expression, different lighting, occlusion, blur.

Fig. Examples of the second home-photo data set. Panels: non-frontal image, different
expression, different lighting, occlusion, blur.

4.2 Supervised Learning

In this section, we introduce our supervised learning experiment. For each data set,
we randomly select half of the face images per subject for training and use the
remaining half for testing. We classify each image with the k-nearest-neighbor (kNN)
method. We repeat the experiment five times and report the average performance. The
results are shown below.

LBP

AR

Home Photos I

Home Photos II

85.3521%

92.7044%

93.1783%

LDP

LBP + partial

matching

89.8113%

94.6479%

LDP + partial

92.956%

95.7364%

85.9119%

matching

Table.

LBP

The result of supervised learning.

AR

Home Photos I

Home Photos II

8.924s / 1.8056s

0.713s / 2.422s

7.725s / 12.303s

LDP

LBP + partial

matching

3.909s / 12.718s

220.840s /

11764.903s

LDP + partial

matching

Table.

59.941s /

1381.247s

150.352s /

4136.810s

364.938s /

9033.929s

Execution time of supervised learning. In each cell, the first value is the training
time and the second value is the testing time.

One can see that the accuracy of LDP with partial matching is higher than that of all
other methods. Furthermore, comparing the results of LBP with and without partial
matching shows that the accuracy with partial matching is higher, and the improvement
is largest on the AR data set. This tells us that partial matching is useful in face
recognition. However, the computation time of LBP with partial matching is much
longer than that of pure LBP, even though we use multithreading to compute the LBP
and the similarity. In our experiment, the execution time of LBP with partial
matching is about 200 minutes. This is because computing the distance between the
representations of two images takes 250 ms with partial matching but only 10 ms with
pure LBP. This is a disadvantage if we want to use partial matching in real-time
projects.
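The evaluation protocol of this section (a random half split per subject, kNN classification, five repetitions) can be sketched as follows. This is a minimal sketch under stated assumptions, not our experiment code: the function names, the `distance` callback, the default `k=1`, and the fixed random seed are all illustrative choices that the text does not specify.

```python
import random
from collections import Counter, defaultdict


def split_half_per_subject(labels, rng):
    """Randomly put half of each subject's images in training, the rest in testing."""
    by_subject = defaultdict(list)
    for index, label in enumerate(labels):
        by_subject[label].append(index)
    train, test = [], []
    for indices in by_subject.values():
        indices = indices[:]
        rng.shuffle(indices)
        half = len(indices) // 2
        train += indices[:half]
        test += indices[half:]
    return train, test


def knn_predict(query, train, features, labels, distance, k):
    """Label a query image by majority vote among its k nearest training images."""
    nearest = sorted(
        train, key=lambda i: distance(features[query], features[i])
    )[:k]
    return Counter(labels[i] for i in nearest).most_common(1)[0][0]


def average_accuracy(features, labels, distance, k=1, runs=5, seed=0):
    """Repeat the random split `runs` times and average the test accuracy."""
    rng = random.Random(seed)
    accuracies = []
    for _ in range(runs):
        train, test = split_half_per_subject(labels, rng)
        correct = sum(
            knn_predict(q, train, features, labels, distance, k) == labels[q]
            for q in test
        )
        accuracies.append(correct / len(test))
    return sum(accuracies) / runs
```

Any of the four image distances (LBP or LDP, with or without partial matching) could be passed as `distance`; only that callback changes between the rows of the tables.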

4.3 Unsupervised Learning

In this section, we show some results of unsupervised learning. We mainly use
clustering algorithms, such as the complete-link hierarchical clustering algorithm or
the kNN algorithm, which we described earlier.

LBP with partial matching performs better than pure LBP (see Fig.). When r is greater
than 0, the performance is much better than that of LBP. This is because our face
alignment is not perfect: sometimes it is off by one or two pixels, and even when the
eye locations are fixed, the locations of the nose or mouth may differ. By checking
the similarity of neighboring local patches, we may find the correct matching patch
for each patch. The value of r constrains the size of this neighborhood: if one patch
lies in the middle of the face image and another lies at the border, they cannot be
the same part of the face. However, partial matching takes more time when computing
the similarity of two face images.

Fig. Comparison of LBP and LDP with and without partial matching. Legend: LBP, LDP,
LBP+PartialMatching, LDP+PartialMatching.

Fig. Results of LBP with and without partial matching in unsupervised learning on
Home Photos II. The x-axis is the pairwise recall and the y-axis is the precision.
Legend, first panel: LBP + PM (r=0), LBP + PM (r=1), LBP + PM (r=2), LBP + PM (r=3),
LBP + PM (r=4), LBP. Legend, second panel: LBP+r0alpha0.2, LBP+r1alpha0.2,
LBP+r2alpha0.2, LBP+r3alpha0.2, LBP+r4alpha0.2, LBP.
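For reference, pairwise precision and recall are commonly computed over all pairs of images, as in the sketch below. It assumes the standard definition (precision is the fraction of same-cluster pairs that share an identity, and recall is the fraction of same-identity pairs that share a cluster); the function name and the list-based inputs are illustrative.

```python
from itertools import combinations


def pairwise_precision_recall(predicted, truth):
    """Pairwise precision and recall of a clustering.

    predicted: cluster id of each image; truth: true identity of each image.
    """
    same_cluster = same_identity = both = 0
    for i, j in combinations(range(len(truth)), 2):
        in_cluster = predicted[i] == predicted[j]
        in_identity = truth[i] == truth[j]
        same_cluster += in_cluster
        same_identity += in_identity
        both += in_cluster and in_identity
    # By convention here, an empty denominator counts as a perfect score.
    precision = both / same_cluster if same_cluster else 1.0
    recall = both / same_identity if same_identity else 1.0
    return precision, recall
```

Sweeping the clustering threshold and evaluating this function at each setting would trace out a precision-recall curve like the ones plotted above.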

We can also change the parameter alpha, which controls which patch distance defines
the similarity in partial matching. If we set alpha to 0, we use the most similar
patch to define the similarity of two images. If we set alpha to 1, we use the most
different patch to calculate the similarity of two images. To understand how the
alpha value affects our experiments, we run them with different alpha values (see
Fig.). As the results show, when alpha is 0.5, the performance is a little worse than
with 0.2 and 0.1. This is similar to the result reported by the authors of partial
matching. We think that more similar patches are more reliable. If we set alpha to 0,
we select the most similar patch, but this may be a patch that carries no information
about who the subject is. If we set alpha to 1, we select the most different patch,
which is not reliable. So, in general, the smaller the alpha value, the more reliable
the similarity.
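The roles of r and alpha can be made concrete with the following sketch of a partial matching distance. It is a simplified illustration under stated assumptions, not our implementation: each image is assumed to be a dictionary from patch grid position to histogram, both images are assumed to share the same grid, the chi-square histogram distance is an assumed choice, and alpha is mapped to a position in the sorted list of best-match distances.

```python
def chi_square(h1, h2):
    """Chi-square distance between two histograms (an assumed choice)."""
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def partial_matching_distance(patches_a, patches_b, r=3, alpha=0.2):
    """Distance between two images given as {(row, col): histogram} dicts.

    r:     each patch of A may match patches of B at most r grid steps away.
    alpha: 0 selects the most similar patch match, 1 the most different one.
    """
    best = []
    for (i, j), hist_a in patches_a.items():
        # Search only the (2r+1) x (2r+1) neighborhood around the same position.
        candidates = [
            chi_square(hist_a, patches_b[(i + di, j + dj)])
            for di in range(-r, r + 1)
            for dj in range(-r, r + 1)
            if (i + di, j + dj) in patches_b
        ]
        best.append(min(candidates))
    best.sort()
    # Pick the alpha-quantile of the per-patch best distances.
    return best[int(alpha * (len(best) - 1))]
```

With r=0 the search degenerates to comparing patches at identical positions, which is why a small misalignment hurts; a larger r lets each patch find its shifted counterpart at the cost of more distance computations.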

We also try some other experiments. We want to know whether the descriptor of the
local patches affects the performance. In the original local binary pattern and local
derivative pattern, a local patch is described with only one histogram. However, when
the authors of partial matching describe their method, they use a concentric-circle
structure. We imitate their approach and use 9 histograms to describe each local
patch. The result is shown in the figure. The performance of the concentric-circle
descriptor is much better than that of the plain one. This may be because the
concentric-circle structure carries more spatial information about each local patch.
With the plain structure, a label in the middle of a local patch and a label at its
edge are treated as the same.

Fig. Example of different alpha values of partial matching. Legend, first panel:
alpha=0, alpha=0.1, alpha=0.2, alpha=0.5, alpha=1. Legend, second panel: r3+alpha0,
r3+alpha0.1, r3+alpha0.2, r3+alpha0.5, r3+alpha1.

Fig. An example of different descriptors of local patches. The blue line uses the
concentric circle, similar to []. The red one uses no special structure to describe
local patches. Legend: PM (r=4, sub=9), PM (r=4, sub=1), PM (r=3, sub=9),
PM (r=3, sub=1).

(a)
Method                   #Clusters   #Single-component clusters   Precision   Time
Picasa Online            253         150                          100%        < 1 second
Picasa PC
LBP
LDP
LBP + Partial Matching
LDP + Partial Matching

(b)
Method                   #Clusters   #Single-component clusters   Precision   Time
Picasa Online            99          75                           100%        < 10 seconds
Picasa PC                99          75                           100%        3 minutes
LBP                      100         31                           90.378%     3.641s
LDP                      100         36                           95.8221%    11.797s
LBP + Partial Matching   100         39                           99.4602%    1461.078s
LDP + Partial Matching   100         59                           81.5476%    8144.985s

Table. Comparison of our results with the Picasa web album.

If we compare our results with the Picasa web album, we get the results shown in the
table. We put the same photos into the Picasa web album, but it can only find part of
the faces. However, Picasa makes almost no mistakes, so its precision is very high,
and the execution time of the web version is very short. Looking at our results, we
still make some mistakes, but the number of clusters that contain only one component
is much smaller than for Picasa.

5.

Conclusion

5.1 Discussion

In this paper, we use both the Local Binary Pattern and the Partial Matching
algorithm to address the face recognition problem. Both algorithms have been used in
face recognition before, but neither is perfect, so in this paper we combine them. We
use the labels of the local binary pattern as the features of the face images, and we
then follow the partial matching algorithm to calculate the similarity of any two
face images. As demonstrated above, the accuracy of our system is better than that of
previous works, including the Local Binary Pattern and the Partial Matching
algorithm. Combining these two algorithms is novel.

To summarize the advantages of our system, it is well suited to face recognition in
home photos. We use more dimensions to describe each patch than the original Partial
Matching algorithm, which preserves more details. Compared to the Local Binary
Pattern, we ignore patches that are not distinctive enough. We use a parameter alpha
to control the similarity of two images. This parameter is usually set to 0.2, which
means that we skip the most similar patches, which may lie on the cheek and carry
little information, and we also skip the patches that are quite different, which are
not reliable. As a result, when dealing with home photos, the system can tolerate
differences in pose and partial occlusion.

5.2 Future Work

Even though we have improved the accuracy of the face recognition system, some
problems remain. The biggest problem of our system is that it is time-consuming,
because the dimension of each LBP histogram is about 59 and there are more than 3000
patches in each image when calculating the partial matching distance. We currently
use 4 threads on a quad-core system, yet it still takes about two hours to calculate
the distances between every two images in a set of 514 images. In the future, we can
apply the algorithms of this paper to a cloud computing system. The steps that we now
run with multiple threads are easy to port to cloud computing systems, and we expect
that the whole process would then take less than one minute.
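As a rough consistency check of the timing above, the number of image pairs and the resulting running time can be estimated as follows. The sketch assumes the 250 ms per-pair distance time reported in Section 4.2 and an ideal 4x speedup from four threads; both the function names and the ideal-speedup assumption are illustrative.

```python
def pair_count(n_images):
    """Number of unordered image pairs among n_images images."""
    return n_images * (n_images - 1) // 2


def estimated_hours(n_images, seconds_per_pair, speedup):
    """Estimated time to compute all pairwise distances, in hours."""
    return pair_count(n_images) * seconds_per_pair / speedup / 3600


pairs = pair_count(514)                  # 131841 pairs
hours = estimated_hours(514, 0.25, 4)    # roughly 2.3 hours
```

Under these assumptions the estimate is on the order of two hours, in line with the measured time; because the pairs are independent, the estimate scales down roughly linearly with the number of workers.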

Reference

[1] T. Ahonen, A. Hadid, and M. Pietikainen. Face Recognition with Local Binary
Patterns. In Proc. ECCV, 2004.

[2] G. Hua and A. Akbarzadeh. A Robust Elastic and Partial Matching Metric for Face
Recognition. In Proc. ICCV, 2009.

[3] B. Zhang and Y. Gao. Local Derivative Pattern Versus Local Binary Pattern: Face
Recognition With High-Order Local Pattern Descriptor. IEEE Transactions on Image
Processing, vol. 19, no. 2, February 2010.