BotGraph: Large Scale Spamming Botnet Detection

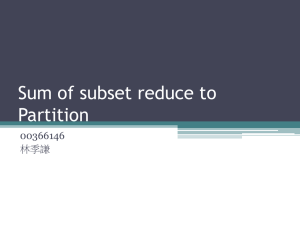

Mosharaf Chowdhury, PhD Student in CS

My current high-level research interests include data center networks and large-scale data-parallel systems. Recently, I worked on a project to improve the performance and scalability of packet classification in wide-area and data center networks. I have also worked on network virtualization, which allows multiple heterogeneous virtual networks to coexist on a shared physical substrate. Please visit http://www.mosharaf.com/ for more information on my research. I want to take away lessons on macro- and micro-level parallelism from this course and possibly apply them in my research.

Below, I summarize a recent work on botnet detection using large-scale data-parallel systems (MapReduce [2] and Dryad/DryadLINQ [3][4]). While previous incarnations of this assignment resulted in people discussing how MapReduce (or its open-source implementation, Hadoop) works, this one is about how MapReduce-like large data-parallel systems can be used in practice for useful purposes.

BotGraph: Large Scale Spamming Botnet Detection [1]

Motivation

Analyzing large volumes of logged data to identify abnormal patterns is one of the biggest and most frequently faced challenges in the network security community. This paper presents BotGraph, a mechanism based on random graph theory that detects botnet spamming attacks on major web email providers from millions of log entries, and describes its implementation as a data-parallel system. The authors posit that even though detecting bot-users (fake email accounts created by botnets) individually is difficult, they do exhibit aggregate behavior (e.g., they share IP addresses when they log in and send emails). BotGraph detects abnormal sharing of IP addresses among bot-users to separate them from human users.
Core Concept

BotGraph has two major components: aggressive sign-up detection, which identifies sudden increases in signup activity from the same IP address, and stealthy bot detection, which builds a user-user graph to detect the sharing of one IP address by many bot-users as well as the sharing of many IP addresses by a single bot-user. In this paper, the authors consider multiple IP addresses from the same autonomous system (AS) as one shared IP address. It is also assumed that legitimate groups of normal users do not use the same set of IP addresses from different ASes.

To identify bot-user groups, the authors create a user-user graph where each user is a vertex and each edge between two vertices carries a weight based on a similarity metric of the two vertices. The authors' assumption is that bot-users will form a giant connected component in the graph (since they share IP addresses) that collectively distinguishes them from normal users (who form much smaller connected components). They show that there exists a threshold on edge weights below which large components suddenly appear. Using random graph theory, they prove that if there is IP address sharing, then the giant component will appear with high probability.

The detection algorithm works iteratively: it starts with a small threshold value to create a large component and then recursively increases the threshold to extract connected sub-components (until the size of the connected components becomes smaller than some constant). The resulting output is a hierarchical tree. The algorithm then uses statistical measurements (based on histograms) to separate bot-user group components from their real counterparts. After this pruning stage, BotGraph traverses the hierarchical tree once more to consolidate bot-user group information.
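The recursive extraction of sub-components can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names (`connected_components`, `build_tree`), the `min_size` stopping constant, the step of raising the threshold by one, and the toy edge weights are all assumptions made for illustration, and the histogram-based pruning stage is left out.

```python
from collections import defaultdict

def connected_components(nodes, edges, threshold):
    """Components of `nodes` when only edges with weight >= threshold are kept."""
    adj = defaultdict(set)
    for (u, v), weight in edges.items():
        if weight >= threshold and u in nodes and v in nodes:
            adj[u].add(v)
            adj[v].add(u)
    seen, components = set(), []
    for start in sorted(nodes):
        if start in seen:
            continue
        seen.add(start)
        stack, comp = [start], set()
        while stack:  # iterative depth-first search
            node = stack.pop()
            comp.add(node)
            for nxt in adj[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        components.append(comp)
    return components

def build_tree(nodes, edges, threshold, min_size):
    """Hierarchical tree: each child is a sub-component found at threshold + 1.

    Assumption: recursion stops once a component has fewer than `min_size` users.
    """
    tree = {"threshold": threshold, "users": sorted(nodes), "children": []}
    if len(nodes) < min_size:
        return tree
    for comp in connected_components(nodes, edges, threshold + 1):
        if len(comp) > 1:  # isolated users form no group
            tree["children"].append(build_tree(comp, edges, threshold + 1, min_size))
    return tree

# Toy user-user graph (hypothetical weights).
edges = {("a", "b"): 3, ("b", "c"): 3, ("a", "c"): 2, ("c", "d"): 1, ("e", "f"): 1}
users = {"a", "b", "c", "d", "e", "f"}
roots = connected_components(users, edges, threshold=1)
tree = build_tree(roots[0], edges, threshold=1, min_size=3)
```

In this toy graph, raising the threshold from 1 to 2 drops user `d` from the large component, while `a`, `b`, and `c` stay connected up to threshold 3.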
Data-parallelism in Action

The biggest challenge in applying BotGraph is the complexity of constructing a graph that can contain millions of vertices. The authors propose two approaches: one using the currently popular large-scale data-parallel processing model MapReduce, and one that extends it with selective filtering and needs two more interfaces than basic Map and Reduce (implemented with Dryad/DryadLINQ).

Method 1. Simple Data-parallelism

In this approach the authors partition data by IP address and then use the well-known Map and Reduce operations to convert graph construction into a data-parallel application in a straightforward way. As illustrated in Figure 1, the input dataset is partitioned by user-login IP address (Step 1). During the Map phase (Steps 2 and 3), for any two users Ui and Uj sharing the same IP-day pair, where the IP address is from autonomous system ASk, the system outputs an edge with weight one, e = (Ui, Uj, ASk). Only edges pertaining to different ASes need to be returned (Step 3). After the Map phase, all the generated edges (from all partitions) serve as inputs to the Reduce phase. In particular, all edges are hash-partitioned to a set of processing nodes for weight aggregation, using (Ui, Uj) tuples as hash keys (Step 4). After aggregation, the outputs of the Reduce phase are graph edges with aggregated weights.

Figure 1: Process Flow of Method 1 [1]

Method 2. Selective Filtering

In this approach the authors partition the inputs by user ID. For any two users located in the same partition, the system can directly compare their lists of IP-day pairs to compute their edge weight. For two users whose records reside in different partitions, however, one user’s records must be shipped to the other user’s partition before their edge weight can be computed, which results in huge communication costs. To reduce this cost, the authors selectively filter out the records that do not share any IP-day keys.
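As an aside on Method 1, its Map and Reduce phases can be sketched in plain Python, with no MapReduce framework involved. The record layout `(user, ip, day, asn)`, the function names, and the sample records are hypothetical. The sketch also assumes that the aggregated weight of an edge is the number of distinct ASes the two users share, which is one reading of treating all IP addresses in an AS as a single shared address.

```python
from collections import defaultdict
from itertools import combinations

def map_partition(records):
    """Map: emit a weight-one edge (Ui, Uj, AS) for users sharing an IP-day pair."""
    users_by_key = defaultdict(set)
    for user, ip, day, asn in records:
        users_by_key[(ip, day, asn)].add(user)
    edges = set()  # a set keeps a single edge per (Ui, Uj, AS) in this partition
    for (_ip, _day, asn), users in users_by_key.items():
        for ui, uj in combinations(sorted(users), 2):
            edges.add((ui, uj, asn))
    return edges

def reduce_edges(edge_sets):
    """Reduce: group by (Ui, Uj) and aggregate the weight.

    Assumption: the weight is the count of distinct ASes shared by the pair.
    """
    ases_by_pair = defaultdict(set)
    for edges in edge_sets:
        for ui, uj, asn in edges:
            ases_by_pair[(ui, uj)].add(asn)
    return {pair: len(ases) for pair, ases in ases_by_pair.items()}

# Input partitioned by login IP address (Step 1); all values are made up.
partitions = [
    [("u1", "1.1.1.1", "d1", 100), ("u2", "1.1.1.1", "d1", 100), ("u3", "1.1.1.1", "d2", 100)],
    [("u1", "2.2.2.2", "d1", 200), ("u2", "2.2.2.2", "d1", 200)],
    [("u1", "1.1.1.9", "d3", 100), ("u2", "1.1.1.9", "d3", 100)],
]
weights = reduce_edges(map_partition(p) for p in partitions)
```

Here `u1` and `u2` share addresses in two ASes (100 and 200), so their edge gets weight 2, while `u3` never logs in on the same IP-day pair as another user and produces no edge.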
Figure 2 shows the processing flow for generating user-user graph edges with this optimization. For each partition pi, the system computes a local summary si that represents the union of all the IP-day keys involved in the partition (Step 2). Each local summary si is then distributed to all nodes so that they can select the relevant input records (Step 3). At each partition pj (j != i), upon receiving si, pj returns all the login records of users who share IP-day keys with si. This step can be further optimized using the edge threshold w: if a user in pj shares fewer than w IP-day keys with the summary si, that user cannot generate edges with weight at least w. Thus only the login records of users who share at least w IP-day keys with si are selected and sent to partition pi (Step 4). To ensure the selected user records are shipped to the right original partition, the system adds a label to each original record denoting its partition ID (Step 7). Finally, after partition pi receives the records from partition pj, it joins these remote records with its local records to generate graph edges (Steps 8 and 9). Beyond Map and Reduce, this method requires two additional programming interfaces: an operation to join two heterogeneous data streams and an operation to broadcast a data stream (both implemented using Dryad/DryadLINQ).

Figure 2: Process Flow of Method 2 [1]

The main difference between the two data processing flows is that Method 1 generates edges of weight one and sends them across the network in the Reduce phase, while Method 2 directly computes edges with weight w or more, at the cost of building local summaries and transferring the selected records across partitions.
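The selective filtering in Method 2 can be sketched for a single pair of partitions as follows. This is a simplified, single-process illustration and not the Dryad/DryadLINQ implementation: the function names and sample data are hypothetical, each partition is modeled as a dictionary from user ID to a set of IP-day keys, and the edge weight is assumed to be the number of shared IP-day keys (the AS-level grouping is ignored here).

```python
def local_summary(partition):
    """Step 2: union of all IP-day keys that appear in a partition."""
    return {key for keys in partition.values() for key in keys}

def select_records(partition, summary, w):
    """Step 4: keep only users sharing at least w IP-day keys with the summary."""
    return {user: keys for user, keys in partition.items()
            if len(keys & summary) >= w}

def join_edges(local, remote, w):
    """Join local and shipped records; emit edges whose weight is at least w.

    Assumption: weight = number of IP-day keys the two users have in common.
    """
    edges = {}
    for u_local, keys_local in local.items():
        for u_remote, keys_remote in remote.items():
            weight = len(keys_local & keys_remote)
            if u_local != u_remote and weight >= w:
                edges[tuple(sorted((u_local, u_remote)))] = weight
    return edges

# Two partitions keyed by user ID; all values are made up.
p_i = {"u1": {("ip1", "d1"), ("ip2", "d1")}, "u2": {("ip3", "d1")}}
p_j = {
    "u3": {("ip1", "d1"), ("ip2", "d1"), ("ip9", "d2")},
    "u4": {("ip1", "d1")},
    "u5": {("ip7", "d1")},
}
w = 2
s_i = local_summary(p_i)               # would be broadcast to other partitions
shipped = select_records(p_j, s_i, w)  # only these records cross the network
edges = join_edges(p_i, shipped, w)
```

With w = 2, only `u3` is shipped from pj to pi, because `u4` and `u5` share fewer than two IP-day keys with the summary and so cannot produce an edge of sufficient weight. Edges between users inside the same partition would be computed by the same comparison applied locally, which the sketch omits.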
In terms of scaling, the cross-node communication in Method 1 is constant, whereas for Method 2 both the aggregated local summary size and the number of user records to be shipped grow with the number of computers, resulting in a larger communication overhead as the cluster grows.

Results

The authors found Method 2 to be the more resource-efficient, scalable, and faster of the two. Using a 221.5 GB workload, Method 1 created the user-user graph in just over 6 hours with 12.0 TB of communication overhead, whereas Method 2 finished in 95 minutes with only 1.7 TB of overhead. Evaluations using the data-parallel systems show that BotGraph could identify a large number of previously unknown bot-users and bot-user accounts with low false positive rates.

References

[1] Y. Zhao et al., “BotGraph: Large Scale Spamming Botnet Detection,” NSDI’09.
[2] J. Dean and S. Ghemawat, “MapReduce: Simplified Data Processing on Large Clusters,” OSDI’04.
[3] M. Isard et al., “Dryad: Distributed Data-parallel Programs from Sequential Building Blocks,” EuroSys’07.
[4] Y. Yu et al., “DryadLINQ: A System for General-purpose Distributed Data-parallel Computing Using a High-level Language,” OSDI’08.