
Numerical Integration

$$I(f) = \int_a^b f(x)\,dx \qquad \text{(general framework of NI)}$$

$$I(f) \approx I(f_n) = \int_a^b f_n(x)\,dx = I_n(f) = \sum_{j=1}^{n} \omega_{j,n}\, f(x_{j,n}) = \sum_{j=1}^{n} \omega_j\, f(x_j)$$

Choose $f_n$ such that $I(f_n)$ can be evaluated easily, e.g., $f_n$ a polynomial.

Most NI formulas have the form

$$I_n(f) = \sum_{j=1}^{n} \omega_{j,n}\, f(x_{j,n}) = \sum_{j=1}^{n} \omega_j\, f(x_j)$$

where $\omega_j$: weight; $x_j$: node (integration point)

Trapezoidal rule

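[Figure: y = f(x) on [a, b]]

$$I(f) = \int_a^b f(x)\,dx$$

linear interpolation:

$$I_1(f) = \frac{b-a}{2}\,[f(a) + f(b)]$$

and error:

$$E_1(f) = -\frac{(b-a)^3}{12}\, f''(\xi), \quad \xi \in [a, b] \qquad \text{(exact for linear functions)}$$

Ex.:

$$I(f) = \int_1^3 (2x+1)\,dx = (x^2 + x)\Big|_1^3 = 12 - 2 = 10, \qquad I_1(f) = \frac{3-1}{2}\,(3 + 7) = 10$$

If (b-a) is not small enough, then E1(f) would be unacceptable!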

Composite Trapezoidal rule (piecewise linear interpolation):

let $n \ge 1$, $h = \dfrac{b-a}{n}$, and $x_j = a + jh$ for $j = 0, 1, \dots, n$

$$I_n(f) = h\left(\tfrac{1}{2} f_0 + f_1 + \dots + f_{n-1} + \tfrac{1}{2} f_n\right)$$

w/ $f_j = f(x_j)$; $h = x_{i+1} - x_i$ (evenly spaced)

error:

$$E_n(f) = -\frac{(b-a)\,h^2}{12}\, f''(\xi)$$

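Ex.:

$$I = \int_0^{\pi} e^x \cos(x)\,dx = -\frac{e^{\pi} + 1}{2} \approx -12.0703463164$$

[Figure: y = f(x) on [a, b] with nodes x_j]

n = 2:

$$I_2 = \frac{\pi}{2}\left(\tfrac{1}{2}\, e^{0}\cos(0) + e^{\pi/2}\cos(\pi/2) + \tfrac{1}{2}\, e^{\pi}\cos(\pi)\right) = \frac{\pi}{2}\,(0.5 + 0 - 11.5703) = -17.3892$$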
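The composite trapezoidal rule above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original notes; the function names `composite_trapezoid` and `integrand` are chosen here for the example. With n = 2 it gives the value of about -17.3892 computed by hand above, and doubling n should cut the error by roughly a factor of 4, as the h² error term suggests.

```python
import math

def composite_trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal subintervals on [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))      # end nodes carry weight h/2
    for j in range(1, n):
        total += f(a + j * h)        # interior nodes carry weight h
    return h * total

def integrand(x):
    return math.exp(x) * math.cos(x)

exact = -(math.exp(math.pi) + 1.0) / 2.0
for n in (2, 4, 8, 16):
    approx = composite_trapezoid(integrand, 0.0, math.pi, n)
    print(n, approx, exact - approx)   # n, I_n, error I - I_n
```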

Simpson's rule

Use the quadratic interpolating polynomial P2(x) to approximate f(x) on [a,b] w/ c=(a+b)/2:

$$P_2(x) = \frac{(x-c)(x-b)}{(a-c)(a-b)}\, f(a) + \frac{(x-a)(x-b)}{(c-a)(c-b)}\, f(c) + \frac{(x-a)(x-c)}{(b-a)(b-c)}\, f(b)$$

$$I_2(f) = \frac{h}{3}\left[f(a) + 4 f\!\left(\frac{a+b}{2}\right) + f(b)\right] \quad \text{w/ } h = \frac{b-a}{2}$$

[Figure: y = f(x) on [a, b]]

Assume $f^{(4)}(x)$ is continuous; then

$$E_2(f) = -\frac{h^5}{90}\, f^{(4)}(\xi); \quad h = \frac{b-a}{2}$$

i.e., E2(f)=0 when f(x) is a polynomial of degree ≤ 3, even though the interpolating polynomial is only quadratic (cancellation of area).

Composite Simpson's rule (piecewise quadratic interpolation):

let $n \ge 2$ be even, $h = \dfrac{b-a}{n}$, and $x_j = a + jh$ for $j = 0, 1, \dots, n$

$$I_n(f) = \frac{h}{3}\left(f_0 + 4 f_1 + 2 f_2 + 4 f_3 + 2 f_4 + \dots + 2 f_{n-2} + 4 f_{n-1} + f_n\right)$$

w/ $f_j = f(x_j)$; $h = x_{j+1} - x_j$

$$E_n(f) = -\frac{(b-a)\,h^4}{180}\, f^{(4)}(\xi)$$
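A minimal Python sketch of the composite Simpson's rule follows. It is not from the original notes; the name `composite_simpson` is chosen here. The check that n is even is added because the 4, 2, 4, ... weight pattern only closes properly on an even number of subintervals.

```python
import math

def composite_simpson(f, a, b, n):
    """Composite Simpson's rule with n (even) equal subintervals on [a, b]."""
    if n < 2 or n % 2 != 0:
        raise ValueError("n must be an even integer >= 2")
    h = (b - a) / n
    total = f(a) + f(b)
    for j in range(1, n):
        # odd-indexed nodes get weight 4, even-indexed interior nodes weight 2
        total += (4.0 if j % 2 == 1 else 2.0) * f(a + j * h)
    return h * total / 3.0

# A cubic is integrated exactly even though the interpolant is quadratic
print(composite_simpson(lambda x: x ** 3, 0.0, 2.0, 2))

exact = -(math.exp(math.pi) + 1.0) / 2.0
approx = composite_simpson(lambda x: math.exp(x) * math.cos(x), 0.0, math.pi, 16)
print(approx, exact - approx)
```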

Gaussian Quadrature:

$$\int_a^b f(x)\,dx = I(f) \approx I_n(f) = \sum_{j=1}^{n} \omega_{j,n}\, f(x_{j,n})$$

The weights $\{\omega_{j,n}\}$ and nodes $\{x_{j,n}\}$ are chosen so that $I_n(f)$ equals $I(f)$ exactly for polynomials f(x) of as high a degree as possible (w/ a fixed number n of nodes).

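Natural Coordinate ([a, b]=[-1, 1])

$$\int_{-1}^{1} f(x)\,dx \approx \sum_{j=1}^{n} \omega_j\, f(x_j)$$

$$E_n(f) = \int_{-1}^{1} f(x)\,dx - \sum_{j=1}^{n} \omega_j\, f(x_j)$$

$$E_n(a_0 + a_1 x + a_2 x^2 + \dots + a_m x^m) = a_0 E_n(1) + a_1 E_n(x) + \dots + a_m E_n(x^m)$$

Hence $E_n(f) = 0$ for EVERY polynomial of degree ≤ m if and only if

$$E_n(x^t) = 0, \quad t = 0, 1, \dots, m$$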

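Case I: n = 1 (2 variables: $\omega_1$, $x_1$)

$$E_1(1) = 0; \quad E_1(x) = 0$$

$$\int_{-1}^{1} 1\,dx - \omega_1 = 0 \;\Rightarrow\; \omega_1 = 2$$

$$\int_{-1}^{1} x\,dx - \omega_1 x_1 = 0 \;\Rightarrow\; x_1 = 0$$

$$\int_{-1}^{1} f(x)\,dx \approx 2 f(0) \quad \text{(midpoint rule, exact for linear functions)}$$

Ex.:

$$I(f) = \int_{-1}^{1} (2x+1)\,dx = (x^2 + x)\Big|_{-1}^{1} = 2 - 0 = 2, \qquad 2 f(0) = 2 \cdot 1 = 2$$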

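Case II: n = 2

$$E_2(1) = E_2(x) = E_2(x^2) = E_2(x^3) = 0$$

i.e.,

$$E_2(x^t) = \int_{-1}^{1} x^t\,dx - (\omega_1 x_1^t + \omega_2 x_2^t) = 0, \quad t = 0, 1, 2, 3$$

$$\begin{aligned}
\int_{-1}^{1} 1\,dx - (\omega_1 + \omega_2) = 0 \;&\Rightarrow\; \omega_1 + \omega_2 = 2 \\
\int_{-1}^{1} x\,dx - (\omega_1 x_1 + \omega_2 x_2) = 0 \;&\Rightarrow\; \omega_1 x_1 + \omega_2 x_2 = 0 \\
\int_{-1}^{1} x^2\,dx - (\omega_1 x_1^2 + \omega_2 x_2^2) = 0 \;&\Rightarrow\; \omega_1 x_1^2 + \omega_2 x_2^2 = 2/3 \\
\int_{-1}^{1} x^3\,dx - (\omega_1 x_1^3 + \omega_2 x_2^3) = 0 \;&\Rightarrow\; \omega_1 x_1^3 + \omega_2 x_2^3 = 0
\end{aligned}$$

$$\Rightarrow\; \omega_1 = \omega_2 = 1, \qquad x_1 = -\frac{1}{\sqrt{3}}, \quad x_2 = \frac{1}{\sqrt{3}}$$

$$\int_{-1}^{1} f(x)\,dx \approx f\!\left(-\frac{\sqrt{3}}{3}\right) + f\!\left(\frac{\sqrt{3}}{3}\right) \quad \text{(vs Simpson's rule)}$$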

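General n:

$$E_n(x^t) = 0, \quad t = 0, 1, \dots, (2n-1)$$

$$\sum_{j=1}^{n} \omega_j\, x_j^t = \begin{cases} 0 & t = 1, 3, \dots, (2n-1) \\ \dfrac{2}{t+1} & t = 0, 2, \dots, (2n-2) \end{cases}$$

n nodes → 2n dof → exact integration of polynomials of degree up to (2n-1)

Note: for n=3 the nodes are $x = \pm\sqrt{3/5},\ 0$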

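Ex.:

$$I = \int_0^{\pi} e^x \cos(x)\,dx = -\frac{e^{\pi} + 1}{2} \approx -12.0703463164$$

| n | I-In | n | I-In |
|---|------|---|------|
| 2 | 2.66e-1 | 3 | 5.71e-2 |
| 4 | -1.57e-4 | 5 | -1.78e-5 |
| 6 | 1.47e-8 | 7 | 1.14e-9 |

n = 2:

$$I_2 = \frac{\pi}{2}\left(e^{\left(\frac{\pi}{2} - \frac{\pi}{2\sqrt{3}}\right)}\cos\!\left(\frac{\pi}{2} - \frac{\pi}{2\sqrt{3}}\right) + e^{\left(\frac{\pi}{2} + \frac{\pi}{2\sqrt{3}}\right)}\cos\!\left(\frac{\pi}{2} + \frac{\pi}{2\sqrt{3}}\right)\right) = -12.3362$$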
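The n = 2 and n = 3 entries of the example can be checked with a short Python sketch. It is an illustration only: the names `GAUSS_RULES` and `gauss_legendre` are chosen here, and the n = 3 weights 5/9, 8/9, 5/9 are the standard Gauss-Legendre values, which the notes do not list explicitly. The interval [a, b] is mapped to the natural coordinate [-1, 1] through x = (a+b)/2 + t(b-a)/2.

```python
import math

# Gauss-Legendre (nodes, weights) on [-1, 1]; the n = 3 weights are an
# addition here (standard values), the nodes are those given in the notes.
GAUSS_RULES = {
    2: ([-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)], [1.0, 1.0]),
    3: ([-math.sqrt(0.6), 0.0, math.sqrt(0.6)],
        [5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0]),
}

def gauss_legendre(f, a, b, n):
    """n-point Gauss-Legendre quadrature of f on [a, b] (n = 2 or 3 here)."""
    nodes, weights = GAUSS_RULES[n]
    half = 0.5 * (b - a)     # Jacobian of the map from [-1, 1] to [a, b]
    mid = 0.5 * (a + b)
    return half * sum(w * f(mid + half * t) for t, w in zip(nodes, weights))

def integrand(x):
    return math.exp(x) * math.cos(x)

exact = -(math.exp(math.pi) + 1.0) / 2.0
for n in (2, 3):
    approx = gauss_legendre(integrand, 0.0, math.pi, n)
    print(n, approx, exact - approx)   # n, I_n, error I - I_n
```

With only two function evaluations the Gaussian rule is already far closer to the exact value than the three-node composite trapezoidal result for the same integral.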

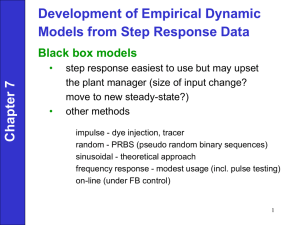

Expand to higher dimensions:

All of the above methods can easily be extended to higher dimensions; here we use GQ as an example:

Apply the 1D GQ in each dimension separately; for instance, in 2D

$$\int_{-1}^{1}\!\int_{-1}^{1} f(x, y)\,dx\,dy \approx \int_{-1}^{1} \Big(\sum_{i=1}^{n_i} \omega_i\, f(x_i, y)\Big)\,dy \approx \sum_{j=1}^{n_j} \sum_{i=1}^{n_i} \omega_i\, \omega_j\, f(x_i, y_j)$$

Unfortunately, in the multi-dimensional case the GQ nodes are no longer optimal.

For example, in 3D a six-point rule (face centers) integrates polynomials of degree < 4 exactly, while GQ requires 2x2x2 (eight) points.
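The tensor-product construction in 2D can be sketched as a double loop over the 1D nodes. This is a minimal illustration with names chosen here (`gauss_2d`, `NODES_2`, `WEIGHTS_2`), using the two-point rule derived above in each direction.

```python
import math

# Two-point Gauss rule on [-1, 1] from Case II above
NODES_2 = [-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)]
WEIGHTS_2 = [1.0, 1.0]

def gauss_2d(f, nodes=NODES_2, weights=WEIGHTS_2):
    """Tensor-product Gauss quadrature of f(x, y) over [-1, 1] x [-1, 1]."""
    total = 0.0
    for xi, wi in zip(nodes, weights):
        for yj, wj in zip(nodes, weights):
            total += wi * wj * f(xi, yj)   # weight of a 2D node is w_i * w_j
    return total

# Degree <= 3 in each variable is integrated exactly by the 2x2 rule:
# the exact integral of x^2 y^2 + x^3 y + 1 over the square is 4/9 + 0 + 4
print(gauss_2d(lambda x, y: x * x * y * y + x ** 3 * y + 1.0))
```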


Note: in general, the integration points (Gaussian points) are the best locations for evaluating derivative values (stress and strain) in FEA post-processing.

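Reduced and Selective Integration:

$$[k]^e = \int_{v^e} [B]^{eT}\,[D]^e\,[B]^e\,dv$$

The material property matrix [D] can be rewritten as

$$[D] = [D^{\lambda}] + [D^{\mu}]$$

where $[D^{\lambda}]$ is the λ-part of [D] and $[D^{\mu}]$ is the μ-part of [D]

Note: from the constitutive equation in the isotropic case

$$\sigma_{ij} = \lambda\, u_{kk}\, \delta_{ij} + 2\mu\, u_{ij}$$

where

$$\lambda = \frac{2\mu\nu}{1 - 2\nu} = \frac{\nu E}{(1+\nu)(1-2\nu)}, \qquad \mu = \frac{E}{2(1+\nu)} \qquad \text{(Lamé's parameters)}$$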
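To see how the λ-part comes to dominate as the material approaches incompressibility, the Lamé parameters can be evaluated numerically. The sketch below is illustrative only: the function name `lame_parameters` is chosen here and E = 1.0 is an arbitrary placeholder value, since the ratio λ/μ = 2ν/(1-2ν) does not depend on E.

```python
def lame_parameters(E, nu):
    """Return (lambda, mu) for an isotropic material with modulus E and Poisson ratio nu."""
    lam = nu * E / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = E / (2.0 * (1.0 + nu))
    return lam, mu

E = 1.0  # placeholder modulus (assumption for illustration)
for nu in (0.3, 0.499):
    lam, mu = lame_parameters(E, nu)
    print(nu, lam, mu, lam / mu)   # the ratio grows without bound as nu -> 0.5
```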

Selective Reduced Integration: a reduced numerical integration order (fewer integration points) is used on the λ-term, whereas normal integration is used on the μ-term.

Uniform Reduced Integration: both the λ and μ terms are integrated w/ a reduced integration order.

Ex.: Normalized vertical tip displacement for a cantilever beam w/ Q4 (bilinear) elements (plane strain, anti-symmetry)

| ν | F | R |
|---|---|---|
| 0.3 | 0.904 | 0.912 |
| 0.499 (nearly incompressible) | 0.334 | 0.937 |

F: 2x2 full integration

R: selective reduced integration (2x2 on μ & one pt on λ)

Notes:

1. In nearly incompressible analysis, λ/μ >> 1, i.e., $[D^{\lambda}]$ is much greater than $[D^{\mu}]$, so the use of selective reduced integration does carry some physical meaning.

2. In compressible analysis, for 3D or plane strain cases, most elements give almost identical results with either full or reduced integration, but reduced integration breaks down in axisymmetric analysis.

3. The advantages of reduced integration are the economy of element formulation and some compensation for the overly stiff behavior of the finite element approach. However, uniform reduced integration might also reduce the rank of the element stiffness matrix, resulting in a singular global matrix.

Numerical Methods for Solving Linear System Equations from FEA

Ref: Finite Elements Computational Aspects (Vol.II), J.T. Oden, (Ch. 3)

Application and Implementation of FEM, J.E. Akin, (Ch. 9)

Problem Definition: [A]x = b; in general [A] is symmetric and positive definite in FEA

Direct Methods: Gaussian elimination (best for re-solving with a new b)

– in core (system paging if memory is not enough)

– out of core (Frontal Solver)

Iterative Methods: best for large systems (trade accuracy for time)

    start w/ initial guess X(0)
    for k = 1 to n
        obtain X(k) from X(k-1)
        convergence test for X(k)
    next k

Convergence test (relative change between successive iterates):

$$\frac{\| X^{(k)} - X^{(k-1)} \|}{\| X^{(k)} \|} \le \varepsilon_1$$

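Note:

norm: a measure of the 'size' of a scalar, vector, or matrix

scalar: $|x_i|$ (absolute value)

vector: p norm

$$\| x \|_{p,\omega} = \Big(\sum_{i=1}^{n} \omega_i\, |x_i|^p\Big)^{1/p} \quad \text{where } 1 \le p \le \infty;\ \omega_i = \text{const} > 0$$

w/ ω=1

p=1 one norm: $\|x\|_1 = \sum |x_i|$

p=2 two norm (Euclidean norm): $\|x\|_2 = (x^T x)^{1/2} = \big(\sum |x_i|^2\big)^{1/2}$

p=∞ infinity norm (maximum norm, uniform norm): $\|x\|_\infty = \max |x_i|$

properties:

$$\| x \|_p \begin{cases} > 0 & \text{if } x \ne 0 \\ = 0 & \text{if } x = 0 \end{cases}$$

$$\| a\,x \|_p = |a| \cdot \| x \|_p$$

$$\| x + y \|_p \le \| x \|_p + \| y \|_p$$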
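The three common vector norms (with ω = 1) are easy to compute directly. The following is a small sketch with a function name, `p_norm`, chosen here for illustration; it also spot-checks the triangle inequality on one pair of vectors.

```python
def p_norm(x, p):
    """p-norm of a vector x with unit weights; p may be float('inf')."""
    if p == float("inf"):
        return max(abs(xi) for xi in x)
    return sum(abs(xi) ** p for xi in x) ** (1.0 / p)

x = [3.0, -4.0]
y = [1.0, 2.0]
for p in (1, 2, float("inf")):
    s = [xi + yi for xi, yi in zip(x, y)]
    # triangle inequality: ||x + y|| <= ||x|| + ||y||
    print(p, p_norm(x, p), p_norm(s, p) <= p_norm(x, p) + p_norm(y, p))
```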

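Geometric interpretation w/ n=2 (2-dimensional vectors): $S_p = \{x \in R^2 \mid \|x\|_p \le 1\}$

[Figure: unit balls S_p in the plane. p=1: diamond with vertices (1,0), (0,1), (-1,0), (0,-1); p=2: unit circle through the same four points; p=∞: square with sides at x = ±1 and y = ±1]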

Direct methods:

The most direct way to solve [A]x = b is to find [A]^-1 and then compute x = [A]^-1 b. However, the computational cost of forming [A]^-1 is generally of order n^3, where n is the size of [A], which makes it too expensive for large n.

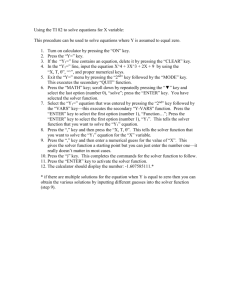

Gaussian Elimination (fewest calculations for solving general linear system equations)

Factorization Phase: [A]x = b → [U]x = b', where [U] is an upper triangular matrix

    for k = 1 to n-1                      // n: rank of [A]
        for i = k+1 to n
            mik = aik(k) / akk(k)         // w/ akk(k) <> 0
            bi(k+1) = bi(k) - mik bk(k)
            for j = k+1 to n
                aij(k+1) = aij(k) - mik akj(k)
            next j
        next i
    next k

$$\begin{bmatrix} a'_{11} & a'_{12} & \dots & a'_{1n} \\ 0 & a'_{22} & \dots & a'_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \dots & a'_{nn} \end{bmatrix} \begin{Bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{Bmatrix} = \begin{Bmatrix} b'_1 \\ b'_2 \\ \vdots \\ b'_n \end{Bmatrix}$$

Note: k (k = 1 to n-1) is the stage that eliminates the kth variable (xk) from the ith equation (i = k+1 to n)

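Back-substitution Phase

    xn = bn' / ann'
    for k = n-1 to 1
        xk = [ bk' - sum over j = k+1..n of akj' xj ] / akk'
    next k

$$x_k = \frac{1}{a'_{kk}}\Big[\, b'_k - \sum_{j=k+1}^{n} a'_{kj}\, x_j \Big]$$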

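$$[U] = \begin{bmatrix} a'_{11} & a'_{12} & \dots & a'_{1n} \\ 0 & a'_{22} & \dots & a'_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \dots & a'_{nn} \end{bmatrix}, \qquad [L] = [M] = \begin{bmatrix} 1 & 0 & \dots & 0 \\ m_{21} & 1 & \dots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ m_{n1} & m_{n2} & \dots & 1 \end{bmatrix}$$

[A] = [L][U], and both can be stored in [A]'s memory locations.

The purpose of storing [L] is to re-solve w/ a different b.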
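The factorization and substitution phases can be sketched in plain Python as follows. This is a minimal illustration without pivoting (it assumes nonzero pivots, as the pseudocode does); the names `lu_factor` and `lu_solve` are chosen here, and [L] and [U] are kept as separate lists rather than overwriting [A], purely for readability.

```python
def lu_factor(A):
    """Gaussian elimination without pivoting: returns (L, U) with A = L U."""
    n = len(A)
    U = [list(map(float, row)) for row in A]
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for k in range(n - 1):                  # stage k eliminates x_k
        if U[k][k] == 0.0:
            raise ZeroDivisionError("zero pivot; pivoting would be needed")
        for i in range(k + 1, n):
            m = U[i][k] / U[k][k]           # multiplier m_ik
            L[i][k] = m
            for j in range(k, n):
                U[i][j] -= m * U[k][j]
    return L, U

def lu_solve(L, U, b):
    """Solve L U x = b by forward then back substitution."""
    n = len(b)
    z = [0.0] * n
    for i in range(n):                      # forward: L z = b
        z[i] = b[i] - sum(L[i][j] * z[j] for j in range(i))
    x = [0.0] * n
    for k in range(n - 1, -1, -1):          # backward: U x = z
        s = sum(U[k][j] * x[j] for j in range(k + 1, n))
        x[k] = (z[k] - s) / U[k][k]
    return x

A = [[4, -2, 1], [-2, 4, -2], [1, -2, 4]]
L, U = lu_factor(A)
print(lu_solve(L, U, [11, -16, 17]))   # first right-hand side
print(lu_solve(L, U, [4, -2, 1]))      # re-solve with a new b, no refactorization
```

Keeping [L] is what makes the second solve cheap: only the two triangular substitutions are repeated.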

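Choleski Factorization: when [A] is symmetric & positive definite,

then $[A] = [L][L]^T$, where [L] is a lower triangular matrix w/

$$l_{11} = \sqrt{a_{11}}, \qquad l_{ii} = \sqrt{a_{ii} - \sum_{k=1}^{i-1} l_{ik}^2} \quad (i = 2, \dots, n)$$

$$l_{ij} = \begin{cases} \dfrac{a_{ij} - \sum_{k=1}^{j-1} l_{ik}\, l_{jk}}{l_{jj}} & (i > j) \\[2ex] 0 & (i < j) \end{cases}$$

$$[A]x = b \;\Rightarrow\; [L][L]^T x = b \quad \text{(factorization)}$$

therefore: $[L]z = b$ (forward-substitution)

$[L]^T x = z$ (backward-substitution)

p-d: $x^T[A]x > 0$ for all x≠0

sym: $a_{ij} = a_{ji}$

e.g., for the first entries: $l_{11}^2 = a_{11}$; $l_{21} l_{11} = a_{21}$; $l_{21}^2 + l_{22}^2 = a_{22}$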
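The entry-by-entry formulas for [L] translate directly into code. The sketch below is an illustration with names chosen here (`cholesky`, `cholesky_solve`); it works row by row and raises an error if a non-positive value appears under the square root, which signals that [A] is not positive definite.

```python
import math

def cholesky(A):
    """Return lower-triangular L with A = L L^T (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                d = A[i][i] - s
                if d <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][i] = math.sqrt(d)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L

def cholesky_solve(L, b):
    """Solve L L^T x = b: forward substitution, then backward substitution."""
    n = len(b)
    z = [0.0] * n
    for i in range(n):
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

A = [[4, 12, -16], [12, 37, -43], [-16, -43, 98]]
L = cholesky(A)
print(L)
print(cholesky_solve(L, [0, 6, 39]))   # b chosen as A times [1, 1, 1]
```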

Modified Gauss-Choleski: [A] sym. & p.d. (symmetric Gaussian elimination)

$$[A]x = b \;\Rightarrow\; ([L][D][L]^T)\,x = b \quad \text{(factorization)}$$

$[L]z = b$ (forward-substitution)

$[D]y = z$ (scaling)

$[L]^T x = y$ (backward-substitution)

where

$$d_{11} = a_{11}, \qquad d_{ii} = a_{ii} - \sum_{k=1}^{i-1} d_{kk}\, l_{ik}^2 \quad (i = 2, \dots, n)$$

$$l_{ii} = 1, \qquad l_{ij} = \frac{a_{ij} - \sum_{k=1}^{j-1} d_{kk}\, l_{ik}\, l_{jk}}{d_{jj}} \quad (i > j), \qquad l_{ij} = 0 \quad (j > i)$$

Algorithm: [M] (nxn) originally holds [A] and will contain [U] (= [L]^T) at the end of the algorithm, and dk contains dkk.
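A minimal sketch of the [L][D][L]^T factorization and its three-step solve is given below. Unlike the in-place algorithm described above, it returns [L] and the diagonal of [D] as separate objects for clarity; the names `ldlt` and `ldlt_solve` are chosen here for illustration.

```python
def ldlt(A):
    """Return (L, d) with A = L diag(d) L^T and unit diagonal in L."""
    n = len(A)
    L = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    d = [0.0] * n
    for i in range(n):
        for j in range(i):
            s = sum(d[k] * L[i][k] * L[j][k] for k in range(j))
            L[i][j] = (A[i][j] - s) / d[j]
        d[i] = A[i][i] - sum(d[k] * L[i][k] ** 2 for k in range(i))
    return L, d

def ldlt_solve(L, d, b):
    """Forward substitution, diagonal scaling, then backward substitution."""
    n = len(b)
    z = [0.0] * n
    for i in range(n):                       # [L] z = b
        z[i] = b[i] - sum(L[i][k] * z[k] for k in range(i))
    y = [zi / di for zi, di in zip(z, d)]    # [D] y = z
    x = [0.0] * n
    for i in range(n - 1, -1, -1):           # [L]^T x = y
        x[i] = y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))
    return x

A = [[4, 12, -16], [12, 37, -43], [-16, -43, 98]]
L, d = ldlt(A)
print(L, d)
print(ldlt_solve(L, d, [0, 6, 39]))   # b chosen as A times [1, 1, 1]
```

No square roots are needed, which is the practical difference from the [L][L]^T form.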

Out-of-core direct method: Frontal Method (not explicitly supported in ANSYS 12)

When memory is not enough to solve [A]x=b:

– use iterative methods (cannot re-solve w/ a new b)

– perform extra computation in exchange for a reduced storage requirement (cannot easily re-solve)

– use auxiliary (secondary) storage

Since auxiliary storage is relatively slow, the basic idea is to minimize the I/O to this secondary storage.

Goal: in the factorization, compute [U] & [D] from [A] w/o reading or writing any single entry more than once; in the forward & backward substitution, read [U] twice and [D] once.

Frontal method (auxiliary storage method). Ref: Irons, B.M., "A Frontal Solution Program for Finite Elements", Int. J. Numerical Methods Eng., 2, pp5-23, 1970

(Key notion: the factorization is driven by the properties of the elements rather than by the properties of the DOF, so element numbering is more important than node numbering.)

For all other direct methods, the elements are first processed to generate [K] (or [A]), and Gaussian elimination is then applied to solve the equations. In contrast, the frontal method proceeds by processing elements one at a time to assemble their contributions to the coefficient matrix [A] (or [K]). Elimination (factorization) steps are carried out whenever a new row of [A] has been completely assembled, i.e., whenever all elements containing the corresponding DOF have been processed. After an elimination step, the pivot row is written to auxiliary storage and its space in primary memory is reused. The array used to store the matrix entries in core memory is called the active matrix, and the number of its rows & columns in use at any time is called the frontwidth. For the frontal method the ordering of the elements is critical, since it determines the maximum frontwidth, i.e., the maximum required dimension (memory space) of the active matrix.

Iterative Methods: [A]x=b

They save memory space and make it possible to trade accuracy (error or convergence limit) for computational time.

Conjugate Gradient Method

Based on solving the nonlinear (quadratic) optimization problem for

$$f(x) = \tfrac{1}{2}\, x^T [A]\, x - b^T x$$

If [A] is real, symmetric, and PD, then x_opt satisfies [A]x_opt = b (FOC, the first-order condition),

i.e., finding the optimum (here the minimum) of f(x) is the same as solving [A]x=b, so

any method used to find the optimum of f(x) can be used to find the solution of [A]x=b.

Conjugate direction:

For an n×n matrix [A], we say a set of nonzero vectors P1,…,Pn ∈ R^n is A-conjugate (or A-orthogonal) if

$$P_i^T [A]\, P_j = 0, \quad 1 \le i, j \le n;\ i \ne j$$

If a set of conjugate directions {P1,…,Pn} is given for [A], then to solve [A]x=b let

$$X_{opt} = \alpha_1 P_1 + \alpha_2 P_2 + \alpha_3 P_3 + \dots + \alpha_n P_n \qquad (1)$$

since

$$[A]\, X_{opt} = b \qquad (2)$$

then

$$\alpha_k = \frac{P_k^T\, b}{P_k^T [A]\, P_k}, \quad k = 1, 2, \dots, n$$

(substitute (1) into (2) and multiply both sides by $P_k^T$)

Note: [A] is PD and $P_k \ne 0$, so $P_k^T [A] P_k > 0$

Geometrically, the CG method transforms f(x) into its principal axes and then searches along each axis (Pi plays the role of the search direction S in steepest descent) w/ step size αi to find the minimum.

Conjugate Direction Iteration: w/ {P1,…,Pn} given

let

$$x^{(0)} = 0 \quad \text{and} \quad x^{(k)} = x^{(k-1)} + \alpha_k P_k \quad \text{(w/ } \alpha_k \text{ obtained using the above formula)}$$

therefore for k=n we have x(n)=x*, or x(k) might equal x* w/ a smaller k (than n), i.e., the conjugate direction iteration finds the exact solution of [A]x=b within n iterations, assuming no rounding errors.
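The finite-termination property can be checked numerically with a small sketch. The notes assume the directions {P1,…,Pn} are given; here, purely for illustration, they are generated by A-orthogonalizing the unit vectors (a Gram-Schmidt process in the [A] inner product), which is an assumption of this example and not the method the notes go on to describe. The function names are chosen here.

```python
def matvec(A, v):
    return [sum(a * vi for a, vi in zip(row, v)) for row in A]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def conjugate_directions(A):
    """Build A-conjugate directions from the unit vectors (illustrative choice)."""
    n = len(A)
    directions = []
    for k in range(n):
        e_k = [1.0 if i == k else 0.0 for i in range(n)]
        p = e_k[:]
        for q in directions:
            Aq = matvec(A, q)
            coeff = dot(e_k, Aq) / dot(q, Aq)    # component of e_k along q
            p = [pi - coeff * qi for pi, qi in zip(p, q)]
        directions.append(p)
    return directions

def conjugate_direction_solve(A, b, directions):
    """x(0) = 0, x(k) = x(k-1) + alpha_k P_k with alpha_k = P_k^T b / P_k^T A P_k."""
    x = [0.0] * len(b)
    for p in directions:
        alpha = dot(p, b) / dot(p, matvec(A, p))
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
    return x

A = [[4.0, -2.0, 1.0], [-2.0, 4.0, -2.0], [1.0, -2.0, 4.0]]
b = [11.0, -16.0, 17.0]
P = conjugate_directions(A)
print(conjugate_direction_solve(A, b, P))   # exact after n = 3 steps, up to rounding
```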

Conjugate Gradient method (CG method):

We now give a way to generate the directions Pk and the step sizes αk simultaneously, along with the iterates x(k), from the value of

$$-\nabla f(x^{(m)}) = b - [A]\, x^{(m)} = r^{(m)}$$

Preconditioned CG: (PCG)

To increase the convergence rate of CG in solving [A]x=b, we can transform the problem into [M]^-1[A]x = [M]^-1 b, where [M]^-1 = [Q][Q]^T (in order to maintain symmetry and positive definiteness), chosen so that [M]^-1[A] has a smaller condition number than [A]. From the error bound, this is expected to increase the convergence rate.

Algorithm PCG (x(0)=0 is not necessary)

1. r(0) ← b-[A]x(0); S(0) ← [M]^-1 r(0); P0 ← S(0)

2. for k = 0, …, n-1 (loop to step 7)

3. αk+1 ← (r(k)^T S(k)) / (Pk^T [A] Pk); x(k+1) ← x(k) + αk+1 Pk

4. if converged, set x = x(k+1) and exit

5. r(k+1) ← r(k) - αk+1 [A] Pk; S(k+1) ← [M]^-1 r(k+1)

6. βk+1 ← (r(k+1)^T S(k+1)) / (r(k)^T S(k)); Pk+1 ← S(k+1) + βk+1 Pk

7. end of loop k

8. x ← x(n); exit

*The easiest choice of [M] is diag[A], i.e., [M]=[D] (Jacobi Conjugate Gradient method), so that in step 5 above S(k+1) can be computed easily.
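The PCG steps with the Jacobi choice [M] = diag[A] can be sketched as below. This is a minimal dense, pure-Python illustration rather than a production solver; the function name `jacobi_pcg`, the residual-norm stopping test, and the default tolerance are assumptions made for the example (the notes only say "if converged").

```python
def matvec(A, v):
    return [sum(a * vi for a, vi in zip(row, v)) for row in A]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def jacobi_pcg(A, b, x0=None, tol=1e-12, max_iter=None):
    """Preconditioned CG with [M] = diag[A] for symmetric positive definite A."""
    n = len(b)
    x = [0.0] * n if x0 is None else list(x0)
    max_iter = n if max_iter is None else max_iter
    inv_diag = [1.0 / A[i][i] for i in range(n)]          # [M]^-1
    r = [bi - ai for bi, ai in zip(b, matvec(A, x))]      # step 1
    s = [m * ri for m, ri in zip(inv_diag, r)]
    p = s[:]
    rs = dot(r, s)
    for _ in range(max_iter):
        if dot(r, r) ** 0.5 <= tol:                       # convergence test (assumed form)
            break
        Ap = matvec(A, p)
        alpha = rs / dot(p, Ap)                           # step 3
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]  # step 5
        s = [m * ri for m, ri in zip(inv_diag, r)]
        rs_new = dot(r, s)
        beta = rs_new / rs                                # step 6
        p = [si + beta * pi for si, pi in zip(s, p)]
        rs = rs_new
    return x

A = [[4.0, -2.0, 1.0], [-2.0, 4.0, -2.0], [1.0, -2.0, 4.0]]
b = [11.0, -16.0, 17.0]
print(jacobi_pcg(A, b))
print(jacobi_pcg(A, b, x0=[1.0, 1.0, 1.0]))   # a nonzero starting guess also works
```

With a diagonal [M], applying [M]^-1 is just an element-wise scaling of the residual, which is why this preconditioner is so cheap.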

15.9 Equation Solvers (ANSYS Theory Reference)

The system of simultaneous linear equations generated by the finite element procedure is solved either

using a direct elimination process or an iterative method. A direct elimination process is primarily a

Gaussian elimination approach which involves solving for the unknown vector of variables {u} in

[ K ]{u} {F }

where:

[K] = global stiffness/conductivity matrix

{u} = global vector of nodal unknown

{F} = global applied load vector

The direct elimination process involves decomposition (factorization) of the matrix [K] into lower and

upper triangular matrices, [K] = [L][U]. Then forward and back substitutions using [L] and [U] are made

to compute the solution vector {u}.

The two direct solvers that are available are the Sparse Direct Solver, and the Frontal (Wavefront) Solver

(in ANSYS 12 the frontal solver is merged within sparse direct) . The Sparse Direct Solver makes use of

the fact that the finite element matrices are normally sparsely populated. This sparseness allows the

system of simultaneous equations to be solved efficiently by minimizing the operation counts. The

Frontal Solver, on the other hand, is designed to minimize the memory used in the solution process

although the operation count is generally more than that of the Sparse Direct Solver.

A typical iterative method involves an initial guess, {u}(1), of the solution vector {u} and then a successive

steps of iteration leading to a sequence of vectors {u}(2), {u}(3), . . . such that, in the limit, {u}(n) = {u} as n

tends to infinity. The calculation of {u}(n + 1) involves [K], {F}, and the {u} vectors from one or two of the

previous iterations. Typically the solution converges to within a specified tolerance after a finite number

of iterations.

The ANSYS program offers a large number of iterative solvers as alternatives to the direct solvers (sparse

or frontal solvers). These alternatives in many cases can result in less I/O or disk usage, less total elapsed

time, and more scalable parallel performance. However, in general, iterative solvers are not as robust as

the direct solvers. For numerical challenges such as a nearly-singular matrix (matrix with small pivots) or

a matrix that includes Lagrangian multipliers, the direct solver is an effective solution tool, while an

iterative solver is less effective or may even fail.

The first three iterative solvers are based on the conjugate gradient (CG) method. The first of these three

CG solvers is the Jacobi Conjugate Gradient (JCG) solver (chosen with the EQSLV,JCG command)

which is suitable for well-conditioned problems. Well-conditioned problems often arise from heat transfer,

acoustics, magnetics and solid 2-D / 3-D structural analyses. The JCG solver is available for real and

complex symmetric and unsymmetric matrices. The second solver is the Preconditioned Conjugate

Gradient (PCG) solver (chosen with the EQSLV,PCG command) which is efficient and reliable for all

types of analyses including the ill-conditioned beam/shell structural analysis. The PCG solver is only

valid for real symmetric stiffness matrices. The third solver is the Incomplete Cholesky Conjugate

Gradient (ICCG) solver (chosen with the EQSLV,ICCG command). The ICCG solver is more robust than

the JCG solver for handling ill-conditioned matrices. The ICCG solver is available for real and complex,

symmetric and unsymmetric matrices.

Other iterative solvers are provided by ANSYS to achieve a more scalable parallel/distributed

performance. The algebraic multigrid (AMG) solver is explained below. The others, the DPCG and DJCG,

are mathematically the same as the PCG and JCG solvers described earlier in this section but are

implemented in a distributed computing environment.

The AMG solver (accessed with EQSLV,AMG), is made available through a license from Solvers

International, Inc. of Colorado (USA), and is written for shared-memory architecture machines. AMG

solver works on the incoming total equation matrix and automatically creates a few levels of coarser

equation matrices. Iterative convergence is accomplished by iterating between a coarse and a fine matrix.

The maximum scalability that can be achieved using 8 CPU processors is about a 5 times speedup in total

elapsed time. For the ill-conditioned problems where the ill-conditioning is caused by high aspect ratio

elements, a large amount of constraint equations, or shell/beam attached to solid elements, the AMG

solver with one CPU processor is more efficient than any of the three CG solvers. The AMG solver is

also valid with constraint equations and coupling.

Solver Memory and Performance

You will get the best performance from ANSYS if you first understand the individual solvers' memory

usage and performance under certain conditions. Each solver uses different methods to obtain memory;

understanding how memory is used by each solver can help you to avoid problems (such as memory

errors) and maximize the problem size you can handle on your system.

A singularity exists in an analysis whenever an indeterminate or non-unique solution is possible. A

negative or zero equation solver pivot value will yield such a solution.

(This singularity is not the same as the singular point mentioned in the 1st HW.)

The following conditions may cause singularities in the solution process:

– Insufficient constraints.

– Nonlinear elements in a model (such as gaps, sliders, hinges, cables, etc.). A portion of the structure may have collapsed or may have "broken loose."

– Negative values of material properties, such as DENS or C, specified in a transient thermal analysis.

– Unconstrained joints. The element arrangements may cause singularities. For example, two horizontal spar elements will have an unconstrained degree of freedom in the vertical direction at the joint. A linear analysis would ignore a vertical load applied at that point. Also, consider a shell element with no in-plane rotational stiffness connected perpendicularly to a beam or pipe element. There is no in-plane rotational stiffness at the joint. A linear analysis would ignore an in-plane moment applied at that joint.

– Buckling. When stress stiffening effects are negative (compressive) the structure weakens under load. If the structure weakens enough to effectively reduce the stiffness to zero or less, a singularity exists and the structure has buckled. The "NEGATIVE PIVOT VALUE - " message will be printed.

– Zero Stiffness Matrix (on row or column). Both linear and nonlinear analyses will ignore an applied load if the stiffness is exactly zero.

(Numerical method to solve nonlinear system equations will be covered when we work on them!)