Seminar paper [DOCX 971.57KB]

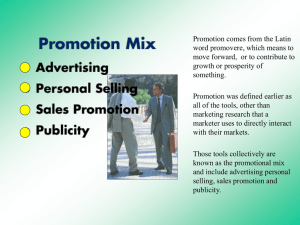

![Seminar paper [DOCX 971.57KB]](http://s3.studylib.net/store/data/007060383_1-8bb8e2aaac956abac60a5cc9f820af39-768x994.png)