
EECS 270 Fall 2014, Lecture 21


Error Correction continued and start on FPGA architecture

Today we’ll finish up error correction and start in on FPGA architecture. But first we’ll have an announcement and a bit of review from last time.

Announcement:

GA4 problem 2 had an error: a needed device was left off the list. Yes, it could have been built out of gates (and some of you who already did it may have done so), but that wasn’t the intent of the question.

And review:

1. Draw the state transition diagram that corresponds to the following logic equations. Assume the initial state is 001 and that our states are one-hot encoded.

   - NS2 = S2*A + S1*!A
   - NS1 = S2*!A + S0
   - NS0 = S1*A
   - X = S1 + S2

Question: how could you tell if this weren’t one-hot encoded?

2. What is the Hamming distance of the following set: A = {11111, 00011, 10100}? What does that say about the potential for error detection and correction if we knew the sender only sent data from set A?
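If you want to check your answers to the review problems, a short Python sketch like the one below can step the next-state equations from each one-hot state and compute the minimum pairwise distance of set A. It assumes the state is written as the bit vector S2 S1 S0 (so the initial state 001 means S0 = 1); the names `step` and `hamming` are hypothetical helpers, not part of the course materials.

```python
from itertools import combinations

# Review problem 1: next-state and output equations, one flip-flop per state bit.
def step(state, a):
    s2, s1, s0 = state
    ns2 = (s2 & a) | (s1 & (1 - a))
    ns1 = (s2 & (1 - a)) | s0
    ns0 = s1 & a
    x = s1 | s2  # output depends only on the current state
    return (ns2, ns1, ns0), x

# Enumerate every (one-hot state, A) pair: each entry is one arrow in the diagram.
transitions = {}
for state in [(0, 0, 1), (0, 1, 0), (1, 0, 0)]:
    for a in (0, 1):
        transitions[(state, a)] = step(state, a)
        nxt, x = transitions[(state, a)]
        print(f"{state} --A={a}--> {nxt}  X={x}")

# Review problem 2: minimum pairwise Hamming distance of set A.
def hamming(u, v):
    return sum(b1 != b2 for b1, b2 in zip(u, v))

A = ["11111", "00011", "10100"]
d_min = min(hamming(u, v) for u, v in combinations(A, 2))
print("d_min =", d_min, "| detects up to", d_min - 1, "| corrects up to", (d_min - 1) // 2)
```

Because X depends only on the current state, it can be written inside each state bubble rather than on the arrows.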


Error correction reviewed

Parity gave us a method of detecting a one-bit flip. This is useful because we can, at the least, avoid using bad data, and we could perhaps even ask for the data to be resent. But what if we want to fix the data without needing to ask for a resend? It turns out we can do that if the set of messages we are using has a Hamming distance of 3 or more.

Why is that?

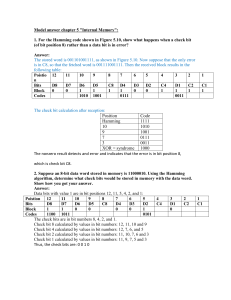
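One way to see why is minimum-distance decoding: the receiver picks the legal codeword closest to what arrived. With distance 3, a 1-bit error leaves the message at distance 1 from the original and at least 2 from every other codeword, so the closest codeword is always unique and correct. The Python sketch below (hypothetical helper names) checks this by flipping every single bit of every codeword in a set.

```python
def hamming(u, v):
    """Number of positions in which two equal-length bit strings differ."""
    return sum(b1 != b2 for b1, b2 in zip(u, v))

def nearest(received, codes):
    """All legal codewords at the minimum distance from the received word."""
    best = min(hamming(received, c) for c in codes)
    return [c for c in codes if hamming(received, c) == best]

def flip(word, i):
    """Copy of `word` with bit i inverted."""
    return word[:i] + ("1" if word[i] == "0" else "0") + word[i + 1:]

def corrects_single_errors(codes):
    """True if every 1-bit corruption of every codeword decodes uniquely back to it."""
    return all(nearest(flip(c, i), codes) == [c]
               for c in codes for i in range(len(c)))

print(corrects_single_errors(["000", "111"]))                # distance 3: True
print(corrects_single_errors(["000", "110", "101", "011"]))  # distance 2: False
```

In the distance-2 set, flipping one bit of 000 gives a word that is distance 1 from three different codewords, so the receiver can tell something went wrong but not which codeword was sent.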

Let’s review the specific scheme with 4 data bits and 3 parity bits (the Hamming(7,4) code):

A, B, C, D are data bits, while X, Y, Z are parity bits.

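Let P(x1..xn) be the function that generates even-ones parity over the inputs x1 to xn: P is 1 exactly when an odd number of the inputs are 1, so the inputs together with P always contain an even number of 1s.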

Let X=P(A,B,C)

Let Y=P(A,B,D)

Let Z=P(A,C,D)
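As a concrete sketch (Python, with a hypothetical `encode` function and an assumed output order A B C D X Y Z), the parity function P is just the XOR of its inputs, and the encoder applies it three times:

```python
from functools import reduce
from operator import xor

def P(*bits):
    """Even-ones parity: 1 when an odd number of inputs are 1."""
    return reduce(xor, bits, 0)

def encode(a, b, c, d):
    """Hamming(7,4) encoder from the notes; the output bit order is an assumption."""
    x = P(a, b, c)
    y = P(a, b, d)
    z = P(a, c, d)
    return (a, b, c, d, x, y, z)

print(encode(1, 0, 1, 1))  # (1, 0, 1, 1, 0, 0, 1)
```

In hardware each parity bit is a 3-input XOR gate, so the whole encoder is three XOR gates.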

From here on, we’re going to assume at most one bit goes bad in a given message. What happens if more than one bit gets flipped? The message will be decoded incorrectly. But let’s ignore that case for now.

Which parity bits will be incorrect if each of the following bits is flipped in transmission?

A

B

C

D

X

Y

Z
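To fill in the list mechanically, one option is to flip each bit of a valid codeword and recompute the three checks. The Python sketch below uses hypothetical names, assumes the same A B C D X Y Z bit order, and uses one arbitrary data word, since the pattern of failing checks depends only on which bit flipped, not on the data.

```python
from functools import reduce
from operator import xor

NAMES = "ABCDXYZ"

def P(*bits):
    """Even-ones parity (XOR of the inputs)."""
    return reduce(xor, bits, 0)

def encode(a, b, c, d):
    return [a, b, c, d, P(a, b, c), P(a, b, d), P(a, c, d)]

def failing_checks(msg):
    """Names of the parity bits that no longer match their data bits."""
    a, b, c, d, x, y, z = msg
    failed = []
    if P(a, b, c, x):
        failed.append("X")
    if P(a, b, d, y):
        failed.append("Y")
    if P(a, c, d, z):
        failed.append("Z")
    return failed

table = {}
for i, name in enumerate(NAMES):
    msg = encode(1, 0, 1, 1)  # any valid codeword gives the same pattern
    msg[i] ^= 1               # flip exactly one bit "in transmission"
    table[name] = failing_checks(msg)
    print(name, "flipped ->", table[name])
```

Every bit produces a different, nonempty set of failing checks, which is exactly what lets the receiver tell which bit to flip back.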

So what’s going on? How many parity bits would we need if we want to send D data bits with single-bit error correction?

The equation:

_________________________________

P = number of parity bits, D = number of data bits.


Other thoughts

1. How can we use that set to detect 2-bit errors?

2. How do we build the encoder (takes in 4 data bits, generates a 7-bit message)?

3. How do we build the decoder (takes in a 7-bit message, generates the corrected 4-bit data)? This is question 1 of GA4.

Moving forward on error correction

The most obvious question is: how do we make codes for more than 4 data bits? And the answer is fairly simple. If the goal is to have 8 data bits, the equation says we need 4 parity bits (12 bits in total). But how do we generate them? The basic theme is that each data bit must be:

- covered by at least 2 parity bits (a data bit covered by only one parity bit would look exactly like a flip of that parity bit), and
- covered by a set of parity bits that is unique: no other data bit can be covered by exactly the same parity bits.
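The number of parity bits follows from a counting argument: P parity checks can report 2^P different pass/fail patterns, one pattern must mean "no error," and each of the D + P bit positions needs a pattern of its own, so we need 2^P ≥ D + P + 1. A minimal Python sketch (the function name is hypothetical) that finds the smallest such P:

```python
def parity_bits_needed(data_bits):
    """Smallest P with 2**P >= data_bits + P + 1 (single-bit error correction)."""
    p = 0
    while 2 ** p < data_bits + p + 1:
        p += 1
    return p

for d in (1, 4, 8, 11):
    print(d, "data bits ->", parity_bits_needed(d), "parity bits")
```

The D = 1 and D = 4 cases give triple redundancy (2 parity bits) and Hamming(7,4) (3 parity bits); D = 11 exactly fills all 15 positions of Hamming(15,11) with 4 parity bits.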

An easy (and fairly standard) way to do this is to number the bit positions in binary, starting at 1. Make each position whose number is a power of 2 (1, 2, 4, 8, etc.) a parity bit; the other positions are data bits. Parity bit 2^k covers every position whose number has a 1 in bit k. Notice that the powers of 2 have exactly one “1” in them, while every other number has at least two, so each data bit is covered by at least 2 parity bits. And since no two numbers have the same set of “1”s, each data bit’s set of covering parity bits is unique. Finally, when we receive the data, we check which parity bits differ from what we’d expect. We just “OR” their numbers together to see which bit was flipped. So if we find that parity bits 0001 and 0100 were different than expected, we’d say that bit 0101 was the one that flipped!
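The numbering scheme translates directly into code. The following Python sketch (hypothetical names; positions numbered from 1 as in the text) places data bits in the non-power-of-2 positions and sets each parity bit to the even parity of the data positions whose numbers share its single "1". It also works for message lengths like 12 that don't fill a full block.

```python
def is_power_of_two(n):
    """True for 1, 2, 4, 8, ...: the parity-bit positions."""
    return n > 0 and (n & (n - 1)) == 0

def encode(data, n):
    """Build an n-bit codeword; element i of the result is position i + 1."""
    data_positions = [q for q in range(1, n + 1) if not is_power_of_two(q)]
    if len(data) != len(data_positions):
        raise ValueError(f"a {n}-bit message holds {len(data_positions)} data bits")
    word = dict(zip(data_positions, data))
    p = 1
    while p <= n:
        # Parity bit p covers every data position whose number has bit p set.
        word[p] = sum(word[q] for q in data_positions if q & p) % 2
        p <<= 1
    return [word[q] for q in range(1, n + 1)]

def checks_pass(codeword):
    """True if every parity group (parity bit plus the bits it covers) has an even count of 1s."""
    n = len(codeword)
    p = 1
    while p <= n:
        if sum(codeword[q - 1] for q in range(1, n + 1) if q & p) % 2:
            return False
        p <<= 1
    return True

print(encode([1, 0, 1, 1], 7))  # [0, 1, 1, 0, 0, 1, 1]
```

Since every position number has at least one "1", flipping any single bit upsets at least one parity group, so `checks_pass` catches it.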

____ ____ ____ ____ ____ ____ ____ ____ ____ ____ ____ ____ ____ ____ ____

0001 0010 0011 0100 0101 0110 0111 1000 1001 1010 1011 1100 1101 1110 1111

Notice that if we use just 1 bit of data, we get standard triple redundancy. What is the “set” of legal codes? And if we want just 4 bits of data, we get the Hamming(7,4) scheme discussed above (with the bits in a different order).

__1_ __0_ __0_ __1_ __1_ __1_ __0_ __0_ __1_ __1_ __1_ __0_ __0_ __0_ __0_

0001 0010 0011 0100 0101 0110 0111 1000 1001 1010 1011 1100 1101 1110 1111
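As a worked check, a short Python sketch can decode the filled-in row, assuming it is a received 15-bit message with even parity and that the values are listed from position 0001 to 1111. It recomputes each parity group and ORs together the numbers of the groups that fail (the helper name is hypothetical):

```python
# Bit values from the example row, positions 1 (0001) through 15 (1111).
row = [1, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 0, 0]

def failed_parity_bits(received):
    """Parity positions (1, 2, 4, 8, ...) whose group has an odd count of 1s."""
    n = len(received)
    failed = []
    p = 1
    while p <= n:
        if sum(received[q - 1] for q in range(1, n + 1) if q & p) % 2:
            failed.append(p)
        p <<= 1
    return failed

failed = failed_parity_bits(row)
flipped = 0
for p in failed:
    flipped |= p  # OR the failing parity-bit numbers together
print("failing parity bits:", [format(p, "04b") for p in failed])
print("flipped position:", format(flipped, "04b"))

corrected = list(row)
if flipped:
    corrected[flipped - 1] ^= 1  # flip the bad bit back
```

For this row the checks for 0010, 0100, and 1000 fail, pointing at position 1110 (bit 14); flipping it back makes every group even again.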

And a few last words

The schemes we’ve used here generate sets of codewords. What I mean is that the sender only sends data that meets certain requirements: the parity bits are generated in a fixed way from the data bits. So there are some sets of bits that the sender will send, and some it won’t send. In the case of even-ones parity over 2 data bits, the set of things that might be sent is {000, 110, 101, 011}. That set has a Hamming distance of 2. The Hamming(7,4) scheme and the Hamming(15,11) scheme both generate sets that have a Hamming distance of 3.
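Those distances can be confirmed by brute force for the small cases. The Python sketch below (hypothetical helper names) builds every codeword the sender could send and takes the minimum pairwise distance; the Hamming(7,4) bit order A B C D X Y Z is assumed, and reordering the bits does not change the distance.

```python
from itertools import combinations, product

def hamming(u, v):
    return sum(b1 != b2 for b1, b2 in zip(u, v))

def min_distance(codes):
    """Smallest Hamming distance between any two distinct codewords."""
    return min(hamming(u, v) for u, v in combinations(codes, 2))

def even_parity_2bit():
    # Two data bits followed by their even-ones parity bit.
    return [(a, b, a ^ b) for a, b in product((0, 1), repeat=2)]

def hamming74():
    # X = P(A,B,C), Y = P(A,B,D), Z = P(A,C,D), as defined earlier.
    return [(a, b, c, d, a ^ b ^ c, a ^ b ^ d, a ^ c ^ d)
            for a, b, c, d in product((0, 1), repeat=4)]

print(min_distance(even_parity_2bit()))  # 2
print(min_distance(hamming74()))         # 3
```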

Why can we only detect (and not correct) a 1-bit error with a set that has a Hamming distance of 2?

Why can we detect 2-bit errors with a Hamming distance of 3? Why can we correct 1-bit errors?

What Hamming distance would we need if we wanted to correct 2-bit errors?
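For reference, the standard relationships between a code set’s minimum Hamming distance $d$ and the errors it is guaranteed to handle are:

$$
\text{detect every error of up to } s \text{ bits} \;\Rightarrow\; d \ge s + 1,
\qquad
\text{correct every error of up to } t \text{ bits} \;\Rightarrow\; d \ge 2t + 1.
$$

So $d = 2$ detects 1-bit errors, $d = 3$ detects 2-bit errors or corrects 1-bit errors (not both at once: a decoder that corrects 1-bit errors will mis-correct a 2-bit error), and correcting 2-bit errors needs $d \ge 5$.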


FPGA internals: Lookup tables.

Let’s start looking at the internals of a Field Programmable Gate Array (FPGA).

Basic issue: we want “programmable hardware,” but we can’t just move transistors around: they are fixed in location. So how do we create a real hardware device (not one emulated in software)?

Answer: We use memory.

Memory is one thing that we can easily change on the fly. Consider an XNOR gate, F = x XNOR y. Its truth table has one row for each of the four combinations of x and y.

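We can implement it as a memory: the inputs x and y form the address, and the word stored at each address is the value of F for that row. We can even implement 2 logic functions at once if the memory is two bits wide (i.e., the word size is 2).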
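A small Python sketch of this idea treats the memory as a list indexed by the address formed from the inputs. Assumptions: x is the high address bit, and the second function stored in the 2-bit word (G = x AND y) is a hypothetical choice for illustration; the notes don’t specify one.

```python
XNOR_BIT, AND_BIT = 0, 1  # which bit of each 2-bit word holds which function

def make_memory():
    """Program a 4-word x 2-bit memory; address = (x << 1) | y."""
    memory = []
    for address in range(4):
        x, y = (address >> 1) & 1, address & 1
        f = 1 if x == y else 0  # F = x XNOR y
        g = x & y               # G = x AND y (illustrative second function)
        memory.append((g << AND_BIT) | (f << XNOR_BIT))
    return memory

def lut_read(memory, x, y):
    """'Evaluate' both functions by reading one word: no gates, just a lookup."""
    word = memory[(x << 1) | y]
    return (word >> XNOR_BIT) & 1, (word >> AND_BIT) & 1

memory = make_memory()
for x in (0, 1):
    for y in (0, 1):
        f, g = lut_read(memory, x, y)
        print(x, y, "F =", f, "G =", g)
```

Reprogramming the hardware amounts to writing different words into `memory`; the read path stays exactly the same.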

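Create a memory that implements the following truth table (from the text):

| k | p | s | w |
|---|---|---|---|
| 0 | 0 | 0 |   |
| 0 | 0 | 1 |   |
| 0 | 1 | 0 |   |
| 0 | 1 | 1 |   |
| 1 | 0 | 0 |   |
| 1 | 0 | 1 |   |
| 1 | 1 | 0 |   |
| 1 | 1 | 1 |   |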
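Because the rows are listed in binary counting order, row i of the table is simply memory address i, with k as the most significant address bit. The Python sketch below (hypothetical names) fills an 8-word, 1-bit-wide memory from any 3-input function. The notes leave column w blank, so the majority function used here is only a placeholder to show the mechanics, not the textbook’s answer.

```python
def build_lut(fn, n_inputs=3):
    """Fill a 2**n_inputs x 1-bit memory: the word at address a is fn(bits of a), MSB first."""
    memory = []
    for address in range(2 ** n_inputs):
        bits = [(address >> (n_inputs - 1 - i)) & 1 for i in range(n_inputs)]
        memory.append(fn(*bits))
    return memory

def lut_read(memory, *inputs):
    """Concatenate the input bits (first input = MSB) into an address and read it."""
    address = 0
    for bit in inputs:
        address = (address << 1) | bit
    return memory[address]

# Placeholder function only; substitute the w column from the text.
def majority(k, p, s):
    return 1 if k + p + s >= 2 else 0

mem = build_lut(majority)
print(mem)  # [0, 0, 0, 1, 0, 1, 1, 1]
```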
