3.1 T-tests - Walker Bioscience

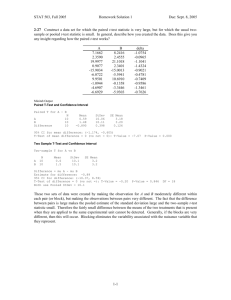

T-tests

My brother, Kim, and I are competitive. One day, the two of us were discussing who was taller. Kim said he was 6’1” tall, plus or minus one inch. I said I was 6’2” tall, plus or minus two inches. So who is taller?

Difference = 6’2” - 6’1” = 1 inch

Error in measuring the difference = sum of the errors in measuring each height = 1” + 2” = 3”

So the difference, 1”, is much less than the error in measuring that difference, 3”. It’s convenient to take this ratio:

$$\frac{\text{Difference}}{\text{Error in estimating the difference}} = \frac{1}{3}$$

Usually, to be convinced that a difference is real, we’d like this ratio to be 2 or more.

Now, suppose we perform a clinical trial where we compare a drug to a placebo, and measure their effect on serum cholesterol. We get these results:

Mean serum cholesterol of patients taking the placebo = 225 mg/dL.

Mean serum cholesterol of patients taking the drug = 220 mg/dL.

Difference = Mean(placebo) - Mean(drug) = 225 - 220 = 5 mg/dL.

Is there really a difference between the drug and the placebo?

Suppose that there is no difference between the drug and the placebo (the null hypothesis). That is, the observations that we label "drug" or "placebo" are really random samples from a single population with a single mean value of serum cholesterol. When we take two random samples from a single population, the means of the two samples will differ somewhat just by random chance. If there really is no difference between the drug and the placebo, what is the probability that we would see a difference of 5 in the two samples?

Let’s look again at the ratio:

$$\frac{\text{Difference}}{\text{Error in estimating the difference}} = \frac{\text{Mean(placebo)} - \text{Mean(drug)}}{\text{Error in estimating the difference}}$$

We've already calculated the numerator: Difference = Mean(placebo) - Mean(drug) = 225 - 220 = 5.

How do we calculate the error in estimating that difference? Before, we used the sum of the errors in each measurement. Let's use that method here. What is the error in measuring each mean?
A convenient measure is the Standard Error of the Mean (SEM), which we saw earlier. From the data on the patients in the clinical trial, we calculate the following, where n(drug) = n(placebo) = n if we have equal numbers in each group:

- Mean(drug)
- Mean(placebo)
- Standard deviation (drug) = SD(drug)
- Standard deviation (placebo) = SD(placebo)
- SEM(drug) = SD(drug) / Sqrt(n)
- SEM(placebo) = SD(placebo) / Sqrt(n)

So we have the SEM for both the drug and the placebo group. We can calculate the ratio

$$\frac{\text{Mean(placebo)} - \text{Mean(drug)}}{\text{Error in estimating the difference}} = \frac{\text{Mean(placebo)} - \text{Mean(drug)}}{\text{SEM(placebo)} + \text{SEM(drug)}}$$

This ratio is, approximately, the T statistic used in the T-test:

$$T \approx \frac{\text{Mean(placebo)} - \text{Mean(drug)}}{\text{SEM(placebo)} + \text{SEM(drug)}}$$

T = the Difference in the Means divided by the Error in measuring that difference.

To make the equation exact, we have to put in some squares and square roots:

$$T = \frac{\text{Mean(placebo)} - \text{Mean(drug)}}{\sqrt{\text{SEM(placebo)}^2 + \text{SEM(drug)}^2}}$$

How big does T need to be for us to be confident that the Difference in the Means is significant? We’ll see how to calculate p-values shortly, but, in general, if T is larger than 2, the p-value will be less than 0.05 (provided N is greater than ~10).

How do we get from the T statistic to a p-value? If the null hypothesis is true (there really is no difference between the drug and the placebo), what is the probability that we would get the observed difference between the means of the two samples?

One way to answer this question would be to do permutation or randomization tests. If the null hypothesis is true (there really is no difference between the drug and the placebo), the labels “placebo” and “drug” don’t mean anything. We could randomly shuffle the labels (placebo or drug), calculate the T statistic for each of the random shuffles, and see how often the T in our random shuffles was greater than the T for our original clinical trial data.
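The label-shuffling procedure just described can be sketched in a few lines of Python. This is a minimal illustration, not the chapter's own code: the cholesterol values are hypothetical, invented so that the group means come out to 225 and 220 mg/dL, and the function names `t_statistic` and `permutation_p_value` are assumptions. The sketch compares absolute values of T, which is a two-sided variant of the "how often was the shuffled T greater" count.

```python
import random
from math import sqrt
from statistics import mean, stdev


def t_statistic(a, b):
    """Difference in means divided by sqrt(SEM(a)^2 + SEM(b)^2)."""
    sem_a = stdev(a) / sqrt(len(a))
    sem_b = stdev(b) / sqrt(len(b))
    return (mean(a) - mean(b)) / sqrt(sem_a ** 2 + sem_b ** 2)


def permutation_p_value(a, b, n_shuffles=1000, seed=0):
    """Fraction of label shuffles whose |T| is at least the observed |T|."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    observed = abs(t_statistic(a, b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)  # shuffling the values is the same as shuffling the labels
        shuffled_a, shuffled_b = pooled[:len(a)], pooled[len(a):]
        if abs(t_statistic(shuffled_a, shuffled_b)) >= observed:
            hits += 1
    return hits / n_shuffles


# Hypothetical serum cholesterol values (mg/dL), invented for illustration only.
placebo = [231, 218, 240, 225, 212, 236, 229, 221, 233, 205]
drug = [224, 210, 229, 216, 222, 208, 231, 219, 214, 227]

p = permutation_p_value(placebo, drug)
print(f"observed T = {t_statistic(placebo, drug):.2f}, permutation p = {p:.3f}")
```

With more shuffles the estimated p-value becomes more stable; the number of shuffles and the seed here are arbitrary choices.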
Suppose that, when we did 100 random shuffles, we never got a random T bigger than the T in our original clinical trial data. We could then say that, if the null hypothesis is true (there really is no difference between the drug and the placebo), the probability that we would get the observed difference between the means of the two samples is less than 1/100, or p < 0.01. The observed difference is very unlikely if the drug and placebo are not different.

Suppose instead that, when we did 100 random shuffles, we got a random T bigger than the T in our original clinical trial data in 70 out of 100 random shuffles. We could then say that, if the null hypothesis is true (there really is no difference between the drug and the placebo), the probability that we would get a T statistic bigger than the observed T statistic for the two samples is 70/100, or p = 0.70. The observed difference is very likely to occur if the drug and placebo are not different.

When the t-test was developed, over 100 years ago, there were no computers, so doing 100 permutations and calculating 100 T statistics was very tedious. Instead, a formula was found for the T-distribution that gave an easily computed approximation to the results that you would get doing random shuffles.

Hypothesis testing

Suppose that we are testing a drug to kill bacteria in patients with sepsis (a potentially fatal bacterial infection in the blood). Unknown to us, the drug either kills bacteria (by a clinically useful amount) or it does not.

The null hypothesis, H0, for our experiment is that the drug does not kill bacteria.

The alternative hypothesis, H1, for our experiment is that the drug does kill bacteria.

Usually, the null hypothesis is that there is no difference between treatments. Occasionally, such as when showing that a generic drug is equivalent to the patented version of the same drug, the null hypothesis is that the generic and patented versions are different. We won’t consider these cases further.
For the rest of the book, we'll assume that the null hypothesis is that the treatment has no effect.

We collect data on the bacteria in the blood of patients given either the drug or a placebo, and calculate a test statistic:

$$\text{Test statistic} = \frac{\text{Effect size}}{\text{Standard error of effect size}}$$

where the effect size is the difference between the treatment groups (such as the difference in mean bacteria count).

To compare two groups, such as drug versus placebo, the most common statistic is the T statistic used in a t-test. Other commonly used test statistics are the F-statistic for analysis of variance and the chi-squared statistic for chi-squared tests.

We calculate the probability that a value of the statistic at least as large as the observed value would have been seen, if the null hypothesis is true. This probability is the p-value for the test. Calculation of the p-value is called a significance test.

p-value = the probability that the experiment would produce a test statistic as big as, or bigger than, the actual test statistic, if the null hypothesis is true.

If the observed p-value is less than a pre-specified value (typically 0.05 or 0.01), then we say that the result is statistically significant. You will often see this pre-specified value written as α, the Greek letter alpha.

If the observed p-value is less than alpha, then we reject the null hypothesis. If the observed p-value is greater than alpha, then we do not reject the null hypothesis.

The value of the test statistic corresponding to alpha is the critical value. Values of the test statistic that give an observed p-value less than alpha lead to rejection of the null hypothesis; these values define the rejection region.

Type-I and type-II errors

If the treatment does not work, but we conclude that it does work, we make a type-I error (a false positive). The probability of a Type I error is alpha.
If the treatment does work, but we conclude that it does not work, we make a type-II error (a false negative). The probability of a Type II error is beta.

Power is the probability that we will reject the null hypothesis when the treatment really does work (that is, when the null hypothesis is false). Power = 1 - beta.

| | Clinical trial gives significant p-value (indicating the treatment works) | Clinical trial gives nonsignificant p-value (indicating the treatment does not work) |
|---|---|---|
| Treatment works | True positive result. Correct conclusion. Probability of a true positive result is power = 1 - beta. | Type II error. False negative result. Probability of a Type II error is beta. |
| Treatment does not work | Type I error. False positive result. Probability of a Type I error is alpha. | True negative result. Correct conclusion. |

T-test for two independent samples

Let's look at an example of a t-test for two independent samples. Independent samples means that the subjects in one sample get one treatment, and the subjects in the second sample get the second treatment.

We will test the ability of a drug to kill bacteria. The response is the count of bacteria colony forming units (CFU) × $10^6$ per milliliter of blood. Specifically, we will use a two-sample t-test to test the null hypothesis:

mean bacteria count in the drug group = mean bacteria count in the placebo group

The standard version of the two-sample t-test assumes the following:

- the samples are normally distributed
- the two samples have equal variance

The standard version of the two-sample t-test will usually give valid results even if the data do not completely meet these assumptions. However, if the samples are strongly non-normal, or have greatly different variances, we should consider alternative analyses, such as log transforming the data to get a normal distribution, or using the non-parametric Wilcoxon rank tests described later.

From our experiment, we collect the following data.
| Treatment | Bacteria counts (CFU × $10^6$ per mL) |
|---|---|
| Drug | 0.7, 0.9, 1.0, 1.2, 2.2, 2.5, 3.6, 3.9, 4.2, 4.5, 4.5, 5.6, 5.9, 6.1 |
| Placebo | 2.0, 2.3, 2.3, 3.9, 4.1, 4.4, 5.3, 5.7, 6.3, 6.4, 7.2, 7.7, 8.0 |

In this case, the data do not show significant non-normality or outliers, and the standard deviations of the two treatment groups are similar. So we'll use the standard version of the t-test assuming equal variance.

The t-test gives p = 0.03586. We reject the null hypothesis and conclude that the bacterial count was significantly less in the drug group than in the placebo group (p = 0.03586).

We get the same information from the confidence interval. The 95% confidence interval for the true value of the difference of the treatment group means (drug minus placebo) is -3.2847862 to -0.1218072. Because the 95% confidence interval does not include zero, we reject the null hypothesis that the difference between the two groups is zero.

Do bears lose weight between winter and spring?

Here is another example of a two-sample t-test. We measure the weight of one group of bears in winter, and measure the weight of a different group of bears the following spring.

| Measurement time | Bear weights |
|---|---|
| Winter | 300, 470, 550, 650, 750, 760, 800, 985, 1100, 1200 |
| Spring | 280, 420, 500, 620, 690, 710, 790, 935, 1050, 1110 |

The t-test gives p = 0.7134, which is not significant, so we do not reject the null hypothesis that the mean weight of bears in winter is the same as the mean weight of bears in spring.

The 95% confidence interval for the true value of the difference of means between Winter and Spring (Spring minus Winter) is -304.9741 to 212.9741. Because the 95% confidence interval includes zero, we do not reject the null hypothesis that the difference between the two groups is zero.

A better design for this study would be to measure the same bears in winter and in spring, to remove the effect of variability among bears from the experiment. We'll do that shortly using a paired t-test.
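The bacteria-count analysis above can be reproduced with a short Python sketch. The chapter does not say which software produced its numbers, so the use of NumPy and SciPy (`scipy.stats.ttest_ind` with `equal_var=True`) is an assumption; the confidence interval is built by hand from the pooled variance so that each step of the calculation is visible.

```python
import numpy as np
from scipy import stats

# Bacteria counts (CFU x 10^6 per mL) from the table above.
drug = np.array([0.7, 0.9, 1.0, 1.2, 2.2, 2.5, 3.6, 3.9, 4.2, 4.5, 4.5, 5.6, 5.9, 6.1])
placebo = np.array([2.0, 2.3, 2.3, 3.9, 4.1, 4.4, 5.3, 5.7, 6.3, 6.4, 7.2, 7.7, 8.0])

# Standard two-sample t-test assuming equal variance (two-sided).
result = stats.ttest_ind(drug, placebo, equal_var=True)
t_stat, p_value = result.statistic, result.pvalue

# 95% confidence interval for mean(drug) - mean(placebo), using the pooled variance.
n1, n2 = len(drug), len(placebo)
df = n1 + n2 - 2
pooled_var = ((n1 - 1) * drug.var(ddof=1) + (n2 - 1) * placebo.var(ddof=1)) / df
se_diff = np.sqrt(pooled_var * (1 / n1 + 1 / n2))
diff = drug.mean() - placebo.mean()
t_crit = stats.t.ppf(0.975, df)  # two-sided 95% critical value
ci_low, ci_high = diff - t_crit * se_diff, diff + t_crit * se_diff

print(f"t = {t_stat:.3f}, p = {p_value:.5f}")
print(f"95% CI for the difference: {ci_low:.4f} to {ci_high:.4f}")
```

The interval excludes zero exactly when the two-sided p-value is below 0.05, which is why the two ways of reading the result agree.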
T-test for two independent samples: unequal variance

In some cases, we may know or expect that two groups have unequal variance. For example, we may want to compare the mean yield of two processes, where we know that one process is more variable than the other. It may be that a group receiving an active treatment (drug) is more variable in its response than the control (placebo) group.

In these cases, we may use an alternative version of the t-test, called Welch's test, which does not assume equal variance of the two treatment groups.

Furness and Bryant (1996) compared the metabolic rates of male and female breeding northern fulmars (data described in Logan (2010) and Quinn (2002)).

| Sex | Metabolic rate |
|---|---|
| Female | 728, 1087, 1091, 1361, 1491, 1956 |
| Male | 526, 606, 843, 1196, 1946, 2136, 2309, 2950 |

The boxplots indicate that the variances of the metabolic rates of males and females are not equal. So we use the Welch t-test, which does not assume equal variance of the two treatment groups.

The Welch t-test gives a non-significant p = 0.4565, so we do not reject the null hypothesis.

Paired t-test for matched samples

We use the paired t-test when a single subject receives two treatments, or is measured on two occasions. For example, we could test each subject's heart rate after exercising on a bicycle and after exercising by walking. Because the same subject receives both treatments (bicycle and walking), the observations are paired (within subject), and we use a paired t-test.

Here we'll re-examine the hypothesis that bears lose weight during hibernation. Previously, different bears were weighed in November and March, and we tested for differences using an ordinary (unpaired) t-test. Here, the same bears are weighed in November and March. We will test if their mean weight is different using a paired t-test.
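As a minimal sketch of the paired test on the bear data, the code below reuses the winter and spring weights listed earlier in the chapter, read here as the same ten bears measured twice and kept in the same order. Using SciPy's `ttest_rel` and `ttest_ind` is an assumption about tooling, not something the chapter specifies. The unpaired test is run on the same numbers only for comparison.

```python
from scipy import stats

# Bear weights; position i in each list is treated as the same bear.
winter = [300, 470, 550, 650, 750, 760, 800, 985, 1100, 1200]
spring = [280, 420, 500, 620, 690, 710, 790, 935, 1050, 1110]

# Paired t-test: works on the within-bear differences.
paired = stats.ttest_rel(winter, spring)

# Ordinary (unpaired) equal-variance t-test on the same numbers, for comparison.
unpaired = stats.ttest_ind(winter, spring, equal_var=True)

# The paired test is the same calculation as a one-sample test
# of the per-bear differences against zero.
differences = [w - s for w, s in zip(winter, spring)]
on_differences = stats.ttest_1samp(differences, popmean=0)

print(f"paired:   t = {paired.statistic:.3f}, p = {paired.pvalue:.7f}")
print(f"unpaired: t = {unpaired.statistic:.3f}, p = {unpaired.pvalue:.5f}")
print(f"one-sample on differences: p = {on_differences.pvalue:.7f}")
```

The two tests use identical numbers; only the pairing information changes, and with it the amount of between-bear variability left in the error term.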
The big advantage of measuring the same bears (and using the paired t-test) is that, because each bear serves as its own control, we control for the variability among bears. This has the effect of removing the (unexplained) variability due to variation in weight among the bears.

| Measurement time | Bear weights |
|---|---|
| Winter | 300, 470, 550, 650, 750, 760, 800, 985, 1100, 1200 |
| Spring | 280, 420, 500, 620, 690, 710, 790, 935, 1050, 1110 |
| Difference | 20, 50, 50, 30, 60, 50, 10, 50, 50, 90 |

Notice that all the bears lose weight.

Using the paired t-test, we get p = 0.0001053, which is significant. We reject the null hypothesis that the change in weight between winter and spring is zero.

Let's compare the results of the paired and unpaired t-tests.

First, use an ordinary t-test, assuming 10 bears in November and 10 different bears in March. Two-sample t-test assuming equal variance: p = 0.71338.

Second, use a paired t-test. The same 10 bears are weighed in November and again in March: p = 0.00011.

The paired t-test is more powerful than the ordinary t-test for detecting differences. The paired t-test requires a smaller number of subjects (smaller sample size).

We will see that the paired t-test is a special case of multiple regression analysis, where the subject (bear) is a variable in the analysis. By controlling (removing) the unexplained variance due to variation among subjects (bears), we increase the power of our analysis to detect significant differences.

One-sample t-test

A one-sample t-test tests whether the mean of a sample is significantly different from a specified mean.

Suppose that we have recruited several students for a study to see if body mass index (BMI) is changed by exercise. In previous studies, the mean BMI of students at baseline (before the exercise) was 18.2. The BMI of the new volunteers is 17, 17, 18, 18, 18, 19, 20, 20, 21, 22, with a mean of 19. Is the mean BMI for the new students significantly different from 18.2?

Our null hypothesis is that the mean BMI of the new students is 18.2.
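A minimal sketch of this one-sample test, assuming SciPy's `ttest_1samp` as the tool (the chapter does not name its software), with the T statistic also computed by hand as (sample mean - 18.2) / SEM:

```python
from math import sqrt
from statistics import mean, stdev

from scipy import stats

# BMI of the new volunteers and the baseline mean from previous studies.
bmi = [17, 17, 18, 18, 18, 19, 20, 20, 21, 22]
baseline_mean = 18.2

# T by hand: difference from the specified mean, divided by the SEM.
sem = stdev(bmi) / sqrt(len(bmi))
t_by_hand = (mean(bmi) - baseline_mean) / sem

# Same test via SciPy (two-sided by default).
result = stats.ttest_1samp(bmi, popmean=baseline_mean)

print(f"mean = {mean(bmi)}, SEM = {sem:.4f}")
print(f"t by hand = {t_by_hand:.4f}, t from SciPy = {result.statistic:.4f}")
print(f"p = {result.pvalue:.4f}")
```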
The one-sample t-test gives p = 0.1708, which is not significant. We do not have sufficient evidence to reject the null hypothesis that the mean BMI of the new students is 18.2. However, our sample size of 10 students is very small, so we have little power to detect any difference.

A little while later we recruit another group of students. Is their mean BMI significantly different from 18.2? The BMI of the second set of volunteers is 18, 18, 18, 19, 19, 20, 20, 21, 22, 22, 23, with a mean of 20.

The one-sample t-test gives p = 0.007525, so we reject the null hypothesis that the mean BMI of this group of students is 18.2. The 95% confidence interval for the true value of the mean is 18.79823 to 21.20177.

The paired t-test is equivalent to a one-sample t-test, where the one-sample t-test uses the difference between before and after values.

One-tailed versus two-tailed tests

Suppose that I am considering purchasing a machine. Two different machines are available. I would like to know if one of the machines produces more than the other. Before the experiment begins, I don't care which one produces more. Because either machine could be better, I use a two-tailed test.

Suppose, instead, that I already have a machine that produces 50 devices per hour. A supplier claims that he has a new machine that produces more than 50 devices per hour. I am only interested in proving that the new machine is better than my old machine. I am not interested in proving the new machine is worse. Because I am only interested in testing one direction (higher yield), I could use a one-tailed test.

As another example, suppose that a vendor supplies bottles that are supposed to have 50 ml of liquid. We would be concerned if the bottles contain less than 50 ml. We are not concerned if the bottles contain more than 50 ml. In this case, we could choose a one-sided test.
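To see how the choice of tails changes the p-value, the sketch below reuses the second BMI sample from earlier in this section rather than inventing machine or bottle data. It assumes SciPy 1.6 or later, where `ttest_1samp` accepts an `alternative` argument; treating "greater than 18.2" as the direction of interest is purely an illustrative choice.

```python
from scipy import stats

# Second set of BMI volunteers, tested against the baseline mean of 18.2.
bmi_second = [18, 18, 18, 19, 19, 20, 20, 21, 22, 22, 23]
baseline_mean = 18.2

# Two-tailed: is the mean different from 18.2 in either direction?
two_sided = stats.ttest_1samp(bmi_second, popmean=baseline_mean, alternative="two-sided")

# One-tailed: is the mean greater than 18.2?
greater = stats.ttest_1samp(bmi_second, popmean=baseline_mean, alternative="greater")

# One-tailed in the other direction: is the mean less than 18.2?
less = stats.ttest_1samp(bmi_second, popmean=baseline_mean, alternative="less")

print(f"two-sided p = {two_sided.pvalue:.6f}")
print(f"greater   p = {greater.pvalue:.6f}")
print(f"less      p = {less.pvalue:.6f}")
```

The T statistic is the same in all three calls. When the observed effect lies in the tested direction, the one-tailed p-value is half the two-tailed one, which is why the direction has to be chosen before looking at the data.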
Generally, if you are going to submit a test result to a regulatory agency (such as the Food and Drug Administration), or submit the result of an analysis for publication in a scientific journal, they will require you to use a two-tailed test.

Log transform to deal with outliers

If you have outliers and/or non-normal distributions, you may be able to apply a transform to the data to make the distribution more normal, and to reduce the influence of the outliers.

Recall a previous example where we measured the level of the protein mucin in the blood of patients with colon cancer and in healthy controls. Notice that the mucin levels in the colon cancer group are all lower than the levels in the healthy controls. So we would think the difference in means between the two groups should be significant. However, the healthy control group has an observation with a value of 141 that is an outlier far from any other observed value.

| Group | Mucin level |
|---|---|
| Colon cancer | 83, 89, 90, 93, 98 |
| Healthy control | 99, 100, 103, 104, 141 |

Even though the boxplot shows a clear separation of the two groups, the p-value for the t-test is not significant (p = 0.054), due to the outlier (and the small sample size).

We could try a log transform of the data to reduce the effect of the outlier. In this case, the log transform yields a p-value of p = 0.036, so we reject the null hypothesis and conclude that colon cancer patients have mucin levels different from those of healthy controls.

Another alternative for dealing with outliers is to use a non-parametric test, such as a Wilcoxon rank sum test, described later.
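The effect of the log transform on the mucin example can be sketched as follows. Two things here are assumptions rather than statements from the text: that the reported p-values come from the equal-variance two-sample t-test, and that NumPy and SciPy are the tools. The base of the logarithm does not matter for the t-test, because changing base only rescales every value by the same constant.

```python
import numpy as np
from scipy import stats

# Mucin levels from the table above.
cancer = np.array([83, 89, 90, 93, 98])
control = np.array([99, 100, 103, 104, 141])  # 141 is the outlier

# Equal-variance two-sample t-test on the raw values.
raw = stats.ttest_ind(cancer, control, equal_var=True)

# Same test after a natural-log transform, which pulls the outlier in.
logged = stats.ttest_ind(np.log(cancer), np.log(control), equal_var=True)

print(f"raw data: t = {raw.statistic:.3f}, p = {raw.pvalue:.3f}")
print(f"log data: t = {logged.statistic:.3f}, p = {logged.pvalue:.3f}")
```

The transform shrinks the gap between 141 and the other control values, which reduces the control group's variance and so the standard error of the difference.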