1994 Annual Proceedings - The National Association of Test Directors

National Association of Test Directors

Guiding Principles for Performance Assessment

Proceedings of the 1994 NATD Annual Symposium

Presented at the National Council on Measurement in Education Annual Conference

April 1994

New Orleans, LA

Edited by Joseph O'Reilly, Mesa (AZ) Public Schools

National Association of Test Directors 1994 Symposia

This is the tenth volume of the published symposia, papers and surveys of the National Association of Test Directors (NATD). This publication serves an essential mission of NATD - to promote discussion and debate on testing matters from both a theoretical and practical perspective. In the spirit of that mission, the views expressed in this volume are those of the authors and not NATD. The paper and discussant comments in this volume were presented at the April 1994 meeting of the National Council on Measurement in Education (NCME) in New Orleans.

The authors and editor of this volume are:

Joe Hansen, Colorado Springs Public Schools,

1115 North El Paso Street, Colorado Springs, CO 80903-2599

719.520.2077

Michael Kean, CTB/McGraw-Hill

20 Ryan Ranch Road, Monterey, CA 93940-5903

408.393.7816

C. Thomas Kerins, Illinois State Board of Education

100 North First Street, Springfield, IL 62777-0001

217.782.0322

Joseph O'Reilly, Mesa Public Schools

549 North Stapley Drive, Mesa, AZ 85203

602.898.7771

Table of Contents

A Proposed Set of Guiding Principles for Performance Assessment: Rationale and Discussion

Joe Hansen

Criteria For a Good Assessment System

Michael Kean

Quality Performance Assessment: A State Perspective

C. Thomas Kerins

A Proposed Set of Guiding Principles for Performance Assessments: Rationale and Discussion

Joe B. Hansen

Colorado Springs Public Schools

Overview

This symposium grew out of a concern that as the alternative assessment movement gained momentum and followers, assessment development efforts were proliferating at all levels, from classroom to state department of education, led by teachers and administrators who lacked even the most basic grounding in traditional measurement theory and practice, and who seemed, at least, to have little if any concern about fundamental issues of technical adequacy regarding the assessments they were developing. This situation is worsened by the presence of charismatic figures who have flooded the country with seminars, "institutes" and "academies," videotapes and books in which technical issues pertaining to performance assessments are either eschewed or ignored. This is further exacerbated by a proliferation of legislative mandates for performance assessments tied to content standards, in which technical issues are either ignored or addressed superficially.

The author's personal experience with various institutes, academies, workshops and legislative mandates aroused his concern that the great potential of alternative assessments for adding new value to the assessment process and the instructional process could be lost if there were no quality control standards to guide their development. This concern led to the formation of an ad hoc committee of NATD members to draft a set of quality control guidelines. The hope was that such an effort might eventually lead to a handbook or a "how to" guide for teachers and educational administrators on techniques for building quality assurance factors into their performance assessments as they are developed. The committee members who participated in this effort are, in alphabetical order:

Karen Banks, Wake Co. Public Schools, NC

Judith Costa, Clark Co. Public Schools, NV

Maryellen Donahue, Boston Public Schools, MA

Joy Frechtling, WESTAT, Rockville, MD

Aaron Gay, Norfolk Public Schools, VA

Joe Hansen, Colorado Springs Public Schools, CO (Chair)

Michael Kean, CTB/McGraw-Hill, Monterey, CA

Mary Moore, Dayton Public Schools, OH

Carole Perlman, Chicago Public Schools, IL

Introduction

Since the current wave of educational reform began in the mid-1980s, there has been a growing interest in alternative forms of assessment. Much of the impetus for this movement toward alternative assessment comes from dissatisfaction with traditional multiple-choice, standardized tests. Critics of such tests claim that they are biased, over-relied upon and unable to test higher-order thinking skills, and that they lead to undesirable consequences when used for high-stakes decisions. The National Commission on Testing and Public Policy (NCTPP) published the results of a three-year study of testing policy in 1990, in which they stated:

Current testing, predominantly multiple choice in format, is over-relied upon, lacks adequate public accountability, sometimes leads to unfairness in the allocation of opportunities, and too often undermines vital social policies (NCTPP, 1990, p. ix).

The NCTPP study concluded that:

To help promote greater development of talents of all our people, alternative forms of assessment must be developed and more critically judged and used, so that testing and assessment open gates of opportunity rather than close them off (p. x).

Many others have criticized the use of standardized tests and called for greater authenticity in educational assessments (Archibald & Newmann, 1988; Cannell, 1989; Neil & Medina, 1989; Shepard, 1989; Wiggins, 1990).

These critics and others initiated the movement toward alternative forms of assessment, which in the past five years has become a national trend. Alternative assessments are a crucial element in the educational reform efforts of the National Goals Panel, NAEP, and an increasing number of state-level reform efforts, including but not limited to Vermont, Kentucky, Georgia, California, Colorado, Illinois, Oregon and Washington.

As this movement has gained momentum it has fostered a proliferation of local development efforts at classroom, school and district levels all across the country. In many instances these local developments are undertaken by teachers, administrators and others whose expertise in psychometric methods is highly limited. One result of this widespread development has been a decrease in the concern for technical rigor in the new assessments. This lack of concern for technical rigor is, in part, a function of the growing disdain for traditional methods of assessment and, in part, a natural effect of the fact that much of the development work is being conducted by educators with a minimal amount of knowledge or skill in psychometrics.

To make matters worse, prestigious individuals and organizations have flooded the country with assessment workshops, seminars, institutes and academies in which minimal lip service is paid to issues of quality control and technical rigor while great enthusiasm is generated for performance assessment. The overall effect of these workshops is to increase the level of home-grown, unguided assessment development efforts, which result in assessments of questionable quality.

After watching these events unfold in Colorado as well as elsewhere in the country for several years, I became very concerned that the great potential of performance assessment, portfolio assessment and other alternative approaches for adding new value to the assessment process could be lost if there were no quality-control standards to guide their development. This concern for quality control led me to form an ad hoc committee, under the auspices of NATD, to draft a set of quality-control guidelines for performance assessments, which could provide the guidance needed. It was my hope that the guidelines would be endorsed by NATD, NCME, AERA and perhaps ASCD, AASA and the major teacher unions. Ultimately, I hoped that the guidelines would be accompanied by an "assessment developer's guidebook" which would provide specific instructions for teachers and administrators on how to build the quality into their assessments and evaluate the assessment instruments. Therefore, at the AERA conference in 1993, I sought and received permission from the new NATD president to organize an NATD working group to undertake the task of developing the quality guidelines or standards for performance assessments.

Committee Membership and Approach

The committee members were all members of NATD. They included: Michael Kean, CTB/McGraw-Hill; Carole Perlman, Chicago Public Schools; Karen Banks, Wake County Public Schools, NC; Aaron Gay, Norfolk, VA Public Schools; Maryellen Donahue, Boston Public Schools; Mary Moore, Dayton, OH Public Schools; and Judith Costa, Clark County, NV Public Schools. The committee met by telephone conference call approximately every two months between May and November 1993.

To initiate the discussion, I provided each committee member with a draft of a set of guidelines I had developed for use in a workshop in my own district. Over the course of four telephone conferences and many fax exchanges, we revised, added to and refined the guidelines until we were satisfied that they were at least a solid starting point for peer review. We decided that the best way to initiate that review was to present the guidelines to our colleagues at this symposium.

Guiding Principles

The guidelines, or Guiding Principles as we have dubbed them, were influenced by a variety of factors including: our own experiences, pending legislation on opportunity to learn, emerging research findings and a seminal article by Linn, Baker and Dunbar (1991). They represent our best effort at defining the key concerns surrounding the development of performance assessments and providing at least conceptual guidance for such development.

The seven Guiding Principles are described below.

1.0 Purpose of Assessment

* Different tests are designed for different purposes and should be used only for the purpose(s) for which they are designed. For example, norm-referenced tests (NRTs) can most efficiently assess the breadth of the curriculum, curriculum-referenced tests (CRTs) can provide more in-depth measures of attainment of specific outcomes, and performance assessments can provide a better means of assessing a student's ability to integrate and apply knowledge from different sources.

2.0 Use of Multiple Measures

* Multiple measures should always be considered in generating information upon which any type of educational decision is to be made.

* No test, no matter how technically advanced, should be used as the sole criterion in making high-stakes decisions.

3.0 Technical Rigor

* All assessment must meet appropriate standards of technical rigor, which have been clearly defined prior to the development of the assessment. This is even more important when an assessment is used in a "high stakes" decision context.

* If a performance assessment cannot meet desired technical standards, it should not be used to make high-stakes decisions.

* The APA/AERA/NCME Standards and the Code of Fair Testing Practices represent the type of technical standards against which all tests should be judged.

* In addition to technical standards, the quality of performance assessments should be judged against a set of quality criteria which, at a minimum, address the issues of consequences, fairness, transfer and generalizability, cognitive complexity, content quality, content coverage, meaningfulness and cost efficiency as described by Linn, Baker and Dunbar (1991).

* All assessment used in high stakes decisions must be developed in conjunction with personnel who have demonstrated expertise in psychometrics.

4.0 Cost Effectiveness

* In making selection decisions about assessments, the quality and utility of the information produced must be weighed against the cost of collecting, interpreting and reporting it. Such costs must take into account the time requirements for developers, teachers, administrators and students.

5.0 Protection of Students/Equitability

* No harm should accrue to any student or groups of students as the result of administration or subsequent use of the results of any form of assessment.

* Since some studies have found results generated by performance assessments to exhibit systematic ethnic, racial and gender bias, extra care should be exercised when using them, especially in their interpretation, and alternative explanations should be considered when such bias is exhibited (Williams, Phillips & Yen, 1991). This problem can be ameliorated through the use of multiple measures (Guiding Principle 2.0).

6.0 Educational Value

* All performance assessment should be designed so that both the administration of the assessment itself and the use of the results of the assessment augment the educational experience of students. Indeed, augmentation is one of the most significant potential contributions performance assessment has to offer to educational reform.

7.0 Decision Making

* All assessment should provide data that enhance the decision-making ability of students, teachers, school administrators, central office administrators, parents and/or community members.

Other Standards

Since our committee completed its work on the current draft of these Guiding Principles, a number of other national organizations have also released drafts of assessment standards. These include the National Council of Teachers of Mathematics (NCTM) and the Mathematical Sciences Education Board (MSEB) as reported in the FAIRTEST Examiner (FAIRTEST, 1994).

The Center for Research on Evaluation, Standards, and Student Testing (CRESST) has also published a set of quality criteria for performance assessments (CRESST, 1993) based on the work of Linn, Baker and Dunbar (1991) and referred to above in NATD Guiding Principle 3.0. It is interesting to note that although these efforts overlap, all of the concerns addressed by them are encompassed by our Guiding Principles. Each of these sets of standards is summarized below.

MSEB Assessment Standards

The MSEB standards address three principles:

* The Content Principle: Assessment should reflect the mathematics that is most important for students to learn.

* The Learning Principle: Assessment should enhance mathematics learning and support good instructional practice.

* The Equity Principle: Assessment should support every student's opportunity to learn important mathematics.

NCTM Assessment Standards

The NCTM has six standards:

* Important Mathematics: Assessment should reflect mathematics that is most important for students to learn.

* Enhanced Learning: Assessment should enhance mathematics learning.

* Equity: Assessment should promote equity by giving each student optimal opportunities to demonstrate mathematical power and by helping each student meet the profession's high expectations.

* Openness: All aspects of the mathematics assessment process should be open to review and scrutiny.

* Valid Inferences: Evidence from assessment activities should yield valid inferences about students' mathematics learning.

* Consistency: Every aspect of an assessment should be consistent with the purposes of the assessment.

CRESST Assessment Standards

These standards, developed by CRESST, are the only set among those recently released to specifically address reliability. They are:

* Consequences: Assessments must minimize unintended negative consequences, and we need from the outset to assess the actual consequences of our actions. This includes a concern for validity.


* Fairness: An assessment should allow students of all cultural backgrounds to exhibit their knowledge and skills. All students must have an equal opportunity to learn the complex skills being assessed.

* Transfer and Generalizability: Scores on assessments should enable statements about students' capabilities on a larger domain of skills and knowledge represented by the assessment.

* Cognitive complexity: An assessment should require a student to use complex thinking skills. It should take into account prior knowledge, examine processes used, provide novel problems, include tasks that the student cannot memorize in advance, and provide evidence that it elicits complex understanding or problem-solving skills.

* Content Quality: An assessment should evaluate content that is important and valid. The task should be worth the students' time and effort.

* Linguistic Appropriateness: An assessment must credit students with what they know and can do and must not make language demands that overwhelm them and undermine their motivation.

* Instructional Sensitivity: An assessment should tap what a student has learned through instruction and should not merely reveal individual differences which are independent of instruction.

* Content Coverage: An assessment should match well the content of the curriculum and should not leave unassessed curriculum gaps.

* Meaningfulness: An assessment should be meaningful to students, teachers, parents and the community. It should evoke positive reactions from teachers and students, be perceived as a valid indicator of competence, engage students and motivate them to perform their best, and be credible to parents, teachers and the community.

* Cost and Efficiency: An assessment should be practical. The information gained from it should be worth the cost and effort required to obtain it. It should be cost efficient and administered and scored in an efficient and cost-effective manner.

Table 1 compares each of the above sets of standards against the proposed NATD standards. As can be observed, the four sets share two common standards - equity and educational value. Three of the four address technical rigor in some way, although the approach taken toward this topic by the proposed NATD standards is more comprehensive than any of the others.

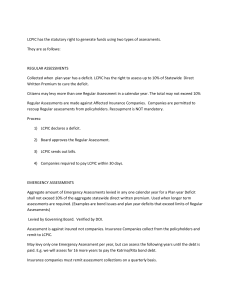

Table 1 Comparison Chart of Assessment Standards

Standard            NATD   NCTM       MSEB   CRESST
Purpose             Yes    No         No     No
Multiple Measures   Yes    No         No     No
Technical Rigor     Yes    Validity   No     Yes
Cost Effective      Yes    No         No     Yes
Equity/Protection   Yes    Yes        Yes    Yes
Educational Value   Yes    Yes        Yes    Yes
Decision Making     Yes    No         No     No

Summary & Conclusions

It is clear from the appearance of the newly emergent standards from varied sources that there is a need for quality control in the development of performance assessments. Obviously, our committee was not the only group of professionals to recognize this need and respond to it.

Several questions now arise regarding the various sets of standards that have emerged.

First is the question of whether it is desirable to have a single authoritative set of standards or guidelines and, if so, whose responsibility is it to develop them? Second is the question of how to make effective use of the current standards and those that we may expect to see emerge in the near future. If NCTM and MSEB have proffered standards, isn't it reasonable to expect that other curriculum-development groups will follow suit? Third is the question of what role organizations such as NATD and NCME should play in the development and promotion of assessment standards. In my opinion, it is extremely important to the long-term viability of assessment reform that such standards not only exist but that they undergird all development activities from the classroom to statewide and even national assessment efforts. Teachers have been estimated to spend as much as 80 percent of their classroom time conducting assessment in some form or another (Stiggins & Bridgeford, 1985). If assessment reform is to have a lasting positive effect on education, then teachers must learn to distinguish between quality assessments and the superficial characteristics of assessments that are in vogue simply because they are different from what has been used in the past. As many states undertake educational reforms focused on standards, it is crucial that the assessment practices they foster are guided by well-conceived quality control standards, for, if they are not, we may well find that such reforms result in more harm to students than the alleged damage caused by traditional assessments whose psychometric qualities are well established.

I feel strongly that the professional measurement community has a responsibility to provide leadership in the development of assessment standards. NATD and NCME as flagship organizations, one focused on practice and the other on research, should work together to establish clear, relevant, rigorous and practical guidelines for practitioners in this area. Our ad hoc committee's work is a starting point for that effort. I recommend that a joint committee of NATD, NCME, AERA and other organizations with a vital interest in this issue address the development of such standards. Some may argue that the most reasonable approach to take would be for the Joint Standards Committee to address this problem in the revision of the Joint Standards document. I believe that to rely on that approach as the sole means of establishing quality standards for performance assessments would be to virtually guarantee that the standards would not be accepted or even recognized by the groups that most need them - the curriculum developers and teachers.

Our NATD ad hoc committee welcomes collegial input and collaboration on this critical issue.

References

Archibald, D., and Newmann, F. (1988). Beyond standardized testing: Assessing authentic academic achievement in the secondary school. Reston, VA: National Association of Secondary School Principals.

Cannell, J. (1989). How public educators cheat on standardized tests. Albuquerque, NM: Friends for Education.

Center for Research on Standards and Student Testing. (1993). Alternative assessments in practice database. Los Angeles, CA: University of California at Los Angeles.

Linn, R., Baker, E., and Dunbar, S. (1991). Complex, performance-based assessment: expectations and validation criteria. Educational Researcher, 20(8), 16-21.

Medina, N., Neil, D. M., and the staff of FAIRTEST. (March, 1990). Fallout from the testing explosion: How 100 million standardized exams undermine equity and excellence in public schools. Cambridge, MA: National Center for Fair and Open Testing (FAIRTEST), Third Edition.

National Commission on Testing and Public Policy. (1990). From gatekeeper to gateway: Transforming testing in America. Chestnut Hill, MA: National Commission on Testing and Public Policy, Boston College.

Shepard, L. (1989). Why we need better assessments. Educational Leadership, 46 (April), 4-5.

Stiggins, R.J., and Bridgeford, N.J. (1985). The ecology of classroom assessment. Journal of Educational Measurement, 22(4), 271-286.

Wiggins, G. (1990). Reconsidering standards and assessment. Education Week, (January 24), 36, 25.

Williams, P., Phillips, G., and Yen, W. (1991). Measurement issues in high stakes performance assessment. Paper presented at the Annual Meeting of the American Educational Research Association, Chicago, IL.

Discussant Comments

Criteria For A Good Assessment System

Michael Kean

CTB/McGraw-Hill

We've been talking about the elements that comprise a good assessment system for over three and one-half years, since President Bush initially introduced his America 2000 plan. The message hasn't changed; but I'm happy to see that it's now being viewed positively. Many of these elements are reflected in recent legislative initiatives -- both at the Federal and state levels.

The five elements I'll mention relate to:

1. The use of multiple measures

2. The use of different tests for different purposes

3. Assessment systems providing useful information for and on all students

4. The use and reporting of assessment results

5. Assurance of technical/psychometric quality

Multiple Measures Should Be Used To Meet Assessment and Accountability Objectives

Multiple measures are essential because no one test can do it all.

Therefore, no test, no matter how good it is, should be the sole criterion for any decision.

Furthermore, no single method of assessment is superior or even adequate for all purposes.

These are fundamental principles of assessment.

"Multiple measures" encompass a variety of assessments.

Multiple measures include writing samples, reading logs, teachers' anecdotal records, and many other examples of informal types of assessments.

They also include other alternative assessments, designed to more closely simulate performance and assess the students' application of problem-solving processes to selected tasks.

Performance-based assessments provide an excellent complement to a multiple-choice achievement-test battery.

Multiple-choice tests more accurately, objectively, reliably and cost effectively measure a broad range of information.

Performance assessments can provide more in-depth feedback on a specific area.

Today's NRTs and PAs can be engineered to assess both higher-order and basic skills.

Just As No One Test Can Do It All, No Test That Is Accurate And Appropriate For Its Intended Use Should Be Excluded From A Balanced Measurement System

Multiple-Choice and Performance-Based Assessments Are Not Mutually Exclusive

* When one has a need to understand student achievement, both multiple-choice and performance-based tests should be used.

* Proposals that performance-based assessments could serve as replacements for multiple-choice tests would be much like asserting that carpenters should use saws instead of hammers.

* It's important to remember that compared to a performance-based assessment, a multiple-choice test would:

* more likely address a larger portion of a state's content standards;

* use a reasonable amount of testing time; and,

* not require the commitment of significant amounts of scarce resources.

Norm-Referenced and Curriculum-Based Assessments Are Not Mutually Exclusive

* Results on either a multiple-choice or a performance-based assessment can be interpreted on a norm-referenced basis.

* Both can also provide information on a student's performance relative to other students (in well-defined and meaningful reference groups possessing similar characteristics).

* Norm-referenced tests can also measure a student's performance, progress and mastery of curriculum-based, criterion-referenced standards.

* We are a comparative/box-score society.

* The operative concept is that multiple-choice/performance-based and norm-referenced/criterion-referenced formats complement each other.

* They each have distinct advantages and disadvantages.

* Which format to use should not be an either/or proposition; they are not mutually exclusive.

An Assessment System Must Provide Accurate, Objective and Useful Information For All Students

* It should provide understandable and useful information geared to the different audiences, test purpose, and types of data.

* Information on individual students is vital!

* For example, proposals that fourth graders be sampled to be able to report on changes in average fourth grade scores over the past two years, based on a small sample of pupils, won't help the individual student in the fourth grade.

* Knowing the cholesterol level of the average adult will not help a high risk individual who wants to know his/her cholesterol level.

* Without individual pupil assessment, appropriate information for identification, diagnosis, intervention, and remediation would be lost.

* And this brings us to the "A Word" - ACCOUNTABILITY.

* Performance assessments are increasingly being used for accountability and for high-stakes purposes.

* A sound accountability system should use multiple-assessment measures to ensure that:

* all students whose achievement is intended to improve, do, in fact, benefit from the program;

* progress for individual students is sustained over time;

* the billions of dollars of resources are not misspent;

* states will be accountable to do the "right thing" instead of being left to "do their own thing;" and,

* there is an external audit to produce high quality, independent, objective data, rather than qualitative self-reporting.

A System Should Assure Appropriate And Effective Use And Reporting Of Assessments

* It is not enough that assessments meet technical and other quality control standards and are appropriate for their intended use.

* How the assessments are used is a decisive element of any educational system.

* First, each state and local district should provide assurances that the assessments are appropriate for their intended use and actually are used appropriately.

* The SEA/LEA should demonstrate:

* that the assessments will be applicable to all children;

* that they will meet requisite technical standards; and,

* that they will be aligned to any content specifications.

* Second, an accountability system should demonstrate how school staff will be trained on developing, administering, interpreting, and using both informal and formal assessments.

* Staff, as well as parents, guardians, and students, should receive annual detailed reports on how each student in the program is doing.

* Finally, the SEA/LEA should specify how it will use the results from assessments to improve instruction and further the purposes of the program.

All Formal Assessments Should Meet Technical Quality Standards

* An essential element of a multiple measurement system is that any formal assessment used must be demonstrably valid, reliable and fair for its intended purpose.

* The assessments also should be consistent with professional and technical standards (i.e., the Standards for Educational and Psychological Testing and the Code of Fair Testing Practices).

* This concept is embedded in the recent federal education legislation.

* These standards are equally applicable to both performance-based and multiple-choice assessment formats.

Quality Performance Assessment: A State Perspective

C. Thomas Kerins

Illinois State Board of Education

I believe that one of the reasons I was asked to be a discussant at this symposium is that the state of Illinois is currently engaged in a school accreditation program in which performance assessment at the school level is not only being allowed but also encouraged as a way to document student progress. While the state continues to have a standard measure to evaluate student progress in meeting broad state goals through its own assessment, each school has to prove that it has a valid, reliable and fair assessment system in place to measure student outcomes.

These local outcomes are broad measurable statements of what students should know and be able to do; they are not lesson plan or unit objectives. School personnel have to prove with documentation to an on-site team that their assessment system uses multiple measures to evaluate the depth and breadth of the school's outcomes. Therefore, Illinois encourages schools to include assessments that go beyond multiple-choice tests.

In his paper, Joe Hansen makes the valid point that lunging toward a performance assessment approach without quality control will eventually result in a lack of credibility that will seriously diminish the potential of the performance assessment. Joe points out that "teachers must learn to distinguish between quality assessments and the superficial characteristics of assessments that are in vogue." Therefore, standards are needed to guide the proper use of performance assessment within the context of a larger systemic effort to evaluate student progress.

However, how can we expect these standards to be useful to these same teachers Joe refers to when the CRESST standards discuss "transfer" and "generalizability," as well as "cognitive complexity"? At several AERA and NCME sessions here, the presenters caution the audience to keep the performance assessments simple and of immediate use. Otherwise, the teachers will stay with the traditional publisher's off-the-shelf test even if they don't believe it is valid or useful. At least it is familiar or comfortable, and it has the added benefit of not taking teacher time in either development or the correction of open-ended responses or performances. As Stiggins has documented, across the country there are few teachers who receive any kind of preservice or in-service training in real classroom tests and measurements. In fact, a majority of their experiences have been to mindlessly administer nationally norm-referenced tests without any intellectual engagement.

Therefore, given the contrast between some who rush in blindly to do performance assessment because it is in vogue and the majority of educators who are reluctant to engage in any kind of change process, I returned to the title of Joe's paper in which he used the phrase "Guiding Principles." If the audience for these guiding principles is typical local administrators and teachers, then the level of rigor ought to be quite different than if it is a state or national assessment. While there are certain principles that hold any time one is going to assess a student's knowledge, the guidelines should have enough flexibility to account for cases as different as:

* whether a classroom teacher is attempting to find out if her pupils understand photosynthesis so she can move on to the next subject;

* whether the high school's Improvement Team is documenting the progress of its students in meeting the school's math outcomes as it plans to revise its curriculum;

* whether the district is evaluating a student's knowledge of government as part of a graduation requirement; or,

* whether a state is evaluating the percentage of students who exceed, meet or do not meet the state's writing standards.

Joe's concept of an "assessment developer's guidebook" to accompany any set of guiding principles might be the best way of communicating by example the variations in performance assessment that could work, depending on the nature and purpose of the assessment.

With regard to dissemination, I would advise against just relying on the written word. There are already too many things to read and too little time. Also, we seem to be a nation of visual learners, so any written document should be accompanied by a videotape or laser disk which includes live examples of how performance assessment can be a useful tool within the context of a complete assessment program.
