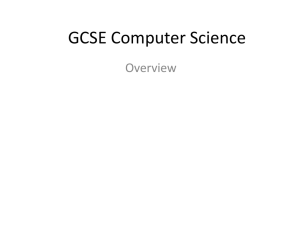

word comparison across outputs


Supporting Document 1

German text interpreted as “unb auf ben ©elnrgen be6 fublic{)en” by the OCR process.

| | IA OCR | OCR 2 | Transcription 1 | Transcription 2 | |
|---|---|---|---|---|---|
| 1 | unb | und | und | und | Ok |
| 2 | den | ben | den | den | Ok |
| 3 | ©elnrgen | ©ebirgen | Bebirgen | Gebirgen | X |
| 4 | be6 | des | de5 | des | Chk |
| 5 | fublic{)en | fublichen | Füdlichen | Südlichen | X |
| 6 | £)eittfc{)(anb6 | Deutfchlanbs | Deutfchlands | Deutschlands | X |

Different text versions of the phrase “und auf den Gebirgen des südlichen Deutschlands” (“and on the mountains of southern Germany”)

In some cases, the correct text can be inferred from the agreeing versions (examples 1 and 2 in the table above). In others, it can be automatically reviewed or verified (example 4 above). Still others (like examples 3, 5 and 6) may indicate the need for a manual revision of the words by a Data Analyst, or even the need to regenerate the whole OCR text for the page with proper parameters (like a specialized engine trained in the language).
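The triage described above can be sketched as a simple vote across versions. This is only an illustration: the thresholds (three or more agreeing versions for "Ok", exactly two for "Chk", otherwise "X") are our assumption, chosen because they reproduce the labels in the table, and the function name `classify_word` is hypothetical.

```python
from collections import Counter

def classify_word(versions, ok_threshold=3, check_threshold=2):
    """Return (status, candidate) for one word position across text versions.

    The thresholds are assumptions made for this sketch, not fixed rules.
    """
    counts = Counter(versions)
    candidate, votes = counts.most_common(1)[0]
    if votes >= ok_threshold:
        return "Ok", candidate      # enough versions agree: accept the word
    if votes >= check_threshold:
        return "Chk", candidate     # partial agreement: verify the candidate
    return "X", None                # no agreement: manual revision or new OCR

# Rows of the table above: IA OCR, OCR 2, Transcription 1, Transcription 2
rows = [
    ["unb", "und", "und", "und"],
    ["den", "ben", "den", "den"],
    ["©elnrgen", "©ebirgen", "Bebirgen", "Gebirgen"],
    ["be6", "des", "de5", "des"],
    ["fublic{)en", "fublichen", "Füdlichen", "Südlichen"],
    ["£)eittfc{)(anb6", "Deutfchlanbs", "Deutfchlands", "Deutschlands"],
]

statuses = [classify_word(row)[0] for row in rows]
```

A real implementation would probably also normalize case and known OCR confusions (such as the long s read as "f") before voting.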

For each page, the density of non-alphabetic characters can indicate those cases where the OCR process was too erratic. An estimate of such an index could help to initially separate out those pages that would require the generation of a new OCR text.
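One possible form of such an index is the fraction of non-whitespace characters that are not letters. The sketch below assumes that definition. The names `non_alpha_density` and `needs_new_ocr` and the threshold of 0.1 are hypothetical and would need tuning on real pages, since ordinary punctuation and digits also count as non-alphabetic.

```python
def non_alpha_density(text):
    """Fraction of non-whitespace characters that are not letters."""
    chars = [c for c in text if not c.isspace()]
    if not chars:
        return 0.0
    non_alpha = sum(1 for c in chars if not c.isalpha())
    return non_alpha / len(chars)

def needs_new_ocr(text, threshold=0.1):
    """Flag a page whose density exceeds a (hypothetical) threshold."""
    return non_alpha_density(text) > threshold

ocr_text = "unb auf ben ©elnrgen be6 fublic{)en"
clean_text = "und auf den Gebirgen des südlichen"

ocr_density = non_alpha_density(ocr_text)      # 4 of 30 characters
clean_density = non_alpha_density(clean_text)  # no non-letters
```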

Then different versions of the text, coming either from OCR or from transcription, can be compared to identify the differences. Well-known specialized algorithms (like BLAST) that take into account character insertions and deletions can determine such differences and allow the extraction of those problematic zones that require correction.
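As a small illustration of this comparison step, the sketch below uses `difflib.SequenceMatcher` from the Python standard library. It is a stand-in for the alignment idea, not BLAST itself, and the function name `differing_zones` is hypothetical. It reports only the spans where two versions disagree, whether by replacement, insertion or deletion.

```python
from difflib import SequenceMatcher

def differing_zones(a, b):
    """List (tag, span_in_a, span_in_b) for every non-matching zone.

    Works on any two sequences: strings (character level) or lists of
    words (word level). Tags are 'replace', 'delete' or 'insert'.
    """
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    return [
        (tag, a[i1:i2], b[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]

# Character-level comparison of one word from two versions
word_zones = differing_zones("und", "unb")

# Word-level comparison of two versions of the same line
line_zones = differing_zones(
    "unb auf ben ©elnrgen be6 fublic{)en".split(),
    "und auf den Gebirgen des südlichen".split(),
)
```

Zones returned at word level can then be passed on for voting, verification or manual review, while matching spans are left alone.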

Even though computer automation can process large quantities of tasks in less time than most humans, the human eye can recognize text in images relatively easily (citation needed) and could be more cost-effective, which is exactly what approaches like reCAPTCHA are based on. However, reviewing all the content of a book, or even transcribing every single page of it, could be time-consuming. We intend to reduce the size of the problem by asking only for the correct spelling of those words that need verification (like example 4 above).

To obtain the appropriate image of each word, we intend to use the files that come as a by-product of the scanning process carried out by the Internet Archive. When such by-products are not available because the text was produced by transcription, another process could be used to crowdsource the determination of the word coordinates.

Also, the repetitive task of identifying words could be made more engaging by applying a purposeful gaming technique, in which the user supports the reviewing process either consciously or not. [cite Digital Koot]