Invariance Testing with Mplus


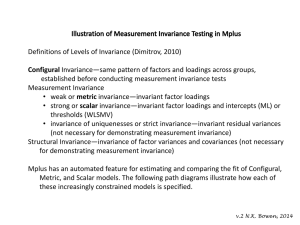

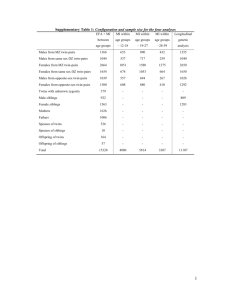

v.2 N.K.Bowen, 2014

TESTING FOR DIFFERENCES IN MEASUREMENT (CFA) MODELS USING MPLUS’S INVARIANCE SHORTCUT CODE (WLSMV)

Measurement invariance occurs when the parameters of a measurement model are statistically equivalent across two or more groups. The parameters of interest in measurement invariance testing are the factor loadings and indicator intercepts (when ML estimation is used) or indicator thresholds (when WLSMV is used with categorical variables). Invariance in residual variances or scale factors can also be tested, but there is consensus that it is not necessary to demonstrate invariance across groups on these parameters. Researchers typically hope to demonstrate that their measures are invariant across groups. When loadings and intercepts or thresholds are invariant across groups, scores on latent variables can be validly compared across the groups, and the latent variables can be used in structural models hypothesizing relationships among latent variables.

A recent update to Mplus (7.11) provides a convenient shortcut for conducting a sequence of increasingly restrictive invariance tests. This handout gives some non-Mplus-specific information on invariance testing, then information on Mplus non-shortcut invariance testing, and finally invariance testing using the Mplus shortcut.

We focus on tests of invariance with measurement models based on ordinal variables, the most common type of scale variables in social science research. When scales are based on ordinal measures, it is appropriate to use WLSMV estimation and a polychoric correlation matrix for the analysis. Specifying that observed indicator variables are ordinal automatically leads to the use of WLSMV and the polychoric correlation matrix.

Note that Mplus allows two different approaches to the specification of multiple group CFAs: delta and theta parameterization. Delta is the default, but we recommend using Theta.
Theta parameterization lets you obtain information on residual variances (unexplained variance in the observed indicators of factors). Social work researchers are typically interested in error variances, not scale factors. Add the line “Parameterization is Theta” to the ANALYSIS section of your input file to request Theta parameterization.

Step 1: Test your hypothesized model separately with each group.

Some invariance scholars recommend obtaining the best model for each group separately before beginning invariance testing. To run separate analyses based on groups represented by values on a variable in your data set, use the following syntax in the VARIABLE section of the code, substituting your variable’s name and value as appropriate. For example, the code below specifies that the analysis should use only cases that have a value of 2 on the race/ethnicity variable in the dataset. After running the model with this group, the value would be changed to 1 or some other value to run the model with another group.

    USEOBSERVATIONS ARE (raceth EQ 2);

(Note: there is no space after USE)

If data for your groups are in separate data files, specify the data file for one group at a time in the usual way:

    DATA: FILE IS "C:\MyMplus\datafilewhite.dat";

Seek a good-fitting model for each group, although marginal fit may be acceptable at this stage (Raykov, Marcoulides, & Li, 2012). The model for one group may include correlated errors that do not appear in other groups, but the pattern of factors and loadings should be the same across groups in order to proceed to multiple group modeling. However, see Byrne, Shavelson, & Muthén (1989) for a more relaxed approach.

Step 2: Specify a multiple group model.

It may include slight variations for each group if they were identified in the individual group tests. Multiple group models can be run from multiple datasets or based on values for a variable in the dataset.
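Before building the multiple group model, it may help to see the Step 1 single-group run as a complete input file. The sketch below is only an illustration: the file name, the NAMES list, and the id variable are hypothetical placeholders, while raceth, the indicators c24, c26, c27, and c28, and the factor name SSFR follow the example used in this handout. It assumes the indicators are declared ordinal with CATEGORICAL.

```
TITLE:    Step 1 sketch, single-group CFA for cases with raceth = 2
DATA:     FILE IS "mydata.dat";                 ! hypothetical file name
VARIABLE: NAMES ARE id raceth c24 c26 c27 c28;  ! hypothetical variable list
          USEVARIABLES ARE c24 c26 c27 c28;
          CATEGORICAL ARE c24 c26 c27 c28;      ! ordinal indicators
          USEOBSERVATIONS ARE (raceth EQ 2);    ! keep one group only
ANALYSIS: ESTIMATOR IS WLSMV;
          PARAMETERIZATION IS THETA;
MODEL:    SSFR BY c24 c26 c27 c28;
```

To fit the other group, only the value in the USEOBSERVATIONS line would change.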
To specify a multiple group model based on values on a variable in the dataset, use code like the following. The numbers in parentheses must match values on the variable raceth in the dataset. This code goes in the section under VARIABLE, because the variable “raceth” here has a special function.

    GROUPING IS raceth (1 = WH 2 = AA);

According to the online Mplus user’s guide, the way to specify that data are in two files is:

    FILE (white) IS white.dat;
    FILE (AA) IS AA.dat;

When data are all in one file, the “GROUPING IS” code is all it takes to let Mplus know you want to do a multiple group analysis. If the models for your groups are the same, simply enter your model code as if you were doing a single group model. For example, the code below specifies that a factor called SSFR (social support from friends) is measured by 4 observed indicators in both groups:

    MODEL: SSFR BY c24 c26 c27 c28;

If the factor model is the same for all groups, no further specification is required. However, if, for example, a correlated error was found between c24 and c25 when the African American group was tested individually, that difference from the overall model could be specified in the multiple group model with code referring to just the African American group as follows:

    MODEL: SSFR BY c24 c26 c27 c28;
    MODEL AA: c24 WITH c25;

(Note that this line does not turn blue because it is a subcommand under MODEL:)

By Mplus default (without the shortcut), the code above will test a highly constrained model: one in which factor loadings and thresholds are constrained across groups, with residual variances (theta parameterization) or scale factors (delta parameterization) fixed at one in the first group and free in the second, and with latent factor means fixed at 0 for the first group and free in the second group. The AA group model would also have its freely estimated correlated error.
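For the two-file case mentioned above, a full input might be laid out as in the following sketch. The NAMES list and the residual covariance in the group-specific section are hypothetical placeholders chosen for illustration; the FILE statements and group labels are the ones quoted above. This is the default setup, without the shortcut.

```
TITLE:    Step 2 sketch, two groups read from two data files
DATA:     FILE (white) IS white.dat;
          FILE (AA) IS AA.dat;
VARIABLE: NAMES ARE id c24 c26 c27 c28;    ! hypothetical, same layout in both files
          USEVARIABLES ARE c24 c26 c27 c28;
          CATEGORICAL ARE c24 c26 c27 c28;
ANALYSIS: ESTIMATOR IS WLSMV;
          PARAMETERIZATION IS THETA;
MODEL:    SSFR BY c24 c26 c27 c28;         ! applies to every group
MODEL AA: c27 WITH c28;                    ! hypothetical AA-only correlated error
```

The label after MODEL must match a group label, here the labels given in parentheses in the FILE statements.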
To compare this constrained model to a less constrained model, we would first have to specify, in many lines of syntax, a model in which the default constraints on thresholds and/or loadings were all freed (except for those that need to be fixed or constrained for identification purposes). We would need to run this less restrictive model first in order to use the WLSMV difftest mechanism. The following difftest code would be included in the syntax for the less restrictive model. The code requests that a small file be saved with information on the current model to be used later when running the more restrictive model.

    SAVEDATA: difftest=freemodel.dat;

Then we would run the more constrained model, this time including the “difftest=freemodel.dat” code under the ANALYSIS command. These steps give us the chi-square difference test results we need to determine whether model fit is significantly worse with the equality constraints.

BUT, there is a new and easier way to test measurement invariance! Version 7.11 has a shortcut that allows us to run and compare chi-squares for a configural model, metric model, and scalar model all in one analysis.
You specify the grouping variable or two data files as before, AND, in the ANALYSIS section of the code, type:

    MODEL IS CONFIGURAL METRIC SCALAR;

From Dimitrov (2010) we have the following definitions of these levels of invariance:

Configural Invariance: same pattern of factors and loadings across groups, established before conducting measurement invariance tests

Measurement Invariance
• weak or Metric Invariance: invariant factor loadings
• strong or Scalar Invariance: invariant factor loadings and intercepts (ML) or thresholds (WLSMV)
• invariance of uniquenesses or strict invariance: invariant residual variances (not necessary for demonstrating measurement invariance)

With the shortcut, Mplus specifies the Configural model as having loadings and thresholds free in both groups except the loading for the referent indicator, which is fixed at 1.0 in both groups. The means of factors in both groups are fixed at 0 while their variances are free to vary. Residual variances (with theta parameterization) or scale factors (delta parameterization) are fixed at 1.0 in all groups.

With the shortcut, Mplus specifies the Metric model as having loadings constrained across groups except the loading for the first indicator of a factor, which is fixed at 1.0 in both groups. Thresholds are generally allowed to vary across groups, but certain thresholds have to be constrained in order for the model to be identified. Therefore, the first two thresholds of the referent indicator are constrained to be equal across groups, and the first threshold of each other indicator on a factor is constrained to be equal. Note that you can’t run a Metric model with binary indicator variables because it can’t be identified: there is only one threshold for a binary variable. With the shortcut metric model, the mean of the first factor is fixed at 0, other factor means are free to vary, and factor variances are free to vary.
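Put together, a shortcut run for the running example might look like the sketch below. The file name and NAMES list are hypothetical placeholders; the GROUPING statement, the indicator names, and the MODEL line come from the examples above.

```
TITLE:    Shortcut sketch, configural, metric, and scalar in one run
DATA:     FILE IS "mydata.dat";                 ! hypothetical file name
VARIABLE: NAMES ARE id raceth c24 c26 c27 c28;  ! hypothetical variable list
          USEVARIABLES ARE c24 c26 c27 c28;
          CATEGORICAL ARE c24 c26 c27 c28;      ! ordinal indicators
          GROUPING IS raceth (1 = WH 2 = AA);
ANALYSIS: ESTIMATOR IS WLSMV;
          PARAMETERIZATION IS THETA;
          MODEL IS CONFIGURAL METRIC SCALAR;    ! the invariance shortcut
MODEL:    SSFR BY c24 c26 c27 c28;
```

Only the one MODEL IS line in the ANALYSIS section distinguishes this input from an ordinary multiple group run.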
Some researchers, and the online Mplus User’s Guide, suggest evaluating only the Configural/Scalar comparison. They contend that lambdas and thresholds for indicators should only be freed or constrained in tandem. In this case, the code “MODEL IS CONFIGURAL SCALAR” can be used, and searches for sources of noninvariance will involve freeing lambdas and their thresholds at the same time. Note, too, that any one of the three invariance models can be run by itself with the “MODEL IS” line under ANALYSIS.

Step 3: Run the Model

With the example from above (MODEL: and MODEL AA:) we will get output comparing the fit of the three models, which will guide us to our conclusions or next steps. Specifically, before you see detailed fit information in the output for each model, you’ll see:

    MODEL FIT INFORMATION

    Invariance Testing

                    Number of                  Degrees of
    Model           Parameters    Chi-square   Freedom      P-value
    Configural          32           4.919         4        0.2957
    Metric              29           7.763         7        0.3540
    Scalar              22          26.491        14        0.0224

                                               Degrees of
    Models Compared               Chi-square   Freedom      P-value
    Metric against Configural        3.571         3        0.3117
    Scalar against Configural       21.589        10        0.0173
    Scalar against Metric           20.653         7        0.0043

Step 4: Interpreting the Model Comparison Output

A limited number of scenarios are possible in the Invariance Testing output.

Scenario 1: All models have good fit and invariance is supported at each step.

Scenario 2: One of the models does not have good fit. We need to demonstrate good fit of the model at each step, an issue separate from the invariance tests. Not only would we examine the chi-square statistic for the models above, but we would also evaluate any other fit indices we have pre-specified. Fit indices pertaining to the multiple group configural model, for example, can be found in the output section called MODEL FIT INFORMATION FOR THE CONFIGURAL MODEL.
If the fit statistics are not adequate for any CFA model in our sequence of increasingly constrained model tests, it makes no sense to proceed to the next model: first, because a more constrained model should fit no better than the less constrained model before it, and second, because if our measurement model at any step is unacceptable, it makes no sense to examine further whether parameters differ across groups.

Scenario 3: Fit worsens from the configural to the metric level. If there is noninvariance at the metric level compared to the configural level (meaning constraining lambdas leads to worse fit), the researcher can choose to search for the source(s) of worse fit by testing the loadings of one factor at a time as a set and/or testing one loading at a time. If only a relatively small number of loadings are non-invariant, it is possible to proceed to test the effects of constraining thresholds in this new model. This sequence would be similar to (but simpler than) the search for non-invariant thresholds described in Step 5 below.

Scenario 4: Fit does not significantly worsen when lambdas are constrained, but it does get worse when thresholds are constrained. In this situation, we can claim metric, or weak, invariance of our measure and stop testing, but weak invariance is not desirable. Alternatively, we can do additional tests to identify the source(s) of noninvariance. If only a small number of thresholds are non-invariant, we could claim that our measure is “partially invariant” (Byrne, 1989; Dimitrov, 2010, who says noninvariance in up to 20% of parameters is okay).

Scenario 4 is what we have in our example. The “Metric against Configural” line above indicates that constraining factor loadings did not significantly worsen fit (p of χ² change > .05). However, the “Scalar against Metric” line indicates that constraining thresholds across groups worsened fit (p of χ² change < .05).
We cannot conclude that our measure has scalar, or strong, invariance. We can, however, determine the extent of the non-invariance in the thresholds.

Step 5: Searching for Sources of Non-Invariance, Approach 1

If we pre-specified that we would be testing thresholds and lambdas separately (as suggested by Millsap & Yun-Tein, 2004), we could take advantage of the shortcut code in the following way, even though we are going beyond the shortcut to look for individual noninvariant parameters.

5a. Mplus’s WLSMV difftest requires that the less restrictive model be run first. The “nested” model is run second. Therefore, we would start with the less constrained model: the SCALAR model plus thresholds freed for one indicator.

    ANALYSIS:
      . . . .
      ! Lambdas and thresholds constrained
      MODEL IS SCALAR;
      . . .
    MODEL: SSFR BY c24 c26 c27 c28;
    ! Threshold 1 for c24 remains constrained for identification.
    ! The other 2 are freed.
    MODEL AA: [C24$2*]; [C24$3*];
    ! Save a difftest file to use in the next analysis
    SAVEDATA: DIFFTEST=Ts24free.DAT;

No need to even look at the output; we just wanted to get that difftest file saved.

5b. Then run the more constrained SCALAR model and use difftest to compare its fit to the prior model.

    ANALYSIS:
      . . . .
      ! The model with lambdas and thresholds constrained
      MODEL IS SCALAR;
      ! Compares fit of this model with prior model's fit
      DIFFTEST = Ts24free.DAT;
    ! No thresholds freed
    MODEL: SSFR BY c24 c26 c27 c28;
    ! Save fit information for next comparison
    SAVEDATA: DIFFTEST=SCALAR.DAT;

The results:

    Chi-Square Test for Difference Testing
      Value                  4.545
      Degrees of Freedom         3
      P-Value               0.2083

Constraining the thresholds of C24 did not worsen fit, so we’ll move on to another indicator. We know the source of bad fit is out there somewhere! Repeat Step 5b until the problem indicator is identified. The test for invariance in the thresholds of C27 and C26 had the same result as the test with C28.
Each time we compared the more restrictive SCALAR model (with all lambda and threshold constraints) to a less restrictive model, one with the thresholds free for one indicator. Each time the chi-square difference test was non-significant, we re-constrained the thresholds before continuing our search.

5c. Look for noninvariance of thresholds within one indicator. We now know that the problem lies somewhere among the thresholds for C24. Instead of testing all of them together (we already know the difftest would be significant), let’s test them one at a time.

    ANALYSIS:
      . . . .
      ! Lambdas and thresholds constrained
      MODEL IS SCALAR;
      . . .
    MODEL: SSFR BY c24 c26 c27 c28;
    ! Except: one threshold for C24 is freed
    MODEL AA: [C24$1];
    ! Save a difftest file to use in the next analysis
    SAVEDATA: DIFFTEST=TAU124free.DAT;

According to the difftest results below, threshold 1 for C24 passes the test! We can reconstrain it and continue our search.

    Chi-Square Test for Difference Testing
      Value                  2.994
      Degrees of Freedom         1
      P-Value               0.0836

The comparison of the SCALAR model with a model with threshold 2 for indicator C24 freed yields the following difference test information:

    Chi-Square Test for Difference Testing
      Value                 13.197
      Degrees of Freedom         1
      P-Value               0.0003

Threshold 2 is non-invariant across groups. Fit gets statistically significantly worse when the threshold is constrained to be equal across groups. We need to allow this parameter to remain free in the second group in our final model.

We have one more threshold to test. To make sure that the order of our testing does not affect our conclusions, we will test threshold 3 the same way we have tested the others, against the fully constrained SCALAR model (i.e., with threshold 2 constrained again). We get the following results:

    Chi-Square Test for Difference Testing
      Value                  0.159
      Degrees of Freedom         1
      P-Value               0.6904

The model with threshold 3 constrained does not have significantly worse fit than the model with it free.
It looks as though threshold 2 for item 24 is the sole culprit! One noninvariant parameter out of 3 lambdas and 12 thresholds isn’t bad, so when we move on to latent variable modeling with the “social support from friends” variable, we’ll model the noninvariant parameter but treat the latent variable as invariant across the groups we tested.

Step 5: Searching for Sources of Non-Invariance, Approach 2

If we had pre-specified that we believed it was best to test lambdas and thresholds together as a set instead of separately (Muthén & Muthén, 1998-2012), we would have conducted the tests above with code releasing one indicator’s lambda and thresholds at the same time. Because c24 is the referent indicator for SSFR, we’d have to make another indicator the referent when testing the invariance of its parameters. Also, we can’t free all of an indicator’s thresholds at once, because we would have an identification problem. So, in our example, we free any two of our three thresholds at a time to test the effects of freeing lambda and thresholds. If with any combination of thresholds free we find a significant deterioration of fit, we model the lambda and those thresholds as non-invariant.

    ! Free the default of c24@1 and fix the loading for c26 to 1.0 instead
    MODEL: SSFR BY c24* c26@1 c27 c28;
    ! Threshold 3 for c24 remains constrained for identification.
    ! The other 2 are freed.
    MODEL AA: SSFR BY c24* c26@1;
      [C24$1*]; [C24$2*];

The first loading will be different in the two groups. The second loading equals 1.0 in both groups. The third and fourth loadings are constrained to be equal across groups. The first two thresholds vary across groups; the third is constrained across groups.

If we accept the rule that loadings and thresholds should be freed or constrained together, noninvariance found at this step would suggest we continue to model c24 this way without searching for non-invariance of individual parameters within the set.
Cheung & Rensvold (1999) discuss the importance of doing the tests with different referent indicators. Sass (2011) is a good source on issues involved with invariance testing with ordinal data. Among other things, he explains how to interpret invariance of parameters.
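To close the loop on Approach 1, the final partially invariant model could keep the SCALAR setup and free only threshold 2 of c24 in the second group, following the pattern used in Step 5c. The sketch below is an illustration under that assumption, not the author's actual final input; the file name and NAMES list are hypothetical placeholders.

```
TITLE:    Final model sketch, scalar with one threshold freed in AA
DATA:     FILE IS "mydata.dat";                 ! hypothetical file name
VARIABLE: NAMES ARE id raceth c24 c26 c27 c28;  ! hypothetical variable list
          USEVARIABLES ARE c24 c26 c27 c28;
          CATEGORICAL ARE c24 c26 c27 c28;
          GROUPING IS raceth (1 = WH 2 = AA);
ANALYSIS: ESTIMATOR IS WLSMV;
          PARAMETERIZATION IS THETA;
          MODEL IS SCALAR;      ! loadings and thresholds constrained
MODEL:    SSFR BY c24 c26 c27 c28;
MODEL AA: [c24$2*];             ! only the noninvariant threshold is freed
```

Every other loading and threshold stays constrained across groups, which is what allows the latent variable to be treated as invariant in later structural models.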