
How parameters have been chosen in metaheuristics: A survey

Eddy Janneth Mesa Delgado

Applied computation. Systems School. Faculty of Mines.

National University of Colombia. Medellín. Cra. 80 x Cl. 65. Barrio Robledo.

ejmesad@unal.edu.co

Abstract:

Keywords: Global Optimization, Heuristic Programming, nonlinear programming, parameters.

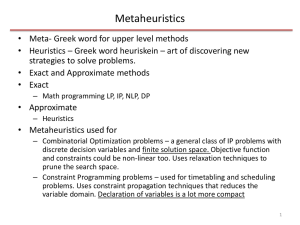

1. INTRODUCTION

The difficulty of real-world optimization problems has increased over time (shorter times to find solutions, more variables, greater required accuracy, etc.). Classic optimization methods (based on derivatives, indirect methods) are not enough to solve all optimization problems. Therefore, direct strategies were developed, even though they cannot guarantee an optimal solution. The first direct methods implemented were systematic searches over solution spaces. Step by step, they evolved into more and more effective methods, eventually reaching artificial intelligence. These new methods were called heuristics and metaheuristics (Himmelblau, 1972).

Heuristic means “to find” or “to discover by trial and error”; metaheuristic means a better way “to find” (Yang, 2010). Besides intelligence, metaheuristics differ from other kinds of direct methods because they use parameters to adjust the search and the strategy to enhance it. Parameters let algorithms obtain good answers for a wider span of problems than the initial direct methods, with less effort (Zanakis and Evans, 1981; Zilinskas and Törn, 1989); e.g., evolution strategies are used to obtain an initial solution for an unknown problem. However, parameters are also a weakness, because they need to be tuned and controlled for every problem: a wrong set of parameters can make a metaheuristic fail (Angeline, 1995; Eiben, et al., 1999; Kramer, 2008; Yang, 2010).

The aim of this paper is to summarize the available information on the parameterization of metaheuristics: how parameters are controlled and tuned, and their effect on performance in some cases. To do so, three keywords (parameters, metaheuristics and heuristics) were first used to search the SCOPUS database. Then the results were filtered manually, keeping papers that contain:

Applied metaheuristics with adaptive and self-adaptive parameters.

Presentation and comparison of new methods to adapt or to automate parameters.

Classifications and formalization of methods to control and tune parameters.

With these criteria, 49 papers were chosen.

This paper is organized as follows: parameters are introduced in Section 2. The parameter classifications found in the literature are described in Sections 3 and 4: Section 3 presents the relevance of the parameters in the heuristic in order to categorize them, and Section 4 uses the technique for choosing the parameters to indicate the type of setting. An example of adaptation and self-adaptation is shown in Section 5. Finally, general conclusions and future work are presented in Section 6.

2. PARAMETERS

Stochastic methods do not follow the same pathway in every run of the algorithm. Also, the intelligence embedded in metaheuristics changes dynamically according to the characteristics found during the search. Parameters give metaheuristics robustness and flexibility (Talbi, 2009).

Choosing parameters is not a simple task, because a wrong set of parameters can cause premature convergence or even no convergence at all (Bäck, 1996; Talbi, 2009). Metaheuristics need enough parameters to be flexible, but each parameter increases the complexity of tuning and controlling the method, and of the changes needed to optimize a new problem. Each metaheuristic is a completely different world: it has different parameters, and they influence the metaheuristic in different ways. There is no unique right way to choose parameters in metaheuristics. Two related points of view exist in the literature, but they apply only to Evolutionary Computation (EC).

Traditionally, EC includes genetic algorithms (GA), evolution strategies (ES), and evolutionary programming (EP). However, other metaheuristics meet the condition proposed by Angeline (1995) to be considered an EC metaheuristic, namely being a method that iterates until a specific stopping criterion is met. So this classification could be extended to other metaheuristics, and to heuristics in general, even though they do not use the same operators as EC (Fogel, et al., 1991). Specifically, most other heuristics do not have their operators formalized as such; they use complete rules with parameters inside, so the analogy may not be direct (Zapfel, et al., 2010). The next two sections discuss the formal parameter classifications proposed in the literature, and an example of parameter adaptation in another heuristic is then presented.

3. PARAMETERS CLASSIFICATION

Each metaheuristic has a set of parameters to control the search. Although there is no consensus on a unique classification of metaheuristic parameters, one approach has been developed for EC (Angeline, 1995; Hinterding, et al., 1997; Kramer, 2008). According to the definition provided by Angeline (1995), this classification could be extended to other paradigms.

The most complete classification is proposed by Kramer (2008), who links the points of view of Angeline (1995) and Hinterding et al. (1997).

3.1. Exogenous

These parameters affect the performance of the metaheuristic but are external to it, for example changing constraints or problems defined by piecewise functions.

3.2. Endogenous

These parameters are internal to the method and can be changed by the user or by the method itself. Even though endogenous parameters are our focus, exogenous ones cannot be ignored, because they affect the choice. Endogenous parameters can be defined at three levels:

Population level: at this level, the parameters are global to the optimization. EC operators that use this type of parameter control the next generation. Examples are the population size, the stopping criteria, etc.

Individual level: this kind of parameter affects only one individual; for example, it could be the step size of each individual.

Component level: at this level, the parameters affect part of an individual, such as a gene of a chromosome in Genetic Algorithms (GA).

It is also important to notice that the authors propose this classification but do not describe a right or unique way to adapt and automate each level of parameters. The techniques for doing so are addressed by the next classification.
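To make the levels concrete, the following minimal Python sketch shows where each kind of parameter could live in an evolution-strategy-like implementation. All class and field names are hypothetical; the per-individual and per-gene step sizes are assumed examples of individual-level and component-level parameters, and the variable bounds stand for an exogenous input fixed by the problem.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProblemSpec:
    # Exogenous: fixed by the problem, not by the method (here, variable bounds).
    bounds: List[Tuple[float, float]]


@dataclass
class PopulationParams:
    # Population level: global values that govern the whole run.
    pop_size: int
    max_generations: int  # stopping criterion


@dataclass
class Individual:
    genes: List[float]       # the candidate solution itself
    step: float              # individual level: one step size per individual
    gene_steps: List[float]  # component level: one step size per gene


def init_population(problem: ProblemSpec, params: PopulationParams,
                    step: float = 1.0) -> List[Individual]:
    """Create pop_size individuals at the centre of the bounds.
    Centre placement only keeps the sketch deterministic; real methods sample randomly."""
    centre = [(lo + hi) / 2.0 for lo, hi in problem.bounds]
    dim = len(problem.bounds)
    return [Individual(genes=list(centre), step=step, gene_steps=[step] * dim)
            for _ in range(params.pop_size)]


if __name__ == "__main__":
    pop = init_population(ProblemSpec([(-5.0, 5.0)] * 3), PopulationParams(10, 100))
    print(len(pop), pop[0])
```

Keeping each level in a different structure makes it explicit which values a tuning or control technique is allowed to change, and at what granularity.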

4. PARAMETERS SETTINGS

In the last section, parameters were classified according to their level in the metaheuristic. Here, parameters are chosen in two stages: the first, before running the metaheuristic, is called tuning; the second, while it runs, is called control. Each stage has different ways of selecting parameter values.

4.1. Tuning

Parameters are tuned before running the metaheuristic. According to Eiben, et al. (1999), these initial parameters can be chosen in three different ways:

Manually: the initial parameters can be chosen ad hoc by an expert. This is a valid approach, but lately it is not the most recommended (Kramer, 2008; Talbi, 2009).

Design of experiments (DOE): this implies designing tests to show the behavior of the method, using or defining a metric to analyze the results, and deciding on a value or a range of values for each parameter (Kramer, 2008; Talbi, 2009).

Metaevolutionary: the parameters are chosen by another metaheuristic. A good example in the literature is the bacterial chemotaxis method proposed in (Müller, et al., 2000). Additionally, Kramer (2008) extends this procedure to control, as in hyper-metaheuristics (Hamadi, et al., 2011).
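As an illustration of tuning by design of experiments, the following Python sketch runs a full factorial design over two parameters of a toy (1+λ)-ES on the sphere function, repeats each setting with several random seeds, and uses the mean final objective value as the metric. The target method, the parameter grid, the evaluation budget and the metric are all assumptions chosen for the example, not a procedure taken from Kramer (2008) or Talbi (2009).

```python
import random
import statistics
from itertools import product


def sphere(x):
    """Test function used only for this illustration."""
    return sum(v * v for v in x)


def run_es(sigma, lam, seed, dim=5, budget=600):
    """Hypothetical target method: a (1+lambda)-ES whose step size sigma and
    offspring number lam are fixed before the run (tuned, not controlled)."""
    rng = random.Random(seed)
    parent = [rng.uniform(-5.0, 5.0) for _ in range(dim)]
    best = sphere(parent)
    evals = 1
    while evals + lam <= budget:
        offspring = [[p + sigma * rng.gauss(0.0, 1.0) for p in parent]
                     for _ in range(lam)]
        f_child, child = min(((sphere(c), c) for c in offspring),
                             key=lambda pair: pair[0])
        evals += lam
        if f_child <= best:  # elitist (plus) selection
            parent, best = child, f_child
    return best


def doe_tune(sigmas, lams, replications=10):
    """Full factorial design: every (sigma, lam) pair is run `replications`
    times with different seeds; the metric is the mean final objective value."""
    table = {}
    for sigma, lam in product(sigmas, lams):
        runs = [run_es(sigma, lam, seed) for seed in range(replications)]
        table[(sigma, lam)] = statistics.mean(runs)
    best_setting = min(table, key=table.get)
    return best_setting, table


if __name__ == "__main__":
    setting, table = doe_tune([0.01, 0.1, 1.0], [1, 5])
    for key, value in sorted(table.items()):
        print(key, f"{value:.3e}")
    print("chosen (sigma, lambda):", setting)
```

The chosen values then stay fixed during every later run, which is what distinguishes tuning from the control methods described next; a real study would also look at the spread of the runs, not only the mean.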

4.2. Control

Parameters can change while the metaheuristic is running; control is this change in “real time”. Following Hinterding et al. (1997) and Eiben et al. (1999), Kramer (2008) describes and discusses three methods to control parameters.

Deterministic: control can be static or dynamic. Static means there is no change at all; dynamic means the parameter changes according to a specific rule, like a dynamic penalty that changes with the distance to the feasible zone (Kramer, 2008; Mesa, 2010).

Adaptive: in this case, the parameter changes according to a feedback rule of the form “if this happens, then do that”. A classic example of adaptive parameters is the 1/5th success rule for mutation used in ES (Bäck, 1996; Kramer, 2008).

Self-adaptive: in this type of control, the parameters evolve along with the solutions to fit the problem, for example the self-adaptation of the mutation step size together with the mutation direction (Kramer, 2008; Schwefel, 1995).
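The three kinds of control can be contrasted in a few lines of Python. This is a sketch under stated assumptions: the penalty form (𝑐𝑡)^𝛼 · violation with 𝑐 = 0.5 and 𝛼 = 2 is one common dynamic-penalty schedule, not necessarily the one used in Mesa (2010); the 1/5th-rule factor 0.85 and the 10-generation window are assumed values; and the self-adaptive mutation uses a single log-normally mutated step size with 𝜏 = 1/√𝑛, a simplification of Schwefel's scheme, which also adapts mutation directions.

```python
import math
import random


def dynamic_penalty(f_x, violation, t, c=0.5, alpha=2.0):
    """Deterministic (dynamic) control: the penalty weight (c*t)**alpha follows a
    fixed schedule in the generation counter t, with no feedback from the search.
    `violation` is a non-negative distance to the feasible zone (0 if feasible).
    c and alpha are assumed values."""
    return f_x + (c * t) ** alpha * violation


def one_fifth_rule(sigma, success_rate, factor=0.85):
    """Adaptive control: the 1/5th success rule for the ES mutation step size.
    The factor 0.85 is an assumed value."""
    if success_rate > 0.2:
        return sigma / factor  # many successful mutations: take larger steps
    if success_rate < 0.2:
        return sigma * factor  # few successful mutations: take smaller steps
    return sigma


def self_adaptive_mutation(x, sigma, rng):
    """Self-adaptive control (simplified): sigma belongs to the individual and is
    mutated log-normally before it is used to mutate the object variables."""
    tau = 1.0 / math.sqrt(len(x))
    new_sigma = sigma * math.exp(tau * rng.gauss(0.0, 1.0))
    return [xi + new_sigma * rng.gauss(0.0, 1.0) for xi in x], new_sigma


def es_one_fifth(f, x, sigma=1.0, generations=200, window=10, seed=0):
    """(1+1)-ES whose step size is adapted with the 1/5th rule every `window` steps."""
    rng = random.Random(seed)
    fx, successes = f(x), 0
    for g in range(1, generations + 1):
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fy = f(y)
        if fy < fx:
            x, fx, successes = y, fy, successes + 1
        if g % window == 0:
            sigma = one_fifth_rule(sigma, successes / window)
            successes = 0
    return x, fx, sigma
```

The difference lies in where the new value comes from: a fixed schedule in `dynamic_penalty`, observed search feedback in `one_fifth_rule`, and evolution itself in `self_adaptive_mutation`.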

5. AN EXAMPLE OF ADAPTIVE AND SELF-ADAPTIVE PARAMETERS FOR DIFFERENTIAL EVOLUTION (DE)

Besides the metaheuristics covered by EC, there are at least twenty other metaheuristics, each with different variants and hybrids. For the sake of brevity, only parameter adaptation in Differential Evolution (DE) is reviewed, and only one self-adaptation approach is presented.

DE is a metaheuristic proposed by Price (1996). It has been classified as an evolutionary metaheuristic, but DE does not use formal operators (crossover, mutation) like other EC algorithms. This metaheuristic has certain characteristics that make it a good example to study: it is conceptually close to EC; it has one complete rule, in which the EC operators mutation and crossover can nonetheless be identified; it has few parameters; it is widely known; and a self-adaptive parameter approach exists for it.

DE has different versions; five classic versions are presented in (Mezura-Montes, et al., 2006). Brest, et al. (2006) use the version called DE/rand/1/bin to propose their self-adaptive parameters.

The parameters of the DE/rand/1/bin version are:

Population size 𝑁𝑃 (population level)

Scale factor 𝐹 (individual level)

Crossover parameter 𝐶𝑅 (component level)

Lower bound 𝐱𝐦𝐢𝐧

Upper bound 𝐱𝐦𝐚𝐱

Number of generations 𝐺 (population level)

Dimension 𝐷

Mutant vector 𝐯 (individual level)

Randomly chosen indices: 𝑟𝑛(𝑖), a random integer in [1, 𝐷], and 𝑟1, 𝑟2, 𝑟3, random integers in [1, 𝑁𝑃]

Random number 𝑟(𝑗), uniform in [0, 1]

Figure 1 shows the pseudocode of DE, where 𝐱 is a solution vector, 𝐮 is the trial vector built from the mutant vector 𝐯, and 𝑓(⋅) is the value of the objective function. Line 04 is the mutation operator (individual level). Line 06 is the complete heuristic rule. Lines 07-09 handle the bounds: when a component lies outside the feasible zone, it is forced back into a feasible position (exogenous level).

01 Start

02 for 𝑡 = 1 until 𝐺

03     for 𝑖 = 1 until 𝑁𝑃

04         𝐯𝑖 = 𝐱𝑟1 + 𝐹(𝐱𝑟2 − 𝐱𝑟3), with 𝑟1, 𝑟2, 𝑟3 and 𝑖 mutually distinct

05         for 𝑗 = 1 until 𝐷

06             𝑢𝑖𝑗 = 𝑣𝑖𝑗 if 𝑟(𝑗) ≤ 𝐶𝑅 ∨ 𝑗 = 𝑟𝑛(𝑖); 𝑢𝑖𝑗 = 𝑥𝑖𝑗 if 𝑟(𝑗) > 𝐶𝑅 ∧ 𝑗 ≠ 𝑟𝑛(𝑖)

07             if 𝑢𝑖𝑗 < 𝑥𝑚𝑖𝑛𝑗 ∨ 𝑢𝑖𝑗 > 𝑥𝑚𝑎𝑥𝑗 then

08                 𝑢𝑖𝑗 = 𝑟𝑎𝑛𝑑[𝑥𝑚𝑖𝑛𝑗, 𝑥𝑚𝑎𝑥𝑗]

09             end if

10         end for

11         𝐱𝑖(𝑡 + 1) = 𝐮𝑖 if 𝑓(𝐮𝑖) < 𝑓(𝐱𝑖); 𝐱𝑖(𝑡 + 1) = 𝐱𝑖 otherwise

12     end for

13 end for

14 end algorithm

Fig. 1. Pseudocode of DE.

In the original algorithm, the parameters were tuned manually based on a few tests; the recommended values were 𝐶𝑅 ∈ [0, 1] (real), 𝑁𝑃 ∈ [10, 50] (integer) and 𝐹 ∈ [0, 1.2] (real), while the other parameters are random or given by the problem. The parameters are controlled deterministically (static).
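The pseudocode in Fig. 1 can be turned into a runnable Python sketch. Besides the static setting just described, the function below optionally applies the adaptive rule of Ali and Törn (2004) and the self-adaptive rule of Brest, et al. (2006) given in equations (1) and (2) of this section. It is an illustration, not the authors' code: the function names, the default values of NP, F, CR and G, and the guard against zero objective values in the adaptive rule are assumptions. In the jDE mode, the new 𝐹 and 𝐶𝑅 of an individual are kept only when its trial vector wins the selection.

```python
import random


def ali_torn_F(fmin, fmax, l_min=0.4):
    """Adaptive F from eq. (1) (Ali and Törn, 2004). The zero-value handling
    is an assumption added so that the sketch never divides by zero."""
    if fmin != 0.0 and abs(fmax / fmin) < 1.0:
        return max(l_min, 1.0 - abs(fmax / fmin))
    if fmax != 0.0:
        return max(l_min, 1.0 - abs(fmin / fmax))
    return l_min  # fmin == fmax == 0: all individuals have the same value


def jde_update(F_i, CR_i, rng, F_l=0.1, F_u=0.9, tau1=0.1, tau2=0.1):
    """Self-adaptive F and CR from eq. (2) (Brest, et al., 2006)."""
    rand1, rand2, rand3, rand4 = (rng.random() for _ in range(4))
    new_F = F_l + rand1 * F_u if rand2 < tau1 else F_i
    new_CR = rand3 if rand4 < tau2 else CR_i
    return new_F, new_CR


def de_rand_1_bin(f, xmin, xmax, NP=20, F=0.5, CR=0.9, G=100,
                  control="static", seed=0):
    """DE/rand/1/bin following Fig. 1 (minimization).
    control: "static" (fixed F, CR), "ali_torn" (eq. 1) or "jde" (eq. 2)."""
    if NP < 4:
        raise ValueError("NP must be at least 4 (i, r1, r2, r3 must differ)")
    rng = random.Random(seed)
    D = len(xmin)
    pop = [[rng.uniform(xmin[j], xmax[j]) for j in range(D)] for _ in range(NP)]
    fit = [f(x) for x in pop]
    Fs, CRs = [F] * NP, [CR] * NP  # per-individual values, used by "jde"
    for _ in range(G):
        if control == "ali_torn":
            F_gen = ali_torn_F(min(fit), max(fit))  # one F per generation
        new_pop, new_fit = list(pop), list(fit)     # becomes x(t + 1)
        for i in range(NP):
            if control == "jde":
                F_i, CR_i = jde_update(Fs[i], CRs[i], rng)
            elif control == "ali_torn":
                F_i, CR_i = F_gen, CR
            else:
                F_i, CR_i = F, CR
            r1, r2, r3 = rng.sample([k for k in range(NP) if k != i], 3)
            rn = rng.randrange(D)  # component always taken from the mutant
            u = []
            for j in range(D):
                if rng.random() <= CR_i or j == rn:  # lines 04 and 06
                    u_j = pop[r1][j] + F_i * (pop[r2][j] - pop[r3][j])
                else:
                    u_j = pop[i][j]
                if u_j < xmin[j] or u_j > xmax[j]:   # lines 07-09
                    u_j = rng.uniform(xmin[j], xmax[j])
                u.append(u_j)
            f_u = f(u)
            if f_u < fit[i]:                          # line 11: greedy selection
                new_pop[i], new_fit[i] = u, f_u
                Fs[i], CRs[i] = F_i, CR_i             # jDE keeps winning values
        pop, fit = new_pop, new_fit
    best = min(range(NP), key=fit.__getitem__)
    return pop[best], fit[best]


def sphere(x):
    return sum(v * v for v in x)


if __name__ == "__main__":
    D = 3
    print(de_rand_1_bin(sphere, [-5.0] * D, [5.0] * D, G=200)[1])
    print(de_rand_1_bin(sphere, [-5.0] * D, [5.0] * D, NP=10 * D, CR=0.5,
                        G=200, control="ali_torn")[1])
    print(de_rand_1_bin(sphere, [-5.0] * D, [5.0] * D, G=200, control="jde")[1])
```

The mutant component is computed only where crossover selects it, which gives the same trial vector as building 𝐯𝑖 in full first. With the Ali and Törn mode, passing NP = 10·D and CR = 0.5 reproduces the fixed values they used.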

Later, Ali and Törn (2004) proposed an approach to control the parameters: they fixed 𝑁𝑃 = 10 ⋅ 𝐷 and 𝐶𝑅 = 0.5, and adapted 𝐹 using:

$$
F = \begin{cases}
\max\left(l_{\min},\; 1 - \left|\dfrac{f_{\max}}{f_{\min}}\right|\right) & \text{if } \left|\dfrac{f_{\max}}{f_{\min}}\right| < 1 \\[1ex]
\max\left(l_{\min},\; 1 - \left|\dfrac{f_{\min}}{f_{\max}}\right|\right) & \text{otherwise}
\end{cases}
\tag{1}
$$

where 𝑓𝑚𝑖𝑛 and 𝑓𝑚𝑎𝑥 are the minimum and maximum objective values found in the current generation, and 𝑙𝑚𝑖𝑛 is the lowest feasible value for 𝐹; in this case, 𝑙𝑚𝑖𝑛 = 0.4.

Brest, et al. (2006) proposed a self-adaptive strategy for 𝐹 and 𝐶𝑅 in which the values change for each individual. For each individual, a random vector 𝐫𝐚𝐧𝐝 of size 1 × 4, with components uniform in [0, 1], is drawn. For 𝐹, the constants 𝐹𝑙 = 0.1 and 𝐹𝑢 = 0.9 are proposed, so new values of 𝐹 lie in [0.1, 1.0]:

$$
F_i(t+1) = \begin{cases} F_l + rand_1 \cdot F_u & \text{if } rand_2 < \tau_1 \\ F_i(t) & \text{otherwise} \end{cases}
\qquad
CR_i(t+1) = \begin{cases} rand_3 & \text{if } rand_4 < \tau_2 \\ CR_i(t) & \text{otherwise} \end{cases}
\tag{2}
$$

where 𝜏1 = 𝜏2 = 0.1 are the probabilities of adjusting 𝐹 and 𝐶𝑅. The main advantage reported is the possibility of obtaining good results without running the metaheuristic many times to find the best parameters for each problem.

6. CONCLUSIONS

There is no general categorization for parameter selection in metaheuristics. EC has a good framework for parameter setting; it is important to notice that these metaheuristics have a high degree of formalization.

In the previous section, the level of each parameter was indicated in parentheses. Although the correspondence is not exactly as in EC, it could be possible to extend the classification to other metaheuristics. Having a formal framework to set the parameters is important, because it could help determine which parameters need more control than others before running long tests. In any case, this review only gives an initial idea of the possibilities; there is a lot of work to do in this direction.

REFERENCES


Ali, M., and Törn, A. (2004). Population set-based global optimization algorithms: Some modifications and numerical studies. Computers & Operations Research, 31(10), 1703–1725.

Angeline, P. (1995). Adaptive and Self-Adaptive Evolutionary Computations. In: Computational Intelligence (pp. 152-163). IEEE.

Brest, J., Greiner, S., Boskovic, B., Mernik, M., and Zumer, V. (2006). Self-Adapting Control Parameters in Differential Evolution: A Comparative Study on Numerical Benchmark Problems. IEEE Transactions on Evolutionary Computation, 10(6), 646-657.

Bäck, T. (1996). Evolutionary Algorithms in Theory and Practice. Oxford University Press.

Eiben, A. E., Hinterding, R., and Michalewicz, Z. (1999). Parameter control in evolutionary algorithms. IEEE Transactions on Evolutionary Computation, 3(2), 124-141.

Fogel, D. B., Fogel, L. J., and Atmar, J. W. (1991). Meta-evolutionary programming. In: Conference Record of the Twenty-Fifth Asilomar Conference on Signals, Systems and Computers (pp. 540–545). IEEE.

Hamadi, Y., Monfroy, E., and Saubion, F. (2011). What Is Autonomous Search? In: Hybrid Optimization, Eds. M. Milano and P. Van Hentenryck (pp. 357-392). Springer.

Himmelblau, D. (1972). Applied Nonlinear Programming (p. 498). McGraw-Hill.

Hinterding, R., Michalewicz, Z., and Eiben, A. E. (1997). Adaptation in evolutionary computation: a survey. In: Proceedings of 1997 IEEE International Conference on Evolutionary Computation (ICEC ’97) (pp. 65-69). IEEE.

Kramer, O. (2008). Self-Adaptive Heuristics for Evolutionary Computation (1st ed., p. 175). Berlin: Springer.

Mesa, E. (2010). Supernova: un algoritmo novedoso de optimización global [Supernova: a novel global optimization algorithm]. National University of Colombia. Retrieved from http://www.bdigital.unal.edu.co/2035/.

Mezura-Montes, E., Velázquez-Reyes, J., and Coello Coello, C. A. (2006). A comparative study of differential evolution variants for global optimization. In: Proceedings of the 8th Annual Conference on Genetic and Evolutionary Computation (pp. 485–492). ACM.

Müller, S., Airaghi, S., Marchetto, J., and Koumoutsakos, P. (2000). Optimization algorithms based on a model of bacterial chemotaxis. In: Proc. 6th Int. Conf. Simulation of Adaptive Behavior: From Animals to Animats, SAB 2000 Proc. Suppl. (pp. 375-384).

Price, K. V. (1996). Differential evolution: a fast and simple numerical optimizer. In: Proceedings of North American Fuzzy Information Processing (pp. 524-527). IEEE. doi: 10.1109/NAFIPS.1996.534790.

Schwefel, H. P. (1995). Evolution and Optimum Seeking (p. 435). New York: Wiley.

Talbi, E. (2009). Metaheuristics: From Design to Implementation (p. 618). Wiley.

Yang, X.-S. (2010). Engineering Optimization: An Introduction with Metaheuristic Applications. Hoboken: Wiley.

Zanakis, S. H., and Evans, J. R. (1981). Heuristic “Optimization”: Why, When, and How to Use It. Interfaces, 11(5), 84-91.

Zapfel, G., Braune, R., and Bogl, M. (2010). Metaheuristic Search Concepts (1st ed., p. 316). Berlin: Springer.

Zilinskas, A., and Törn, A. (1989). Global Optimization. In G. Goos and J. Hartmanis (Eds.), Lecture Notes in Computer Science vol. 350 (p. 255). Berlin: Springer.