Testing Ambiguity Theories in a New Experimental Design

with Mean-Preserving Prospects

BY

Chun-Lei Yang and Lan Yao

OCTOBER 2012

Abstract

Ambiguity aversion can be interpreted as aversion against second-order risks associated with

ambiguous acts, as in MEU/α-MP and KMM. In our design the decision maker (DM) draws twice

with replacement in the typical Ellsberg two-color urns, where a different color wins each

time. Consequently, all conceivable simple lotteries share the same mean, while the variance

increases with the color balance. MEU/α-MP, KMM and Savage’s SEU predict

unequivocally that risk-averse (-seeking) DMs shall avoid (choose) the 50-50 urn that

exhibits the highest risk conceivable. While this is true for many subjects, we also observe a

substantial number of violations. It appears that the ambiguity premium is partially paid to

avoid the ambiguity issue per se (the source), consistent with both experimental findings on

source dependence and the CEU weighting function model. This finding is robust even when

there is only partial ambiguity. We also show in an excursion that Machina’s paradox in the

reflection example disappears once the preference theories are formulated with our notion of

source-specific act.

KEYWORDS: Ambiguity, Ellsberg paradox, expected utility, experiment, Machina paradox,

second-order risk, source premium, source-specific act, weighting function

JEL classification: C91, D81

Acknowledgements: We are grateful to Jordi Brandts, Yan Chen, Soo-Hong Chew, Songfa

Zhong, Dan Houser, Ming Hsu, Jack Stecher, and Dongming Zhu for helpful comments.

Research Center for Humanities and Social Sciences, Academia Sinica, Taipei 115, Taiwan; e-mail:

cly@gate.sinica.edu.tw, fax: 886-2-27854160, http://idv.sinica.edu.tw/cly/

School of Economics, Shanghai University of Finance and Economics, Shanghai, China 200433; email:

yao.lan@mail.shufe.edu.cn


1. Introduction

The Ellsberg Paradox refers to the outcome from Ellsberg’s (1961) thought experiments, that

missing information about objective probabilities can affect people’s decision making in a way that is

inconsistent with Savage’s (1954) subjective expected utility theory (SEU). Facing two urns

simultaneously in Ellsberg’s two-color problem, one with 50 red and 50 black balls (the risky urn) and

the other with 100 balls in an unknown combination of red and black balls (the ambiguous urn), most

people prefer to bet on the risky urn, whichever the winning color is. This phenomenon is often called

ambiguity aversion. Many subsequent experimental studies confirm Ellsberg’s finding, as for example

surveyed in Camerer and Weber (1992).

Many extensions to SEU have been proposed to rationalize the Ellsberg paradox and applied to

economic analysis. Among the most prominent ones, Gilboa and Schmeidler (1989) develop the

maxmin expected utility (MEU) theory, generalized to the so-called α-MP (multi-prior) model by

Ghirardato, Maccheroni, and Marinacci (2004). MEU solves the paradox and has been applied to

studies on asset pricing in Dow and Werlang (1992) and Epstein and Wang (1994) among others.

Another theory that has found broad applications because of its convenient functional form is the

smooth model of ambiguity aversion by Klibanoff, Marinacci, and Mukerji (2005, KMM). Chen, Ju,

and Miao (2009), Hansen (2007), Hansen and Sargent (2008), and Ju and Miao (2009) successfully

applied KMM to studies of asset pricing and the equity premium puzzle. The third is the model of

Choquet expected utility (CEU) by Schmeidler (1989), where the DM uses a weighting function

called capacity to evaluate prospects.1 Mukerji and Tallon (2004) survey application of CEU in

various areas of economics such as insurance demand, asset pricing, and inequality measurement.

Given the success in the applied fields, many new experimental studies have been conducted to

test these models and characterize subjects’ behavior accordingly. However, all previous experiments

on ambiguity aversion we are aware of share the feature that the ambiguous prospect can be

associated with a first-order lottery that is of either lower mean or higher variance than the benchmark

risky prospect. As such, one cannot distinguish whether the observed ambiguity aversion reflects

willingness to pay an ambiguity premium for the second-order risk associated with the uncertain act,

which α-MP (MEU) and KMM predict, or for the issue of ambiguity per se, which turns out to be consistent with CEU and seems to be behind the ideas of source dependence studies initiated by Heath and Tversky (1991) and Fox and Tversky (1995). We have a lottery design that is a simple modification of Ellsberg’s two-color problem that enables this separation.

Footnote 1: For further theoretical models of multi priors, second-order sophistication, and rank-dependent utility, see Segal (1987, 1990), Casadesus-Masanell, Klibanoff, and Ozdenoren (2000), Nau (2006), Chew and Sagi (2008), Ergin and Gul (2009) and Seo (2009) among others. Wakker (2008) and Eichberger and Kelsey (2009) offer excellent surveys.

In our design, the DM draws twice with replacement from a two-color urn. With the novel rule of

each color winning exactly one of the draws, ours has the unique feature that all conceivable color

compositions yield the same expected value and differ only in the variance that increases with the

balance of color in the urn. The payoff is risk free if all balls in the urn are of the same color.

Consequently, according to SEU, α-MP (MEU) and KMM, a risk-averse DM is to prefer both the

ambiguous and the objective uniformly compound urn when pitched against the objective 50-50 urn;

while a risk-seeking DM’s preference displays the exactly reversed order. In fact, even without

precise knowledge of risk attitude, these theories predict that the DM is to consistently show the same

order of preference in these two decisions. Note, to avoid Machina’s paradox (Machina, 2009), the

preference models for testing are formulated with our new notion of source-specific act.2 This enables

us to identify partial ambiguity in a straightforward manner, and to design a partial ambiguity

treatment (PA) where the color composition in the urn is only partly unknown, in addition to the full

ambiguity one (FA), as robustness check for our basic finding of persistent violation to second-order

risk models of ambiguity. The predictions do not change when the extent of ambiguity varies from

full to partial.

It turns out that, once risk attitudes are elicited with a simple multi-price-list (MPL) method, 22-39% of subjects violate the above-mentioned theoretical predictions, depending on the decision problem and treatment condition. Disregarding risk attitude, 23-43% violate the consistency prediction.

Interestingly, CEU proves to be sufficiently general to not be tied down to any specific prediction for

testing, within our design. In particular, it is not bound to evaluating the utility function with a virtual

lottery (via weighting function) that is mean preserving, which the other theories require in our design.

To the extent that CEU’s weighting leads to a lower virtual mean, we may explicitly identify its

difference to the original mean as the premium for the source, besides the premium for second-order

risk associated with ambiguity postulated by the other theories mentioned.

Footnote 2: For detailed discussion of Machina’s paradox with his reflection example and how it goes away with the notion of source-specific act, see the Excursion in Section 4.

In the next section, we discuss the relevant preference models, our experimental design, and the

associated theoretical predictions. Data analysis is in Section 3. We then further interpret our results in

relation to findings in the literature in Section 4, where an excursion also discusses Machina’s reflection example and how our notion of source-specific act helps to resolve Machina’s dilemma, before concluding the paper with Section 5.

2. Theoretical Models and Experimental Design

Models of decision under uncertainty

Let Ω be a state space with a sigma algebra Σ, and X be an outcome space. An act is a mapping $f: \Omega \to X$. An individual is assumed to have a preference ordering over the space of all acts, for making decisions under uncertainty. For our purpose, assume the outcome space consists of finitely many real numbers that represent monetary payoffs, $X = \{x_1, x_2, \dots, x_n\}$, with $x_1 > x_2 > \dots > x_n$. For any set Z, let Δ(Z) denote the space of probability distributions, i.e. lotteries, on Z. An act f and a probability distribution on Ω induce a unique probability distribution $p \in \Delta(X)$. However, the specific lottery device, or the source that governs the circumstances of the underlying uncertainty, might involve higher-order compound lotteries in $\Delta^k(X)$ for arbitrary k that the DM may or may not reduce to their first-order forms before evaluation. Suppose k is the highest relevant order of stochastic elaboration by the DM; then different sources of uncertainty can be associated with different sets of admissible order-k compound lotteries, $S \subseteq \Delta^k(X)$. In the spirit of the revealed preference approach, we assume that the pair (f, S) summarizes all relevant aspects of a decision option and the DM is to be indifferent between (f, S) and (g, S) for any acts f and g. In other words, additional information details as reflected in the sub sigma algebra on Ω induced by $f^{-1}$ are considered irrelevant.

Note that this notion of (f, S) is an attempt to explicitly identify the source of uncertainty, and

hence is called a source-specific act subsequently. The motivation for this new notion comes from our

insight that Machina’s paradox can be avoided if the preference models below are defined on the

outcome space X with explicit recognition of partial ambiguous set 𝑆 ⊆ Δ𝑘 (𝑋), instead of their

standard definitions on the state space Ω where partial ambiguity is implicit in the act f.3 The

3

Details on our solution of Machina’s paradox can be found in the Discussions. Note that Gajdos, Hayashi,

4

ambiguous Choice C in our FA treatment, for example, has 𝑆𝐶 consisting of the 11 simple lotteries

listed in Table 1, or of its convex hull alternatively. All choices with objective lotteries have singleton

S. In the case of our compound lottery Choice D, for example, 𝑆𝐷 = {𝜇𝐷 } with 𝜇𝐷 ∈ Δ2 (𝑋)

representing the uniform distribution over 𝑆𝐶 . Source dependence as discussed in Fox and Tversky

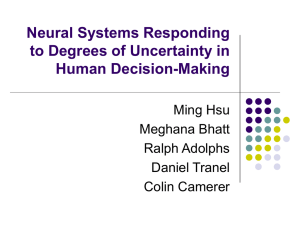

(1995), Hsu, Bhatt, Adolphs, Tranel and Camerer (2005), and Abdellaoui et al. (2011) among others

can be reinterpreted as different natural sources leading to different subjective specification of S,

presumably in the first-order space Δ(𝑋). Ergin and Gul’s (2009) issue dependence can be interpreted

as referring to differentiations in 𝑆 ⊆ Δ2 (𝑋) associated with different choices. Also, one advantage

of source-specific formulation is to explicitly discuss partial ambiguity, which motivates our PA

treatment.

With the term (f, S) defined, we now turn to well-known models that are relevant for our

experimental tests. Savage’s (1954) subjective expected utility theory assumes that there is a

monotone utility function on the outcome space, 𝑢: 𝑋 → ℝ, such that for each source-specific act (f, S)

with 𝑆 ⊆ Δ(𝑋), there is a subjective belief 𝑝 ∈ 𝑆 so that

$$\mathrm{SEU}(f, S) = \sum_{i=1}^{n} p(x_i)\,u(x_i) \tag{1}$$

Ghirardato et al. (2004) have the so-called α-MP (multi-prior) model, a generalization to MEU,

as follows. Given that the DM has a compact set K for each (f, S),

$$\alpha\text{-MP}(f, S) = \alpha \min_{p \in K \subseteq S} \sum_{i=1}^{n} p(x_i)\,u(x_i) + (1-\alpha) \max_{p \in K \subseteq S} \sum_{i=1}^{n} p(x_i)\,u(x_i) \tag{2}$$

In the extreme case of α = 1, we obtain the original MEU expression proposed by Gilboa and

Schmeidler (1989).

$$\mathrm{MEU}(f, S) = \min_{p \in K \subseteq S} \sum_{i=1}^{n} p(x_i)\,u(x_i) \tag{2a}$$

The smooth model of ambiguity aversion (KMM) by Klibanoff et al. (2005) considers the space of second-order compound lotteries as the relevant space for decision under uncertainty and assumes that there is a monotone function $v: \mathbb{R} \to \mathbb{R}$, with which the DM evaluates the certainty equivalents of first-order lotteries evaluated with u. For each (f, S) with $S \subseteq \Delta^2(X)$, there is a second-order subjective belief $\mu \in S$ so that

$$\mathrm{KMM}(f, S) = \int_{p \in \Delta(X)} v\Big(\sum_{i=1}^{n} p(x_i)\,u(x_i)\Big)\, d\mu(p) \tag{3}$$

Footnote 3 (continued): Tallon, and Vergnaud (2008) also attach an admissible set to an act in their model, which is defined on the state space, while ours is on the outcome space. Chew and Sagi (2008) have a model that identifies sources with small worlds in the form of sub sigma algebra on Ω.

Unlike the above models where the DM is to evaluate the act (f, S) with some admissible

probability distribution in S, Choquet expected utility by Schmeidler (1989) evaluates it with a

weighting function, called capacity, instead. Let $E_i = [x = x_i]$ denote the event that yields the monetary payoff $x_i$, which increases with i = 1, …, n. A weighting function w defined on the sigma algebra generated by these events is a capacity, if it is non-negative, $w(\emptyset) = 0$, $w(\bigcup_{i=1}^{n} E_i) = 1$, and $w(A) \le w(B)$ whenever $A \subseteq B$. The payoff under CEU is then the following.

$$\mathrm{CEU}(f, S) = \sum_{i=1}^{n} \Big[ w\Big(\bigcup_{j=1}^{i} E_j\Big) - w\Big(\bigcup_{j=1}^{i-1} E_j\Big) \Big] u(x_i) \tag{4}$$

Note with $q_i := w(\bigcup_{j=1}^{i} E_j) - w(\bigcup_{j=1}^{i-1} E_j)$, we have $\sum_{i=1}^{n} q_i = 1$ and $q_i \ge 0$, for all i. Thus, CEU

can be interpreted to evaluate the utility function u with a more flexible distribution q that may not be

in the set of admissible lotteries S. In our experimental study, this added degree of freedom proves to

be crucial to distinguish CEU from the other models. Note that in the special case that the DM assigns

a probability distribution p over the act, or when dealing with an objective lottery, the weighting takes

the form of an increasing function, 𝑤: [0,1] → [0,1], that is at the center of prospect theory by

Tversky and Kahneman (1992). We then can work with the following instead.

$$\mathrm{CEU}(f, S) = \sum_{i=1}^{n} \big[ w(p(x \le x_i)) - w(p(x \le x_{i-1})) \big] u(x_i) = \sum_{i=1}^{n} q_i(x_i)\,u(x_i) \tag{4a}$$

We refer to Wakker (2008) for more detailed discussion.
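As a minimal sketch of how (4a) turns an objective lottery into decision weights, the snippet below applies a weighting function to the 50-50 urn under the double-draw rule. The weighting function w(p) = √p and the linear utility are hypothetical choices made only for illustration; they are not taken from the paper or estimated from its data. Payoffs are ranked from lowest to highest, following the cumulative form p(x ≤ x_i) in (4a).

```python
def ceu_decision_weights(lottery, w):
    """Decision weights q_i = w(P(x <= x_i)) - w(P(x <= x_{i-1})), as in (4a).

    `lottery` maps each payoff to its probability; payoffs are processed
    from lowest to highest, matching the cumulative form in (4a).
    """
    weights = {}
    cumulative = 0.0
    previous = w(0.0)
    for payoff in sorted(lottery):
        cumulative += lottery[payoff]
        current = w(min(cumulative, 1.0))
        weights[payoff] = current - previous
        previous = current
    return weights


def ceu(lottery, w, u):
    """Choquet expected utility of an objective lottery under w and u."""
    q = ceu_decision_weights(lottery, w)
    return sum(q[x] * u(x) for x in q)


# Hypothetical ingredients, chosen only for illustration.
w_sqrt = lambda p: p ** 0.5     # increasing, with w(0) = 0 and w(1) = 1
u_linear = lambda x: float(x)   # risk-neutral utility: CEU equals the virtual mean

urn_b = {0: 0.25, 50: 0.50, 100: 0.25}  # 50-50 urn under the double-draw rule

q = ceu_decision_weights(urn_b, w_sqrt)
virtual_mean = ceu(urn_b, w_sqrt, u_linear)
print(q)
print(virtual_mean)
```

The weights q are non-negative and sum to one, so they form a virtual lottery. With this particular w the virtual mean falls below the objective mean of 50, whereas the identity weighting w(p) = p recovers the objective mean exactly.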

Decision problems of the experiment

There are three urns labeled B, C, and D. Each urn has 2N balls, each of which can be red or

white colored. The novel feature of our design is to have subjects draw from the selected urn twice

with replacement, with a different color winning 50 Yuan each draw. If the first draw is red and the

second is white, he gets 100 Yuan; if the two draws are of the same color, he gets 50 Yuan; but if the


two colors are in the order of white first and red second, he gets 0. Urn B is the 50-50 risky one with

exactly N red and N white balls. Urn C is the ambiguous urn where the number of red balls could be

any in a subset 𝑆 ⊆ 𝐻𝑁 : = {0,1, … ,2𝑁}. Urn D is a compound lottery with uniform distribution over S.

The options associated with urns B, C, D are subsequently denoted Choice B, C and D respectively.

Subjects face three simple decision problems one after another. Problem 1 is meant to test their

risk attitude. On a list of 20 cases of sure payoffs that range from 5 to 100 Yuan in steps of 5 Yuan,

subjects have to choose either the sure payoff or the risky one, Choice B, for every case.4 Problem 1

is in fact a simple form of the MPL procedure that can also be viewed as a modified version of the

BDM procedure.5 Problem 2 is our main test for theoretical predictions regarding ambiguity aversion.

In this problem, subjects have to decide between Choice B and Choice C. Problem 3 is a test on

preference over objective compound lotteries, where subjects are to choose between (the first-order

risk) Choice B and (the second-order risk) Choice D.

We have two main treatments that differ both in sizes of the urn and in whether there is full or

partial ambiguity in Choice C. In the full ambiguity treatment (FA), N = 5 and 𝑆FA = 𝐻5. In the

partial ambiguity treatment (PA), N = 8 and 𝑆PA = {0,1,2,3,4,5; 11,12,13,14,15,16} ⊆ 𝐻8 . By

definition, FA and PA also differ in Choice D due solely to the difference between 𝑆FA and 𝑆PA.

Note, however, that the feature of a different color winning each round ensures that the mean of the

lottery is always 50 Yuan, independent of the color composition in the urn. In fact, all compound

lotteries can be ranked regarding their variances, with Choice B being associated with the highest

possible variance. As illustration, Table 1 summarizes the statistical characteristics of all physically

feasible first-order lotteries in our design, for N = 5.
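The payoff rule described above can be checked by brute force. The following sketch enumerates every ordered pair of draws (with replacement) from an urn with a given number of red balls and computes the exact payoff distribution, its mean and its variance. Function names are illustrative only.

```python
from fractions import Fraction
from itertools import product


def payoff(first, second):
    """Payoff in Yuan: red wins 50 on the first draw, white wins 50 on the second."""
    return 50 * (first == "red") + 50 * (second == "white")


def induced_lottery(red, total):
    """Exact payoff distribution for two draws with replacement from the urn."""
    balls = ["red"] * red + ["white"] * (total - red)
    dist = {0: Fraction(0), 50: Fraction(0), 100: Fraction(0)}
    for first, second in product(balls, repeat=2):
        dist[payoff(first, second)] += Fraction(1, total * total)
    return dist


def mean_and_variance(dist):
    mean = sum(x * p for x, p in dist.items())
    variance = sum((x - mean) ** 2 * p for x, p in dist.items())
    return mean, variance


for red in range(11):  # N = 5, i.e. 10 balls in the urn
    dist = induced_lottery(red, 10)
    mean, variance = mean_and_variance(dist)
    print(red, [float(dist[x]) for x in (0, 50, 100)], mean, variance)
```

Every composition yields a mean of 50, and the variance peaks at the balanced urn with 5 red balls.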

Theoretical predictions

Let 𝜋ℎ𝑁 ∈ Δ(𝑋), with X = {0,50,100} being the outcome space, denote the induced simple

lottery that associates with a hypothetical urn with h red and 2N-h white balls, according to our

double-drawing rule. Let $H_N = \{\pi_h^N\}_{h=0}^{2N}$ denote, with slight abuse of notation, the physically feasible set of first-order lotteries under our design. Generically, the outcome probabilities are $\pi_h^N(0) = \pi_h^N(100) = h(2N-h)/(4N^2) =: p_h^0$ and $\pi_h^N(50) = 1 - 2p_h^0$, respectively. Due to our symmetrical design, {h-red, (2N-h)-white} and {(2N-h)-red, h-white} urns induce equivalent prospects, in all aspects relevant for decision under uncertainty. The mean for $\pi_h^N$ is the same 50 for all h. But the variance, $\mathrm{var}\,\pi_h^N = 2 \cdot 50^2\, p_h^0 = 1250\, h(2N-h)/N^2$, increases from h = 0 to h = N and then symmetrically decreases from h = N to h = 2N, with $\max_h \mathrm{var}\,\pi_h^N = \mathrm{var}\,\pi_N^N = 1250$. The crucial feature for our design is that a more color-balanced urn constitutes a mean-preserving spread to a less balanced one. As illustration, Table 1 summarizes the stochastic characteristics of all 11 elements in $H_5$.

Footnote 4: We aim at revealing individual certainty equivalent values of Choice B. Though we may alternatively replace Choice B with its reduced form (100, 1/4; 50, 1/2; 0, 1/4) here, it would lose the structural congruence to Choice C and D, which we consider eminently crucial to our design.

Footnote 5: Sapienza, Zingales and Maestripieri (2009) use a similar method. See Becker, DeGroot, and Marschak (1964) for BDM procedure. See Holt and Laury (2002) for multi-price-list (MPL) procedure. See also Harrison and Rutström (2008) and Trautmann, Vieider and Wakker (2011). Detailed discussion can be found in Appendix C2.

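Table 1: Complete list of feasible first-order lotteries,⁶ N = 5

| | $\pi_0$ | $\pi_1$ | $\pi_2$ | $\pi_3$ | $\pi_4$ | $\pi_5$ | $\pi_6$ | $\pi_7$ | $\pi_8$ | $\pi_9$ | $\pi_{10}$ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Red | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| White | 10 | 9 | 8 | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
| p(0) | 0 | .09 | .16 | .21 | .24 | .25 | .24 | .21 | .16 | .09 | 0 |
| p(50) | 1 | .82 | .68 | .58 | .52 | .50 | .52 | .58 | .68 | .82 | 1 |
| p(100) | 0 | .09 | .16 | .21 | .24 | .25 | .24 | .21 | .16 | .09 | 0 |
| mean | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 |
| variance | 0 | 450 | 800 | 1050 | 1200 | 1250 | 1200 | 1050 | 800 | 450 | 0 |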

Though there are only 2𝑁 + 1 lotteries in 𝐻𝑁 physically feasible, it is nonetheless conceivable

that more complicated compound lottery devices can be used to determine which of them gets

chosen.7 To be on the safe side, let us assume that the relevant set of lotteries for the ambiguous

Choice C under SEU, α-MP, MEU and CEU is in the convex hull of 𝐻𝑁 , i.e., 𝑆𝐶 ⊆ co 𝐻𝑁 ⊆ Δ(𝑋).

At the heart of our design is the feature that, for any $\pi, \pi' \in S_C$, mean(π) = mean(π′) = 50 and one of π and π′ is a mean-preserving spread to the other due to the nature of $H_N$. In fact, let $p_\pi^0$ denote the probability π assigns to x = 0 for any $\pi \in S_C$; then $p_\pi^0 \in [0, .25]$, i.e. $S_C$ can be represented as a one-parameter family by the compact interval [0, .25]. Let $n = \#S_C$; then $\pi_D = \sum_{h \in S_C} \pi_h / n \in S_C$ is the reduced first-order distribution for Choice D, with $\mathrm{var}\,\pi_D = \sum_{h \in S_C} \mathrm{var}\,\pi_h / n < \mathrm{var}\,\pi_N^N$.⁸ More specifically, for treatments FA and PA, $p_{\pi_D^{FA}}^0 = .15$, $p_{\pi_D^{PA}}^0 \approx .12$, $\mathrm{var}\,\pi_D^{FA} = 750$, and $\mathrm{var}\,\pi_D^{PA} \approx 602$, respectively.

Footnote 6: Table 1 summarizes all possible first-order lotteries given this payoff rule, with $\pi_h$ coding for the lottery with h red balls and 10-h white balls. There are exactly 11 of them. Each column lists the distribution of monetary outcome, its mean and its variance. For example, the urn with 4 red and 6 white balls, $\pi_4$, gives us the probabilities of .24, .52, and .24 to earn the prize of 0, 50, and 100 Yuan, respectively; with a mean of 50 Yuan and a variance of 1200. Obviously, our modified Ellsberg risky prospect, $\pi_5$, has the highest variance of 1250, while all color compositions yield the same mean payoff.

Footnote 7: For example, Stecher, Shields and Dickhaut (2011) have an ingenious method to generate virtual ambiguity via objective but mathematically involved compound lotteries, which illustrates the need to consider the convex hull here.
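The reduced Choice D figures quoted above can be recomputed from the closed form $p_h^0 = h(2N-h)/(4N^2)$. The sketch below averages over the admissible red-ball counts of each treatment; the function and variable names are illustrative, not the authors’.

```python
from fractions import Fraction


def p_zero(h, n_half):
    """Probability of the 0 payoff (and of the 100 payoff) with h red balls out of 2N."""
    return Fraction(h * (2 * n_half - h), 4 * n_half ** 2)


def choice_d(red_counts, n_half):
    """Reduced p(0) and variance of the uniform compound lottery over red_counts."""
    p0 = sum(p_zero(h, n_half) for h in red_counts) / len(red_counts)
    variance = 2 * 50 ** 2 * p0  # payoffs 0 and 100 each lie 50 away from the mean
    return p0, variance


S_FA = range(0, 11)                             # N = 5: all 11 compositions
S_PA = list(range(0, 6)) + list(range(11, 17))  # N = 8: the 12 admissible ones

p0_fa, var_fa = choice_d(S_FA, 5)
p0_pa, var_pa = choice_d(S_PA, 8)
print(float(p0_fa), float(var_fa))
print(float(p0_pa), float(var_pa))
```

Because every first-order lottery has the same mean, the variance of the reduced lottery is just the average of the component variances, which is why a single `p0` average suffices here.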

Once the DM reveals his risk attitude associated with u in Problem 1 as being risk averse, risk neutral or risk seeking (corresponding to CE < 50, = 50, or > 50, i.e., $u(0) + u(100) - 2u(50) < 0$, = 0, or > 0), specific predictions can be derived for his decision in Problems 2 and 3 based on the above-mentioned models, which we can test experimentally. First, Problem 3 only involves singleton sources $S_B = \{\pi_B\}$ and $S_D = \{\pi_D\}$, i.e., there is no ambiguity. It is obvious that both SEU and α-MP predict preferences of D over B for a risk-averse DM as well as B over D for risk-seeking DMs, because $\pi_B = \pi_N^N$ is a mean-preserving spread of $\pi_D$. In fact, as $\pi_B$ is a strict mean-preserving spread to any lottery in $S_C$ but itself, a risk-averse (-seeking) DM in Problem 2 is also to prefer C to B (B to C), as long as he does not put all weight on $\pi_N^N$ when evaluating Choice C. In the PA treatment, the potential indifference is ruled out by design as $\pi_N^N \notin S_C = S_{PA}$. In the FA, the latter is the case if $\pi_B \notin K \subseteq S_C$ in the MEU formula (2a) or α < 1 even when $K = S_C$ with α-MP. This exactly illustrates the fundamental difference from Ellsberg’s original design, where a subjective probability can be associated with the ambiguous prospect that may yield a higher mean or a lower variance than the benchmark risky prospect.

The same prediction is also true for KMM. For Choice C under KMM, we assume $S_C^{KMM} = \{\varphi \in \Delta^2(X): \mathrm{supp}\,\varphi \subseteq S_C\}$, whose first-order reduction trivially coincides with $S_C$. Let $c_\pi := p_\pi^0 [u(0) + u(100)] + (1 - 2p_\pi^0)\,u(50)$ denote the expected utility for $\pi \in S_C$. For any $\pi, \pi' \in S_C$,

$$c_\pi - c_{\pi'} = (p_\pi^0 - p_{\pi'}^0)\,[u(0) + u(100) - 2u(50)]. \tag{5}$$

For any $\pi' \in S_C$ with $\pi' \ne \pi_B$, since $p_{\pi_B}^0 = .25 > p_{\pi'}^0$, $c_{\pi_B} - c_{\pi'} \lessgtr 0$ iff $u(0) + u(100) - 2u(50) \lessgtr 0$, i.e. iff CE ≶ 50. Now, for any strictly increasing v(.) and any $\mu \in S_C^{KMM} \subseteq \Delta^2(X)$ without degenerately putting all weight on $\pi_B$, we conclude from the definition of KMM that

$$\mathrm{KMM}(B) \lessgtr \mathrm{KMM}(C) \iff \mathrm{KMM}(B) \lessgtr \mathrm{KMM}(D) \iff \mathrm{CE} \lessgtr 50 \tag{6}$$

The proof is straightforward in that, due to monotonicity, $v(c_{\pi_B})$ is either the maximum or the minimum on $\{v(c_\pi): \pi \in S_C\}$, depending on whether the DM is risk seeking or averse. Note that this is true whether the support for μ is restricted to $S_C$ or co $S_C$. In summary, we have the following theoretical predictions to test.

Footnote 8: Note for any compound lottery $y = (p_h : x_h)_h$, $\mathrm{var}\,y = \sum_h p_h\,\mathrm{var}\,x_h + \sum_h p_h(\bar{x}_h - \bar{y})^2$. Due to mean preserving, the second term vanishes in our design.

Hypothesis In both FA and PA treatments, SEU, α-MP (MEU in limit case), and KMM predict that

risk-averse individuals (CE<50 in Problem 1) are to choose C over B in Problem 2 and D over B in

Problem 3, while risk-seeking individuals (CE>50 in Problem 1) are to choose B over C or D in both

Problem 2 and 3.
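A numerical illustration of the Hypothesis, as a sketch under stated assumptions: the utility u(x) = √x (risk averse), the second-order function v, the value α = 0.5 and the uniform second-order belief are all hypothetical choices, not specifications from the paper. The code evaluates Choice B and the FA ambiguous set with α-MP (taking K equal to the full set) and with KMM.

```python
import math


def expected_utility(p0, u):
    """c_pi for a lottery with p(0) = p(100) = p0 and p(50) = 1 - 2*p0."""
    return p0 * (u(0) + u(100)) + (1 - 2 * p0) * u(50)


def p_zero(h, n_half):
    """Probability of the 0 payoff with h red balls out of 2N."""
    return h * (2 * n_half - h) / (4 * n_half ** 2)


def alpha_mp(p0_set, u, alpha):
    """alpha-MP value when the prior set K is the whole admissible set."""
    values = [expected_utility(p0, u) for p0 in p0_set]
    return alpha * min(values) + (1 - alpha) * max(values)


def kmm(p0_set, u, v):
    """KMM value with a uniform second-order belief over p0_set (an assumption)."""
    return sum(v(expected_utility(p0, u)) for p0 in p0_set) / len(p0_set)


u_averse = math.sqrt                # hypothetical concave utility
v = lambda c: -math.exp(-0.5 * c)   # hypothetical strictly increasing v

S_FA = [p_zero(h, 5) for h in range(11)]
value_b = expected_utility(0.25, u_averse)

print("Expected utility of B:", value_b)
print("alpha-MP value of C (alpha = 0.5):", alpha_mp(S_FA, u_averse, 0.5))
print("KMM value of B:", v(value_b))
print("KMM value of C or D (uniform belief):", kmm(S_FA, u_averse, v))
```

For this risk-averse u, both models rank the ambiguous (or compound) option above B; with α = 1 and K equal to the full FA set, the α-MP value of C coincides with that of B, which is the potential indifference case noted in the text.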

Note that any decision in Problems 2 and 3 by a Problem-1 risk-neutral individual is trivially

consistent with the theory prediction, as is obvious from equation (5) above. And the theories predict

that people with non-neutral risk attitudes should have a strict preference among the two choices in

both Problems 2 and 3, which makes it redundant to provide the option of indifference between the

two choices in Problems 2 and 3 in the design. The above discussion also reveals that risk aversion or

seeking is exactly equivalent to the choice of either D or B in Problem 3. Thus, a weaker consistency

requirement restricted to behavior in Problem 2 and 3 is that the DM shall do either BB or CD there.

Hypothesis* (Weak consistency) To be consistent with models of SEU, α-MP (MEU in limit case)

and KMM, individuals shall choose either BB or CD in Problems 2 and 3, in both FA and PA.
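The two hypotheses translate directly into classification rules for individual subjects. The following sketch encodes them; the function names and the example subject are hypothetical and serve only to illustrate the logic.

```python
def risk_attitude(ce):
    """Classify a subject by the certainty equivalent of Choice B (mean 50)."""
    if ce < 50:
        return "averse"
    if ce > 50:
        return "seeking"
    return "neutral"


def violates_hypothesis(ce, problem, choice):
    """True if a choice in Problem 2 or 3 contradicts SEU, alpha-MP and KMM."""
    attitude = risk_attitude(ce)
    if attitude == "neutral":
        return False  # any choice is consistent for a risk-neutral subject
    predicted = "B" if attitude == "seeking" else {2: "C", 3: "D"}[problem]
    return choice != predicted


def weakly_consistent(choice_2, choice_3):
    """Hypothesis*: only the joint profiles BB and CD are consistent."""
    return (choice_2, choice_3) in {("B", "B"), ("C", "D")}


# Hypothetical subject: CE of 45 Yuan, picks B in Problem 2 and D in Problem 3.
print(violates_hypothesis(45, 2, "B"))
print(violates_hypothesis(45, 3, "D"))
print(weakly_consistent("B", "D"))
```

Weak consistency needs no information from Problem 1, so it can be checked even for subjects whose risk attitude is unknown.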

In contrast, such sharp behavior predictions cannot be made with CEU. It turns out that any

decision profile across Problem 1-3 can be rationalized within the CEU model.

Lemma 1 For any combination of decisions in Problems 1, 2 and 3 in FA and PA, there is a

weighting function 𝑤 under the CEU model that rationalizes them.

A detailed proof can be found in Appendix C1. One way to understand this difference between

CEU and α-MP/KMM is to recall the fact that each weighting function 𝑤 induces a virtual lottery

𝑞 ∈ ∆(X) not necessarily in the mean-preserving class of co 𝑆𝐶 , so that CEU is exactly the expected

utility weighted with 𝑞 . In fact, 50 − ∑𝑛𝑖=1 𝑞𝑖 (𝑥𝑖 )𝑥𝑖 can be roughly interpreted as the source

premium, which would be zero under α-MP/KMM in similar terms due to our special prospect design.


Note, although our design is not intended to discriminate among different shapes of 𝑤, the partial

ambiguity approach may be useful for this in future studies.

Experimental procedure

We ran two treatments that differ in the number of balls in the urn and whether the color

composition is partially unknown. The full ambiguity one (FA) has 10 balls in the urn with full

uncertainty over the 11 color compositions in Choice C. The partial ambiguity one (PA) has 16 balls

in the urn with uncertainty over a set of 12 of the total 17 color compositions, presented with the

explanation to subjects that the absolute difference of the two colors is at least 6. The design is chosen so that the size of the ambiguous set is similar (n=11 vs. n=12), there is some difference in maximal risk between PA and FA ($\max_{h \in S_{PA}} \mathrm{var}\,\pi_h^8 \approx 1074 < 1250 = \mathrm{var}\,\pi_N^N$), but the former is not too small to make the ambiguity issue irrelevant (e.g. under N=1000, n=12). Note that the primary purpose of designing two treatments this way is to check whether and how any potential violation to the main hypotheses is robust.

Our instructions were done with a PowerPoint presentation (Appendix A). Subjects were to hand

in their decisions on one problem before they got instructions for the next one. To increase credibility,

we demonstrated drawings with the urn to be used later in Choices B and D during instructions.

Choice C urn was prepared before the session and placed on the counter for all to see.9 After all

decision sheets were collected, subjects were called upon to have their decisions implemented one by

one.10 For both FA and PA, subjects drew randomly from one of the three decision problems and

were paid in cash according to the realization of their decisions in that problem. Note, in an initial

study, we ran sessions for FA with only about 10% of subjects randomly chosen for payment. To add

to data robustness, we also present its results here and call it FAR treatment henceforth.

Footnote 9: Note, our double-draw alternate-color-win design conceptually removes the subjects’ fear of possible manipulation of color composition by the experimenter. Nevertheless, students still regularly asked to inspect the content of the ambiguous urn C after the decision implementation.

Footnote 10: After subjects handed in their decisions, they were given the option to have the payment procedure implemented later in the experimenter’s office, if they did not wish to wait. Only two of them made use of this option.

A total of 269 subjects from Shanghai University of Finance and Economics participated in the experiment. All participants were first-year college students of various majors ranging from economics and management to science and language. 160 students participated in the treatments PA and FA and all of them were paid. We ran two sessions in each treatment. 109 subjects participated in the treatment FAR of three sessions, and only 11 randomly chosen subjects were paid. Average payoff for all 171 subjects with real payment in the three treatments was 62.2 Yuan, and average duration for a session was 40 min.¹¹ Note we also ran an auxiliary session with 30 subjects on incentivized comprehension tests. Details on its motivation, design and outcomes can be found in Appendix B1.

3. Experimental Results

Problem 1 elicits individuals’ risk attitude. The certainty equivalent value (hereafter CE) of the risky lottery (Choice B) in our experiment is defined as the lowest sure payoff at which one starts to prefer the sure payoff to the lottery. The majority of subjects (77, 72, and 100 in PA, FA, and FAR respectively)

revealed monotone behavior of switching from B to A with increasing sure payoffs. Subsequent

analyses are restricted to these samples only.12 Note the incentivized comprehension tests show that

subjects from the cohort have no problem understanding the statistical implications of our unique

double-draw lottery design. In addition, most people displayed preferences over different urns of our

design that are consistent with standard theory of risk. Details are in Appendix B1.
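The CE definition and the monotonicity screen can be sketched as follows. The labels "A" (sure payoff) and "B" (lottery) follow the text’s description of switching from B to A; returning `None` for a subject who never chooses the sure payoff is an assumption of this sketch, since the text does not say how such a case would be coded.

```python
SURE_PAYOFFS = list(range(5, 105, 5))  # 20 rows: 5, 10, ..., 100 Yuan


def certainty_equivalent(choices):
    """Return the CE implied by a 20-row price list, or None if it is undefined.

    `choices[k]` is "A" (sure payoff) or "B" (lottery) for SURE_PAYOFFS[k].
    A monotone subject picks B on low rows and A from some row onward.
    """
    if len(choices) != len(SURE_PAYOFFS):
        raise ValueError("expected one choice per sure payoff")
    if "A" not in choices:
        return None  # never switches; how to code this case is an assumption
    first_a = choices.index("A")
    if "B" in choices[first_a:]:
        return None  # switched back from A to B: treated as anomalous
    return SURE_PAYOFFS[first_a]


monotone = ["B"] * 9 + ["A"] * 11                # hypothetical subject switching at 50 Yuan
anomalous = ["B"] * 5 + ["A", "B"] + ["A"] * 13  # hypothetical switch-back pattern
print(certainty_equivalent(monotone))
print(certainty_equivalent(anomalous))
```

A subject whose first "A" occurs at the 50 Yuan row is classified as risk neutral, earlier rows indicate risk aversion, and later rows indicate risk seeking.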

The average CE values are 50, 49.65 and 46.1 for the treatments PA, FA and FAR with standard deviations of 12.46, 11.11 and 15.22, respectively. In PA, we have 28.57%, 42.86% and 28.57% of the subjects with CE<50, CE=50, and CE>50, respectively, which correspond to risk aversion, neutrality, and seeking. The numbers in FA are 34.72%, 34.72%, and 30.56%, and those in FAR are 38%, 32% and 30%, respectively.¹³ A chi-square test reveals no significant difference between PA and FA regarding subject risk attitudes among the categories of risk averse, neutral and seeking (p=0.569). Figure B1 in the appendix shows the distributions of subjects’ CE values.

Footnote 11: Note that 1 USD = ca. 6.8 Yuan. Regular student jobs paid about 7 Yuan per hour and average first jobs for fresh graduates paid below 20 Yuan per hour. The duration of 40 minutes is the average time spent by all subjects including the long waiting time for payoff implementation.

Footnote 12: Only 8 out of 85 subjects (9.41%) in the PA treatment, 3 out of 75 subjects (4%) in the FA treatment, and 9 out of 109 subjects (8.26%) in the FAR treatment switched back from A to B, which is deemed anomalous and excluded from our data analysis. We also ran an additional session of the treatment FAR (41 subjects) with the alternate order of problems 1, 3 and 2, to control for potential order effects. A chi-square test finds no evidence of order effects, with p=0.831, 0.640, and 0.759 for Problem 2, 3, and both combined, in comparison with the order used in our design. Arló-Costa and Helzner (2009) report a similar order-independence finding. We did not include the session in this paper.

Footnote 13: Note that this kind of distribution of risk attitude is common in the literature. Halevy (2007), using the standard BDM mechanism, has 31.7%, 30.5%, and 38.5% of the 105 subjects in his sample as risk averse,

As summarized in the Hypothesis, for risk-averse (-seeking) individuals for Problem 2 and 3, the

theories of α-MP (MEU) and KMM predict the choice of C and D (B and B), respectively. Figure 1

illustrates the violation rates. Note that [-,-] in the brackets refers to the 95% confidence interval expressed in percent, throughout this paper. In PA, we observe 22.08% ([12.60, 31.55]) of violations in each of Problems 2 and 3 when all samples are considered. In FA, these numbers are 27.78% ([17.18, 38.38])

in both Problem 2 and 3. In FAR, we observe 39% ([29.40, 49.27]) and 36% ([26.64, 46.21]) of

violations in Problem 2 and 3, respectively. Details can be found in Table B2 in Appendix B2.14 The

equality of proportion test on the difference in violation rate between PA and FA yields p-values

0.2105 and 0.2105 for Problem 2 and 3 respectively.
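As a rough check on the bracketed intervals, the following sketch computes an exact (Clopper-Pearson) binomial confidence interval by bisection. The text does not state which interval method was used; the exact method is an assumption made here because the asymmetric FAR intervals look consistent with it, while the symmetric PA and FA intervals may have been computed differently. The function names are illustrative, and the count 39 out of 100 is read off the reported 39% in FAR.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

def _bisect(func, lo=0.0, hi=1.0, iters=60):
    """Root of a function that is increasing on [lo, hi] and changes sign there."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if func(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(k, n, alpha=0.05):
    """Exact two-sided (1 - alpha) interval for a binomial proportion.

    Assumed method: the source does not say how its intervals were computed.
    """
    # Lower bound: the p at which P(X >= k) equals alpha / 2.
    lower = 0.0 if k == 0 else _bisect(
        lambda p: (1 - binom_cdf(k - 1, n, p)) - alpha / 2)
    # Upper bound: the p at which P(X <= k) equals alpha / 2.
    upper = 1.0 if k == n else _bisect(
        lambda p: alpha / 2 - binom_cdf(k, n, p))
    return lower, upper

lo, hi = clopper_pearson(39, 100)  # Problem 2 violations in FAR
print(f"[{100 * lo:.2f}, {100 * hi:.2f}]")
```

The printed interval can be compared with the reported [29.40, 49.27]; a close match would support, but not prove, the assumed method.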

Figure 1: Proportion of violations in Problem 2 and 3

[Figure 1 omitted. Vertical axis: proportion of violations, from 0 to 0.6. Groups: Averse (CE<50), Neutral (CE=50), Seeking (CE>50), All included; treatments PA, FA and FAR within each group. Series: Problem 2, Problem 3.]

So far, we have discussed decision consistency comparing Problem 1 with 2 and 1 with 3. In fact,

even without Problem 1, the theories also have a clear prediction on joint decisions within Problem 2

and 3, as set forth in the Hypothesis* (weak consistency).

The proportions of the types BB, BD, CB and CD are listed in Table 2, where numbers in brackets

indicate sample sizes. The proportions of inconsistent types (BD and CB) in PA and FA are 23.38%

neutral, and seeking, respectively.

14

For comparison, proportions for decisions in favor of the 50-50 risky, indifferent, or ambiguous urn in a

standard Ellsberg 2-color problem are (31.43%, 31.43%, 37.14%) in Stecher, Shields and Dickhaut (2011),

(46%, 10%, 44%) among Halevy’s (2007) risk-averse subjects, and (86.05%, 9.3%, 4.65%) among Halevy’s

(2007) risk-seeking subjects, respectively.

([13.71, 33.05], 18 out of 77 observations) and 33.33% ([22.18, 44.49], 24 out of 72 observations),

respectively. The former is lower than the latter, significant at the 10% level, with p=0.0885 in the equality of two

proportion test. In FAR, the proportion of inconsistent types (BD and CB) is 43% ([33.14, 53.29], 43 out

of 100 observations). Such high rates of inconsistency suggest that people may inherently treat

the ambiguous and the compound-risk issues differently.15 The difference between PA and FA also

suggests that people behave more consistently when facing less ambiguous situations. Besides, it is

interesting to observe that the proportion of inconsistency weakly decreases from risk-averse to

risk-neutral and risk-seeking subjects, (40, 32, 27) for FA and (27.27, 24.24, 18.18) for PA, as well as

from FA to PA. In combination with Figure 1, the latter observation suggests that violations of the theories

of SEU, MEU and KMM might decrease with a reduction of ambiguity, which is the case from FA to

PA.

Another pattern of behavioral inconsistency is reflected in the relative frequency of people

switching from B in Problem 2 to non-B in Problem 3, and vice versa. In the treatment FA, we

find that the switch rates are 16/38 = 42.11% ([26.31, 59.18]) (BD/(BD+BB)) and 8/34 = 23.53%

([10.75, 41.17]) (CB/(CB+CD)). The former is significantly higher than the latter, with p=0.0475 in

the equality of two proportion test. In the treatment PA, the switch rates are 11/32 = 34.38% ([18.57,

53.19]) (BD/(BD+BB)) and 7/45 = 15.56% ([6.49, 29.46]) (CB/(CB+CD)). Again, the former is

significantly higher than the latter, with p=0.0272 in the equality of two proportion test. Thus, it is

interesting to observe that in both treatments people with a preference for the ambiguous option in

Problem 2 turn out to be more consistent than those who choose B. One way to understand this result

is to assume that the decision for C or D involves some self-selection of DMs who are

more predisposed to follow second-order risk models.
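The equality of two proportion tests quoted above can be sketched as a pooled z-test. The one-sided alternative and the pooled standard error are assumptions made here, since the text does not spell out the procedure; the counts are the ones stated in the text (18/77 and 24/72 inconsistent types, and the switch-rate fractions). The function name is illustrative.

```python
from math import erfc, sqrt

def one_sided_two_proportion_test(k_low, n_low, k_high, n_high):
    """Pooled z-test of H0: equal proportions vs H1: the second is larger.

    Returns (z, p_value). Assumed procedure; not confirmed by the text.
    """
    p_low, p_high = k_low / n_low, k_high / n_high
    pooled = (k_low + k_high) / (n_low + n_high)
    se = sqrt(pooled * (1 - pooled) * (1 / n_low + 1 / n_high))
    z = (p_high - p_low) / se
    p_value = 0.5 * erfc(z / sqrt(2))  # upper-tail standard normal probability
    return z, p_value

comparisons = {
    "inconsistent types, PA vs FA": (18, 77, 24, 72),
    "FA switch rates, CB/(CB+CD) vs BD/(BD+BB)": (8, 34, 16, 38),
    "PA switch rates, CB/(CB+CD) vs BD/(BD+BB)": (7, 45, 11, 32),
}
for label, counts in comparisons.items():
    z, p = one_sided_two_proportion_test(*counts)
    print(f"{label}: z = {z:.3f}, one-sided p = {p:.4f}")
```

Under these assumptions the three p-values can be compared with the reported 0.0885, 0.0475 and 0.0272.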

Table 2. Proportion (%) of the types BB, BD, CB and CD

        Risk averse             Risk neutral            Risk seeking            All
        PA      FA      FAR     PA      FA      FAR     PA      FA      FAR     PA      FA      FAR
        [22]    [25]    [38]    [33]    [25]    [32]    [22]    [22]    [30]    [77]    [72]    [100]
BB      13.64   20      28.95   27.27   32      28.13   40.91   40.91   23.33   27.27   30.56   27
BD      13.64   24      26.32   18.18   24      31.25   9.09    18.18   16.67   14.29   22.22   25
CB      13.64   16      18.42   6.06    8       18.75   9.09    9.09    16.67   9.09    11.11   18
CD      59.08   40      26.32   48.49   36      21.88   40.91   31.82   43.33   49.35   36.11   30

15

The results from the comprehension tests as reported in Appendix B3 rule out the concern that subjects may

not have properly understood the statistical implications of our double-draw design.

Note that we also reject the randomization hypothesis of a uniform distribution over the

four decision types BB, BD, CB and CD for PA (p=0.000) and FA (p=0.0168), but not for FAR

(p=0.3735), using the chi-square goodness-of-fit test. Moreover, the equality of proportion test rejects the

hypothesis that the inconsistent types result from 50-50 randomization, with p=0.0000, 0.0023 and

0.0163 in PA, FA and FAR, respectively.
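The goodness-of-fit test against uniform randomization over the four types can be sketched as follows. The counts are not given in the text; they are reconstructed here from the percentages and sample sizes in Table 2 (for example, 27.27% of 77 is 21), which is an assumption. The closed-form tail probability is valid only for 3 degrees of freedom, that is, for exactly four categories.

```python
from math import erfc, exp, pi, sqrt

def chi2_sf_df3(x):
    """Upper-tail probability of a chi-square variable with 3 degrees of freedom."""
    return erfc(sqrt(x / 2)) + sqrt(2 * x / pi) * exp(-x / 2)

def uniform_gof(counts):
    """Pearson statistic and p-value against a uniform split over 4 types."""
    assert len(counts) == 4, "the closed-form p-value assumes 3 degrees of freedom"
    expected = sum(counts) / len(counts)
    stat = sum((c - expected) ** 2 / expected for c in counts)
    return stat, chi2_sf_df3(stat)

# Counts of (BB, BD, CB, CD), reconstructed from Table 2 (assumption).
type_counts = {
    "PA": (21, 11, 7, 38),
    "FA": (22, 16, 8, 26),
    "FAR": (27, 25, 18, 30),
}
for treatment, counts in type_counts.items():
    stat, p = uniform_gof(counts)
    print(f"{treatment}: chi2 = {stat:.2f}, p = {p:.4f}")
```

With these reconstructed counts, uniformity is rejected at conventional levels for PA and FA but not for FAR.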

4. Discussions

Following Savage’s tradition of subjective expected utility, MEU and KMM also posit that the

DM evaluates the uncertain prospect with a feasible distribution on outcomes. Ambiguity aversion is

traditionally interpreted as willingness to pay a premium to avoid the variability of the range of

ambiguity behind the prospect, i.e. premium to avoid the additional, second-order risk beyond that

attached to any single objective distribution. However, when the ambiguous prospect is only

associated with mean-preserving contractions over the risky one, thus without any reason to pay

premium based on a wider range of unwanted risks, as in our design, a substantial share of subjects

still chose to avoid the ambiguous prospect, in violation of predictions by MEU and KMM. In

comparison, CEU has no problem in explaining these violations.

Technically, this is because CEU is equivalent to weighting the given

von Neumann-Morgenstern utility 𝑢(·) with virtually arbitrary distributions not restricted to the

mean-preserving class of 𝑆𝐶 or 𝑆𝐶𝐾𝑀𝑀, which is in contrast a binding condition for MEU and KMM.

Thus, a CEU DM may overweight the x=0 event with some 𝑞 ∉ 𝑆𝐶 as if he is willing to pay a

premium to avoid the issue of ambiguity per se, even when it implies nothing but a mean-preserving

contraction over the simple-risk prospect. In standard Ellsberg-type studies, however, the admissible

set of distributions in the problem design of ambiguity is often the whole space ∆(𝑋), as in Ellsberg’s

two-color problem. Here, the CEU-induced weighting distribution 𝑞 also must fall within ∆(𝑋),

similar to those for MEU or KMM. In Ellsberg’s three-color problem or Machina’s (2009) Reflection

example involving partial ambiguity, there are still sufficient variations in mean and variance in both

directions associated with the ambiguous prospect, so that it is easy to overlook the case of potentially

𝑞 ∉ 𝑆𝐶 . In this sense, our novel prospect design offers a way to cleanly separate premium for avoiding

risk variability from that for avoiding the issue per se, associated with the ambiguous prospect. CEU

appears to be able to better deal with the latter. This view of premium for the issue per se is indeed

consistent with the source-dependence interpretation of ambiguity and recent neuroimaging studies.

Source preference and ambiguity premium

Many studies feature natural events in their design of ambiguous prospects, and find that decision

under uncertainty depends not only on the degree of uncertainty but also on its source. In a series of

studies, Heath and Tversky (1991) find support for the so-called competence hypothesis that people

prefer betting on their own judgment over an equiprobable chance event when they consider

themselves knowledgeable, but not otherwise. They even pay a significant premium to bet on their

judgments. These data cannot be wholly explained by aversion to ambiguity, as in the second-order

preference theories, because judgmental probabilities are more ambiguous than chance events.16 Fox

and Tversky (1995) find that ambiguity aversion is produced by the shock from the source of either

less ambiguous events or more knowledgeable individuals, and state the comparative ignorance

hypothesis.17

As a further illustration, Fox and Weber (2002) observe that preference for the ambiguous bet

takes a huge boost when preceded by a quiz of equally unfamiliar and ambiguous background rather

than by a quiz of rather familiar background. It is as if the high-familiarity quiz reminds the DM of the

rational mode of the brain, in which he actively weighs different pieces of relevant

information before making a judgment. Framed this way, facing an unfamiliar judgment may

cause a great sense of unease that would otherwise be absent. In fact, having already made a judgment in a

similarly unfamiliar quiz helps trick the brain, say, the amygdala, into lowering its suspicion and fear

of overly uncertain issues. In some sense, the willingness to pay an ambiguity premium for the

source is greatly reduced in the latter case. This view is consistent with both the

competence and the comparative ignorance hypotheses.

Abdellaoui, Baillon, Placido and Wakker (2011) introduce a novel source method to

16

Fox and Tversky (1998) also find consistent evidence on the competence hypothesis.

17

Tversky and Fox (1995) and Tversky and Wakker (1995) study likelihood sensitivity, another important

component of uncertainty attitudes that depends on source. They provide theoretical and empirical analyses of

this condition for ambiguity. Chow and Sarin (2002) make a distinction between the known, unknown and

unknowable cases of information, which is consistent with the comparative ignorance hypothesis.

quantitatively analyze uncertainty. They show how uncertainty and ambiguity attitudes for uniform

sources can be captured conveniently by graphs of preference functions. They fit the CEU model with

individual decisions and find support for the source preference hypotheses.18

Recent neuroimaging studies like Hsu, Bhatt, Adolphs, Tranel and Camerer (2005), Huettel,

Stowe, Gordon, Warner and Platt (2006), and Chew, Li, Chark and Zhong (2008) compare brain

activation of people who choose between ambiguous vs. risky options and suggest that these two

types of decision making follow different brain mechanisms and processing paths. For example,

evidence in Hsu et al. (2005) suggests that, when facing ambiguity, the amygdala, which is the most

crucial brain part associated with fear and vigilance, and the OFC activate first and deal with missing

information independent of its risk implication. 19 In a study with treatment variations between

strategic vs. non-strategic and cooperative vs. competitive conditions, Chark and Chew (2012) also

find that activities in the amygdala and OFC are positively correlated with the level of ambiguity

associated with the decisions. In some sense, when the brain switches modes facing ambiguity or a

different source of ambiguity, the DM may become much less probabilistically sophisticated. This

sudden change in sophistication may be captured by the issue or source-specific premium induced by

a CEU weighting function that reflects their pure preference for sources without elaboration on

associated variability of risk. As further support, psychological studies in general identify multiple

processes (some more effortful and analytic, others automatic, associative, and often emotion-based)

in play for decisions under risk or uncertainty (Weber and Johnson, 2008).

In fact, reducing the extent of ambiguity may increase the chance and intensity that the

calculating process of the brain becomes involved as compared to the emotional process around

amygdala activity. As an extreme thought experiment, if ambiguity is restricted to up to 10 red balls in an

urn with N=1000 balls, we expect people to treat this prospect as if it were the one with no red balls. This is

consistent with the observed reduction of inconsistency from FA to PA in our study. Along this line of

thinking, we conjecture that there must be a threshold point between almost zero and full ambiguity in

18

Early studies by Einhorn and Hogarth (1985, 1986) and Hogarth and Einhorn (1990) hold the same idea, but

do not fit within the revealed preference approach. Abdellaoui, Vossmann, and Weber (2005) analyze general

decision weights under uncertainty as functions of decision weights under risk.

19

However, Huettel et al. (2006) show that activation within the lateral prefrontal cortex was predicted by

ambiguity preference, while activation of the posterior parietal cortex was predicted by risk preference, without

implicating the amygdala. Hsu, in private communication, pointed out that the difference in implicated brain

parts between Hsu et al. (2005) and Huettel et al. (2006) might be due to a design difference, as learning might

have occurred while repeatedly facing the same task in the latter. In this sense, our design is closer to that of Hsu

et al. See Dolan (2007) for further study of the behavioral role of the amygdala and OFC.

our design at which the source premium ceases to be relevant. This is, however, up to future studies to

clarify.

In general, if the perceived mental burden is too high, people are more likely to switch to simple

heuristics such as ambiguity aversion. Force-feeding people with tutorials in favor of following

Savage’s sure-thing principle, or other similar priming, also has a good chance of reducing ambiguity

aversion per se. One conceivable way is to present a simple version of Table 1 to the subjects after

the introduction of the double-draw rule. Priming subjects into thinking in terms of risks may indeed reduce the

impact of the said source premium, which may be worth pursuing in follow-up studies.

In light of the neural study findings, this would be similar to investigating the specific triggering

mechanisms of the brain switching between different decision pathways.

Literature on estimating ambiguity models

Given the success of the prominent models of ambiguity in economic applications, many

experimental studies have attempted to estimate how well they fit lab data. For example, Halevy

(2007) tests the preference models for consistency, where subjects were asked to price four different

prospects including the original Ellsberg ones. He concludes that the actions of the majority of

subjects in his experiment are best explained by KMM (35%) and RDU/CEU20 (35%), followed by

SEU (19%) and MEU (1%) respectively. The remaining 10% cannot be explained by any theories and

are considered noise. In light of our study, we might speculate that CEU was the best-fitting model for those

35% of subjects in Halevy’s design because they were more sensitive to the source per se rather than to

second-order risks. In this sense, our studies can be considered complementary.

Subsequently, Baillon and Bleichrodt (2011) find that models predicting uniform ambiguity

aversion are clearly rejected, whereas models allowing different ambiguity attitudes for gains and losses,

such as prospect theory, α-maxmin (i.e. α-MP), CEU and a

sign-dependent version of the smooth model, can accommodate the data. In some sense, the domain-induced source shock may

require a higher source premium than the familiarity-induced one. Ahn, Choi, Gale and Kariv (2011)

find that people take measures to reduce the second-order risk induced by ambiguity and that most

20

Note that, according to Wakker (2008), what Halevy (2007) calls RNEU is virtually the same as RDU and

CEU, all of which are related to Quiggin (1982). Schmeidler (1989) proved however that under the convexity

condition CEU is equivalent to MEU.

subjects’ behavior is better explained by the α-maxmin model than by two-stage models such as

KMM. Further tests on the α-MP model include Chen, Katuscak and Ozdenoren (2007) and Hayashi

and Wada (2011).

Hey, Lotito, and Maffioletti (2008) generate ambiguity from a Bingo blower in an open and

non-manipulable manner in the lab and find that sophisticated models (such as CEU) did not perform

sufficiently better than simple theories such as SEU. In a follow-up study, Hey and Pace (2011)

evaluate the prediction power of various theoretical models and claim that “sophisticated theory does

not seem to work”, particularly the two-stage models. Andersen, Fountain, Harrison, and Rutström

(2009) use variations of the model developed by Nau (2006) to estimate subject behavior. They show

that subjects behave in a qualitatively different way towards risk than towards uncertainty. In

some sense, these papers raise questions about the universal validity of the sophisticated second-order class

of models, from different angles than ours.

All in all, these studies find mixed support for different models in various experimental designs

of ambiguous environments. Given their design purpose, they are not directly conducive to the

discussion of separation between premium for the source and premium for second-order risk, which is

the domain of our current study. However, it may be interesting to think of designs that connect our

idea with those in the above discussed literature to identify explicit premium for ambiguity issue per

se in a less restrictive setup than ours.

Modeling the source

Abdellaoui et al. (2011) assume that different sources of uncertainty lead to different weighting

functions. Alternatively, we can identify the source with a more detailed specification of the act under

consideration. Chew and Sagi (2008) model different sources as different small worlds within the

universal state space (Ω, Σ). Gajdos, Hayashi, Tallon, and Vergnaud (2008) attach explicit set

description of admissible distributions on the state space to an act. In contrast, we identify the source

with the set of admissible (first- or second-order) distributions on the outcome space naturally

associated with the prospect. Given our source-specific acts, one may imagine that an MEU or KMM

DM does not change his functional form when making decision under uncertainty. For different

natural sources 𝑓, however, the admissible set 𝑆𝑓 may change subjectively. With CEU, since the

choice of weighting distribution is not limited to 𝑆𝑓 , it is indeed more convenient to think of the

source as causing different 𝑤.

Note that the state space is an auxiliary notion that greatly facilitates axiomatic approaches to

modeling decisions under uncertainty. For purpose of empirical studies, however, the more intuitive

and primitive notion of the outcome space is the primary object of concern. The notion of the

source-specific act is an attempt to translate empirically relevant statements from the former to the

latter with minimal loss of generality.

4.1. Excursion: Machina’s Reflection paradox and source-specific act

Machina (2009) points out that standard formulation of CEU satisfies the tail-event separability

property, which may cause decision paradoxes. In his Reflection example (Table 3), theory predicts

𝑓5 ≿ 𝑓6 ⟺ 𝑓7 ≿ 𝑓8. L’Haridon and Placido (2010) show that 72-88% of subjects behaved in

violation of this prediction, in various experimental setups. Baillon, L’Haridon and Placido (2011)

subsequently demonstrate that this paradox persists also in many other models, including MEU and

KMM, with the most popular assumption of a concave second-order utility index 𝑣.

We believe the reason behind this paradox is hidden in the fact that standard derivations of these

preference notions are based on axioms stated on the general space of abstract acts, without explicitly

taking into account the source-specific informational circumstances associated with the acts. For this

reason, we introduced the term source-specific act that enhances a standard act with a set of lotteries

that are admissible under the circumstance, and based our formulation of MEU, KMM, and CEU on

it.

Table 3. Machina’s (2009) Reflection example

        50 balls        50 balls
        E1      E2      E3      E4
f5      $400    $800    $400    $0
f6      $400    $400    $800    $0
f7      $0      $800    $400    $400
f8      $0      $400    $800    $400

For MEU (and α-MP respectively) for example, Machina’s original acts 𝑓5, 𝑓6, 𝑓7, 𝑓8 will be

properly enhanced to source-specific ones as (𝑓5, 𝑆), (𝑓6, 𝑆′), (𝑓7, 𝑆′), (𝑓8, 𝑆), where for 𝑋 =

{0, 400, 800},

𝑆 = {𝑝 ∈ ∆(𝑋): 𝑝0 ∊ [0, .5], 𝑝400 ∊ [0, 1], 𝑝0 + 𝑝400 ≤ 1} and

𝑆′ = {𝑝 ∈ ∆(𝑋): 𝑝0 ∊ [0, .5], 𝑝400 = .5}

with 𝑝 = (𝑝0, 𝑝400, 1 − 𝑝0 − 𝑝400). It is trivial to conclude that MEU(𝑓5, 𝑆) = MEU(𝑓8, 𝑆) and

MEU(𝑓6, 𝑆′) = MEU(𝑓7, 𝑆′) by definition (2a), i.e. (𝑓5, 𝑆) ∼ (𝑓8, 𝑆) and (𝑓6, 𝑆′) ∼ (𝑓7, 𝑆′), as the

Ω-based function 𝑓𝑘 is rendered irrelevant because it contains no extra information not already in 𝑆.

Consequently, 𝑓5 ≿ 𝑓6 ⟺ 𝑓8 ≿ 𝑓7, consistent with observed behavior. In other words, the

Reflection example causes no paradox for our source-enhanced notion of MEU, in contrast to Baillon

et al. (2011).
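The indifference claims can be illustrated by enumerating the outcome lotteries that each act induces. The sketch below assumes an urn of 100 balls in which 50 are E1 or E2 and 50 are E3 or E4, each in unknown proportion, as in Table 3; the helper names and the square-root utility are hypothetical and serve only to show that a set-based evaluation cannot distinguish acts whose lottery sets coincide.

```python
from fractions import Fraction
from math import sqrt

# Payoffs of Machina's acts on the events (E1, E2, E3, E4), as in Table 3.
ACTS = {
    "f5": (400, 800, 400, 0),
    "f6": (400, 400, 800, 0),
    "f7": (0, 800, 400, 400),
    "f8": (0, 400, 800, 400),
}

def induced_lotteries(payoffs, half=50):
    """All lotteries (p0, p400, p800) over the admissible urn compositions.

    Assumes 2 * half balls: `a` of the first `half` are E1 (the rest E2) and
    `b` of the second `half` are E3 (the rest E4), with a and b unknown.
    """
    total = 2 * half
    lotteries = set()
    for a in range(half + 1):
        for b in range(half + 1):
            balls_per_event = (a, half - a, b, half - b)
            mass = {0: 0, 400: 0, 800: 0}
            for outcome, balls in zip(payoffs, balls_per_event):
                mass[outcome] += balls
            lotteries.add(tuple(Fraction(mass[x], total) for x in (0, 400, 800)))
    return lotteries

def min_expected_utility(lotteries, u=sqrt):
    """Worst-case expected utility over a set of lotteries (hypothetical u)."""
    return min(sum(float(p) * u(x) for p, x in zip(lot, (0, 400, 800)))
               for lot in lotteries)

sets = {name: induced_lotteries(payoffs) for name, payoffs in ACTS.items()}
print(sets["f5"] == sets["f8"], sets["f6"] == sets["f7"], sets["f5"] == sets["f6"])
print(min_expected_utility(sets["f5"]) == min_expected_utility(sets["f8"]))
```

Because the evaluation depends on the act only through its set of lotteries, the equalities hold for any utility function, not just the one chosen here.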

In our study, the empirical violation against α-MP (MEU) and KMM persists despite this

source-specific modification. In the case of KMM, it even holds for every monotone second-order

utility index 𝑣, while Baillon et al. (2011) challenge only the class of concave 𝑣’s. In summary,

while the paradox around the Reflection example suggests we should incorporate more information

details into the preference analysis independent of the specific models, our results suggest that

probabilistic models like α-MP (MEU) and KMM might not be as robust as the weighting function

approach of CEU in general.

5. Conclusion

When people reveal ambiguity aversion as in Ellsberg-type decisions, the conventional belief as

expressed in α-MP/MEU and KMM, among many others, is that their ambiguity premium is paid to

avoid the variability of a wider range of risk, or the second-order risk. The source dependence

approach postulates a source premium at the core of ambiguity aversion, which is consistent with

CEU and has been corroborated in recent neuroimaging studies. By restricting attention to a

mean-preserving class of prospects via a novel design, we manage to cleanly disentangle these two

effects. The new design allows us to separate people who are avoiding ambiguity per se from those

avoiding second-order risk. Although many people’s decisions are consistent with the prediction of

α-MP/MEU and KMM, we robustly find that a substantial number of people still show ambiguity

aversion that cannot be attributed to aversion to second-order risks, which indicates that their

premium is paid to avoid the issue of ambiguity per se.

Note that in our design with full ambiguity CEU appears to be a more complex and flexible

model than the competing ones, by possessing the additional dimension of variation for source

premium. But it might not always be the best-fitting model for statistical analysis due to issues like

overfitting. With the insight of our study, further designs are needed to explore the conditions under

which a simpler model without a parameter for source premium may be methodologically more

appropriate. Also, the normal brain is endowed with decision pathways of both the rational

(second-order risk) and the emotional (source aversion) kind, either of which could be triggered under

certain conditions. Thus, behavior in real-world decision-making situations may be deliberately

manipulated by either priming people into aversion to ambiguity per se or explicitly training them to

think in terms of second-order risks. Future studies are required to further explore the boundary and extent of

the source premium identified in the current study.

REFERENCES

Abdellaoui, Mohammed, Aurélien Baillon, Laetitia Placido and Peter P. Wakker. 2011. “The Rich

Domain of Uncertainty: Source Functions and Their Experimental Implementation.” American

Economic Review, 101(2): 695-723.

Abdellaoui, Mohammed, Frank Vossmann, and Martin Weber. 2005. “Choice-Based Elicitation and

Decomposition of Decision Weights for Gains and Losses under Uncertainty.” Management Science,

51(9): 1384–1399.

Ahn, David, Syngjoo Choi, Douglas Gale, and Shachar Kariv. 2011. “Estimating Ambiguity Aversion

in a Portfolio Choice Experiment.” Mimeo.

Andersen, Steffen, Glenn W. Harrison, Morten I. Lau, and E. Elisabet Rutström. 2006. “Elicitation

Using Multiple Price List Formats.” Experimental Economics, 9(4): 383–405.

Andersen, Steffen, John Fountain, Glenn W. Harrison, and E. Elisabet Rutström. 2009. “Estimating

Aversion to Uncertainty.” Mimeo.

Arló-Costa, Horacio, and Jeffrey Helzner. 2009. “Iterated Random Selection as Intermediate Between

Risk and Uncertainty.” Paper presented at 6th International Symposium on Imprecise Probability:

Theories and Applications, Durham, United Kingdom.

Baillon, Aurélien, Olivier L’Haridon, and Laetitia Placido. 2011. “Ambiguity Models and the Machina

Paradoxes.” American Economic Review, 101(4): 1547-60.

Baillon, Aurélien and Han Bleichrodt. 2011. “Testing Ambiguity Models through the Measurement of

Probabilities for Gains and Losses.” Mimeo.

Becker, Gordon M., Morris H. DeGroot, and Jacob Marschak. 1964. “Measuring Utility by a Single

Response Sequential Method.” Behavioral Science, 9(3): 226–232.

Camerer, Colin, and Martin Weber. 1992. “Recent Developments in Modeling Preferences:

Uncertainty and Ambiguity.” Journal of Risk and Uncertainty, 5(4): 325-37.

Casadesus-Masanell, Ramon, Peter Klibanoff, and Emre Ozdenoren. 2000. “Maxmin Expected Utility

over Savage Acts with a Set of Priors.” Journal of Economic Theory, 92(1): 35–65.

Chark, Robin, and Soo Hong Chew. 2012. “A Neuroimaging Study of Preference for Strategic

Uncertainty.”

Chen, Hui, Neng Ju, and Jianjun Miao. 2009. “Dynamic Asset Allocation with Ambiguous Return

Predictability.” Mimeo.

Chen, Yan, Peter Katušcak, and Emre Ozdenoren. 2007. “Sealed Bid Auctions with Ambiguity: Theory

and Experiments.” Journal of Economic Theory, 136(1): 513-35.

Chew, Soo Hong, and Jacob S. Sagi. 2008. “Small Worlds: Modeling Attitudes toward Sources of

Uncertainty.” Journal of Economic Theory, 139 (1): 1-24.

Chew, Soo Hong, King King Li, Robin Chark, and Songfa Zhong. 2008. “Source Preference and

Ambiguity Aversion: Models and Evidence from Behavioral and Neuroimaging Experiments.” In

Neuroeconomics. Advances in Health Economics and Health Services Research, Vol. 20, ed. Daniel

Houser and Kevin McCabe, 179-201. Bingley, UK: JAI Press.

Chow, Clare Chua, and Rakesh K. Sarin. 2002. “Known, Unknown and Unknowable Uncertainties.”

Theory and Decision, 52(2): 127–138.

Dolan, R.J. 2007. “The Human Amygdala and Orbital Prefrontal Cortex in Behavioural Regulation.”

Phil. Trans. R. Soc. B, 362(1481): 787-799.

Dow, James, and Sergio Ribeiro da Costa Werlang. 1992. “Uncertainty Aversion, Risk Aversion, and

the Optimal Choice of Portfolio.” Econometrica, 60(1): 197-204.

Eichberger, Jürgen, and David Kelsey. 2009. “Ambiguity.” In P. Anand, P. Pattanaik and C. Puppe

(eds.), Handbook of Rational and Social Choice, OUP.

Einhorn, Hillel J., and Robin M. Hogarth. 1985. “Ambiguity and Uncertainty in Probabilistic

Inference.” Psychological Review, 92(4): 433–461.

Einhorn, Hillel J., and Robin M. Hogarth. 1986. “Decision Making under Ambiguity.” Journal of

Business, 59(4): 225–250.

Ellsberg, Daniel. 1961. “Risk, Ambiguity and the Savage Axioms.” Quarterly Journal of Economics,

75(4): 643-669.

Epstein, Larry G., and Tan Wang. 1994. “Intertemporal Asset Pricing under Knightian Uncertainty.”

Econometrica, 62(2): 283-322.

Ergin, Haluk, and Faruk Gul. 2009. “A Subjective Theory of Compound Lotteries.” Journal of

Economic Theory, 144(3): 899-929.

Fox, Craig R., and Amos Tversky. 1995. “Ambiguity Aversion and Comparative Ignorance.” Quarterly

Journal of Economics, 110(3): 585-603.

Fox, Craig R., and Amos Tversky. 1998. “A Belief-Based Account of Decision under Uncertainty.”

Management Science, 44(7): 879–895.

Fox, Craig R., and Martin Weber. 2002. “Ambiguity aversion, comparative ignorance, and decision

context.” Organizational Behavior and Human Decision Processes, 88(1): 476–498.

Gajdos, Thibault, Takashi Hayashi, Jean-Marc Tallon, and Jean-Christophe Vergnaud. 2008. “Attitude

toward Imprecise Information,” Journal of Economic Theory, 140(1): 23-56.

Ghirardato, Paolo, Fabio Maccheroni, and Massimo Marinacci. 2004. “Differentiating Ambiguity and

Ambiguity Attitude.” Journal of Economic Theory, 118(2): 133–73.

Gilboa, Itzhak, and David Schmeidler. 1989. “Maxmin Expected Utility with a Non-Unique Prior.”

Journal of Mathematical Economics, 18(2): 141-153.

Halevy, Yoram. 2007. “Ellsberg Revisited: An Experimental Study.” Econometrica, 75(2): 503-536.

Hansen, Lars Peter. 2007. “Beliefs, Doubts and Learning: The Valuation of Macroeconomic Risk.”

American Economic Review, 97(2): 1144-52.

Hansen, Lars Peter, and Thomas J. Sargent. 2008. “Fragile Beliefs and the Price of Model Uncertainty.”

Mimeo.

Harrison, Glenn W., and E. Elisabet Rutström. 2008. “Risk Aversion in Experiments.” Research in

Experimental Economics, 12: 41-196.

Hayashi, Takashi, and Ryoko Wada. 2011. “Choice with imprecise information: an experimental

approach.” Theory and Decision, 69(3): 355-373.

Heath, Chip, and Amos Tversky. 1991. “Preference and Belief: Ambiguity and Competence in Choice

under Uncertainty.” Journal of Risk and Uncertainty, 4(1): 5-28.

Hey, John D., Gianna Lotito, and Anna Maffioletti. 2008. “The Descriptive and Predictive Adequacy

of Theories of Decision Making under Uncertainty/Ambiguity.” Journal of Risk and Uncertainty,

41(2): 81-111.

Hey, John D., and Noemi Pace. 2011. “The Explanatory and Predictive Power of Non

Two-Stage-Probability Theories of Decision Making Under Ambiguity.” Mimeo.

Hogarth, Robin M., and Hillel J. Einhorn. 1990. “Venture Theory: A Model of Decision Weights,”

Management Science, 36(7): 780–803.

Holt, Charles A., and Susan K. Laury. 2002. “Risk Aversion and Incentive Effects.” American

Economic Review, 92(5):1644–55.

Hsu, Ming, Meghana Bhatt, Ralph Adolphs, Daniel Tranel, and Colin F. Camerer. 2005. “Neural

Systems Responding to Degrees of Uncertainty in Human Decision-Making.” Science, 310:

1680-1683.

Huettel, Scott A., C. Jill Stowe, Evan M. Gordon, Brent T. Warner, and Michael L. Platt. 2006.

“Neural signatures of economic preferences for risk and ambiguity.” Neuron, 49(5): 765–775.

Ju, Nengjiu, and Jianjun Miao. 2009. “Ambiguity, Learning, and Asset Returns.” Econometrica,

forthcoming.

Klibanoff, Peter, Massimo Marinacci, and Sujoy Mukerji. 2005. “A Smooth Model of Decision

Making under Ambiguity.” Econometrica, 73 (6): 1849–1892.

L’Haridon, Olivier, and Laetitia Placido. 2010. “Betting on Machina’s Reflection Example: an

Experiment on Ambiguity.” Theory and Decision, 69 (3): 375-393.

Machina, Mark J. 2009. “Risk, Ambiguity, and the Rank-Dependence Axioms.” American Economic

Review, 99(1): 385–92.

Mukerji, Sujoy, and Jean-Marc Tallon. 2004. “An Overview of Economic Applications of David

Schmeidler’s Models of Decision Making under Uncertainty.” I. Gilboa eds, Routledge Publishers,

Uncertainty in Economic Theory: A collection of essays in honor of David Schmeidler’s 65th

birthday.

Nau, Robert F. 2006. “Uncertainty Aversion with Second-Order Utilities and Probabilities.”

Management Science, 52(1), 136–145.

Quiggin, John C. 1982. “A Theory of Anticipated Utility.” Journal of Economic Behavior and

Organization, 3(4): 323-343.

Sapienza, Paola, Luigi Zingales, and Dario Maestripieri. 2009. “Gender Difference in Financial Risk

Aversion and Career Choices are Affected by Testosterone.” PNAS, 106, 15268-15273.

Savage, Leonard J. 1954. The Foundations of Statistics. John Wiley & Sons, New York.

Schmeidler, David. 1989. “Subjective Probability and Expected Utility without Additivity.”

Econometrica 57(3): 571-587.

Segal, Uzi. 1987. “The Ellsberg Paradox and Risk Aversion: An Anticipated Utility Approach.”

International Economic Review, 28(1): 175–202.

Segal, Uzi. 1990. “Two-Stage Lotteries without the Reduction Axiom.”

Econometrica, 58(2): 349–377.

Seo, Kyoungwon. 2009. “Ambiguity and Second Order Belief.” Econometrica, 77(5): 1575-1605.

Stecher, Jack Douglas, Timothy W. Shields, and John Wilson Dickhaut. 2011. “Generating Ambiguity

in the Laboratory.” Management Science, forthcoming.

Trautmann, Stefen T., Ferdinand M. Vieider, and Peter P. Wakker. 2011. “Preference Reversals for

Ambiguity Aversion.” Management Science, 57(7): 1320-33.

Tversky, Amos, and Craig R. Fox. 1995. “Weighing Risk and Uncertainty.” Psychological Review,

102(2), 269–283.

Tversky, Amos, and Daniel Kahneman. 1992. “Advances in Prospect Theory: Cumulative

Representation of Uncertainty.” Journal of Risk and Uncertainty, 5(4): 297-323.

Tversky, Amos, and Peter P. Wakker. 1995. “Risk Attitudes and Decision Weights.” Econometrica,

63(6): 1255–1280.

Wakker, Peter P. 2008. “Uncertainty.” In Lawrence Blume & Steven N. Durlauf (eds.), The New

Palgrave: A Dictionary of Economics, 6780–6791, Macmillan Press: London.

Weber, Elke U., and Eric J. Johnson. 2008. “Decisions under Uncertainty: Psychological, Economic,

and Neuroeconomic Explanations of Risk Preference.” In: P. Glimcher, C. Camerer, E. Fehr, and R.

Poldrack (Eds.), Neuroeconomics: Decision Making and the Brain. New York: Elsevier.

Appendix A: Instructions (Slides translated from Chinese original)

This is an economic decision experiment supported by the National Research Fund. Please listen to

and read the instructions carefully, and make your choices seriously. Depending on your choices and

luck, you can earn different amounts of money in the experiment. Payments are confidential, and

no other participant will be informed about the amount you earn. From now until the end of the

experiment, communication with other participants is not permitted. If you have a question, please

raise your hand and one of us will come to your desk to answer it.

[used in the FA/PA treatment] The experiment comprises three decision problems. At the end

of the experiment, you will make a random draw to select one from the three decision problems

in today’s experiment. We will pay you fully based on the realization of your decision in that

problem.

[used in the FAR treatment] The experiment comprises three decision problems. Because of

the time constraint, at the end of the experiment, we will randomly choose 3 students for real

monetary payment. Every selected student will make a random draw to select one from the three

decision problems in today’s experiment. We will pay you fully based on the realization of your

decision in that problem.

We will now start with Problem 1.

[Slide 1]

[used in the FA/FAR treatment]

Problem 1: Making a choice between option A and urn B

Urn B contains 5 red balls and 5 white balls.

[Figure: urn B]

Payoff rule for urn B: Two balls are to be drawn from urn B with replacement. You get 50 Yuan

if the first ball drawn is red and nothing if it is white. Conversely, you get 50 Yuan if the second

ball drawn is white and nothing if it is red. You get paid the sum of money earned in the two

draws.

[used in the PA treatment]

Problem 1: Making a choice between option A and urn B

Urn B contains 8 red balls and 8 white balls.

[Figure: urn B]

Payoff rule for urn B: Two balls are to be drawn from urn B with replacement. You get 50 Yuan

if the first ball drawn is red and nothing if it is white. Conversely, you get 50 Yuan if the second

ball drawn is white and nothing if it is red. You get paid the sum of money earned in the two

draws.
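
The payoff rule for urn B can be checked numerically. The following minimal sketch is not part of the slides shown to subjects; the function names are hypothetical. It computes the outcome distribution of the double draw for an urn with a known number of red balls and illustrates that the mean stays at 50 Yuan while the variance depends on the color balance.

```python
from fractions import Fraction

def double_draw_lottery(red, total, prize=50):
    """Outcome distribution of two draws with replacement, where red wins
    on the first draw and white wins on the second draw."""
    p = Fraction(red, total)      # chance of red on any single draw
    q = 1 - p                     # chance of white on any single draw
    return {
        0: q * p,                 # white first, red second: no win
        prize: p * p + q * q,     # same color twice: exactly one win
        2 * prize: p * q,         # red first, white second: two wins
    }

def mean_and_variance(lottery):
    mean = sum(x * pr for x, pr in lottery.items())
    var = sum((x - mean) ** 2 * pr for x, pr in lottery.items())
    return mean, var

# Urn B in the FA/FAR and PA treatments, plus two unbalanced urns for contrast
for red, total in [(5, 10), (8, 16), (1, 10), (0, 10)]:
    mean, var = mean_and_variance(double_draw_lottery(red, total))
    print(f"{red} red of {total}: mean = {mean}, variance = {var}")
```

Under this rule the 50-50 urn has the largest variance among all compositions, and an urn of a single color pays 50 Yuan for sure.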

[Slide 2] Decision sheet for Problem 1

Make a choice by checking either option A or urn B in each row

Situation    Payoff of Option A    Option A    Urn B
1            5 Yuan                □           □
2            10 Yuan               □           □
3            15 Yuan               □           □
…            …
9            45 Yuan               □           □
10           50 Yuan               □           □
…            …
19           95 Yuan               □           □
20           100 Yuan              □           □

[Slide 3] At the end of the experiment, if your payoff is decided by Problem 1, the process of realizing

the payment is as follows.

You are asked to randomly draw one of the twenty situations in option A, and your choice (A or

B) in this situation will decide how you are paid. For example, if you draw situation 1 and your

choice in situation 1 is “option A” (to accept fixed payoff of 5 Yuan and give up drawing balls

from urn B), then you will be paid 5 Yuan immediately. If you draw situation 1 and your choice

in situation 1 is “urn B” (to draw balls from urn B and give up fixed payoff of 5 Yuan), then we

will let you draw balls from urn B to realize your payoffs. In another example, if you draw

situation 20 and your choice in situation 20 is “option A” (to accept fixed payoff of 100 Yuan and

give up drawing balls from urn B), then you will be paid 100 Yuan immediately. If you draw

situation 20 and your choice in situation 20 is “urn B” (to draw balls from urn B and give up

fixed payoff of 100 Yuan), then we will let you draw balls from urn B to realize your payoffs. If

you draw any other situation, your payoff will be realized in the same way.
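
The payment procedure for Problem 1 can be sketched as a short simulation. This is only an illustration under stated assumptions: the names `realize_problem1` and `draw_from_urn` are hypothetical, the situation is assumed to be drawn uniformly from the twenty rows, and the example subject is invented.

```python
import random

PRIZE = 50  # Yuan paid per winning draw, as in the payoff rule for urn B

def draw_from_urn(red, total, rng):
    """Two draws with replacement: red wins first, white wins second."""
    first_red = rng.randrange(total) < red
    second_red = rng.randrange(total) < red
    return PRIZE * first_red + PRIZE * (not second_red)

def realize_problem1(choices, rng, red=5, total=10):
    """choices maps each situation 1..20 to 'A' (fixed payoff) or 'B' (urn)."""
    situation = rng.randint(1, 20)           # randomly drawn row (assumed uniform)
    if choices[situation] == "A":
        return situation, 5 * situation      # fixed payoff: 5, 10, ..., 100 Yuan
    return situation, draw_from_urn(red, total, rng)

# Hypothetical subject who takes urn B until option A pays 50 Yuan or more
choices = {s: ("A" if 5 * s >= 50 else "B") for s in range(1, 21)}
rng = random.Random(0)
print(realize_problem1(choices, rng))
```

The row at which a subject switches from urn B to option A brackets that subject's certainty equivalent for urn B within a 5 Yuan step.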

[Slide 4]

[used in the FA/FAR treatment]

Problem 2: Make a choice between urn B and urn C

Urn C contains a mixture of 10 red and white balls. The numbers of red and white balls are unknown;

the composition could be anything from 0 red balls (and 10 white balls) to 10 red balls (and 0 white

balls).

Payoff rule for urn C: same as Payoff rule for urn B.

[Figure: urns B and C]

[used in the PA treatment]

Problem 2: Make a choice between urn B and urn C

Urn C contains a mixture of 16 red and white balls. The numbers of red and white balls are unknown

but satisfy the constraint that there are either at least 6 more white balls than red balls or at

least 6 more red balls than white balls in the urn; in other words, |number of red balls −

number of white balls| ≥ 6.

Payoff rule for urn C: same as Payoff rule for urn B.

[Figure: urns B and C]

[Slide 5]

[used in the PA treatment]

Quiz: Is there any possibility that urn C contains 9 red balls and 7 white balls?

The answer: No, because |9 − 7| = 2 < 6.
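
The constraint on urn C in the PA treatment can be enumerated directly. The following minimal sketch (the function name is hypothetical) lists every number of red balls that satisfies |number of red − number of white| ≥ 6 in an urn of 16 balls, and confirms the quiz answer.

```python
TOTAL = 16     # balls in urn C (PA treatment)
MIN_GAP = 6    # required minimum difference between the two color counts

def admissible_red_counts(total=TOTAL, min_gap=MIN_GAP):
    """All red-ball counts whose gap to the white-ball count is at least min_gap."""
    return [red for red in range(total + 1)
            if abs(red - (total - red)) >= min_gap]

allowed = admissible_red_counts()
print(allowed)        # [0, 1, 2, 3, 4, 5, 11, 12, 13, 14, 15, 16]
print(9 in allowed)   # False: 9 red and 7 white differ by only 2
```

The twelve admissible counts coincide with the ticket numbers used for urn D in Problem 3 of the PA treatment.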

[Slide 6] Decision sheet for Problem 2

Question: If you are asked to make a choice between urn B and urn C, which urn will you choose?

□Urn B

□Urn C

[Slide 7]

[used in the FA/FAR treatment]

Problem 3: Make a choice between urn B and urn D

Urn D contains a mixture of 10 red and white balls. The number of red and white balls is

determined as follows: one ticket is drawn from a bag containing 11 tickets with the numbers 0 to

10 written on them. The number written on the drawn ticket will determine the number of red

balls in the urn. For example, if the number 3 is drawn, then there will be 3 red balls and 7 white

balls in the urn.

Payoff rule for urn D: same as Payoff rule for urn B.

[Figure: Draw the number of red balls in urn D. A bag of tickets numbered 0 to 10, shown with urns B and D.]

[used in the PA treatment]

Problem 3: Make a choice between urn B and urn D

Urn D contains a mixture of 16 red and white balls. The number of red and white balls is

determined as follows: one ticket is drawn from a bag containing 12 tickets with the numbers 0 to

5, and 11 to 16 written on them. The number written on the drawn ticket will determine the

number of red balls in the urn. For example, if the number 3 is drawn, then there will be 3 red

balls and 13 white balls in the urn.

Payoff rule for urn D: same as Payoff rule for urn B.

[Figure: Draw the number of red balls in urn D. A bag of tickets numbered 0 to 5 and 11 to 16, shown with urns B and D.]
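
Because the composition of urn D is set by an objective ticket draw, its payoff is a two-stage lottery. The following minimal sketch reduces it to a single-stage distribution. It assumes that every ticket is equally likely and that the two stages are multiplied through (reduction of compound lotteries); neither assumption is stated on the slides, and the function names are hypothetical.

```python
from fractions import Fraction

def reduced_lottery(red_counts, total, prize=50):
    """Single-stage distribution of urn D, assuming equally likely tickets."""
    weight = Fraction(1, len(red_counts))
    dist = {0: Fraction(0), prize: Fraction(0), 2 * prize: Fraction(0)}
    for red in red_counts:
        p = Fraction(red, total)               # chance of red given this ticket
        q = 1 - p
        dist[0] += weight * q * p              # white first, red second
        dist[prize] += weight * (p * p + q * q)
        dist[2 * prize] += weight * p * q      # red first, white second
    return dist

def variance(dist):
    mean = sum(x * pr for x, pr in dist.items())
    return sum((x - mean) ** 2 * pr for x, pr in dist.items())

# FA/FAR treatment: tickets 0..10, urn of 10 balls
fa = reduced_lottery(range(0, 11), total=10)
# PA treatment: tickets 0..5 and 11..16, urn of 16 balls
pa = reduced_lottery(list(range(0, 6)) + list(range(11, 17)), total=16)

print("FA urn D:", fa, "variance:", variance(fa))
print("PA urn D:", pa, "variance:", float(variance(pa)))
```

Under these assumptions urn D has the same mean as urn B in both treatments but a smaller variance, since the 50-50 composition of urn B carries the largest variance of any single composition.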

[Slide 8] Decision sheet for Problem 3

Question: If you are asked to make a choice between urn B and urn D, which urn will you choose?

□Urn B

□Urn D

Gender: □Male □Female

Appendix B1: The Comprehension Tests

B1.1 Motivation and design

At the core of our study is the double-draw, alternate-color-win lottery design associated with

Choices B, C, and D. Since it is novel in the literature, it is legitimate to ask whether subjects

fully understood its statistical implications and whether they behave consistently

when comparing simple objective lotteries such as Choice B.

To test for comprehension, we conducted an additional session with freshman students from the same

cohort as in our PA treatment, with the Instructions given below in section B1.1. They first faced

Problem 1, where they were asked to match outcome distributions (those in Table 1) with urns of 6

different color compositions in the N=5 condition. Correct answers were rewarded with money payments.

After handing in their Problem 1 decisions, they faced the task of revealing their preferences over four

different objective urns with our novel double-draw rule. Three of the 30 participants

were randomly chosen to receive monetary payment according to their decisions, the details of which

are given in B1.1. The session lasted 15 minutes, excluding time for payment. The average payoff was 12 Yuan.

B1.2 The results

In Problem 1, 28 out of 30 subjects answered all 6 questions correctly. The remaining two

subjects made 2 mistakes each. The observed failure ratio of 6.67% (=2/30, [0.82, 22.07]) is similar to

6.88% (=11/160, [3.48, 11.97]), the ratio of subjects with anomalous decisions in Problem 1 of

our PA and FA treatments. The equality-of-proportions test yields p=0.4835. Thus, we conclude that,

aside from regular noise, there is no reason to believe that subjects in our study had

comprehension problems that would jeopardize the main conclusions of this paper.
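
The figures in this comparison can be recomputed with the standard library alone. The text does not state which interval or test variant was used. The sketch below assumes exact (Clopper-Pearson) 95% intervals for the bracketed ranges and a one-sided, pooled two-proportion z-test for the p-value; under these assumptions it gives values close to those reported. The function names are hypothetical.

```python
from math import comb, erf, sqrt

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k + 1))

def bisect_increasing(f, target, tol=1e-10):
    """Solve f(p) = target on [0, 1] for a function f that increases in p."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def exact_interval(k, n, alpha=0.05):
    """Clopper-Pearson interval (assumed to be the one behind the brackets)."""
    lower = 0.0 if k == 0 else bisect_increasing(
        lambda p: 1 - binom_cdf(k - 1, n, p), alpha / 2)
    upper = 1.0 if k == n else bisect_increasing(
        lambda p: 1 - binom_cdf(k, n, p), 1 - alpha / 2)
    return lower, upper

def pooled_z_test(k1, n1, k2, n2):
    """Two-proportion z statistic and its lower-tail (one-sided) p-value."""
    pooled = (k1 + k2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (k1 / n1 - k2 / n2) / se
    return z, 0.5 * (1 + erf(z / sqrt(2)))

for k, n in [(2, 30), (11, 160)]:
    lo, hi = exact_interval(k, n)
    print(f"{k}/{n} = {100 * k / n:.2f}%, interval [{100 * lo:.2f}, {100 * hi:.2f}]")

z, p_value = pooled_z_test(2, 30, 11, 160)
print(f"z = {z:.4f}, one-sided p = {p_value:.4f}")
```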

In Problem 2, 24 out of 30 subjects ranked the four urns in a standard manner that makes them

clearly identifiable as either risk averse (12 obs.) with A(1) = A(9) > A(3) > A(5), or risk seeking (10

obs.) with A(5) > A(3) > A(1) = A(9), or risk neutral (2 obs.) with A(5) = A(3) = A(1) = A(9). Two had

the ranking A(5) > A(1) = A(9) > A(3), which can be viewed as consistent with prospect theory with

an unusual reference point. The remaining four displayed the rankings of A(5) > A(9) > A(3) > A(1),