Implementation of K-means algorithm using different Map

advertisement

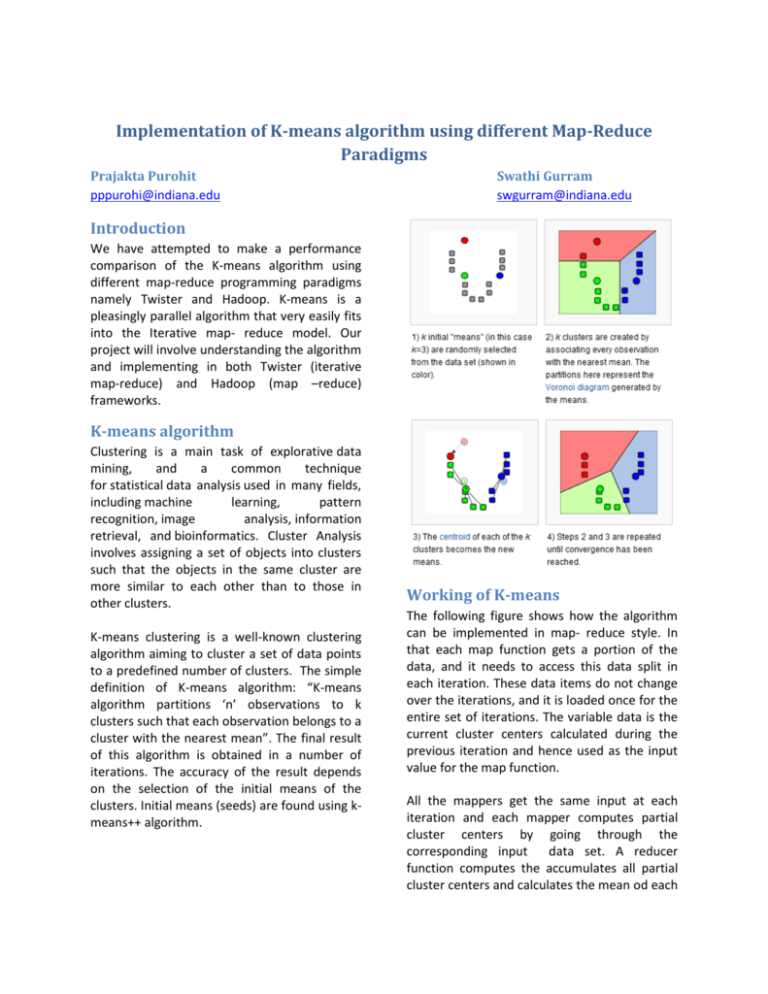

Implementation of K-means algorithm using different Map-Reduce Paradigms

Prajakta Purohit (pppurohi@indiana.edu)
Swathi Gurram (swgurram@indiana.edu)

Introduction

We compare the performance of the K-means algorithm under two map-reduce programming paradigms, Twister and Hadoop. K-means is a pleasingly parallel algorithm that fits easily into the iterative map-reduce model. Our project involves understanding the algorithm and implementing it in both the Twister (iterative map-reduce) and Hadoop (map-reduce) frameworks.

K-means algorithm

Clustering is a main task of exploratory data mining and a common technique for statistical data analysis. It is used in many fields, including machine learning, pattern recognition, image analysis, information retrieval, and bioinformatics. Cluster analysis assigns a set of objects to clusters so that objects in the same cluster are more similar to each other than to objects in other clusters.

K-means clustering is a well-known algorithm that groups a set of data points into a predefined number of clusters. A simple definition: "K-means partitions n observations into k clusters such that each observation belongs to the cluster with the nearest mean." The final result is obtained over a number of iterations, and its accuracy depends on the choice of the initial cluster means. We find the initial means (seeds) with the k-means++ algorithm.

Working of K-means

The following figure shows how the algorithm can be implemented in map-reduce style. Each map function gets a portion of the data and needs to access this data split in every iteration. These data items do not change across iterations, so they are loaded once for the entire run. The variable data is the set of cluster centers computed in the previous iteration, which is passed as the input value to the map function.
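The map and reduce roles of one iteration can be sketched without any framework. This is a minimal illustration, not the authors' Twister or Hadoop code, and it uses no Twister or Hadoop API. It assumes points and centers are tuples or lists of floats and that distance is squared Euclidean. The function names `closest`, `map_partition` and `reduce_partials` are hypothetical. Each mapper emits per-cluster coordinate sums and counts instead of raw points, so the reducer only has to add them up and divide.

```python
# Framework-free sketch of one K-means iteration in map-reduce style.
# Assumptions: points/centers are tuples or lists of floats; squared Euclidean distance.

def closest(point, centers):
    """Return the index of the center nearest to `point`."""
    return min(
        range(len(centers)),
        key=lambda j: sum((p - c) ** 2 for p, c in zip(point, centers[j])),
    )

def map_partition(partition, centers):
    """Map step: for one cached data split, emit {cluster index: (coordinate sums, count)}."""
    dim = len(centers[0])
    partial = {}
    for point in partition:
        j = closest(point, centers)
        sums, count = partial.get(j, ([0.0] * dim, 0))
        partial[j] = ([s + x for s, x in zip(sums, point)], count + 1)
    return partial

def reduce_partials(partials, old_centers):
    """Reduce step: add up the partial sums from every mapper and divide by the counts."""
    dim = len(old_centers[0])
    totals = {}
    for partial in partials:
        for j, (sums, count) in partial.items():
            total_sums, total_count = totals.get(j, ([0.0] * dim, 0))
            totals[j] = ([a + b for a, b in zip(total_sums, sums)], total_count + count)
    # Assumption: a cluster that received no points keeps its previous center.
    return [
        [s / totals[j][1] for s in totals[j][0]] if j in totals else list(old_centers[j])
        for j in range(len(old_centers))
    ]

# Usage: two data splits (one per mapper) and two current centers.
splits = [[(0.0, 0.0), (0.0, 2.0)], [(10.0, 10.0), (10.0, 12.0)]]
centers = [(1.0, 1.0), (9.0, 9.0)]
partials = [map_partition(split, centers) for split in splits]
print(reduce_partials(partials, centers))  # [[0.0, 1.0], [10.0, 11.0]]
```

Sending sums and counts keeps the data passed from each mapper to the reducer proportional to k rather than to the size of the split. The policy for empty clusters is a choice made for this sketch only.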
All mappers receive the same cluster centers in a given iteration, and each mapper computes partial cluster centers by going through its own portion of the input data. A reducer accumulates all the partial cluster centers and computes the mean of each cluster to produce the new cluster centers for the next step. Depending on the difference between the previous and current cluster centers, a decision is made on whether to execute another iteration of the algorithm.

Map-Reduce Frameworks

Twister is an iterative map-reduce framework and is well suited to algorithms like K-means; it gave us the best run times for our K-means implementation. Unlike Hadoop, it does not have its own file system but uses the local file system. Its salient features are:
- configurable long-running (cacheable) map/reduce tasks
- pub/sub messaging-based communication and data transfer
- efficient support for iterative MapReduce computation
- a combine phase that collects all reduce outputs
- data access via local disks

Hadoop is a software framework that supports data-intensive distributed applications and is suitable for simple map-reduce applications. It is, however, not well suited to iterative map-reduce computations such as K-means. Hadoop has the following features:
- it uses the map-reduce programming model
- it has its own file system, HDFS (Hadoop Distributed File System, based on the Google File System), which is specifically tailored for dealing with large files
- it manages the distribution of processing and files intelligently, breaking files down into more manageable chunks for processing

Validation

We validated our results by calculating the sum of the distances from each point to the center of the cluster it belongs to. Since our aim is to find the best cluster centers for the given data, every different set of initial centroids can give a different answer. The best of these results is the one with the minimum sum of distances from each point to its own cluster center.
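The validation procedure (several runs from different seeds, keeping the one with the smallest sum of point-to-center distances) can be sketched sequentially as follows. This is an illustration under stated assumptions, not the authors' code. The helper names `kmeans_pp_seeds`, `lloyd`, `total_distance` and `best_of_runs` are hypothetical, and the number of runs, the fixed iteration count and the random seed are arbitrary choices for the example. The score uses plain (unsquared) Euclidean distance to match the validation described above, while the K-means update step itself minimizes squared distances. The seeding assumes the data contain at least k distinct points.

```python
import math
import random

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans_pp_seeds(points, k, rng):
    """k-means++ seeding: the first seed is uniform; each later seed is drawn with
    probability proportional to its squared distance from the nearest seed so far."""
    seeds = [rng.choice(points)]
    while len(seeds) < k:
        weights = [min(sq_dist(p, s) for s in seeds) for p in points]
        seeds.append(rng.choices(points, weights=weights, k=1)[0])
    return [list(s) for s in seeds]

def lloyd(points, centers, iterations=20):
    """Plain sequential K-means iterations: assign to the nearest center, then average."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: sq_dist(p, centers[i]))
            clusters[nearest].append(p)
        # An empty cluster keeps its previous center (assumption of this sketch).
        centers = [
            [sum(axis) / len(members) for axis in zip(*members)] if members else centers[j]
            for j, members in enumerate(clusters)
        ]
    return centers

def total_distance(points, centers):
    """Validation score: sum of distances from every point to its nearest center."""
    return sum(min(math.dist(p, c) for c in centers) for p in points)

def best_of_runs(points, k, runs=5, seed=0):
    """Run K-means from several k-means++ seedings and keep the lowest-scoring result."""
    rng = random.Random(seed)
    best_score, best_centers = math.inf, None
    for _ in range(runs):
        centers = lloyd(points, kmeans_pp_seeds(points, k, rng))
        score = total_distance(points, centers)
        if score < best_score:
            best_score, best_centers = score, centers
    return best_score, best_centers

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
score, centers = best_of_runs(points, k=2)
print(round(score, 4), centers)
```

Seeding with a fixed `random.Random(seed)` makes the comparison between runs reproducible, so that different results come only from the different initial centroids.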
HaLoop is a modified version of the Hadoop map-reduce framework that we came across during our project. Its major features are:
- caching options for loop-invariant data access
- letting users reuse major building blocks from Hadoop implementations
- intra-job fault-tolerance mechanisms similar to Hadoop's

HaLoop reduces query run times by a factor of 1.85 on average compared with Hadoop.

Implementation

We implemented the K-means algorithm in both Twister and Hadoop. The basic logic of the mapper and reducer is the same in both frameworks.

In Twister the implementation is simple: the user program can access the Twister output directly, compare it with the previous input, and, based on a threshold, decide whether to run another iteration of the clustering algorithm.

K-means Implementation in Twister
Initial centroids from text file: load the initial centroids and pass them to the Twister framework as a parameter.
Twister mapper:
1) loads its partition of the data
2) forms clusters corresponding to each centroid
3) sends the accumulated clusters to the reducer
Twister reducer:
1) collects the output from all mappers
2) calculates a new centroid for each new cluster
3) sends the output to the driver
Driver:
1) gets the centroids from Twister
2) checks the difference between the previous and current centroids
3) if the difference is greater than the threshold, repeats the process

In Hadoop, there is no easy way to take the output of one map-reduce job and pass it as the input to the next one. The output of a map-reduce job can only be written to a file in HDFS. The user program has to copy this file from HDFS to the local file system, read the results from the local file, and pass them as input to the next iteration.
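The driver logic described for both implementations (run an iteration, compare the new centroids with the previous ones, repeat while the change exceeds a threshold) can be sketched as below, with the centroids handed to the next iteration through a file, as the Hadoop version requires. This is a hedged, single-machine illustration: a local temporary JSON file stands in for HDFS, and no Hadoop or Twister API is used. The measure of "difference" is not specified above, so the largest centroid movement (`max_shift`) is an assumption, as are the threshold value and the hypothetical names `iterate` and `run_driver`.

```python
import json
import math
import os
import tempfile

def iterate(points, centers):
    """One K-means iteration: assign each point to its nearest center, then average."""
    sums = [[0.0] * len(centers[0]) for _ in centers]
    counts = [0] * len(centers)
    for p in points:
        j = min(range(len(centers)), key=lambda i: math.dist(p, centers[i]))
        sums[j] = [s + x for s, x in zip(sums[j], p)]
        counts[j] += 1
    # A center with no assigned points is kept unchanged (an assumption of this sketch).
    return [[s / counts[j] for s in sums[j]] if counts[j] else list(centers[j])
            for j in range(len(centers))]

def max_shift(old, new):
    """Assumed convergence measure: the largest distance any centroid moved."""
    return max(math.dist(a, b) for a, b in zip(old, new))

def run_driver(points, initial_centers, threshold=1e-4, max_iterations=100):
    """Driver loop that hands the centroids to the next iteration through a file;
    a local temporary file stands in for HDFS here."""
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "centroids.json")
        with open(path, "w") as f:
            json.dump(initial_centers, f)
        for iteration in range(1, max_iterations + 1):
            with open(path) as f:              # read the previous iteration's output
                centers = json.load(f)
            new_centers = iterate(points, centers)
            with open(path, "w") as f:         # overwrite it with the new centroids
                json.dump(new_centers, f)
            if max_shift(centers, new_centers) <= threshold:
                return new_centers, iteration
    return new_centers, max_iterations

points = [(0.0, 0.0), (0.0, 2.0), (10.0, 10.0), (10.0, 12.0)]
centers, iterations = run_driver(points, [[1.0, 1.0], [9.0, 9.0]])
print(centers, iterations)  # [[0.0, 1.0], [10.0, 11.0]] 2
```

In the Twister-style driver, the file round trip disappears: `new_centers` can be passed straight to the next call of the iteration.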
K-means Implementation in Hadoop
Initial centroids from text file: load the initial centroids into a temporary HDFS file and start the Hadoop job.
Hadoop mapper:
1) for each point, decides which centroid is closest
2) sends the result to the reducer
Hadoop reducer:
1) collects the output from all mappers
2) calculates a new centroid for each new cluster
3) sends the output to the driver
Driver:
1) gets the centroids from Hadoop
2) checks the difference between the previous and current centroids
3) if the difference is greater than the threshold:
   a) copies the reducer output from HDFS to the local file system
   b) deletes the previous initial centroids and loads the new centroids into that file in the local directory
   c) deletes the HDFS output directory
   d) repeats the process
4) otherwise, returns the final results

Runtime Comparison

We observed a large difference between the time taken by the K-means implementation in the Twister framework and in the Hadoop framework: Twister is far faster than Hadoop. We used data sets ranging from 20,000 to 80,000 points. Our initial centroid sets were generated by a seeding method, and we used these sets as the input for 10 different executions. Twister's execution times were about 1 to 1.5 seconds. In contrast, a single iteration of K-means on Hadoop took about 40 seconds, and a full iterative K-means run took several hundred seconds (483 to 942 seconds for the 20,000-point runs plotted below). The graph below compares the execution times for a data set of 20,000 points; the X-axis denotes the different sets of initial centroids.

When we ran the Hadoop implementation on a larger data set, Hadoop often crashed. The Twister implementation performed well even with 80,000 points, with execution times in the range of 1.5 to 2 seconds.
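To put the gap in numbers, the per-run times from the Twister-Hadoop comparison chart for the 20,000-point data set can be averaged and divided. This is only arithmetic on the reported values, not a new measurement. The variable names are illustrative.

```python
from statistics import mean

# Execution times in seconds for the ten centroid sets (20,000-point data set),
# transcribed from the Twister-Hadoop comparison chart.
hadoop = [603, 630, 886, 642, 646, 942, 483, 690, 671, 713]
twister = [1.1542, 1.1263, 1.1264, 1.1097, 1.1137, 1.1262, 1.0926, 1.1102, 1.1034, 1.1159]

hadoop_mean = mean(hadoop)
twister_mean = mean(twister)
per_run_ratio = [h / t for h, t in zip(hadoop, twister)]

print(f"Hadoop mean:  {hadoop_mean:.1f} s")                    # 690.6 s
print(f"Twister mean: {twister_mean:.3f} s")                   # 1.118 s
print(f"Mean ratio:   {hadoop_mean / twister_mean:.0f}x")      # 618x
print(f"Per-run ratio: {min(per_run_ratio):.0f}x to {max(per_run_ratio):.0f}x")  # 442x to 836x
```

On these figures the Hadoop runs are about 618 times slower on average, and between about 442 and 836 times slower for individual centroid sets.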
Twister-Hadoop comparison (execution time in seconds for each set of initial centroids, 20,000 points):

Centroid set  1       2       3       4       5       6       7       8       9       10
Hadoop        603     630     886     642     646     942     483     690     671     713
Twister       1.1542  1.1263  1.1264  1.1097  1.1137  1.1262  1.0926  1.1102  1.1034  1.1159

Conclusion

Through this project we came to understand the difference between iterative map-reduce and simple map-reduce frameworks. We conclude that Twister is more suitable than Hadoop for iterative algorithms, as the execution times of K-means on the two frameworks clearly show.