proj0 - Computer Science and Engineering


Basic Image Manipulation and Perspective Projection

Om Pavithra Bonam and Sridhar Godavarthy

University of South Florida

{obonam, sgodavar}@cse.usf.edu

Computer Vision (CAP 6415 : Project 0)

1 Abstract

Perspective projection is the process of rendering a three-dimensional object as it would appear on the image plane of a camera. In this project we obtain the perspective projection of an object, given a camera, the location of the object and the location of the image plane. The projected image is then scaled and saved to a PGM image file. The program performed the conversion accurately and handled erroneous input gracefully.

2 Objectives

Write a program to read and write PGM files and perform simple operations on PGM images.

To understand perspective projection and the relationship between the real-world, camera and pixel coordinate systems, to convert from one to another, and to use this understanding to write a program that projects three-dimensional objects onto an image plane and saves the result to a file.

3 Programming and Problem solving

The entire program was written in C++. After reading the user-supplied input file that specifies the ROI and the image files, the next step was reading and writing PGM files. PGM is a very simple format that contains little beyond the image dimensions, the maximum gray value and the pixel data. Its layout is as follows:

3.1 File Format

P5 (magic number: PGM in binary format)

# optional comment lines

numcols numrows (width, then height)

max_gray_scale_value

pixels…

Once the format is known, reading the file is simply a matter of file operations. The trick was to write one function that reads characters and another that reads integers. Calling these functions repeatedly read the entire file without much additional overhead, saved a lot of programming effort and kept the code compact. By slightly adapting these functions to handle spaces and other punctuation, we were able to read the file successfully. Memory for the pixel values was allocated dynamically.
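The reading strategy above can be sketched in C++ as follows. This is a minimal illustration rather than our original code: the `PgmImage` struct and the helper names are hypothetical, only 8-bit binary (P5) files are handled, and `#` comment lines are skipped along with whitespace. Note that the PGM header stores the width (columns) before the height (rows).

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstdint>
#include <istream>
#include <ostream>
#include <sstream>
#include <stdexcept>
#include <string>
#include <vector>

// Minimal 8-bit grayscale image; pixels are stored row by row.
struct PgmImage {
    int width = 0;     // number of columns (first header number)
    int height = 0;    // number of rows (second header number)
    int maxval = 255;  // maximum gray value
    std::vector<std::uint8_t> pixels;  // width * height bytes, allocated at run time
};

// Character-level helper: skips whitespace and '#' comment lines in the header.
void skipSeparators(std::istream& in) {
    for (;;) {
        const int c = in.peek();
        if (c == '#') {
            std::string comment;
            std::getline(in, comment);  // discard the rest of the comment line
        } else if (c != std::istream::traits_type::eof() && std::isspace(c)) {
            in.get();
        } else {
            return;
        }
    }
}

// Integer-level helper: reads one non-negative decimal header value.
int readHeaderInt(std::istream& in) {
    skipSeparators(in);
    int value = -1;
    if (!(in >> value) || value < 0) throw std::runtime_error("bad PGM header");
    return value;
}

PgmImage readPgm(std::istream& in) {
    std::string magic(2, '\0');
    in.read(&magic[0], 2);
    if (!in || magic != "P5") throw std::runtime_error("not a binary (P5) PGM file");
    PgmImage img;
    img.width = readHeaderInt(in);
    img.height = readHeaderInt(in);
    img.maxval = readHeaderInt(in);
    if (img.maxval < 1 || img.maxval > 255) throw std::runtime_error("only 8-bit PGM is handled");
    in.get();  // a single whitespace byte separates the header from the pixels
    img.pixels.resize(static_cast<std::size_t>(img.width) * img.height);
    in.read(reinterpret_cast<char*>(img.pixels.data()),
            static_cast<std::streamsize>(img.pixels.size()));
    if (!in) throw std::runtime_error("truncated pixel data");
    return img;
}

void writePgm(std::ostream& out, const PgmImage& img) {
    out << "P5\n" << img.width << ' ' << img.height << '\n' << img.maxval << '\n';
    out.write(reinterpret_cast<const char*>(img.pixels.data()),
              static_cast<std::streamsize>(img.pixels.size()));
}
```

Reading a single header integer through one small helper keeps `readPgm` short, which mirrors the "character function plus integer function" idea described above.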

3.2 Implementing the ROI

The ROI is represented as a pair of (x, y) coordinates corresponding to its top-left and bottom-right corners. Whenever an operation is requested, we check whether an ROI has been specified; if it has, we start from the top-left corner and work our way towards the bottom-right pixel, performing the required operation. This way, we avoid visiting pixels outside the ROI, which is possible because an ROI is a contiguous rectangular region.
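A sketch of the ROI traversal described above, assuming the corners are inclusive and that x is the column index and y the row index. `Roi` and `forEachInRoi` are hypothetical names, and clamping the ROI to the image bounds is our assumption, not a documented behaviour of the program. The sketch uses `std::optional` (C++17) to model "no ROI specified".

```cpp
#include <algorithm>
#include <cassert>
#include <optional>

// Hypothetical ROI type: inclusive top-left (x0, y0) and bottom-right (x1, y1)
// corners, with x as the column index and y as the row index.
struct Roi { int x0, y0, x1, y1; };

// Visits pixels row by row from the top-left to the bottom-right corner of the ROI.
// If no ROI was specified, the whole image is used. The ROI is clamped to the image
// (an assumption), and pixels outside it are never visited. Returns the visit count.
template <typename Visit>
long forEachInRoi(int rows, int cols, const std::optional<Roi>& roi, Visit visit) {
    const Roi r = roi.value_or(Roi{0, 0, cols - 1, rows - 1});
    const int rowBegin = std::max(r.y0, 0), rowEnd = std::min(r.y1, rows - 1);
    const int colBegin = std::max(r.x0, 0), colEnd = std::min(r.x1, cols - 1);
    long visited = 0;
    for (int row = rowBegin; row <= rowEnd; ++row)
        for (int col = colBegin; col <= colEnd; ++col) {
            visit(row, col);
            ++visited;
        }
    return visited;
}
```

Because the region is rectangular, the two loop bounds are all that is needed to skip every pixel outside it.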

3.3 Image Operations

To find the minimum and maximum gray-scale values in the ROI, we have no option but to scan every pixel sequentially, checking for the maximum and minimum at each one. Once the minimum and maximum values have been obtained, the threshold is calculated. The threshold is applied to the whole image, so we ignore the ROI, compare each pixel with the threshold and replace it according to whether it falls above or below.
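The min/max scan and the whole-image thresholding might look like the following. The source does not state how the threshold is derived from Lmin and Lmax; the midpoint (Lmin + Lmax) / 2 used here is an assumption, as are the names `roiMinMax`, `binarize` and `GrayRange`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical ROI: inclusive corners, x = column index, y = row index.
struct Roi { int x0, y0, x1, y1; };

struct GrayRange { int lmin, lmax; };

// Scans every pixel of the ROI (clamped to the image), tracking the running
// minimum and maximum gray values. An empty ROI leaves lmin = 255, lmax = 0.
GrayRange roiMinMax(const std::vector<std::uint8_t>& px, int rows, int cols, Roi roi) {
    GrayRange g{255, 0};
    const int rowEnd = std::min(roi.y1, rows - 1), colEnd = std::min(roi.x1, cols - 1);
    for (int r = std::max(roi.y0, 0); r <= rowEnd; ++r)
        for (int c = std::max(roi.x0, 0); c <= colEnd; ++c) {
            const int v = px[static_cast<std::size_t>(r) * cols + c];
            g.lmin = std::min(g.lmin, v);
            g.lmax = std::max(g.lmax, v);
        }
    return g;
}

// Thresholds the WHOLE image (the ROI is ignored here). The midpoint threshold
// T = (Lmin + Lmax) / 2 is an assumed choice: pixels above T become white (255),
// all others black (0).
void binarize(std::vector<std::uint8_t>& px, GrayRange g) {
    const int threshold = (g.lmin + g.lmax) / 2;
    for (auto& p : px) p = (p > threshold) ? 255 : 0;
}
```

With a uniform ROI (Lmin equal to Lmax, as in some of the test images below) the threshold equals that single gray value, so the outcome depends on whether the comparison is strict.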

Finally, once we have helper functions capable of writing to the file, saving the image to a PGM file is just a file write operation.

3.4 The im class

All the image operations were encapsulated in a class called im. This class provides almost all of the basic operations needed on an image, including reading, writing, accessing and setting individual pixels, initializing an empty image, copying one image to another, setting and getting the ROI, and setting all pixels to white or to black.

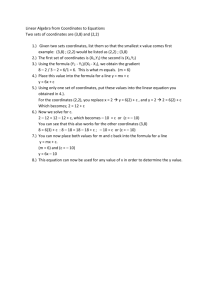
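An interface along the lines of the `im` class could look like this. The member names and the bounds-checking behaviour are hypothetical; file reading and writing are omitted to keep the sketch short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical sketch of an `im`-style image class; all member names are illustrative.
class im {
public:
    struct Roi { int x0, y0, x1, y1; };  // inclusive corners; x = column, y = row

    // Initializes an empty (all-black) image of the given size.
    explicit im(int rows = 0, int cols = 0)
        : rows_(rows), cols_(cols), px_(static_cast<std::size_t>(rows) * cols, 0) {}

    int rows() const { return rows_; }
    int cols() const { return cols_; }

    // Accessing and setting individual pixels, with bounds checking.
    std::uint8_t get(int r, int c) const { return px_[index(r, c)]; }
    void set(int r, int c, std::uint8_t v) { px_[index(r, c)] = v; }

    // Setting and getting the ROI; without one, the whole image is reported.
    void setRoi(const Roi& roi) { roi_ = roi; hasRoi_ = true; }
    bool hasRoi() const { return hasRoi_; }
    Roi getRoi() const { return hasRoi_ ? roi_ : Roi{0, 0, cols_ - 1, rows_ - 1}; }

    // Setting all pixels to white or to black.
    void fillWhite() { px_.assign(px_.size(), 255); }
    void fillBlack() { px_.assign(px_.size(), 0); }

    // Copying one image to another uses the implicit copy constructor and assignment.

private:
    std::size_t index(int r, int c) const {
        if (r < 0 || r >= rows_ || c < 0 || c >= cols_) throw std::out_of_range("pixel outside image");
        return static_cast<std::size_t>(r) * cols_ + c;
    }

    int rows_, cols_;
    std::vector<std::uint8_t> px_;
    Roi roi_{0, 0, 0, 0};
    bool hasRoi_ = false;
};
```

Storing pixels in a `std::vector` gives dynamic allocation and deep copies for free, so no hand-written copy routine is needed.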

3.5 Aligning the camera axes with the real world axes

This was probably the toughest challenge in the entire project. The complications arose mainly from our incomplete understanding of how the axes are aligned and from our virtually nonexistent background in coordinate geometry. We could not proceed until we had thoroughly brushed up on its basic concepts. Finding the pan and tilt angles required some serious thought. Because the camera's x axis remains parallel to the world xy plane, calculating the pan angle θ reduced to finding the angle between two lines in that plane. Calculating the tilt angle α, on the other hand, was slightly more difficult. We arrived at a solution using the vector dot product: if we take the Z axis as one vector and the camera axis as the other, their dot product is given by

$$\mathbf{A}\cdot\mathbf{B} = |\mathbf{A}|\,|\mathbf{B}|\cos\alpha \qquad (1)$$

from which the angle α can be calculated. The vector between two points is obtained simply by subtracting the coordinates of one point from those of the other.
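The tilt and pan calculation can be sketched as follows. It assumes a camera at `camera` aimed at a point `target`, ignores any gimbal offsets, and assumes (our reading of the textbook convention) that pan θ rotates about the Z axis and tilt α about the camera's x axis, so the optical axis in world coordinates is (sin θ sin α, -cos θ sin α, cos α). α then follows directly from Equation (1), and θ needs only the projection of the viewing direction onto the XY plane. All names are hypothetical, and the sign of θ depends on the angle convention chosen.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
double length(Vec3 a) { return std::sqrt(dot(a, a)); }

struct PanTilt { double theta, alpha; };  // pan and tilt in radians

// Pan and tilt that point the camera's optical axis from `camera` toward `target`,
// assuming the optical axis is (sin(theta)sin(alpha), -cos(theta)sin(alpha), cos(alpha))
// in world coordinates. Pan is undefined when looking straight along the Z axis.
PanTilt aimCamera(Vec3 camera, Vec3 target) {
    const Vec3 d = sub(target, camera);  // vector from two points: subtract coordinates
    const Vec3 zAxis{0.0, 0.0, 1.0};
    // Equation (1): A.B = |A||B|cos(alpha), with A = Z axis and B = viewing direction.
    double cosAlpha = dot(zAxis, d) / (length(zAxis) * length(d));
    cosAlpha = std::max(-1.0, std::min(1.0, cosAlpha));  // guard against rounding
    const double alpha = std::acos(cosAlpha);
    // Pan depends only on the projection of d onto the XY plane:
    // sin(theta) is proportional to d.x and -cos(theta) to d.y (sin(alpha) > 0).
    const double theta = std::atan2(d.x, -d.y);
    return {theta, alpha};
}
```

For example, a camera at (10, 10, 10) aimed at the origin gives θ = -45° and α ≈ 125.26° under these assumptions.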

3.6 Converting from real world to image plane coordinates

Once we had the pan and tilt, converting any point from the real-world coordinate system to the image plane was straightforward using equations 2.5-42 and 2.5-43.

At the end of this stage, we have converted a 3-D object into a 2-D image as seen through the camera, discarding the depth information.
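A sketch of the world-to-image-plane step, written from the pan/tilt camera model as we understand it: gimbal at (X0, Y0, Z0), pan θ, tilt α, gimbal-to-image-plane offset (r1, r2, r3) and focal length λ. Treat the formulas in the comment as our reading of the model, not a verbatim copy of equations 2.5-42 and 2.5-43; `Camera` and `worldToImagePlane` are hypothetical names, and the offsets default to zero.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Point2 { double x, y; };

// Hypothetical camera description for the pan/tilt model.
struct Camera {
    Vec3 gimbal;                          // gimbal centre (X0, Y0, Z0) in world coordinates
    double theta, alpha;                  // pan and tilt, radians
    double lambda;                        // focal length
    double r1 = 0.0, r2 = 0.0, r3 = 0.0;  // gimbal-to-image-plane offset (assumed 0)
};

// Projects a world point onto the image plane:
//   D = -(X-X0) sin(t) sin(a) + (Y-Y0) cos(t) sin(a) - (Z-Z0) cos(a) + r3 + lambda
//   x = lambda [ (X-X0) cos(t) + (Y-Y0) sin(t) - r1 ] / D
//   y = lambda [ -(X-X0) sin(t) cos(a) + (Y-Y0) cos(t) cos(a) + (Z-Z0) sin(a) - r2 ] / D
// The depth information is discarded; only (x, y) is returned.
Point2 worldToImagePlane(const Camera& cam, Vec3 p) {
    const double X = p.x - cam.gimbal.x, Y = p.y - cam.gimbal.y, Z = p.z - cam.gimbal.z;
    const double st = std::sin(cam.theta), ct = std::cos(cam.theta);
    const double sa = std::sin(cam.alpha), ca = std::cos(cam.alpha);
    const double denom = -X * st * sa + Y * ct * sa - Z * ca + cam.r3 + cam.lambda;
    return {cam.lambda * (X * ct + Y * st - cam.r1) / denom,
            cam.lambda * (-X * st * ca + Y * ct * ca + Z * sa - cam.r2) / denom};
}
```

As a sanity check, the point the camera is aimed at should land at the image-plane origin, and points placed symmetrically about the viewing direction should land symmetrically about the y axis.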

3.7 Converting from image plane coordinates to pixel coordinates

The coordinates are now in the image plane. However, they are still continuous real-world values with the origin (0, 0) at the center. To display these vertices on a computer screen or in an image, we first need to convert them to pixel coordinates. The difference is that in the pixel coordinate system the origin is at the top left and there are no negative coordinates! This conversion can be achieved with the conversion functions given in the textbook, replicated here for completeness:

$$i = \frac{n-1}{2} - y \qquad (2)$$

$$j = \frac{m-1}{2} + x \qquad (3)$$

where x and y are the image-plane coordinates, m and n are the image dimensions (m columns and n rows), and i and j are the pixel (row and column) coordinates.

Although we attempted to plot the original image-plane coordinates, they were very small (on the order of a hundredth), and the plotted images could not be deciphered without scaling. We therefore scaled the image-plane coordinates before converting them to pixel coordinates. The pixel coordinates were, of course, rounded to the nearest integer.
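Equations (2) and (3), combined with the scaling step, can be sketched as follows. Scaling the range [-1, +1] by (m - 1)/2 horizontally and (n - 1)/2 vertically, so that it spans the whole image, is our assumption; the document does not give the scale factor. `Pixel` and `imagePlaneToPixel` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Pixel { long i, j; };  // row, column

// Converts image-plane coordinates (x, y), assumed to lie in [-1, +1], to pixel
// coordinates of an image with n rows and m columns using equations (2) and (3):
//   i = (n - 1)/2 - y',  j = (m - 1)/2 + x'
// where x' and y' are x and y scaled by (m - 1)/2 and (n - 1)/2 (an assumed choice),
// so that [-1, +1] spans the whole image. Results are rounded to the nearest integer.
Pixel imagePlaneToPixel(double x, double y, int n, int m) {
    const double xs = x * (m - 1) / 2.0;
    const double ys = y * (n - 1) / 2.0;
    return {std::lround((n - 1) / 2.0 - ys), std::lround((m - 1) / 2.0 + xs)};
}
```

On a 256x256 canvas, (x, y) = (1, 1) maps to row 0, column 255, while the exact centre (0, 0) falls between pixels and rounds to (128, 128). The sign in front of y is what flips the upward image-plane y axis into downward-growing row indices.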

3.8 Drawing the edges in the image

This was the most tedious task we had to undertake. Explaining it in detail would do justice neither to the effort we put in nor to the actual objective of understanding coordinate systems and their conversions. Suffice it to say that we adopted a simple slope-intercept formulation and used the slope and intercept at each point to calculate the next point on the line. We had to be careful not to draw the line beyond the image boundaries. We were aided by the fact that the image plane was restricted to the range [-1, +1], so the scaling itself could serve as a boundary condition.
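A slope-intercept line drawer in the spirit of the description above might look like this. Stepping along whichever axis has the larger extent (so steep lines have no gaps and vertical lines avoid an infinite slope) and skipping out-of-bounds pixels are our own choices; `drawLine` is a hypothetical name. Unlike the original program, this sketch does not rely on the [-1, +1] scaling for bounds checking but tests each pixel explicitly.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <utility>
#include <vector>

// Draws a line between pixel (r0, c0) and (r1, c1) using the slope-intercept form.
// It steps one pixel at a time along the axis with the larger extent and computes the
// other coordinate from the slope and intercept, so the line has no gaps.
// Pixels outside the rows x cols image are skipped. Returns the number of pixels drawn.
int drawLine(std::vector<std::uint8_t>& img, int rows, int cols,
             int r0, int c0, int r1, int c1, std::uint8_t value) {
    int drawn = 0;
    auto plot = [&](long r, long c) {
        if (r < 0 || r >= rows || c < 0 || c >= cols) return;  // stay inside the image
        img[static_cast<std::size_t>(r) * cols + c] = value;
        ++drawn;
    };
    if (std::abs(c1 - c0) >= std::abs(r1 - r0)) {
        // Mostly horizontal: r = slope * c + intercept.
        if (c0 > c1) { std::swap(c0, c1); std::swap(r0, r1); }
        const double slope = (c1 == c0) ? 0.0 : static_cast<double>(r1 - r0) / (c1 - c0);
        const double intercept = r0 - slope * c0;
        for (int c = c0; c <= c1; ++c) plot(std::lround(slope * c + intercept), c);
    } else {
        // Mostly vertical: c = slope * r + intercept (avoids an infinite slope).
        if (r0 > r1) { std::swap(r0, r1); std::swap(c0, c1); }
        const double slope = static_cast<double>(c1 - c0) / (r1 - r0);
        const double intercept = c0 - slope * r0;
        for (int r = r0; r <= r1; ++r) plot(r, std::lround(slope * r + intercept));
    }
    return drawn;
}
```

Choosing the dominant axis is what turns the naive "join along the vertical, horizontal or diagonal" idea into a general line drawer.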

4 Observations

The major observation was the conversion from the real world to perspective images. It was quite an experience to actually see images like the ones we had once drawn by hand in our engineering drawing class.

The only difference was that we did not perform hidden line removal. That would certainly have made the final image look much better, but we can already see how much effort it would have taken.

The exercise was an eye-opener regarding the relationship between pan, tilt, the real-world axes, the image plane and the camera location. We had to put in a lot of thought and even ended up building mini models of the three axes to aid our understanding, but it was all worth the effort, as we can now visualize the alignment process much more easily.

Another interesting observation concerned the actual conversion between real-world and pixel coordinates. This is probably the first exercise in which we have combined the two and converted between them. We have done several image processing operations with many implicit assumptions about the origin, rows and columns. Only after attempting to program this did we realize that rows and columns are actually interchanged between pixel and real-world coordinates, and that the origin is shifted as well.

Hidden line removal deserves a further mention. Our excitement on seeing the final output image was huge, but short-lived: we realized how important hidden line removal is, because its absence marred the whole image.

Drawing a line between two points is not as easy as it seems. What we thought would be the most trivial part of the project turned out to be the most tedious, and we spent most of our time on it. Initially, we thought we would just have to join the points along the vertical, horizontal or diagonal, but when we put our fingers to the keyboard we realized the intricacies involved. Once we had the idea of using the slope, however, it was a cakewalk.

Some effort was saved by the fact that the camera's x axis was parallel to the x-y plane. Because of this, instead of using the vector cross product to find the pan, we could simply project the point onto the x-y plane and calculate the angle between two straight lines. We did observe that the angle was measured clockwise and hence needed to be negative. This was not intuitive, and we discovered it only during our sanity check.

Restricting the image plane to the range [-1, +1] greatly reduced the error handling and bounds checking needed when displaying the image.

5 Results

5.1 Binarization

The following figures show some of the output images after binarization. The program was tested on several images of varying sizes and gray-level ranges, and it produced accurate results. Each of Figures 3, 4 and 5 individually confirms that the program works correctly: the input images in these cases were designed to consist of uniform blocks, and the ROIs were selected to isolate the black or white regions. The ROI used and the calculated maximum and minimum gray-scale values are given in the caption of each figure.

Fig 1. (a) Input PGM image (64 x 64) and (b) result of binarization with a threshold based on the specified ROI (10,100 82,200). Calculated Lmax: 239 and Lmin: 0.

Fig 2. (a) Input PGM image (512 x 512) and (b) result of binarization with a threshold based on the specified ROI (10,100 82,200). Calculated Lmax: 200 and Lmin: 86.

Fig 3. (a) Input PGM image (400 x 400) and (b) result of binarization with a threshold based on the specified ROI (150, 200 300, 350). Calculated Lmax: 255 and Lmin: 0.

Fig 4. (a) Input PGM image (400 x 400) and (b) result of binarization with a threshold based on the specified ROI (150, 200 300, 350). Calculated Lmax: 255 and Lmin: 255.

Fig 5. (a) Input PGM image (400 x 400) and (b) result of binarization with a threshold based on the specified ROI (50, 50 80, 60). Calculated Lmax: 0 and Lmin: 0.

Fig 6. (a) Input PGM image (800 x 800) and (b) result of binarization with a threshold based on the specified ROI (150, 200 300, 350). Calculated Lmax: 227 and Lmin: 0.

5.2 Perspective Projection

The results turned out to be much more beautiful and accurate than we had dared to hope! We tried our program on various objects and sizes, and the following figures show these results. Each figure shows the projection scaled to a 256x256 image, along with the real-world coordinates used for the object. Figure 9 shows a case where the image-plane coordinates exceeded the range [-1, +1]. As can be seen, the program displays only the portion of the object that can actually be projected onto the image plane.

10 10 10 0 0 0 3

12 8

0 0 0

0 0 1

0 1 0

1 0 0

1 1 1

1 1 0

1 0 1

0 1 1

1 2

1 3

1 4

5 6

5 7

5 8

2 7

2 8

3 6

3 8

4 6

4 7

Fig 7. A cube in perspective projection on a 256x256 canvas. The real-world coordinates are given alongside.

10 10 10 0 0 0 3

12 8

0 0 0

0 0 4

0 4 0

4 0 0

4 4 4

4 4 0

4 0 4

0 4 4

1 2

1 3

1 4

5 6

5 7

5 8

2 7

2 8

3 6

3 8

4 6

4 7

Fig 8. Another cube in perspective projection on a 256x256 canvas. The real-world coordinates are given alongside.

10 10 10 0 0 0 3

12 8

0 0 0

0 0 16

0 16 0

16 0 0

16 16 16

16 16 0

16 0 16

0 16 16

1 2

1 3

1 4

5 6

5 7

5 8

2 7

2 8

3 6

3 8

4 6

4 7

Fig 9. Yet another cube in perspective projection on a 256x256 canvas. The real-world coordinates are given alongside. Note that the edges of the cube extend beyond the canvas and are truncated.

10 10 10 0 0 0 3

12 8

0 0 0

0 0 4

0 10 0

5 0 0

5 10 4

5 4 0

5 0 4

0 10 4

1 2

1 3

1 4

5 6

5 7

5 8

2 7

2 8

3 6

3 8

4 6

4 7

Fig 10. A cuboid in perspective projection on a 256x256 canvas. The real-world coordinates are given alongside.

10 10 10 0 0 0 3

12 8

0 0 0

0 0 4

0 10 0

4 0 0

4 10 4

4 10 0

4 0 4

0 10 4

1 2

1 3

1 4

5 6

5 7

5 8

2 7

2 8

3 6

3 8

4 6

4 7

Fig 11. A square prism in perspective projection on a 256x256 canvas. The real-world coordinates are given alongside.

6 Conclusion

We were able to convert real-world coordinates into image-plane coordinates and then into pixel coordinates, scale the resulting image and save it to a PGM file. We also wrote our own program to read and write PGM files. The program has been tested on several images and checked for boundary conditions; it handled every scenario we tested and exits gracefully on faulty input. We now have an image class that can be used to perform many standard operations on a PGM image.

We gained an understanding of perspective projection and of the importance of axis alignment and the pan and tilt angles.

7 References

[1] Rafael C. Gonzalez and Richard E. Woods, Digital Image Processing, Addison-Wesley Longman Publishing Co., Inc., Boston, MA, 1992.

[2] R. Jain, R. Kasturi, and B. G. Schunck, Machine Vision, McGraw-Hill, 1995.

[3] www.about.com