Chapter2_HW

advertisement

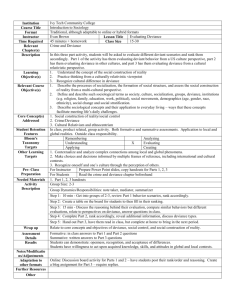

STAT 875 homework for Chapter 2 with partial answers 1) Partially based on #4.5 of Agresti (2007): A classic example in categorical data analysis courses involves estimated the probability of thermal distress (TD) for the space shuttle given the temperature at lift off. This data comes from the space shuttle launches before the 1986 Challenger mission disaster. Below is how I get the data into a data frame: > temp<-c(66, 70, 69, 68, 67, 72, 73, 70, 57, 63, 70, 78, 67, 53, 67, 75, 70, 81, 76, 79, 75, 76, 58) #In Fahrenheit > TD<-c(0,1,0,0,0,0,0,0,1,1,1,0,0,1,0,0,0,0,0,0,1,0,1) #1 distress, 0 no distress > table5.10<-data.frame(temp, TD) > table5.10 temp TD 1 66 0 2 70 1 3 69 0 4 68 0 5 67 0 6 72 0 7 73 0 8 70 0 9 57 1 10 63 1 11 70 1 12 78 0 13 67 0 14 53 1 15 67 0 16 75 0 17 70 0 18 81 0 19 76 0 20 79 0 21 75 1 22 76 0 23 58 1 For example, the first observation shows a temperature of 66 degrees at lift off and no thermal distress. Using this data, complete the following below. If you are working on this problem before the generalized linear model section, you can just complete the logistic regression parts until then. a) Find the estimated logistic regression model. Also, estimate the probit and complementary loglog models. b) Use an odds ratio with the logistic regression model to understand the effect temperature has on thermal distress. c) Is temperature important in predicting thermal distress? Perform hypothesis tests to answer the question using all three models. d) Estimate the probability of thermal distress at a temperature of 56F using all three models. e) Estimate the probability of thermal distress at a temperature of 31F using all three models. This was the temperature in 1986 for Challenger’s lift off. Discuss possible problems with using the model at this temperature. f) Using all three models, construct one plot of the estimated probability of thermal distress versus the temperature. 1 Below is part of my R code and output. > #Logistic model > mod.fit<-glm(formula = TD ~ temp, data = table4.10, family = binomial(link = logit)) Deviance = 20.5819 Iterations - 1 Deviance = 20.32139 Iterations - 2 Deviance = 20.3152 Iterations - 3 Deviance = 20.31519 Iterations - 4 > summary(mod.fit) Call: glm(formula = TD ~ temp, family = binomial(link = logit), data = table4.10, na.action = na.exclude, control = list(epsilon = 1e-04, maxit = 50, trace = T)) Deviance Residuals: Min 1Q Median -1.0611 -0.7613 -0.3783 3Q 0.4524 Max 2.2175 Coefficients: Estimate Std. Error z value Pr(>|z|) (Intercept) 15.0429 7.3719 2.041 0.0413 * temp -0.2322 0.1081 -2.147 0.0318 * --Signif. codes: 0 `***' 0.001 `**' 0.01 `*' 0.05 `.' 0.1 ` ' 1 (Dispersion parameter for binomial family taken to be 1) Null deviance: 28.267 Residual deviance: 20.315 AIC: 24.315 on 22 on 21 degrees of freedom degrees of freedom Number of Fisher Scoring iterations: 4 > #LRT: -2log(lambda)- Could also use the anova() and Anova() functions > mod.fit$null.deviance - mod.fit$deviance [1] 7.95196 > 1 - pchisq(q = mod.fit$null.deviance - mod.fit$deviance, df = mod.fit$df.null mod.fit$df.residual) [1] 0.004803533 > #Estimated probability of success – Could also use the predict() function > lin.pred <- mod.fit$coefficients[1] + mod.fit$coefficients[2] * 56 > exp(lin.pred)/(1 + exp(lin.pred)) (Intercept) 0.885115 2 1.0 Estimated probability of thermal distress for a primary O-ring 0.6 0.4 0.0 0.2 Estimated probability 0.8 Complementary log-log Logit Probit 55 60 65 70 75 80 Temperature 2) Why is the = 0 + 1x model not usually used to model binary response data? 3) Compare the logit, probit, and complementary log-log models using my pi.plot.R program. 4) Below are some past test questions involving odds ratios. Note that some of these are discussed already in the book . a) Consider the following estimated logistic regression model of logit( ˆ ) ˆ ˆ1x1 ˆ 2 x12 . Derive the estimated odds ratio for a c unit increase in x1. c ˆ1 ˆ 2 (2x1 c) The OR is e . Notice how x1 is still in the OR! When you interpret the OR, you will need to always state the value of x1 (so, investigate it at a few different x1 values) b) Consider the following estimated logistic regression model of logit(̂) ˆ ˆ1x1 ˆ 2x 2 ˆ 3 x1x 2 . Derive the estimated odds ratio for a c unit increase in x1 holding x2 constant. ˆ ˆ The OR is ec(1 x23 ) . Notice how x2 is still in the OR! When you interpret the OR, you will need to always state the value of x2 (so, investigate it at a few different x2 values) c) Find the correct Wald confidence intervals for those corresponding population odds ratios. Note that the most difficult part here is to find the correct variances. 5) (old test problem) The Salk vaccine clinical trial data set examined in Chapter 1 can also be examined through using a logistic regression model. Shown below is the data in its contingency table format and how the data can be entered into a data frame within R for the logistic regression analysis: Vaccine Placebo Total Polio Polio free Total 57 200,688 200,745 142 201,087 201,229 199 401,775 401,974 3 > polio<-data.frame(Treatment = c(0,1), no.polio = c(200688, 201087), trials = c(200745, 201229)) > polio Treatment no.polio trials 1 0 200688 200745 2 1 201087 201229 Using this data, answer the following questions. a) Estimate a logistic regression model using the treatment as the explanatory variable and the polio outcome as the response variable. > mod.fit<-glm(formula = no.polio/trials ~ Treatment, weight = trials, family = binomial(link = logit), data = polio) > summary(mod.fit) Call: glm(formula = no.polio/trials ~ Treatment, family = binomial(link = logit), data = polio, weights = trials) Deviance Residuals: [1] 0 0 Coefficients: Estimate Std. Error z value Pr(>|z|) (Intercept) 8.1665 0.1325 61.647 < 2e-16 *** Treatment -0.9108 0.1568 -5.807 6.34e-09 *** --Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 (Dispersion parameter for binomial family taken to be 1) Null deviance: 37.313 Residual deviance: 0.000 AIC: 16.678 on 1 on 0 degrees of freedom degrees of freedom Number of Fisher Scoring iterations: 3 The estimated logistic regression model is logit( ˆ ) 8.1665 0.9108Treatment . b) Perform a LRT to determine if the treatment has an effect on the polio outcome. Make sure to correctly state the hypotheses of interest with respect to the model. Use = 0.05. > library(package = car) > Anova(mod.fit, test = "LR") Analysis of Deviance Table (Type II tests) Response: success/trials LR Chisq Df Pr(>Chisq) Treatment 37.313 1 1.006e-09 *** --Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 H0: 1 = 0 vs. Ha: 1 0 n L((0) | y1,,yn ) ˆ (0) 1 ˆ (0) i i 2log( ) 2log 2 y log (1 y )log = 37.31 i i (a) (a) (a) L( | y1,,yn ) ˆ i 1 ˆ i i1 p-value = P(A > 37.31) = 1.010-9 where A ~ 12 4 Because 1.010-9 < 0.05, reject Ho. There is sufficient evidence that the treatment has on whether or not someone comes down with polio. c) Compute the 95% profile likelihood ratio interval for the odds ratio. Interpret the interval. The interval for 1 needs to be calculated first as the set of 1 values such that L(0 ,1 | y1,,yn ) 2 2log 1,1 ˆ ˆ L(0 ,1 | y1,,yn ) is satisfied. > beta.ci<-confint(object = mod.fit, parm = "Treatment", level = 0.95) Waiting for profiling to be done... > beta.ci #C.I. for beta 2.5 % 97.5 % -1.2255622 -0.6095081 The interval for OR= exp(1) takes both the lower and upper limits from above and uses the exponential function with them: > exp(beta.ci) 2.5 % 97.5 % 0.2935926 0.5436182 > rev(1/exp(beta.ci)) 97.5 % 2.5 % 1.839526 3.406080 With 95% confidence, the odds of a being polio free are between 0.29 and 0.54 times as large when the placebo is given than when the vaccine is given. Alternatively, you could say the odds of being polio free are between 1.84 and 3.41 times as large when the vaccine is given than when the placebo is given. This next part was not on the test, but it is important for understanding how R codes categorical variables. The contingency table from Chapter 1 was entered as follows: > c.table <- array(data = c(57, 142, 200688, 201087), dim = c(2,2), dimnames = list(Treatment = c("vaccine", "placebo"), Result = c("polio", "polio free"))) > c.table Result Treatment polio polio free vaccine 57 200688 placebo 142 201087 The c.table object can be directly converted into a data frame format using > set1 <- as.data.frame(as.table(c.table)) > set1 Treatment Result Freq 1 vaccine polio 57 2 placebo polio 142 3 vaccine polio free 200688 4 placebo polio free 201087 5 > levels(set1$Treatment) [1] "vaccine" "placebo" Notice that vaccine is listed before placebo, which is not in alphabetical order. The reason is due to how the row labels were entered into the array() function. R simply takes the ordering there as the ordering for the factor. To convert the data into an object that can be used with glm(), I can use the following code: > trials <- aggregate(formula = Freq ~ Treatment, data = set1, FUN = sum) > success <- set1[set1$Result == "polio free",] > set2 <- data.frame(Treatment = success$Treatment, success = success$Freq, trials = trials$Freq) > set2 Treatment success trials 1 vaccine 200688 200745 2 placebo 201087 201229 6) Partially based on #5.22 of Agresti (2007): Consider a data set with y = 0 at x = 10, 20, 30, 40 and y = 1 at x = 60, 70, 80, 90. a) Explain intuitively why ̂1 is essentially infinite for the logistic regression model logit() = 0 + 1x. b) State the value of ̂1 and Var(ˆ1 ) at the last iteration given by glm() using all of its default settings. Notice the warning message that appears at the end of the iterations! In R’s numerical estimation routine, ̂ ’s equal to 0 and 1 are appearing which is a warning sign to R that something is going wrong (remember that 0 < < 1). Notice the summary(mod.fit) output does not have any warning messages. No message is printed that convergence did not occur despite the maximum number of iterations being reached (R stops at 25 as its final iteration – see what happens when maxit = 24). The plot is helpful to see what is going on as well. > set1<-data.frame(x = 10*c(1,2,3,4,6,7,8,9), y = c(0,0,0,0,1,1,1,1)) > mod.fit<-glm(formula = y ~ x, data = set1, family = binomial(link = logit)) Warning message: glm.fit: fitted probabilities numerically 0 or 1 occurred > summary(mod.fit) Call: glm(formula = y ~ x, family = binomial(link = logit), data = set1) Deviance Residuals: Min 1Q -1.045e-05 -2.110e-08 Median 0.000e+00 3Q 2.110e-08 Max 1.045e-05 Coefficients: (Intercept) x Estimate Std. Error z value Pr(>|z|) -118.158 296046.187 0 1 2.363 5805.939 0 1 (Dispersion parameter for binomial family taken to be 1) Null deviance: 1.1090e+01 Residual deviance: 2.1827e-10 on 7 on 6 degrees of freedom degrees of freedom 6 AIC: 4 Number of Fisher Scoring iterations: 25 c) Add two observations at x = 50 – one with y = 0 and one with y = 1. Estimate the model again and discuss if there are any problems. There may still be some problems even though convergence is obtained in 21 iterations. > set2<-rbind(set1, data.frame(x = 10*c(5,5), y = c(0,1))) > mod.fit2<-glm(formula = y ~ x, data = set2, family = binomial(link = logit)) Warning message: glm.fit: fitted probabilities numerically 0 or 1 occurred > summary(mod.fit2) Call: glm(formula = y ~ x, family = binomial(link = logit), data = set2) Deviance Residuals: Min 1Q Median -1.177 0.000 0.000 3Q 0.000 Max 1.177 Coefficients: (Intercept) x Estimate Std. Error z value Pr(>|z|) -98.158 39288.592 -0.002 0.998 1.963 785.772 0.002 0.998 (Dispersion parameter for binomial family taken to be 1) Null deviance: 13.8629 Residual deviance: 2.7726 AIC: 6.7726 on 9 on 8 degrees of freedom degrees of freedom Number of Fisher Scoring iterations: 21 > plot(x = set1$x, y = set1$y, ylab="Estimated probability", xlab = "x", main = "Problem 5.22",panel.first = grid(col = "gray", lty = "dotted")) > curve(expr = plogis(mod.fit$coefficients[1]+mod.fit$coefficients[2]*x), col = "red", add = TRUE) 7 0.6 0.4 0.0 0.2 Estimated probability 0.8 1.0 Problem 5.22 20 40 60 80 x d) Replace the two observations from part c) with y = 1 at x = 49.9 and y = 0 at x = 50.1. Estimate the model again and discuss if there are any problems. The estimation method now is working. However, there are a large number of iterations for having just one explanatory variable, which is a sign that we “may” be close to having problems. > set3<-rbind(set1, data.frame(x = 10*c(4.99,5.01), y = c(1,0))) > set3 x y 1 10.0 0 2 20.0 0 3 30.0 0 4 40.0 0 5 60.0 1 6 70.0 1 7 80.0 1 8 90.0 1 9 49.9 1 10 50.1 0 > mod.fit3<-glm(formula = y ~ x, data = set3, family = binomial(link = logit)) > summary(mod.fit3) Call: glm(formula = y ~ x, family = binomial(link = logit), data = set3, na.action = na.exclude, control = list(epsilon = 1e-04, maxit = 50, trace = T)) Deviance Residuals: Min 1Q -1.19990 -0.00555 Median 0.00000 3Q 0.00555 Max 1.19990 Coefficients: Estimate Std. Error z value Pr(>|z|) (Intercept) -26.3822 48.0078 -0.55 0.583 x 0.5276 0.9597 0.55 0.582 (Dispersion parameter for binomial family taken to be 1) 8 Null deviance: 13.863 Residual deviance: 2.900 AIC: 6.9 on 9 on 8 degrees of freedom degrees of freedom Number of Fisher Scoring iterations: 9 9