Assessment Report


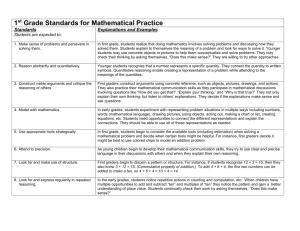

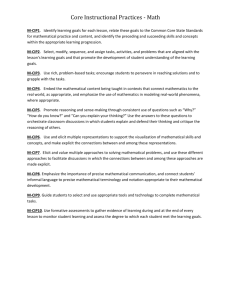

Tier 1 and Tier 2 Programs – Assessment Report

Department or program name: Geology and Geophysics
Degree/program assessed: Undergraduate
Submitted by: Paul Heller
Date submitted: June 1, 2012

What is your research question about student learning?

The mathematical competency of our undergraduate students (GG Learning Outcome 1: Basic scientific and mathematical competence) continues to be a topic of discussion among our faculty. Consequently, we designed a project to assess:

i) the mathematical competency of our undergraduate students;
ii) how that competency evolves between students' entry into and exit from the BS Geology & Geophysics program; and
iii) the effectiveness of a short-answer quiz, given in an exam environment without prior warning, as a tool for assessing mathematical competency.

What program or department-level student learning outcomes were assessed by this project?

The mathematical competency component of GG Learning Outcome 1: Basic scientific and mathematical competence. Basic mathematical competence requires competence in quantitative reasoning, so we tested our students in the following skills:

1. Computational skills: sufficient fluency in the language and symbols of mathematics, and the ability to perform the calculations and operations involved in applying mathematics or statistics to geology.
2. Data interpretation: the ability to read and interpret quantitative information displayed in graphs and tables, and to use it to make predictions and draw inferences.
3. Problem solving: the ability to understand a word problem, set up the equations that describe it, solve those equations using basic quantitative techniques, and then interpret or draw a conclusion from the solution.

Describe your assessment project and provide pertinent background information.
Geology has traditionally been a descriptive subject that is striving to become a quantitative science. Our undergraduates have a wide range of mathematical abilities (for example, a cohort of 47 students taking Geol. 2005 this year averaged a 2.5 GPA in pre-calculus math and a 1.75 GPA in Calculus 1, including retakes in both cases). The mathematical competency of our students is therefore a major concern to our faculty and has previously prompted faculty meeting discussions of whether we need to address and improve it. No action has yet been agreed.

The Department Assessment Committee began by discussing how best to assess the mathematical competency of our students. Particular concerns included: i) the level and scope of the questions, given that students take different course options and so have varying exposure to courses with significant math content; ii) developing a reliable, meaningful and useful set of questions (e.g., Wallace et al., 2009); and iii) the method and environment we would use to administer the assessment. After considering these issues, we decided to construct an assessment tool that tests the basic mathematical competency we expect all Geology & Geophysics BS students to have, and to administer it at both the entry and exit stages of their undergraduate careers in order to track how that competency evolves during our degree program. The tool was designed to take about 90 minutes to complete and to consist mostly of short-answer (not multiple-choice) questions, which let us assess a student's ability to create a plan, make a decision or solve a problem, rather than only whether they give the correct answer. The questions were drawn from three sources.
First, all geology faculty were asked to list the basic mathematical skills they expect students to have on entry to and exit from their courses (Appendix I), and a subset of questions was devised from their responses. Second, the Committee wanted the questions to be meaningful and reliable, so a subset was derived from an existing, tried-and-tested quantitative reasoning test (the Wellesley Quantitative Reasoning Test: http://serc.carleton.edu/files/nnn/teaching/wellesley_qr_booklet.pdf). Lastly, a few questions were taken from the Force Concept Inventory (Hestenes et al., 1992). All questions taken from the last two sources were modified to have a “geological context” to give them interest and relevance for our students. For example, a Wellesley question built around a garbage dump after a parade in Boston was changed to consider the mass of dinosaurs that could have lived in Wyoming during the Jurassic. All modified questions remained scientifically correct.

The final assessment tool consisted of 29 questions (Appendix II). Each question assessed one or more of 34 mathematical knowledge/competency skills, which can be grouped into three broad areas: computational, data interpretation and problem-solving skills (Appendix III). Each skill assessed by a given question was graded pass/fail separately. For example, a student's answer might show that they had the quantitative reasoning skills needed to evaluate the question but lacked the computational skills to reach the correct mathematical solution. In that case the student was awarded a “pass” for quantitative reasoning and a “fail” for the algebraic skill. In most cases each skill was assessed by several questions, which allowed a more reliable judgment of whether a student has that knowledge/competency/skill.
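The per-skill pass/fail grading described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the Committee's actual procedure: the grading records, student and question identifiers, and the function name `skill_pass_rates` are all hypothetical, and the sketch assumes a skill's pass rate is the share of all gradings of that skill that were passes, pooled across students and questions (the report does not state exactly how the Table 1 percentages were aggregated).

```python
from collections import defaultdict

# Hypothetical grading records: (student_id, question_id, skill, passed).
# One question can assess several skills, and each skill is graded
# pass/fail separately. These values are invented for illustration only;
# they are not data from this assessment.
GRADES = [
    ("s1", "q5", "quantitative reasoning", True),
    ("s1", "q5", "algebra", False),  # sound reasoning, faulty algebra
    ("s1", "q9", "algebra", True),
    ("s2", "q5", "quantitative reasoning", True),
    ("s2", "q5", "algebra", False),
    ("s2", "q9", "algebra", False),
]


def skill_pass_rates(grades):
    """Return {skill: pass rate in %}, pooling every grading of that skill.

    Assumption: pass rate = passes / all gradings for the skill, across
    all students and all questions that test it.
    """
    passed = defaultdict(int)
    attempted = defaultdict(int)
    for _student, _question, skill, ok in grades:
        attempted[skill] += 1
        if ok:
            passed[skill] += 1
    return {skill: round(100 * passed[skill] / attempted[skill]) for skill in attempted}


if __name__ == "__main__":
    for skill, rate in sorted(skill_pass_rates(GRADES).items()):
        print(f"{skill}: {rate}%")
```

With the invented records above, algebra is graded four times with one pass (25%) and quantitative reasoning twice with two passes (100%). Another convention would mark a student as passing a skill only if they passed most of the questions testing it, which can give different percentages.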
The tool was administered in an exam environment to 24 students taking Geol. 2000 (the first sophomore class, taken mostly by geology majors; our 1000-level classes are not specific to geology students) and to 33 students taking Geol. 4820 (our capstone class, which consists mostly of graduating seniors). No prior warning of the test was given, and students were asked to remain anonymous to allay any fear that they were being individually tested. No calculators were allowed.

Provide relevant data to answer your research question. What are the key findings?

Table 1 gives the pass rates for each mathematical competency skill. The data show that student competency varies considerably from skill to skill. For example, students clearly have a good understanding of scientific notation, areas and perimeters, and the equation of a straight line, but fewer can correctly solve equations, work with fractions or do long division without a calculator. For many, knowledge of some areas of basic physics (e.g., gravitation, terminal velocity, buoyancy) is poor. The individual questions also provide more detail than Table 1 shows. For example, although students showed a clear understanding of scientific notation, question 23b showed that they had great difficulty dividing by a number with a negative exponent without the aid of a calculator.

| Skill | Geol. 2000 | Geol. 4820 |
|---|---|---|
| Pass rate of 50% or more (Geol. 2000) | | |
| Area | 100 | 80 |
| Scientific notation | 97 | 88 |
| y=mx+c | 95 | 76 |
| Perimeter | 95 | 100 |
| SI units | 86 | 54 |
| Velocity formula | 86 | 92 |
| Cartesian coordinates | 83 | 87 |
| Evaluation | 74 | 60 |
| Literal equations | 71 | 89 |
| Interest | 71 | 42 |
| Exponents | 69 | 52 |
| Graphical skills | 64 | 62 |
| Quantitative reasoning | 63 | 53 |
| Period | 61 | 64 |
| Slope of a line | 60 | 61 |
| Properties of a cube | 57 | 61 |
| Ratios | 56 | 47 |
| Trigonometry | 56 | 25 |
| Sin | 56 | 25 |
| Cos | 56 | 25 |
| Algebra | 52 | 46 |
| Distance formula | 50 | 43 |
| Pass rate below 50% (Geol. 2000) | | |
| Force/physics | 48 | 46 |
| Gravitation | 48 | 46 |
| Solving exponential equations | 48 | 44 |
| Acceleration | 47 | 43 |
| Long division | 47 | 44 |
| Terminal velocity | 41 | 13 |
| Quadratic equations | 36 | 73 |
| Tan | 33 | 7 |
| Triangular plot | 31 | 71 |
| Fractions | 29 | 51 |
| Rates | 24 | 18 |
| Buoyancy | 0 | 0 |

Table 1: Pass rates (%) for each mathematical competency skill for students taking Geol. 2000 or Geol. 4820 in the spring of 2012.

The skills listed in Table 1 were also grouped into the three broad skill sets that form the basis of mathematical competency. Table 2 presents the pass rates for those skill sets, and separately for the questions derived from the Wellesley QR test and those written in-house.

| | 2000-level pass rate (%) | 4820-level pass rate (%) |
|---|---|---|
| Computational skills | 55 | 49 |
| Data interpretation | 62 | 62 |
| Problem solving | 63 | 55 |
| Questions based on the Wellesley QR test | 60 | 53 |
| In-house questions | 57 | 51 |

Table 2: Pass rates (%) for the three broad skill sets of mathematical competency, and for the questions based on the Wellesley QR test and the questions generated in-house.

These data show that students are slightly better at data interpretation and problem solving than at computation, a result consistent with Table 1. Put simply, our students are better able to analyze a problem and interpret data than to calculate the answer. The Wellesley questions appeared to be marginally easier than our in-house questions, a difference attributable to the three physics questions taken from the Force Concept Inventory, which students generally found hard. Since the remaining in-house questions were of comparable difficulty to the Wellesley questions, we conclude that our questions were at an appropriate level. Interestingly, the mean score of incoming Wellesley College students on their QR test is 70%, a full 10 to 17 percentage points higher than our students achieved on the Wellesley-based questions (60% in Geol. 2000 and 53% in Geol. 4820).
However, our students took the test “cold”, without any warning, whereas Wellesley students are encouraged to practice and study for it.

A key objective of our project was to determine how the mathematical competency of our students evolves throughout their undergraduate careers. At first glance, Tables 1 and 2 suggest that mathematical competency decreases slightly as students progress from sophomore to senior level, although some individual skills clearly improved significantly. For example, senior students were more adept with triangular plots (71% vs. 31%), a technique widely used in the geosciences. Figure 1 provides a graphical comparison of the data.

Figure 1: Graphical comparison of the pass rates of students taking Geol. 2000 vs. Geol. 4820.

Unfortunately, two important factors complicate interpretation of these data: i) nearly 50% of the Geol. 2000 class were juniors or seniors, so the pass rate for the 2000-level class may be somewhat inflated (we chose to administer the assessment within existing classes and therefore had to accept a non-ideal distribution of student experience, a problem we intend to address next year); and ii) the overall performance of this year's capstone class was lower than in previous years on all fronts, suggesting that the apparent decrease in mathematical competency may reflect year-to-year variation in student ability. Table 3 shows data from our ongoing Capstone Assessment program (by Barbara John), which illustrate that this year's class performed less well than previous classes on some of our other learning outcomes. Another possible contributing cause of the decrease is that Geol. 2000 is a problem-solving class, so those students were more “in practice” for the assessment. Alternatively, the decrease may reflect a loss of skill retention as students move further away from the courses that taught those skills.
Regardless, the major conclusion has to be that there is no significant difference in overall mathematical competency between the students in the Geol. 2000 and Geol. 4820 classes. Interestingly, when students were asked in our exit questionnaire whether their experience in Geology & Geophysics courses had contributed to the development of their mathematical competency skills, they agreed or strongly agreed.

GG-OT3 Technical & Computer Competence

| Rating (year of capstone class) | 2012 | 2011 | 2010 | 2009 |
|---|---|---|---|---|
| Fail | 1 | 0 | 3 | 1 |
| Minimum | 15 | 2 | 6 | 4 |
| Good | 19 | 14 | 10 | 13 |
| Excellent | 1 | 10 | 5 | 7 |

[Learning outcome title missing]

| Rating (year of capstone class) | 2012 | 2011 | 2010 | 2009 |
|---|---|---|---|---|
| Fail | 0 | 2 | 0 | 1 |
| Minimum | 1 | 2 | 4 | 0 |
| Good | 22 | 15 | 2 | 7 |
| Excellent | 13 | 7 | 18 | 19 |

[Learning outcome title missing]

| Rating (year of capstone class) | 2012 | 2011 | 2010 | 2009 |
|---|---|---|---|---|
| Fail | 2 | 1 | 3 | 1 |
| Minimum | 11 | 1 | 3 | 4 |
| Good | 13 | 5 | 10 | 14 |
| Excellent | 10 | 19 | 8 | 9 |

Table 3: Capstone Assessment ratings (Fail, Minimum, Good, Excellent) for the 2009 to 2012 capstone classes.

Describe the meaning of your results as they relate to program strengths and challenges. What changes to the program or curriculum have been made, are planned, or contemplated in the future as a result of this assessment project?

We conclude that although Geology & Geophysics expects students to have basic mathematical competency, we do not significantly improve those skills in our upper-division classes. Put simply, we largely assume that students will have gained these basic skills by their sophomore year, and we concentrate on teaching higher-level skills that were not tested by this assessment. To some extent, the assessment project confirmed our fear that our students do not have the mathematical competency we think they should have, and it thus exposes a weakness in our program. The data collected during this project were presented and enthusiastically discussed at a faculty meeting towards the end of the spring semester.
Faculty agreed that, after revisiting a couple of the less successful questions, the test should be given again during 2012-2013 to more students in different courses/classes to build a more robust data set for future discussions, and that we should also collect demographic data (i.e., whether the student is a freshman, sophomore, etc.) to address more clearly whether skills improve as students progress through our degree program. There was concern that overall mathematical competency is not at the level we expect. Faculty have discussed how we might improve it (e.g., an additional quantitative reasoning class versus an agreement to include more quantitative reasoning exercises in all our classes to reinforce and improve student competencies). The Department Chair suggested that we may need a faculty retreat to decide how to proceed. In summary, we will modify and continue to use some version of this tool to assess our undergraduate students over the next few years. Over the next year we will begin to discuss how we can adjust our program of study to improve student quantitative reasoning.