CS 676 – Parallel Processing


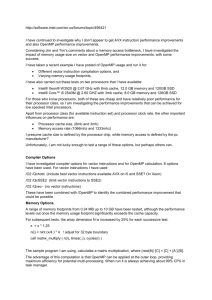

Boguste Hameyie (bh88)
CS 676 – Parallel Processing
Assignment 2 – Report

Files included

Makefile

A makefile has been included to ease the compilation process.
- To clean up the folder, call ‘make clean’.
- To compile the sequential program, call ‘make seq’. To run the program afterward, use the command ./seqsolver.o
- To compile the OpenMP program, call ‘make open’. To run the program afterward, use the command ./ompsolver.o
- To compile the Pthread program, call ‘make thread’. To run the program afterward, use the command ./threadsolver.o

SequentialSolver.c (seqsolver.o)
make seq

The sequential solver uses a Gaussian elimination algorithm that I found and adapted. It first performs forward elimination, followed by back substitution. What makes this algorithm interesting is the way it approaches back substitution, going from tail to head, whereas the back substitution algorithm I wrote for the previous assignment went from head to tail. This proved particularly useful for my parallel programs.

This algorithm is usually stable, i.e. it produces the correct results more than 90% of the time. It is usually very fast, although the run time varies because the matrix is randomly generated. Its main fault is that, as the matrix gets bigger, it spends a lot more time performing forward elimination. However, it usually makes up for this by doing back substitution very quickly. It cannot handle matrices larger than 1000x1001.

ThreadSolver.c (threadsolver.o)
make thread

This program was written with Pthread constructs. It attempts to improve the speed of the algorithm used in the SequentialSolver. While it performs forward elimination very quickly, its main fault is the way it handles back substitution. This is primarily due to the sequential nature of back substitution: each x value depends on the x values already computed for the rows below it. While experimenting with this, I noticed the importance of selecting an adequate chunking size.
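To make the two phases concrete, here is a minimal sketch of forward elimination followed by tail-to-head back substitution. It is not the code from SequentialSolver.c or ThreadSolver.c: the names (AT, eliminate_rows, back_substitute, solve), the row-major storage and the absence of pivoting are all assumptions made for illustration. The elimination step takes a row range so that it is visible where a threaded solver could hand each worker one chunk of rows.

```c
#include <assert.h>
#include <stddef.h>

/* Entry (i, j) of an n x (n+1) augmented matrix stored row by row.
 * (Hypothetical layout; the report does not say how the matrix is stored.) */
#define AT(a, n, i, j) ((a)[(size_t)(i) * ((size_t)(n) + 1) + (size_t)(j)])

/* Eliminate column `pivot` from rows [row_start, row_end).
 * A threaded solver could give each worker one such row range (a chunk). */
void eliminate_rows(double *a, int n, int pivot, int row_start, int row_end)
{
    /* Assumption: no pivoting, so the pivot entry must be non-zero. */
    assert(AT(a, n, pivot, pivot) != 0.0);
    for (int i = row_start; i < row_end; i++) {
        double factor = AT(a, n, i, pivot) / AT(a, n, pivot, pivot);
        for (int j = pivot; j <= n; j++)
            AT(a, n, i, j) -= factor * AT(a, n, pivot, j);
    }
}

/* Back substitution from the last row (tail) to the first row (head).
 * x[i] needs x[i+1] .. x[n-1], which is why this phase is hard to parallelize. */
void back_substitute(const double *a, int n, double *x)
{
    for (int i = n - 1; i >= 0; i--) {
        double sum = AT(a, n, i, n);
        for (int j = i + 1; j < n; j++)
            sum -= AT(a, n, i, j) * x[j];
        assert(AT(a, n, i, i) != 0.0);
        x[i] = sum / AT(a, n, i, i);
    }
}

/* Sequential driver: one elimination pass per pivot, then back substitution. */
void solve(double *a, int n, double *x)
{
    for (int pivot = 0; pivot < n - 1; pivot++)
        eliminate_rows(a, n, pivot, pivot + 1, n);
    back_substitute(a, n, x);
}
```

For example, calling solve on the augmented matrix of the system 2x + y - z = 8, -3x - y + 2z = -11, -2x + y + 2z = -3 yields x = 2, y = 3, z = -1.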
When the chunking size is too big or too small, very little speedup can be attained. Initially, I used condition variables to signal workers that a given x value was now available for use. This slowed down the algorithm and introduced numerical errors, as well as occasional race conditions. I also experimented with using a mutex to enforce the existence of a single writer at a time. This raised the accuracy of the program to 90-100%, while considerably slowing it down. Instead, I opted to let it run without a mutex, in the belief that, as the back substitution algorithm works in “reverse”, the x values will get updated without requiring blocking. This has proven to be correct in most cases, except when the matrix size approaches n=1000.

OMPSolver.c (ompsolver.o)
make open

This program was written using OpenMP constructs. Just like the Pthread version, its speed suffers due to the sequential nature of back substitution. In my initial experiments on my machine (a MacBook Pro with the latest version of the OS), I used a combination of OpenMP constructs that sped up back substitution, making the OpenMP version the fastest. When tux was once again available and more stable, I tried that version of my program there, but it would not compile. As such, I made several modifications to it, which involved removing the chunking that used to occur in the back substitution and the use of “pragma critical”, in order for it to work on tux. This led to a loss of speed. The OpenMP version can handle matrices larger than 1000x1001.

Experiments

The timing experiments I ran are recorded in the attached Excel sheet (bh88-timing.xlsx). The experiments were run on tux over an ssh connection. When run on campus, the programs ran significantly faster, taking less than a few seconds on matrices of size n>100. Over a remote connection, with multiple users on the machine, the speed was considerably reduced, which is why I only recorded the speed for matrices of size n<=100.
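Since the experiments compare wall-clock times, the following is a minimal sketch of how such a measurement could be taken around a solver call. It is an illustration, not the timing code used for the report: wall_seconds, time_call and sample_work are hypothetical names, and the clock used here is C11 timespec_get, whereas the report's timer may be something else (for example OpenMP's omp_get_wtime).

```c
#include <assert.h>
#include <time.h>

/* Hypothetical helper: current wall-clock time in seconds (C11 timespec_get). */
double wall_seconds(void)
{
    struct timespec ts;
    int base = timespec_get(&ts, TIME_UTC);
    assert(base == TIME_UTC);   /* timespec_get returns the base on success */
    (void)base;
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Run `work` once and return the elapsed wall-clock time in seconds. */
double time_call(void (*work)(void))
{
    double start = wall_seconds();
    work();
    return wall_seconds() - start;
}

/* Placeholder workload standing in for a solver run. */
void sample_work(void)
{
    volatile double sum = 0.0;
    for (int i = 0; i < 1000000; i++)
        sum += (double)i;
}
```

On a shared machine, repeating the measurement several times and keeping the smallest value is one common way to reduce the influence of other users' load.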
As the size of the matrix gets bigger, the speed of the parallel programs surpasses that of the sequential version. Every now and then, the Pthread version runs faster than the OpenMP version, although there is a point at which their speeds start to converge. The Pthread version runs significantly faster when the margin of error is 0. Only the time produced by wtime was considered.

Execution Time chart

[Chart: Execution Time. Series: sequential, pthread, openmp. Horizontal axis: N Size/10 (1 to 10). Vertical axis: 0 to 3.5.]

The above chart shows the wtime taken by each version. With smaller sizes, the sequential program runs faster, but as the size increases, the parallel programs run faster.

The chart below shows the speedup obtained on 16 processors, with the formula speedup = SequentialTime / (ParallelTime / N), where N is the number of processors.

Speed up on 16 procs

[Chart: Speed up. Series: pthread, openmp. Horizontal axis: 1 to 10. Vertical axis: 0 to 30.]

          1      2      3      4      5      6      7      8      9      10
pthread   6.326  9.024  27.18  23.8   18.77  16.69  17.72  17.01  16.46  16.62
openmp    0.738  1.368  23.14  21.27  20.72  18.44  18.06  16.82  16.79  16.81

Lessons learned:
- Parallel programs are only useful when the problem being solved deals with a large quantity of data. Otherwise, a sequential program could do the job faster.
- When chunking or load balancing, it is important to choose an appropriate size so as to avoid having workers that do either too much or too little.
- OpenMP is easy and pretty useful, but depending on the task, frameworks such as Pthreads can be more suitable, as they give the programmer more control over what is happening.
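The speedup formula used for the 16-processor chart can be written as a small helper. This is a sketch with hypothetical function names. The report's formula multiplies the time ratio by the number of processors, so its values are N times larger than the more conventional definition SequentialTime / ParallelTime, which is included for comparison.

```c
#include <assert.h>

/* Speedup as defined in this report: t_seq / (t_par / n_procs). */
double report_speedup(double t_seq, double t_par, int n_procs)
{
    assert(t_par > 0.0 && n_procs > 0);
    return t_seq / (t_par / (double)n_procs);
}

/* Conventional speedup, for comparison: t_seq / t_par. */
double conventional_speedup(double t_seq, double t_par)
{
    assert(t_par > 0.0);
    return t_seq / t_par;
}
```

With made-up timings of 2.0 s sequential and 1.0 s parallel on 16 processors, report_speedup gives 32 while conventional_speedup gives 2.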