Weighted Trigonometric and Hyperbolic Fuzzy Information

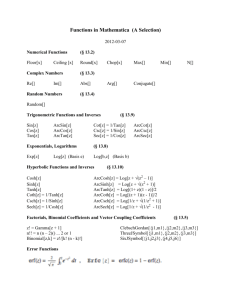

Weighted Trigonometric and Hyperbolic Fuzzy Information Measures and Their Applications in Optimization Principles

Arunodaya Raj Mishra (arunodaya87@outlook.com), Dhara Singh Hooda (ds_hooda@juet.ac.in), Divya Jain (divya.jain@juet.ac.in)

Department of Mathematics, Jaypee University of Engineering and Technology, Guna, M. P., India

ABSTRACT

In the present communication, two new measures of weighted trigonometric fuzzy information and two new measures of weighted hyperbolic fuzzy information are introduced, and their validity and essential properties are studied. Fuzzy information measures can be used for the study of optimization principles when certain partial information is available. The proposed measures of weighted trigonometric fuzzy information and weighted hyperbolic fuzzy information are applied to study the maximum information principle.

Keywords

Fuzzy set, fuzzy information measure, weighted fuzzy information, membership function, maximum fuzzy information principle.

1. Introduction

The concept of entropy was developed to measure the uncertainty of a probability distribution. [9] introduced the concept of fuzzy sets and developed his own theory to measure the ambiguity of a fuzzy set.

A fuzzy set $A$ is represented as

$$A = \{ (x_i, \mu_A(x_i)) : \mu_A(x_i) \in [0, 1];\ x_i \in X \},$$

where $\mu_A(x_i)$ represents the degree of membership and is defined as

$$\mu_A(x_i) = \begin{cases} 0, & \text{if } x_i \notin A \text{ and there is no ambiguity} \\ 1, & \text{if } x_i \in A \text{ and there is no ambiguity} \\ 0.5, & \text{if there is maximum ambiguity whether } x_i \in A \text{ or } x_i \notin A. \end{cases}$$

The concept of measuring fuzzy uncertainty without reference to probabilities began in 1972 with the work of [2], who defined the entropy of $A \in FS(X)$ using Shannon's entropy as

$$H(A) = -\sum_{i=1}^{n} \left[ \mu_A(x_i) \log \mu_A(x_i) + (1 - \mu_A(x_i)) \log (1 - \mu_A(x_i)) \right].$$

This is called the fuzzy information measure. It has found wide applications in engineering, fuzzy traffic control, fuzzy aircraft control, computer sciences, management and decision making, etc.

[5] proposed a rule to assign numerical values to probabilities in circumstances where certain partial information is available, known as the Maximum Entropy Principle. It has many applications in physical, biological and social sciences and stock market analysis. [6] have studied the use of extended MaxEnt in all these applications. They have also studied the use of measures of entropy as a measure of uncertainty, equality, spread out, diversity, interactivity, flexibility, system complexity, disorder, dependence and similarity. In the study of optimization principles, reliability theory, marketing, measurement of risk in portfolio analysis and quality control, various generalized measures of entropy and cross entropy have wide applications. [3] used the measure of [8] in studying market behavior and found an anomalous result, which was later overcome by using a parametric measure of entropy. Similarly, various other methods can be explained if we use the generalized measures of entropy and directed divergence.

[3] and [6] also studied the totally probabilistic maximum entropy principle. However, there are situations where probabilistic measures of entropy fail to work, and these require extending their scope of application by applying possibility of fuzzy measures.

Corresponding to the weighted entropy introduced by [1], [7] have developed two new measures of weighted fuzzy entropy as follows:

$$H_s(A, W) = \sum_{i=1}^{n} w_i \left[ \sin \frac{\pi \mu_A(x_i)}{2} + \sin \frac{\pi (1 - \mu_A(x_i))}{2} - 1 \right] \qquad (1)$$

$$H_c(A, W) = \sum_{i=1}^{n} w_i \left[ \cos \frac{\pi \mu_A(x_i)}{2} + \cos \frac{\pi (1 - \mu_A(x_i))}{2} - 1 \right] \qquad (2)$$

and applied these measures to study the principle of maximum weighted fuzzy entropy.

In the present paper, we introduce two weighted trigonometric fuzzy information measures and study their validity in section 2. In section 3, we introduce two weighted hyperbolic fuzzy information measures and study their validity. Their applications to the maximum weighted fuzzy information principle are discussed in section 4.

2. Weighted Trigonometric Fuzzy Information Measures

Corresponding to [4], we define the following measures of weighted trigonometric fuzzy information:

$$H_1(A, W) = \sum_{i=1}^{n} w_i \left[ \sin \pi \mu_A(x_i) + \sin \pi (1 - \mu_A(x_i)) \right] \qquad (3)$$

$$H_2(A, W) = \frac{1}{n} \sum_{i=1}^{n} w_i \cos \left( \frac{(2 \mu_A(x_i) - 1) \pi}{2} \right) \qquad (4)$$

Here, we prove that (3) and (4) are valid measures.

Theorem 1. The weighted trigonometric fuzzy information measure given by (3) is a valid measure.

Proof: To prove that the given measure is a valid measure, we shall show that (3) satisfies the four properties (P1) to (P4).

(P1) $H_1(A, W) = 0$ if and only if $A$ is a crisp set.

Evidently,

$$H_1(A, W) = \sum_{i=1}^{n} w_i \left[ \sin \pi \mu_A(x_i) + \sin \pi (1 - \mu_A(x_i)) \right] = 0$$

if and only if either $\mu_A(x_i) = 0$ or $1 - \mu_A(x_i) = 0$ for all $i = 1, 2, \ldots, n$. It implies that $H_1(A, W) = 0$ if and only if $A$ is a crisp set.

(P2) $H_1(A, W)$ is maximum if and only if $A$ is the fuzziest set, i.e. $\mu_A(x_i) = 0.5$ for all $i = 1, 2, \ldots, n$.

Differentiating (3) with respect to $\mu_A(x_i)$, we have

$$\frac{\partial H_1(A, W)}{\partial \mu_A(x_i)} = \pi w_i \left[ \cos \pi \mu_A(x_i) - \cos \pi (1 - \mu_A(x_i)) \right] \qquad (5)$$

which vanishes at $\mu_A(x_i) = 0.5$. Again differentiating (5) with respect to $\mu_A(x_i)$, we have

$$\frac{\partial^2 H_1(A, W)}{\partial \mu_A(x_i)^2} = -\pi^2 w_i \left[ \sin \pi \mu_A(x_i) + \sin \pi (1 - \mu_A(x_i)) \right] \qquad (6)$$

which is less than zero at $\mu_A(x_i) = 0.5$. Hence $H_1(A, W)$ is maximum at $\mu_A(x_i) = 0.5$ for all $i = 1, 2, 3, \ldots, n$.

Further, from (5) we see that $H_1(A, W)$ is an increasing function of $\mu_A(x_i)$ in the region $0 \le \mu_A(x_i) \le 0.5$ and a decreasing function of $\mu_A(x_i)$ in the region $0.5 \le \mu_A(x_i) \le 1$.

(P3) Let $A^*$ be a sharpened version of $A$, which means that if $0 \le \mu_A(x_i) \le 0.5$, then $\mu_{A^*}(x_i) \le \mu_A(x_i)$ for all $i = 1, 2, 3, \ldots, n$, and if $0.5 \le \mu_A(x_i) \le 1$, then $\mu_{A^*}(x_i) \ge \mu_A(x_i)$ for all $i = 1, 2, 3, \ldots, n$.
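The monotonicity that property (P3) relies on can be made explicit. The short derivation below is a sketch that assumes measure (3) has the form $H_1(A, W) = \sum_i w_i [\sin \pi \mu_A(x_i) + \sin \pi (1 - \mu_A(x_i))]$ with strictly positive weights $w_i$ (the positivity of the weights is an assumption of this sketch). It uses only the identity $\cos \pi (1 - \mu) = -\cos \pi \mu$.

```latex
\begin{align*}
\frac{\partial H_1(A,W)}{\partial \mu_A(x_i)}
  &= \pi w_i \left[ \cos \pi\mu_A(x_i) - \cos \pi\bigl(1-\mu_A(x_i)\bigr) \right] \\
  &= 2\pi w_i \cos \pi\mu_A(x_i), \\[4pt]
\frac{\partial H_1(A,W)}{\partial \mu_A(x_i)} &> 0
  \quad \text{for } 0 \le \mu_A(x_i) < 0.5, \\
\frac{\partial H_1(A,W)}{\partial \mu_A(x_i)} &< 0
  \quad \text{for } 0.5 < \mu_A(x_i) \le 1 .
\end{align*}
```

So moving any membership value away from 0.5 towards 0 or 1, which is what sharpening does, cannot increase $H_1(A, W)$.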
Since $H_1(A, W)$ is an increasing function of $\mu_A(x_i)$ in the region $0 \le \mu_A(x_i) \le 0.5$ and a decreasing function of $\mu_A(x_i)$ in the region $0.5 \le \mu_A(x_i) \le 1$, we have

$$\mu_{A^*}(x_i) \le \mu_A(x_i) \Rightarrow H_1(A^*, W) \le H_1(A, W) \text{ in } [0, 0.5] \qquad (7)$$

$$\mu_{A^*}(x_i) \ge \mu_A(x_i) \Rightarrow H_1(A^*, W) \le H_1(A, W) \text{ in } [0.5, 1]. \qquad (8)$$

Hence (7) and (8) together give

$$H_1(A^*, W) \le H_1(A, W).$$

(P4) From the definition, it is evident that

$$H_1(A^c, W) = H_1(A, W),$$

where $A^c$ is the complement of $A$ obtained by replacing $\mu_A(x_i)$ by $1 - \mu_A(x_i)$.

Hence the measure $H_1(A, W)$ proposed in (3) is a valid weighted trigonometric fuzzy information measure. Proceeding on similar lines, it can easily be proved that the weighted measure proposed in (4) is a valid measure of weighted trigonometric fuzzy information.

3. Weighted Hyperbolic Fuzzy Information Measures

In this section, we introduce the following measures of weighted hyperbolic fuzzy information, where $\beta > 0$ is a parameter:

$$H_3(A, W) = -\sum_{i=1}^{n} w_i \left[ \sinh \beta \mu_A(x_i) + \sinh \beta (1 - \mu_A(x_i)) - \sinh \beta \right] \qquad (9)$$

and

$$H_4(A, W) = -\sum_{i=1}^{n} w_i \left[ \cosh \beta \mu_A(x_i) + \cosh \beta (1 - \mu_A(x_i)) - (1 + \cosh \beta) \right] \qquad (10)$$

Firstly, we prove that (9) and (10) are valid measures.

Theorem 2. The weighted hyperbolic fuzzy information measure given by (9) is a valid measure.

Proof: To prove that the given measure is a valid measure, we shall show that (9) satisfies the four properties (P1) to (P4).

(P1) $H_3(A, W) = 0$ if and only if $A$ is a crisp set.

Evidently,

$$H_3(A, W) = -\sum_{i=1}^{n} w_i \left[ \sinh \beta \mu_A(x_i) + \sinh \beta (1 - \mu_A(x_i)) - \sinh \beta \right] = 0$$

if and only if either $\mu_A(x_i) = 0$ or $1 - \mu_A(x_i) = 0$ for all $i = 1, 2, \ldots, n$. It implies that $H_3(A, W) = 0$ if and only if $A$ is a crisp set.

(P2) $H_3(A, W)$ is maximum if and only if $A$ is the fuzziest set, i.e. $\mu_A(x_i) = 0.5$ for all $i$.

Differentiating (9) with respect to $\mu_A(x_i)$, we have

$$\frac{\partial H_3(A, W)}{\partial \mu_A(x_i)} = -\beta w_i \left[ \cosh \beta \mu_A(x_i) - \cosh \beta (1 - \mu_A(x_i)) \right] \qquad (11)$$

which vanishes at $\mu_A(x_i) = 0.5$. Again differentiating (11) with respect to $\mu_A(x_i)$, we have

$$\frac{\partial^2 H_3(A, W)}{\partial \mu_A(x_i)^2} = -\beta^2 w_i \left[ \sinh \beta \mu_A(x_i) + \sinh \beta (1 - \mu_A(x_i)) \right]$$

which is less than zero at $\mu_A(x_i) = 0.5$. Hence $H_3(A, W)$ is maximum at $\mu_A(x_i) = 0.5$ for all $i = 1, 2, 3, \ldots, n$.

Further, from (11) we see that $H_3(A, W)$ is an increasing function of $\mu_A(x_i)$ in the region $0 \le \mu_A(x_i) \le 0.5$ and a decreasing function of $\mu_A(x_i)$ in the region $0.5 \le \mu_A(x_i) \le 1$.

(P3) Let $A^*$ be a sharpened version of $A$, which means that if $0 \le \mu_A(x_i) \le 0.5$, then $\mu_{A^*}(x_i) \le \mu_A(x_i)$ for all $i = 1, 2, 3, \ldots, n$, and if $0.5 \le \mu_A(x_i) \le 1$, then $\mu_{A^*}(x_i) \ge \mu_A(x_i)$ for all $i = 1, 2, 3, \ldots, n$.

Since $H_3(A, W)$ is an increasing function of $\mu_A(x_i)$ in the region $0 \le \mu_A(x_i) \le 0.5$ and a decreasing function of $\mu_A(x_i)$ in the region $0.5 \le \mu_A(x_i) \le 1$, we have

$$\mu_{A^*}(x_i) \le \mu_A(x_i) \Rightarrow H_3(A^*, W) \le H_3(A, W) \text{ in } [0, 0.5] \qquad (12)$$

$$\mu_{A^*}(x_i) \ge \mu_A(x_i) \Rightarrow H_3(A^*, W) \le H_3(A, W) \text{ in } [0.5, 1]. \qquad (13)$$

Hence (12) and (13) together give

$$H_3(A^*, W) \le H_3(A, W).$$

(P4) From the definition, it is evident that

$$H_3(A^c, W) = H_3(A, W),$$

where $A^c$ is the complement of $A$ obtained by replacing $\mu_A(x_i)$ by $1 - \mu_A(x_i)$.

Hence the measure $H_3(A, W)$ proposed in (9) is a valid weighted hyperbolic fuzzy information measure. Similarly, it can easily be proved that the weighted measure proposed in (10) is a valid measure of weighted hyperbolic fuzzy information.

4. Principle of Maximum Weighted Fuzzy Information

In this section, we study the applications of the two newly introduced weighted trigonometric measures and the two newly introduced weighted hyperbolic measures of fuzzy information for the study of maximum weighted fuzziness by considering the following problems.

Problem 3.1. Maximize

$$H_1(A, W) = \sum_{i=1}^{n} w_i \left[ \sin \pi \mu_A(x_i) + \sin \pi (1 - \mu_A(x_i)) \right] \qquad (14)$$

subject to the fuzzy constraints

$$\sum_{i=1}^{n} \mu_A(x_i) = \alpha_0, \qquad (15)$$

$$\sum_{i=1}^{n} \mu_A(x_i) g_r(x_i) = K. \qquad (16)$$

Consider the following Lagrangian:

$$L = \sum_{i=1}^{n} w_i \left[ \sin \pi \mu_A(x_i) + \sin \pi (1 - \mu_A(x_i)) \right] - \lambda_1 \left( \sum_{i=1}^{n} \mu_A(x_i) - \alpha_0 \right) - \lambda_2 \left( \sum_{i=1}^{n} \mu_A(x_i) g_r(x_i) - K \right) \qquad (17)$$

Thus $\dfrac{\partial L}{\partial \mu_A(x_i)} = 0$ gives

$$\mu_A(x_i) = \frac{1}{\pi} \cos^{-1} \left( \frac{\lambda_1 + \lambda_2 g_r(x_i)}{2 \pi w_i} \right).$$

From (15) and (16), we get

$$\sum_{i=1}^{n} \frac{1}{\pi} \cos^{-1} \left( \frac{\lambda_1 + \lambda_2 g_r(x_i)}{2 \pi w_i} \right) = \alpha_0 \qquad (18)$$

and

$$\sum_{i=1}^{n} \frac{1}{\pi} \cos^{-1} \left( \frac{\lambda_1 + \lambda_2 g_r(x_i)}{2 \pi w_i} \right) g_r(x_i) = K \qquad (19)$$

where $\lambda_1$ and $\lambda_2$ can be obtained from (18) and (19). When $\lambda_2 = 0$, we have

$$\sum_{i=1}^{n} \frac{1}{\pi} \cos^{-1} \left( \frac{\lambda_1}{2 \pi w_i} \right) = \alpha_0 \quad \text{and} \quad \sum_{i=1}^{n} \frac{1}{\pi} \cos^{-1} \left( \frac{\lambda_1}{2 \pi w_i} \right) g_r(x_i) = K.$$

Thus we have

$$H_{1\max}(A, W) = \sum_{i=1}^{n} w_i \left[ \sin \theta_i + \sin \pi \left( 1 - \frac{\theta_i}{\pi} \right) \right] \quad \text{or} \quad H_{1\max}(A, W) = 2 \sum_{i=1}^{n} w_i \sin \theta_i,$$

where $\cos \theta_i = \dfrac{\lambda_1 + \lambda_2 g_r(x_i)}{2 \pi w_i}$. Hence $H_{1\max}(A, W) = \sum_{i=1}^{n} f(\theta_i, w_i)$, where

$$f(\theta_i, w_i) = 2 w_i \sin \theta_i, \quad f'(\theta_i, w_i) = 2 w_i \cos \theta_i \quad \text{and} \quad f''(\theta_i, w_i) = -2 w_i \sin \theta_i < 0 \qquad (20)$$

for $0 < \theta_i < \pi$. Thus (20) shows that $H_{1\max}(A, W)$ is concave.

Problem 3.2. In this problem, we apply another weighted measure to study the maximum weighted fuzzy information principle. For this, we consider the following problem: Maximize

$$H_2(A, W) = \frac{1}{n} \sum_{i=1}^{n} w_i \cos \left( \frac{(2 \mu_A(x_i) - 1) \pi}{2} \right) \qquad (21)$$

subject to the set of fuzzy constraints given in (15) and (16). Consider the following Lagrangian:

$$L = \frac{1}{n} \sum_{i=1}^{n} w_i \cos \left( \frac{(2 \mu_A(x_i) - 1) \pi}{2} \right) - \lambda_1 \left( \sum_{i=1}^{n} \mu_A(x_i) - \alpha_0 \right) - \lambda_2 \left( \sum_{i=1}^{n} \mu_A(x_i) g_r(x_i) - K \right) \qquad (22)$$

Thus $\dfrac{\partial L}{\partial \mu_A(x_i)} = 0$ gives

$$\mu_A(x_i) = \frac{1}{2} - \frac{1}{\pi} \sin^{-1} \left( \frac{n (\lambda_1 + \lambda_2 g_r(x_i))}{\pi w_i} \right).$$

Applying the fuzzy constraints given in (15) and (16), we get

$$\sum_{i=1}^{n} \left[ \frac{1}{2} - \frac{1}{\pi} \sin^{-1} \left( \frac{n (\lambda_1 + \lambda_2 g_r(x_i))}{\pi w_i} \right) \right] = \alpha_0$$

and

$$\sum_{i=1}^{n} \left[ \frac{1}{2} - \frac{1}{\pi} \sin^{-1} \left( \frac{n (\lambda_1 + \lambda_2 g_r(x_i))}{\pi w_i} \right) \right] g_r(x_i) = K.$$

Now when $\lambda_2 = 0$, we have

$$\sum_{i=1}^{n} \left[ \frac{1}{2} - \frac{1}{\pi} \sin^{-1} \left( \frac{n \lambda_1}{\pi w_i} \right) \right] = \alpha_0 \quad \text{and} \quad \sum_{i=1}^{n} \left[ \frac{1}{2} - \frac{1}{\pi} \sin^{-1} \left( \frac{n \lambda_1}{\pi w_i} \right) \right] g_r(x_i) = K.$$

Thus we have

$$H_{2\max}(A, W) = \frac{1}{n} \sum_{i=1}^{n} w_i \cos \frac{\varphi_i}{2} \qquad (23)$$

where $\varphi_i = -2 \sin^{-1} \left( \dfrac{n (\lambda_1 + \lambda_2 g_r(x_i))}{\pi w_i} \right)$. Hence $H_{2\max}(A, W) = \sum_{i=1}^{n} f(\varphi_i, w_i)$, where

$$f(\varphi_i, w_i) = \frac{w_i}{n} \cos \frac{\varphi_i}{2}, \quad f'(\varphi_i, w_i) = -\frac{w_i}{2n} \sin \frac{\varphi_i}{2} \quad \text{and} \quad f''(\varphi_i, w_i) = -\frac{w_i}{4n} \cos \frac{\varphi_i}{2} < 0. \qquad (24)$$

Thus (24) shows that $H_{2\max}(A, W)$ is a concave function.

Problem 3.3. In this problem, we maximize the weighted hyperbolic fuzzy information measure introduced in (9) under the set of fuzzy constraints given in (15) and (16). Consider the following Lagrangian:

$$L = -\sum_{i=1}^{n} w_i \left[ \sinh \beta \mu_A(x_i) + \sinh \beta (1 - \mu_A(x_i)) - \sinh \beta \right] - \lambda_1 \left( \sum_{i=1}^{n} \mu_A(x_i) - \alpha_0 \right) - \lambda_2 \left( \sum_{i=1}^{n} \mu_A(x_i) g_r(x_i) - K \right) \qquad (25)$$

Differentiating (25) with respect to $\mu_A(x_i)$ and equating to zero, we get

$$\mu_A(x_i) = \frac{1}{\beta} \log \left[ \frac{-c + \sqrt{c^2 - 2 (1 - \cosh \beta)}}{1 - \cosh \beta + \sinh \beta} \right], \quad \text{where } c = \frac{\lambda_1 + \lambda_2 g_r(x_i)}{\beta w_i}.$$

Applying the constraints (15) and (16), we have

$$\sum_{i=1}^{n} \frac{1}{\beta} \log \left[ \frac{-c + \sqrt{c^2 - 2 (1 - \cosh \beta)}}{1 - \cosh \beta + \sinh \beta} \right] = \alpha_0$$

and

$$\sum_{i=1}^{n} \frac{1}{\beta} \log \left[ \frac{-c + \sqrt{c^2 - 2 (1 - \cosh \beta)}}{1 - \cosh \beta + \sinh \beta} \right] g_r(x_i) = K.$$

When $\lambda_2 = 0$, the same two equations hold with $c = \dfrac{\lambda_1}{\beta w_i}$. Thus we get

$$H_{3\max}(A, W) = -\sum_{i=1}^{n} w_i \left[ \sinh \beta \gamma_i + \sinh \beta (1 - \gamma_i) - \sinh \beta \right] \qquad (26)$$

where $\gamma_i = \dfrac{1}{\beta} \log \left[ \dfrac{-c + \sqrt{c^2 - 2 (1 - \cosh \beta)}}{1 - \cosh \beta + \sinh \beta} \right]$. Hence $H_{3\max}(A, W) = \sum_{i=1}^{n} f(\gamma_i, w_i)$, where

$$f(\gamma_i, w_i) = -w_i \left[ \sinh \beta \gamma_i + \sinh \beta (1 - \gamma_i) - \sinh \beta \right],$$

$$f'(\gamma_i, w_i) = -\beta w_i \left[ \cosh \beta \gamma_i - \cosh \beta (1 - \gamma_i) \right]$$

and

$$f''(\gamma_i, w_i) = -\beta^2 w_i \left[ \sinh \beta \gamma_i + \sinh \beta (1 - \gamma_i) \right].$$

Thus $H''_{3\max}(A, W) < 0$. Hence (26) shows that $H_{3\max}(A, W)$ is concave.

Problem 3.4. Here, we maximize another weighted hyperbolic fuzzy information measure introduced in (10) under the set of fuzzy constraints (15) and (16). The corresponding Lagrangian is given by

$$L = -\sum_{i=1}^{n} w_i \left[ \cosh \beta \mu_A(x_i) + \cosh \beta (1 - \mu_A(x_i)) - (1 + \cosh \beta) \right] - \lambda_1 \left( \sum_{i=1}^{n} \mu_A(x_i) - \alpha_0 \right) - \lambda_2 \left( \sum_{i=1}^{n} \mu_A(x_i) g_r(x_i) - K \right) \qquad (27)$$

Differentiating (27) with respect to $\mu_A(x_i)$ and equating to zero, we get

$$\mu_A(x_i) = \frac{1}{\beta} \log \left[ \frac{-d + \sqrt{d^2 + 2 (1 + \cosh \beta)}}{1 + \cosh \beta - \sinh \beta} \right], \quad \text{where } d = \frac{\lambda_1 + \lambda_2 g_r(x_i)}{\beta w_i}.$$

Applying the constraints (15) and (16), we get

$$\sum_{i=1}^{n} \frac{1}{\beta} \log \left[ \frac{-d + \sqrt{d^2 + 2 (1 + \cosh \beta)}}{1 + \cosh \beta - \sinh \beta} \right] = \alpha_0$$

and

$$\sum_{i=1}^{n} \frac{1}{\beta} \log \left[ \frac{-d + \sqrt{d^2 + 2 (1 + \cosh \beta)}}{1 + \cosh \beta - \sinh \beta} \right] g_r(x_i) = K.$$

When $\lambda_2 = 0$, the same two equations hold with $d = \dfrac{\lambda_1}{\beta w_i}$. Thus we have

$$H_{4\max}(A, W) = -\sum_{i=1}^{n} w_i \left[ \cosh \beta \delta_i + \cosh \beta (1 - \delta_i) - (1 + \cosh \beta) \right]$$

where $\delta_i = \dfrac{1}{\beta} \log \left[ \dfrac{-d + \sqrt{d^2 + 2 (1 + \cosh \beta)}}{1 + \cosh \beta - \sinh \beta} \right]$. Hence $H_{4\max}(A, W) = \sum_{i=1}^{n} f(\delta_i, w_i)$, where

$$f(\delta_i, w_i) = -w_i \left[ \cosh \beta \delta_i + \cosh \beta (1 - \delta_i) - (1 + \cosh \beta) \right],$$

$$f'(\delta_i, w_i) = -\beta w_i \left[ \sinh \beta \delta_i - \sinh \beta (1 - \delta_i) \right]$$

and

$$f''(\delta_i, w_i) = -\beta^2 w_i \left[ \cosh \beta \delta_i + \cosh \beta (1 - \delta_i) \right] < 0.$$

Thus $H''_{4\max}(A, W) < 0$, which shows that $H_{4\max}(A, W)$ is concave.

5. Conclusion

The development of new parametric and non-parametric fuzzy measures of information can help to reduce uncertainty, which in turn helps to increase the efficiency of the process. Although many information measures have been developed, there is still scope for better measures that will find applications in a variety of fields. In this paper, we have developed two measures of weighted trigonometric fuzzy information and two measures of weighted hyperbolic fuzzy information, and have studied their applications in optimization principles.

References

1. Belis, M. and Guiasu, S. 1968. A quantitative-qualitative measure of information in cybernetic systems. IEEE Trans. Inform. Theory, 14, 593-594.
2. De Luca, A. and Termini, S. 1972. A definition of non-probabilistic entropy in setting of fuzzy set theory. Inform. Contr., 20, 301-312.
3. Herniter, J. D. 1973. An entropy model of brand purchase behaviour. J. Marker Rev., 11, 20-29.
4. Hooda, D. S. and Mishra, A. R. 2014. On trigonometric fuzzy information measures. (Communicated).
5. Jaynes, E. T. 1957. Information theory and statistical mechanics. Phys. Rev., 106, 620-630.
6. Kapur, J. N. and Kesavan, H. K. 1987. The generalized maximum entropy principle (with applications). Standard educational press.
7. Prakash, O., Sharma, P. K. and Mahajan, R. 2008. New measures of weighted fuzzy entropy and their applications for the study of maximum weighted fuzzy entropy principle. Information Sciences, 178, 2389-2395.
8. Shannon, C. E. 1948. A mathematical theory of communication. Bell Syst. Tech. J., 27, 379-423 & 623-659.
9. Zadeh, L. A. 1965. Fuzzy sets. Inform. Contr., 8, 338-353.
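Numerical check of properties (P1) to (P4). The validity properties proved for the four proposed measures can be spot-checked numerically. The sketch below is illustrative only and rests on assumptions: it takes measures (3) and (4) in the trigonometric forms with argument $\pi \mu_A(x_i)$, takes measures (9) and (10) as the negated hyperbolic sums with a positive parameter written here as `beta`, and uses strictly positive weights. The function names (`h1` to `h4`, `sharpen`, `check_properties`) and the sample data are hypothetical choices of this sketch, and a check on one membership vector is not a proof.

```python
import math


def h1(mu, w):
    """Measure (3): sum of w_i [sin(pi mu_i) + sin(pi (1 - mu_i))]."""
    return sum(wi * (math.sin(math.pi * m) + math.sin(math.pi * (1 - m)))
               for m, wi in zip(mu, w))


def h2(mu, w):
    """Measure (4): (1/n) sum of w_i cos((2 mu_i - 1) pi / 2)."""
    return sum(wi * math.cos((2 * m - 1) * math.pi / 2)
               for m, wi in zip(mu, w)) / len(mu)


def h3(mu, w, beta=1.0):
    """Measure (9), assumed form: -sum w_i [sinh(b mu) + sinh(b (1 - mu)) - sinh(b)]."""
    return -sum(wi * (math.sinh(beta * m) + math.sinh(beta * (1 - m)) - math.sinh(beta))
                for m, wi in zip(mu, w))


def h4(mu, w, beta=1.0):
    """Measure (10), assumed form: -sum w_i [cosh(b mu) + cosh(b (1 - mu)) - (1 + cosh(b))]."""
    return -sum(wi * (math.cosh(beta * m) + math.cosh(beta * (1 - m)) - (1 + math.cosh(beta)))
                for m, wi in zip(mu, w))


def sharpen(mu, t=0.5):
    """Move each membership value a fraction t of the way to its nearest crisp value."""
    return [m * (1 - t) if m <= 0.5 else m + (1 - m) * t for m in mu]


def check_properties(measure, mu, w, tol=1e-9):
    """Spot-check (P1)-(P4) for one membership vector mu and weight vector w."""
    n = len(mu)
    crisp = [float(i % 2) for i in range(n)]   # alternating 0 and 1
    fuzziest = [0.5] * n
    complement = [1 - m for m in mu]
    value = measure(mu, w)
    return {
        "P1_zero_on_crisp": abs(measure(crisp, w)) < tol,
        "P2_max_at_half": measure(fuzziest, w) >= value - tol,
        "P3_sharpening": measure(sharpen(mu), w) <= value + tol,
        "P4_complement": abs(measure(complement, w) - value) < tol,
    }


if __name__ == "__main__":
    mu = [0.2, 0.5, 0.9, 0.35]    # hypothetical membership values
    w = [1.0, 2.0, 0.5, 1.5]      # hypothetical positive weights
    for name, measure in {"H1": h1, "H2": h2, "H3": h3, "H4": h4}.items():
        print(name, check_properties(measure, mu, w))
```

The check uses only the standard library so that it can be run as is. Changing `beta` in `h3` and `h4` lets the reader confirm that the properties do not depend on the particular positive value chosen.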