AISB11_Abstracts - Department of Computing

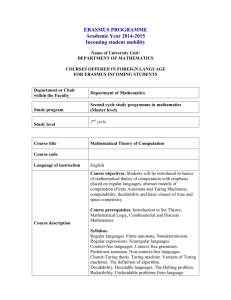

Abstracts

MS no. 1
Author / Institution: Margaret Boden
Title: Computational creativity
Abstract: N/A
Reviewer 1: N/A
Reviewer 2: N/A

MS no. 2
Author / Institution: Peter Burton, Honorary Fellow, ACU (Canberra)
Title: Cognitive Architecture: Issues of Control and Effective Computation
Abstract: Biologically inspired cognitive architectures are being developed to modularise and emulate aspects of human cognition, but they do not yet seem to question the implicit presumption of universality in the application or scope of 'effective' (syntactically symbolic) computation in their models of natural human intelligence. For example, my Cognitive System Theory requires a 2D 'balance-board' steering control for its model of the self, a kind of control more 'intuitive' than syntactic or 'rational'. Therefore, the paper will reconsider all aspects of control within embodied cognitive systems, as this control relates particularly to issues of autonomy, agency and the control associated with the self-model. These issues will be considered from multiple perspectives, ranging from exadaptive control theory as a way of approaching the emergence of human intelligence, to considering what is yet to be embodied to reach a hominid quality of (self-aware) intelligence in robotic systems, particularly those already capable of inductively learning natural language use.
Reviewer 1: Ian Sillitoe
Reviewer 2: David Gamez

MS no. 3
Author / Institution: Nir Fresco, PhD candidate, School of History and Philosophy, UNSW (Sydney)
Title: The Information Processing Account of Computation
Abstract: There is a widespread tendency in cognitive science to equate computation (in particular digital computation) with information processing. It is hard to find a comprehensive, explicit account of information processing that explains concrete digital computation (rather than mathematical computability). Still, it is not uncommon to find descriptions of digital computers as information processing systems proper. Such descriptions take it for granted that digital computation presupposes information processing. The Information Processing account seems like a natural candidate to explain digital computation. After all, digital computers traffic in data. But when 'information' comes under scrutiny, the standard account becomes a less obvious candidate. 'Information' may be interpreted semantically and non-semantically, and its interpretation has direct implications for Information Processing as an account of digital computation. This paper deals with the implications of these interpretations for explaining concrete digital computation in virtue of information processing. To begin with, I survey Shannon's classic theory of information, and then examine how 'information' is used in computer science. In the subsequent section, I evaluate the implications of how 'information' is interpreted for explaining concrete computation. The key requirements for a physical system to compute, on an Information Processing account, are then fleshed out, as well as some of the limitations of such an account. Any Information Processing account must embrace an algorithm-theoretic apparatus to be a plausible candidate for explaining concrete digital computation.
Reviewer 1: Hector Zenil
Reviewer 2: Steve Torrance

MS no. 4
Author / Institution: Leighton Evans, Department of Political and Cultural Studies (Swansea)
Title: Object-oriented philosophy – the nature of the relations between humans and computational objects
Abstract: I argue that the category of equipment denoted computational objects has, by virtue of the unique presence of those objects in the world as permanently withdrawn from full disclosure of operation due to their dependence on computational code, a unique manner of causal interaction with users that can only be described as vicarious. As computational devices become increasingly ubiquitous as tools for managing and navigating the human world, this vicarious relationship becomes important in understanding how this technology affects the phenomenological experience of being in the world as it is, alongside computational objects, and how orientation towards the world can be described as computational.
Reviewer 1: Steve Russ
Reviewer 2: Mark Sprevak

MS no. 5
Author / Institution: Raffaela Giovagnoli, Pontifical Lateran University (Rome)
Title: Computational Rationality and Religious Beliefs
Abstract: Some formal aspects of human reasoning, as Brandom shows in the second chapter of Between Saying and Doing (Oxford University Press, Oxford, 2008), can be elaborated by a Turing Machine (TM), namely by a machine that simulates human reasoning. But what can be elaborated neither by Artificial Intelligence nor by Computational Intelligence is the content of beliefs. I would like to present an example of the impossibility of elaborating the content of human beliefs: religious beliefs. I'll discuss the following points:
1. I explain the phenomenon of "bootstrapping" in the pragmatic context, which shows how new practices and abilities, characterized by a new vocabulary, emerge from basic practices described by a "metavocabulary". The elaboration of certain aspects of practices and abilities by a Turing Machine is an example of pragmatic bootstrapping.
2. I clarify why human beliefs (in our case, religious beliefs) cannot be completely elaborated either by Artificial Intelligence or by logic through material inferences embedded in conditionals, as they have peculiar contents.
Reviewer 1: David Gamez
Reviewer 2: Raymond Turner

MS no. 6
Author / Institution: Jiri Wiedermann, Institute of Computer Science (Academy of Sciences of the Czech Republic)
Title: Beyond Singularity
Abstract: Using the contemporary view of computing exemplified by recent models and results from non-uniform complexity theory, we investigate the computational power of cognitive systems. We show that, in accordance with the so-called Extended Turing Machine Paradigm, such systems can be seen as non-uniform evolving interactive systems whose computational power surpasses that of classical Turing machines. Our results show that there is an infinite hierarchy of cognitive systems. Within this hierarchy, there are systems reaching and exceeding the human intelligence level. We will argue that, formally, from a computational viewpoint, human-level intelligence is upper-bounded by the $\Sigma_2$ class of the arithmetical hierarchy. Within this class, there are problems whose complexity grows faster than any computable function and, therefore, not even exponential growth of computational power can help in solving such problems.
Reviewer 1: Murray Shanahan
Reviewer 2: Kevin Magill

MS no. 7
Author / Institution: Rafal Urbaniak, Centre for Logic and Philosophy of Science, Ghent University, Belgium
Title: Randomness, Computability, and Abstract Objects
Abstract: Olszewski [21] claims that the Church-Turing thesis can be used in an argument against platonism in the philosophy of mathematics. The key step of his argument employs an example of a supposedly effectively computable but not Turing-computable function. I argue that the process he describes is not an effective computation, and that the argument relies on the illegitimate conflation of effective computability with there being a way to find out.
Reviewer 1: John Preston
Reviewer 2: Barry Cooper

MS no. 8
Author / Institution: Florent Franchette, PhD student, Paris, France
Title: Why is it necessary to build a physical model of hypercomputation?
Abstract: A model of hypercomputation is able to compute at least one function not computable by a Turing Machine, and its power comes from the absence of particular restrictions on the computation. Indeed, some researchers argue that it is possible to build a physical model of hypercomputation called the "accelerating Turing Machine". But for what purpose would these researchers try to build a physical model of hypercomputation when they already have mathematical models more powerful than the Turing Machine? In my opinion, the computational gain of the accelerating Turing Machine is not free: this model also loses the possibility for a human to access the computation result. To define this feature, I'll propose a new constraint called the "access constraint", stating that a human can access the computation result regardless of computation resources. I'll show that the Turing Machine meets this constraint, unlike the accelerating Turing Machine, and I'll argue that building a physical model of the latter is the solution for meeting the access constraint.
Reviewer 1: Barry Cooper
Reviewer 2: Hector Zenil
Note: Philosophy of computing/hypercomputation

MS no. 9
Author / Institution: William (Bill) Duncan, Graduate student, Buffalo
Title: Using Ontological Dependence to Distinguish Between Hardware and Software
Abstract: The distinction between hardware and software is an ongoing topic in the philosophy of computer science. We often think of them as distinct entities, but upon examination it becomes unclear exactly what distinguishes the two. Furthermore, James Moor and Peter Suber have cast doubt on the idea that there is a worthwhile distinction. Moor has argued that the distinction should not be given much ontological significance. Suber has argued that hardware is software. I find both these positions implausible, for they ignore more general ontological distinctions that exist between hardware and software. In this paper, I examine the arguments of Moor and Suber, and show that, although their arguments may be valid, they draw implausible conclusions. The ontological perspectives on which their arguments are based are too narrow, and the ontological distinctions used to motivate their arguments are not applicable to reality in general. I then argue that distinctions do emerge between hardware and software when they are considered using ontological distinctions that have wider applicability: a piece of computational hardware is an ontologically independent entity, whereas a software program is an ontologically dependent entity.
Reviewer 1: Ian Sillitoe
Reviewer 2: Murray Shanahan

MS no. 10
Author / Institution: Michael Nicolaidis, TIMA Laboratory Grenoble, France
Title: On the State of Superposition and the Parallel or not Parallel Nature of Quantum Computing: a controversy-raising point of view
Abstract: In this paper we use ideas coming from the field of computing to propose a model of quantum systems which gets rid of the concept of superposition while reproducing the observable behaviour of quantum systems as described by quantum mechanics. On the basis of this model we contest the interpretation of quantum mechanics based on so-called quantum superposition, and the related parallel-computing interpretation of quantum algorithms. The goal of this presentation is to bring up for discussion at the conference ontological arguments against so-called quantum parallelism, which are mutually supported by recent advances in the complexity analysis of quantum algorithms.
Reviewer 1: Steve Russ
Reviewer 2: Tillmann Vierkant

MS no. 11
Author / Institution: Paul Schweizer, Edinburgh, Informatics
Title: Multiple Realization and the Computational Mind
Abstract: The paper examines some central issues concerning the Computational Theory of Mind (CTM) and the notion of instantiating a computational formalism in the physical world. In the end, I argue that Searle's view that computation is not an intrinsic property of physical systems, but rather an observer-relative attribution, is correct, and that for interesting and powerful cases, realization is only ever a matter of degree. And while this may fatally undermine a computational explanation of conscious experience, it does not rule out the possibility of a scientifically justified account of propositional attitude states in computational terms.
Reviewer 1: Kevin Magill
Reviewer 2: Susan Stuart

MS no. 12
Author / Institution: Tom Froese
Title: From Artificial Life to Artificial Embodiment: Using human-computer interfaces to investigate the embodied mind 'as-it-could-be' from the first-person perspective
Abstract: There is a growing community of scientists who are interested in developing a systematic understanding of the experiential aspects of mind. We argue that this shift from cognitive science to consciousness science presents a novel challenge to the fields of AI, robotics and related synthetic approaches. While these fields have traditionally formed the central foundation of cognitive science and led the way toward new views of cognition as embodied, situated and dynamical, their role in the current turn toward the experiential aspects of mind remains uncertain. We propose that one way of dealing with the challenge is to design artificial systems that put human observers inside novel kinds of sensorimotor loops. These technological devices can then be used as tools to investigate the embodied mind 'as-it-could-be' from the first-person perspective. We illustrate this methodology of artificial embodiment by drawing on our recent research in sensory substitution, virtual reality and large-scale interactive installations.
Reviewer 1: Mark Bishop
Reviewer 2: John Preston

MS no. 13
Author / Institution: Li Zhang, School of Computing, Univ. of Teesside
Title: Contextual Affect Modeling and Detection from Open-ended Text-based Dramatic Interaction
Abstract: Real-time contextual affect detection from open-ended text-based dialogue is challenging but essential for building effective intelligent user interfaces. In this paper, we focus on context-based affect detection using emotion modeling in personal and social communication contexts. Bayesian networks are used to predict the improvisational mood of a particular character, and supervised and unsupervised neural networks are employed, respectively, to deduce the emotional implication in the most related interaction context and the emotional influence towards the current speaking character. Evaluation results of our contextual affect detection using the above approaches are provided. Generally, our new developments outperform previous attempts at contextual affect analysis. Our work contributes to the conference themes of sentiment analysis and machine understanding.
Reviewer 1: Raymond Turner
Reviewer 2: Slawomir Nasuto

MS no. 14
Author / Institution: Kevin Magill, Philosophy, Wolverhampton; Yasemin J. Erden, CBET/Philosophy, St Mary's University College
Title: Autonomy and desire in machines and cognitive agent systems
Abstract: The development of cognitive agent systems relies on theories of agency, within which the concept of desire is key. Indeed, in the quest to develop increasingly autonomous cognitive agent systems, desire has played a significant role. Yet we maintain that insufficient attention has been given to the analysis and clarification of desire as a complex concept. Accordingly, in this paper we will discuss some key philosophical accounts of the nature of desire, including what distinguishes it from other mental and motivational states. We will then draw on these theories in order to investigate the role, definition and adequacy of concepts of desire within applied theoretical models of agency and agent systems.
Reviewer 1: John Barnden
Reviewer 2: Mark Sprevak

MS no. 15
Author / Institution: Mark Bishop & Mohammad Majid al-Rifaie, Goldsmiths
Title: Creativity?
Reviewer 1: Yasemin Erden
Reviewer 2: Kevin? Nasuto? Colton?

MS no. 16
Author / Institution: Simon Colton & Alison Pease
Title: Computational Creativity
Reviewer 1: Yasemin Erden
Reviewer 2: Mark Bishop

Programme Committee (for reviewing):
Mark Bishop, Steve Russ, Kevin Magill, Yasemin J. Erden
1. Prof. Ian Sillitoe (Wolves; robotics)
2. Dr David Gamez (Essex; computing/philosophy)
3. Dr Hector Zenil (Wolfram Research; computer science)
4. Dr Slawek (Slawomir) Nasuto (Reading; cybernetics) (only one sent for review, without a request for just one)
5. Professor Murray Shanahan (Imperial; computing)
6. Professor Raymond Turner (Essex; logic/computation)
7. Professor Steve Torrance (Sussex; informatics/philosophy), consciousness symposium organiser! (one paper only)
8. Dr John Preston (Reading; philosophy/AI)
9. Professor John Barnden (Birmingham; AI/metaphor) (only one sent for review, without a request for just one)
10. Professor Barry Cooper (Leeds; mathematics)
11. Dr Mark Sprevak (Edinburgh; philosophy of mind etc.)
12. Dr Susan Stuart (Glasgow; philosophy/technology) (one paper only if possible, two maximum)
13. Dr Tillmann Vierkant (Edinburgh; philosophy of mind) (one paper only if possible, two maximum)