paper

advertisement

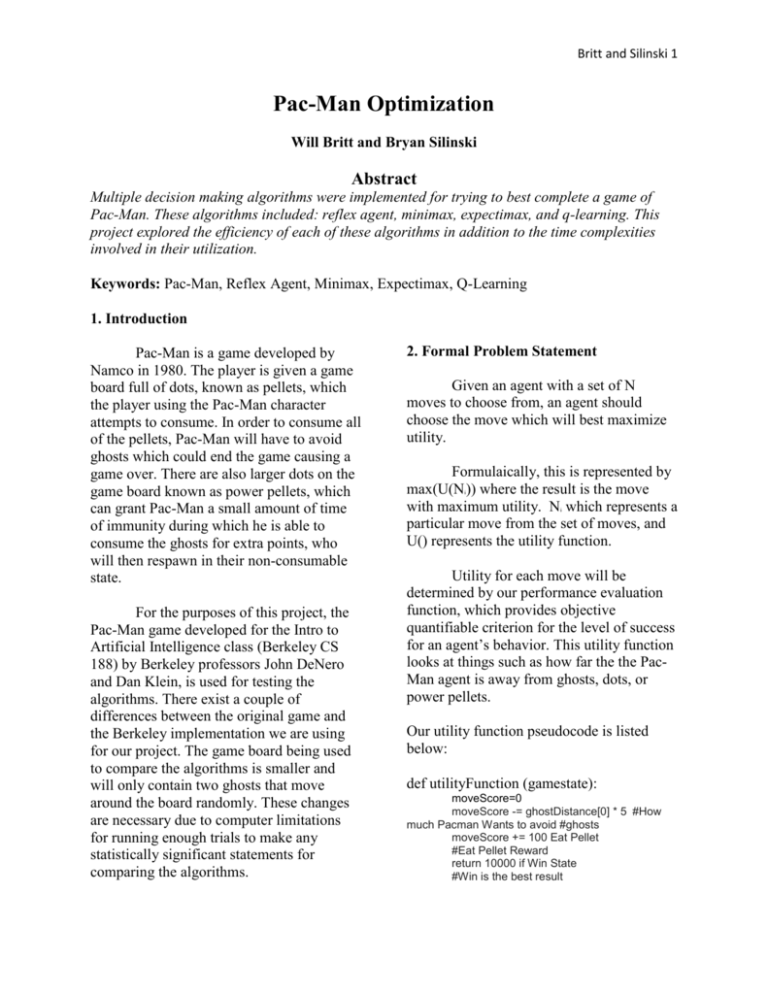

Britt and Silinski 1

Pac-Man Optimization

Will Britt and Bryan Silinski

Abstract

Multiple decision-making algorithms were implemented in an attempt to best complete a game of Pac-Man. These algorithms included: reflex agent, minimax, expectimax, and q-learning. This project explored the efficiency of each of these algorithms in addition to the time complexities involved in their use.

Keywords: Pac-Man, Reflex Agent, Minimax, Expectimax, Q-Learning

1. Introduction

Pac-Man is a game developed by Namco in 1980. The player is given a game board full of dots, known as pellets, which the player, controlling the Pac-Man character, attempts to consume. To consume all of the pellets, Pac-Man has to avoid ghosts, which can end the game. There are also larger dots on the game board known as power pellets, which grant Pac-Man a short period of immunity during which he can consume the ghosts for extra points; consumed ghosts then respawn in their non-consumable state.

For the purposes of this project, the Pac-Man game developed for the Intro to Artificial Intelligence class (Berkeley CS 188) by Berkeley professors John DeNero and Dan Klein is used for testing the algorithms. There are a couple of differences between the original game and the Berkeley implementation used in this project: the game board used to compare the algorithms is smaller, and it contains only two ghosts, which move around the board randomly. These changes were necessary because of computing limitations, so that enough trials could be run to make statistically significant comparisons between the algorithms.

2. Formal Problem Statement

Given a set of possible moves, an agent should choose the move that maximizes utility. Formally, the chosen move is

$$\arg\max_{i} U(N_i)$$

where $N_i$ represents a particular move from the set of moves and $U(\cdot)$ represents the utility function.

Utility for each move is determined by our performance evaluation function, which provides an objective, quantifiable criterion for the level of success of an agent's behavior. This utility function considers factors such as how far the Pac-Man agent is from ghosts, dots, or power pellets. Our utility function pseudocode is listed below:

```
def utilityFunction(gamestate):
    moveScore = 0
    moveScore -= ghostDistance[0] * 5     # how much Pac-Man wants to avoid ghosts
    moveScore += 100 if Eat Pellet        # eat-pellet reward
    return 10000 if Win State             # a win is the best result
    moveScore += 100 if Powerpellet       # eat-power-pellet reward
    moveScore += random.random() * 10     # avoid a flip-flop state when move scores are equal
    return moveScore
```

These calculations live inside a function that will be called utilityFunction for the rest of this paper. utilityFunction requires a game state parameter. A game state contains all the information from the game at that moment. Because this project is concerned with having Pac-Man choose a move, the function is passed potential game states in order to choose the best one. The moveScore variable is the calculated score of a move; it is adjusted based on data from the game state, such as the distance from Pac-Man to the ghosts or whether Pac-Man eats a pellet in that state. The returned moveScore is the utility of that particular move.

3. Methods

3.1 Reflex Agent

A reflex agent makes decisions based only on its current perception of a situation, not on past or future perceptions. For a reflex agent to reach a decision, it must be given rules or criteria with which to evaluate the potential decisions. In a general sense, a reflex agent works in three stages:

1. The potential states of the current environment are perceived.
2. The states are evaluated by the rules or criteria supplied for the decision process.
3. Based on the evaluation, a decision is made.
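The three stages above can be sketched in Python as follows. This is a minimal, hypothetical illustration rather than the authors' code: the perceived successor states are assumed to arrive as a dictionary from move name to state, and `utility` stands in for utilityFunction. Each state is scored exactly once, which matters when the utility includes random tie-breaking noise.

```python
from typing import Callable, Dict, Optional, TypeVar

State = TypeVar("State")


def reflex_choose(successors: Dict[str, State],
                  utility: Callable[[State], float]) -> Optional[str]:
    """Return the move whose successor state has the highest utility.

    Stage 1 (perceive): `successors` maps each legal move to its resulting state.
    Stage 2 (evaluate): each state is scored once by `utility`.
    Stage 3 (decide): the best-scoring move is returned (None if there are no moves).
    The loop runs once per legal move, so the cost is O(n).
    """
    best_move: Optional[str] = None
    best_score = float("-inf")
    for move, state in successors.items():
        score = utility(state)  # evaluate once; a noisy utility must not be called twice
        if score > best_score:
            best_move, best_score = move, score
    return best_move


# Hypothetical usage: states are plain numbers and the utility is the identity.
print(reflex_choose({"North": 3.0, "East": 7.5, "Stop": 1.0}, lambda s: s))  # East
```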
When this general model is applied to the Pac-Man problem, the decision to be made is the move (North, South, East, West, or Stop) that Pac-Man takes. To decide between these moves, the utilityFunction described previously was used. In general, the reflex agent runs in O(n) time, where n is the number of moves possible from a game state; each of these is run through the evaluation function before a move is selected. For the Pac-Man problem, the evaluation function runs at most five times per decision, when Pac-Man is in the middle of an intersection.

Pseudocode for the reflex agent for the Pac-Man problem:

```
maxScore = -infinity
for move in PacManLegalMoves:
    score = utilityFunction(successor state of move)   # evaluate once: the score includes random noise
    if score > maxScore:
        maxScore = score
        currentMoveChosen = move
```

From the pseudocode above, one can see that the loop responsible for choosing the move runs once for each move Pac-Man has available, so the time complexity is O(n).

3.2 Minimax Agent

Minimax is an algorithm often used in two-player games in which the two opponents work toward opposite goals. Minimax takes into account future moves by both the player and the opponent in order to choose the best move. Minimax is applied to "full information games," in which a player can calculate all possible moves of the adversary. For example, in a game of chess a player would be able to list all possible outcomes of the opponent's next move based on the game pieces available. The minimax algorithm also assumes that the opponent will always make the choice that is worst for the player; that is, it plans for the worst-case scenario.

Implementing a minimax algorithm involves treating the game on a turn-by-turn basis. In general, minimax for a two-player situation looks like this:

1. If the game is over, return the score from the player's point of view.
2. Else, get game states for every possible move for whichever player's turn it is.
3. Create a list of scores from those states using the utilityFunction.
4. If the turn is the opponent's, return the minimum score from the score list.
5. If the turn is the player's, return the maximum score from the score list.

For our problem, a maximum of five moves would be considered (North, South, East, West, and Stop) when Pac-Man is in the middle of a 4-way intersection. The utilities of these moves are calculated and the move with the maximum utility is selected. For every ghost turn, a maximum of four moves would be considered (North, South, East, and West) when the ghost is in the middle of a 4-way intersection, since ghosts cannot stop. These moves are evaluated by their utility, and since the ghosts are Pac-Man's adversaries, the minimum utility is taken. This algorithm is often implemented recursively, as ours was, and branches out as deeper future game states are explored. The algorithm chooses the move that, according to the game tree, would best benefit the player.

Ideally, the minimax algorithm would search through an entire game's worth of moves before choosing one for the player. However, exploring the complete game tree is only feasible for small games like tic-tac-toe because of the space and time costs of the algorithm. For larger games, such as Pac-Man, it is more practical to limit the depth. The time complexity of the minimax algorithm is O(b^d), where b^d represents the number of game states sent to the utility function. b, referred to as the branching factor, represents the number of game states per depth; in Pac-Man this would be 3-5 Pac-Man successor states (depending on location). d refers to the number of depths explored.
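To make the O(b^d) growth concrete, the sketch below computes a worst-case count of states reaching the utility function. It assumes that one depth level is one Pac-Man move followed by one move per ghost, and it uses the maximum move counts from the text (five Pac-Man moves, four moves per ghost, two ghosts), so each level multiplies the count by at most 5 × 4² = 80. These are upper bounds; in corridors far fewer moves are legal.

```python
def worst_case_leaves(depth: int, pacman_moves: int = 5,
                      ghost_moves: int = 4, ghosts: int = 2) -> int:
    """Upper bound on the states sent to the utility function: b ** d.

    Assumes one depth level = one Pac-Man move followed by one move per ghost,
    so the per-level branching factor is b = pacman_moves * ghost_moves ** ghosts.
    """
    b = pacman_moves * ghost_moves ** ghosts
    return b ** depth


for d in (2, 3, 4):
    print(d, worst_case_leaves(d))
# 2 6400
# 3 512000
# 4 40960000
```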
Minimax pseudocode for Pac-Man:

```
def value(state):
    if the state is a terminal state or the depth limit is reached:
        return the state's utility
    if the next agent is max (Pac-Man):
        return max-value(state)
    if the next agent is min (a ghost):
        return min-value(state)

def max-value(state):
    initialize v = -infinity
    for each successor of state:
        v = max(v, value(successor))
    return v

def min-value(state):
    initialize v = +infinity
    for each successor of state:
        v = min(v, value(successor))
    return v
```

Looking at the pseudocode for minimax, one can see that the branching factor, b, in the big-O notation comes from the successors of each game state, which are the potential next moves for either Pac-Man or the ghosts, depending on the agent. Depth, d, is handled by the recursion, which exits upon reaching a terminal state or the required depth.

One interesting thing to note is that, as previously mentioned, the minimax algorithm assumes that the adversaries, the ghosts, will make the optimal move, which would be to come after Pac-Man. However, in our tests the ghosts did not move optimally; instead, each selected a random move from the legal moves available at its position. This produces some interesting scenarios. When enough depth is explored that Pac-Man believes its death is inevitable because of the ghosts' proximity, Pac-Man (operating under the minimax algorithm) will commit suicide as quickly as possible in order to maximize the final score. This is obviously not the ideal decision, as there is a chance that the ghosts will not make the optimal decisions and will not converge upon Pac-Man.

3.3 Expectimax Agent

To solve the problem of Pac-Man not taking into account the random nature of the ghosts, the minimax function had to be altered so that it no longer looks at the worst case, but instead at the average case. When applying this algorithm to Pac-Man, the utilityFunction from the reflex agent is once again used to evaluate the game states. On Pac-Man's turn, expectimax functions as minimax would, returning the maximum utility; on the ghosts' turns, however, it no longer looks for the worst case for Pac-Man (the best case for the ghosts), but for the average case. To do this, the probabilities of the moves occurring must be taken into account. In the minimax algorithm, the levels of the game tree alternated between maximizing the player's moves and minimizing the ghosts' moves until the depth limit was reached. With expectimax, chance nodes take the place of the minimizing nodes, so that instead of taking the minimum of the utility values, as was done in minimax, we compute expected utilities from the move probabilities. The ghosts are no longer thought of as agents trying to minimize Pac-Man's score, but rather as part of the environment.

The time complexity of O(b^d) is the same as for minimax, where b^d represents the number of game states evaluated by the utility function. As in minimax, b (the branching factor) represents the number of game states per depth (in Pac-Man, 3-5 Pac-Man successor states multiplied by 4-16 ghost successor game states), and d represents depth.

Expectimax pseudocode for Pac-Man:

```
def value(state):
    if the state is a terminal state or the depth limit is reached:
        return the state's utility
    if the next agent is Max:
        return max-value(state)
    if the next agent is Exp:
        return exp-value(state)

def max-value(state):
    v = -infinity
    for each successor of state:
        v = max(v, value(successor))
    return v

def exp-value(state):
    v = 0
    for each successor of state:
        p = probability(successor)   # 1 / number of available moves
        v += p * value(successor)
    return v
```

Upon inspection of this pseudocode, one can see the similarities between minimax and expectimax.
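A minimal runnable sketch of the two listings, under simplifying assumptions that are not from the paper: a single ghost, a hand-built game tree whose leaves stand for terminal or depth-limit states, and made-up utilities. The ghost's node is scored either with min or with a uniform average, and on this tree that choice changes Pac-Man's decision: minimax avoids the risky move because it assumes the ghost always picks the worst outcome, while expectimax, averaging over a randomly moving ghost, takes it.

```python
from statistics import mean
from typing import Dict, Union

# A node is a leaf utility or a mapping from move name to child node.
Node = Union[float, Dict[str, "Node"]]


def value(node: Node, agent: int, mode: str) -> float:
    """Game value of a node; leaves are terminal or depth-limit states.

    agent 0 is Pac-Man (max); agent 1 is a single ghost, scored with min
    under "minimax" or with a uniform average under "expectimax".
    """
    if not isinstance(node, dict):
        return float(node)
    child_values = [value(child, 1 - agent, mode) for child in node.values()]
    if agent == 0:
        return max(child_values)
    if mode == "minimax":
        return min(child_values)
    return mean(child_values)  # each ghost move has probability 1 / number of moves


def best_move(root: Dict[str, Node], mode: str) -> str:
    """Pac-Man picks the move whose ghost-response node has the highest value."""
    return max(root, key=lambda move: value(root[move], 1, mode))


# Hypothetical utilities: after "A" the ghost may catch Pac-Man (-500) or not (1000);
# after "B" the outcome is safe but poor.
toy = {
    "A": {"ghost_left": -500.0, "ghost_right": 1000.0},
    "B": {"ghost_left": -10.0, "ghost_right": 0.0},
}
print(best_move(toy, "minimax"))     # B
print(best_move(toy, "expectimax"))  # A
```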
The only clear difference is that, instead of the minimizing function previously used for the adversary agents (the ghosts), there is an expected-value function that considers the probabilities of each ghost's potential positions. As in minimax, the branching factor in the time complexity comes from the for loops that explore each agent's possible moves. Depth again comes into play through recursion, which exits upon reaching the required depth or a terminal state.

3.4 Q-Learning

Q-learning is a model-free reinforcement learning technique. Reinforcement learning in computing is inspired by behavioral psychology and the idea of reinforcement, in which behavior is changed by rewards or punishments. In computing, the idea is to have an agent choose actions that maximize its reward. Q-learning uses this idea to find an optimal decision-making policy for any given Markov decision process (MDP). An MDP is a mathematical framework for making decisions in which outcomes are determined partly by randomness and partly by the decision maker's choices. An MDP can be described as follows: at each time step of a decision-making process, the process is in some state $s$, and the decision maker may choose any action $a$ available in $s$. Once an action is chosen, the process moves to the next time step and randomly reaches a new state $s'$, and the decision maker receives a corresponding reward defined by the function $R_a(s, s')$. The probability of reaching $s'$ is influenced by the chosen action and is given by the probability function $P_a(s, s')$.

With standard Q-learning, each state is a snapshot of the game that records the absolute positions and features of the game state (e.g., the ghosts are at position [3,4], not one move away from Pac-Man). This works for smaller problem sizes, because every state can be visited often enough to determine which move from that state has a high utility.
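The text does not spell out the update rule, so the following sketch uses the standard Q-learning update, $Q(s,a) \leftarrow Q(s,a) + \alpha\,[r + \gamma \max_{a'} Q(s',a') - Q(s,a)]$, over exact game-state snapshots. It is a generic illustration, not the authors' implementation; the learning rate `alpha`, discount `gamma`, fixed action list, and tuple-of-positions state encoding are all assumptions.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple


class TabularQ:
    """Standard (tabular) Q-learning keyed on exact states."""

    def __init__(self, actions: List[str], alpha: float = 0.5, gamma: float = 0.9):
        self.q: Dict[Tuple[Hashable, str], float] = defaultdict(float)  # unseen pairs start at 0.0
        self.actions = actions
        self.alpha = alpha
        self.gamma = gamma

    def best_value(self, state: Hashable) -> float:
        return max(self.q[(state, a)] for a in self.actions)

    def update(self, state, action, reward, next_state, terminal=False):
        # Target: reward plus the discounted value of the best next action (none if terminal).
        target = reward if terminal else reward + self.gamma * self.best_value(next_state)
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])

    def policy(self, state: Hashable) -> str:
        return max(self.actions, key=lambda a: self.q[(state, a)])


# Hypothetical state: (Pac-Man position, ghost position) as absolute coordinates.
agent = TabularQ(["North", "South", "East", "West", "Stop"])
s = ((1, 1), (3, 4))
agent.update(s, "East", reward=10.0, next_state=((2, 1), (3, 4)))
print(agent.q[(s, "East")])  # 5.0
print(agent.policy(s))       # East
```

Because the table is keyed on exact positions, a situation already learned at one set of coordinates starts from zero at any other, which is the scaling problem discussed in this section.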
A standard Q-learning state takes into account the absolute positions of the food, the ghosts, and Pac-Man. However, this is inefficient for larger problem sizes because of the large number of potential states that Pac-Man has to visit to train properly for every scenario. Another consideration is that Pac-Man might learn to avoid certain areas because visiting them is inefficient, leaving Pac-Man with little training when it does end up in one of those areas. To combat this flaw, we modified standard q-learning into an approximate q-learning algorithm. In approximate q-learning, instead of learning from each exact coordinate state, the algorithm generalizes the scenario. Some factors to consider for Pac-Man are the vicinity of dots and ghosts and relative position. Relative position, in addition to the actual coordinates, is used to create a more efficient route while pursuing dots and avoiding ghosts, optimizing traversal of the game board. Approximate q-learning accounts for state factors similar to those used by the reflex agent. It is more sophisticated than the basic reflex agent because, through trial and error, the algorithm converges upon the optimal weight values for the different state factors.

The q-learning algorithm must be trained in order to learn the best way to play Pac-Man. After the training sessions are done, the values are stored in a table. This allows Pac-Man to look up the state and move, consider the features of the game state (e.g., there is a ghost directly east of Pac-Man), and then perform a move. Very little on-the-fly calculation is performed compared with the other algorithms. The time complexity is also treated slightly differently than for the other algorithms: our evaluations compare only the performance of the algorithms in terms of playing time, not time taken to train.
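Approximate q-learning is commonly formulated (for example, in the Berkeley CS 188 projects) as a linear function of features, $Q(s,a) = \sum_i w_i f_i(s,a)$, with each weight updated by $w_i \leftarrow w_i + \alpha \cdot \text{difference} \cdot f_i(s,a)$, where $\text{difference} = r + \gamma \max_{a'} Q(s',a') - Q(s,a)$. The sketch below follows that formulation; the feature names, the integer "distance to nearest ghost" state, and the parameter values are hypothetical, and it is not necessarily how the authors stored their learned values.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, List

Features = Dict[str, float]


class ApproximateQ:
    """Linear approximate Q-learning: Q(s, a) = sum_i w_i * f_i(s, a)."""

    def __init__(self, feature_fn: Callable[[Hashable, str], Features],
                 actions_fn: Callable[[Hashable], List[str]],
                 alpha: float = 0.5, gamma: float = 0.9):
        self.weights: Dict[str, float] = defaultdict(float)  # shared across all states
        self.feature_fn = feature_fn   # (state, action) -> {feature name: value}
        self.actions_fn = actions_fn   # state -> legal actions
        self.alpha = alpha
        self.gamma = gamma

    def q_value(self, state, action) -> float:
        return sum(self.weights[name] * v for name, v in self.feature_fn(state, action).items())

    def value(self, state) -> float:
        return max((self.q_value(state, a) for a in self.actions_fn(state)), default=0.0)

    def update(self, state, action, reward, next_state) -> None:
        difference = reward + self.gamma * self.value(next_state) - self.q_value(state, action)
        for name, v in self.feature_fn(state, action).items():
            self.weights[name] += self.alpha * difference * v


def toy_features(ghost_distance: int, action: str) -> Features:
    # Hypothetical features: a bias term and whether a ghost is within one step.
    return {"bias": 1.0, "ghost-near": 1.0 if ghost_distance <= 1 else 0.0}


agent = ApproximateQ(toy_features, lambda s: ["North", "South"])
agent.update(1, "North", reward=-500.0, next_state=2)
print(dict(agent.weights))  # {'bias': -250.0, 'ghost-near': -250.0}
```

Because the weights attach to features rather than coordinates, the penalty learned in one situation carries over to every state with a ghost within one step: `agent.q_value(0, "South")` is already -500.0 without that state ever having been visited.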
Since only playing time is compared, q-learning has a time complexity of O(1) per move, because it uses a lookup table to determine the optimal move.

4. Results

(See the Appendix section for pertinent graphs and tables.)

In our tests, we ran each algorithm for a total of one thousand test trials. We ran the minimax and expectimax algorithms at depths two, three, and four to determine how performance changed as more depths were evaluated. From this point forward, minimax depth two, expectimax depth three, etc. will be referred to as minimax two and expectimax three, respectively. For q-learning we ran the same number of test runs; however, we also varied the training by running the algorithm four separate times, with fifty, one hundred, five hundred, and one thousand training trials, to see how the results differ.

Shockingly, the reflex agent lost every game. We found that the reflex agent failed as often as it did because the utility evaluation function was not as well suited to the reflex agent as it was to the other algorithms. Ultimately, we decided to accept this, because for the purposes of this class the utility evaluation function itself is not important. What matters is using the same evaluation function for all the algorithms, so that the algorithms themselves are compared rather than the evaluation function.

Within the set of minimax tests, minimax two had the highest average score; however, its win percentage was one percentage point lower than that of minimax four (0.672 vs. 0.682). Because minimax depends on the adversary taking the optimal move, it is logical that minimax two had such a high score: whenever a ghost does not take the optimal move, the accuracy of the evaluation is disrupted, and as the depth increases, the potential for ghosts to deviate from optimal play increases. It is therefore surprising that minimax four had the highest win percentage.
As the depth increases, the win percentage does not increase consistently; going from depth two to depth three it actually decreases, which is most likely due to randomness, and with more trials we would not expect this trend to continue. Interestingly, as the depth increases, the average number of moves per game increases (189.0, 322.4, and 411.3 moves for minimax two, three, and four). As the depth increases, the in-game time per move stays the same, because one unit of in-game time consists of Pac-Man and all of the ghosts moving once; however, the time it takes the algorithm to evaluate the possible moves increases. Depth three takes approximately 3 times longer than depth two, and depth four takes approximately 5 times longer than depth three, which is consistent with the exponential growth with depth predicted by our theoretical time complexity (Appendix Item D).

Within the set of expectimax tests, unlike minimax, expectimax four had both the highest score and the highest win percentage of the group. Expectimax four also had the lowest move-count standard deviation among the expectimax runs, showing that it performed more consistently than expectimax at other depths. When comparing the different depths of expectimax, the greatest change was from depth two to depth three. The same holds for the standard deviation of the move count, since expectimax two had the greatest standard deviation. The transition from expectimax three to four produced smaller changes in win percentage, average score, and move standard deviation. Although in-game performance improves as depth increases, the real time (seconds, minutes, etc., as experienced by a human) needed to calculate a move also increases. Going from depth two to depth three takes approximately three times as long per move on average, whereas going from depth three to depth four takes approximately five times as long.
This matches the time complexity stated previously, in which an increase in depth causes an exponential increase in the number of game states explored and in the time taken; additional depths will require still more time (Appendix Item E).

In the q-learning tests, we varied the number of training games: 50, 100, 500, and 1000. Q-learning with 500 training games performed best, with the highest score, highest win percentage, and lowest standard deviation. However, it did not have the lowest average number of moves; q-learning 100 took the fewest moves on average. Because q-learning calculates utility the same way regardless of training and does not rely on a search depth, it requires the same time per move regardless of the number of training games, as expected. Given the nature of q-learning, one would expect play to improve as the number of training sessions increases, because the weights of the state evaluators converge upon the optimal values. However, when q-learning was run with 1000 training sessions, it did not perform better than with 500 training games and actually performed worse.

When the algorithms are compared with each other rather than with their sister variants (e.g., expectimax depth 2 vs. expectimax depth 4, or q-learning with 50 training games vs. q-learning with 100 training games), the strengths of each can be compared more effectively. Comparing the best configuration of each algorithm (and depth, where appropriate), expectimax depth 4 performed best when judged by win percentage and average score. This illustrates how brute force can be leveraged to compute every combination to a certain depth and evaluate how the future might unfold. This depth of evaluation comes at a cost, because expectimax depth 4 takes the longest time per move.
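The per-move timing ratios discussed in this section can be recomputed directly from the average move times reported in Appendix (A). The short sketch below does only that arithmetic and adds no new measurements; the dictionary keys simply mirror the table's row labels.

```python
# Average move times copied from Appendix (A); units as reported in the table.
avg_move_time = {
    "Minimax 2": 0.004229125,
    "Minimax 3": 0.012895651,
    "Minimax 4": 0.061221232,
    "ExpectiMax 2": 0.016378297,
    "ExpectiMax 3": 0.048551765,
    "ExpectiMax 4": 0.234599232,
    "Qlearn 500": 0.000504648,
}


def ratio(slower: str, faster: str) -> float:
    """How many times longer `slower` takes per move than `faster`."""
    return avg_move_time[slower] / avg_move_time[faster]


for slow, fast in [("Minimax 3", "Minimax 2"), ("Minimax 4", "Minimax 3"),
                   ("ExpectiMax 3", "ExpectiMax 2"), ("ExpectiMax 4", "ExpectiMax 3"),
                   ("ExpectiMax 4", "Qlearn 500")]:
    print(f"{slow} / {fast}: {ratio(slow, fast):.2f}")
```

The ratios come out to roughly 3.0 and 4.7 for minimax, 3.0 and 4.8 for expectimax, and about 465 for expectimax four relative to q-learning with 500 training games.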
If the move times of the best algorithm in each subset other than expectimax are summed, expectimax depth 4 still takes over five times as long. Overall game performance comes at a cost; however, if one is willing to sacrifice 0.006 in win rate (0.921 vs. 0.927) and accept a somewhat lower score, q-learning offers the best trade-off of all the subsets. Considering the in-game trade-off against time taken, q-learning calculates moves almost 500 times faster than expectimax depth 4. Q-learning is even faster than the reflex agent, because q-learning looks up the utilities in a table and performs very little calculation when playing a test game. Another consideration is that q-learning with 500 training sessions had the smallest standard deviation in moves per game, which illustrates how consistently q-learning reaches the desired goal.

5. Conclusions and Future Considerations

Based on our results and the time complexities involved in obtaining them, we can draw a few overall conclusions. First, randomness clearly mattered within the context of Pac-Man, as there appears to be a large discrepancy between the results of the minimax and expectimax algorithms. If we were to do this experiment again, it would be beneficial to run the same algorithms on a game board where the ghosts do, in fact, move optimally, to see what differences could be found.

Another conclusion drawn from this project is the noticeable power of a properly trained q-learning algorithm. Considering both the time taken to make decisions and the results, q-learning was far and away the best algorithm: it obtained results similar to expectimax depth four in a fraction of the time. For future problems similar in nature to the one explored in this paper, it would be wise to apply the q-learning algorithm.

There are a couple of concerns worth mentioning regarding this project.
First, the reflex agent could have done much better than it did with a different utility function, but we wanted consistency across algorithms so that the algorithms themselves could be compared. If we were to do this project again, we would try to find an evaluation function that works well for all algorithms, including the reflex agent. In addition, developing a better utility function in general would have been a good idea. A utility function is fairly subjective, because the programmer decides which states are desirable, and these subjective choices may not have been ideal for winning at Pac-Man. Many of the decisions involving the utility function were made by trial and error until Pac-Man's behavior matched our preferences for how to play the game. Looking back on this project, there is probably a better way to design a utility function.

If we were to do this project again, it would also be worth exploring greater depths of minimax and expectimax. Because of computing limitations and the number of trials needed to draw conclusions, we could only reasonably explore up to depth four for both algorithms. It would be interesting to see how close to a perfect 100% win rate we could get by investigating larger depths. The same goes for q-learning: it would be interesting to see how close to perfection we could get by adding more training games.
Appendix

(A) Results summary

| Algorithm | Avg Move Time | Avg # Moves | Avg Score | Win % (fraction) | Move STD |
|---|---|---|---|---|---|
| Reflex | 0.001919824 | 68.144 | -445.024 | 0 | 52.22509669 |
| Minimax 2 | 0.004229125 | 189.043 | 975.877 | 0.672 | 81.1781562 |
| Minimax 3 | 0.012895651 | 322.443 | 801.657 | 0.648 | 151.2438741 |
| Minimax 4 | 0.061221232 | 411.292 | 818.098 | 0.682 | 193.9309773 |
| ExpectiMax 2 | 0.016378297 | 168.175 | 1223.215 | 0.836 | 60.59668583 |
| ExpectiMax 3 | 0.048551765 | 192.308 | 1283.672 | 0.915 | 54.26267201 |
| ExpectiMax 4 | 0.234599232 | 200.735 | 1305.865 | 0.927 | 52.37050166 |
| Qlearn 50 | 0.000527238 | 132.411 | 1191.929 | 0.9 | 29.7713354 |
| Qlearn 100 | 0.000494471 | 129.223 | 1214.287 | 0.91 | 25.68413802 |
| Qlearn 500 | 0.000504648 | 130.534 | 1230.316 | 0.921 | 24.23758041 |
| Qlearn 1000 | 0.000488254 | 130.6677632 | 1205.631579 | 0.90296053 | 25.28541812 |
| Qlearn 50 w/ Train* | 0.001037794 | | 1191.929 | 0.9 | 0 |
| Qlearn 100 w/ Train* | 0.000993991 | | 1214.287 | 0.91 | 0 |
| Qlearn 500 w/ Train* | 0.000958897 | | 1230.316 | 0.921 | 0 |
| Qlearn 1000 w/ Train* | 0.000942771 | | 1205.631579 | 0.90296053 | 0 |

(B) Figure: Win %

(C) Figure: Avg Move Time

(D) Figure: Avg Move Time for MiniMax (Minimax 2, Minimax 3, Minimax 4)

(E) Figure: Avg Move Time for Expectimax (ExpectiMax 2, ExpectiMax 3, ExpectiMax 4)

Pseudocode

Pseudocode for utility function:

```
def utilityFunction(gamestate):
    moveScore = 0
    moveScore -= ghostDistance[0] * 5     # how much Pac-Man wants to avoid ghosts
    moveScore += 100 if Eat Pellet        # eat-pellet reward
    return 10000 if Win State             # a win is the best result
    moveScore += 100 if Powerpellet       # eat-power-pellet reward
    moveScore += random.random() * 10     # avoid a flip-flop state when move scores are equal
    return moveScore
```

Pseudocode for the reflex agent for the Pac-Man problem:

```
maxScore = -infinity
for move in PacManLegalMoves:
    score = utilityFunction(successor state of move)   # evaluate once: the score includes random noise
    if score > maxScore:
        maxScore = score
        currentMoveChosen = move
```

Minimax pseudocode for Pac-Man:

```
def value(state):
    if the state is a terminal state or the depth limit is reached:
        return the state's utility
    if the next agent is max (Pac-Man):
        return max-value(state)
    if the next agent is min (a ghost):
        return min-value(state)

def max-value(state):
    initialize v = -infinity
    for each successor of state:
        v = max(v, value(successor))
    return v

def min-value(state):
    initialize v = +infinity
    for each successor of state:
        v = min(v, value(successor))
    return v
```

Expectimax pseudocode for Pac-Man:

```
def value(state):
    if the state is a terminal state or the depth limit is reached:
        return the state's utility
    if the next agent is Max:
        return max-value(state)
    if the next agent is Exp:
        return exp-value(state)

def max-value(state):
    v = -infinity
    for each successor of state:
        v = max(v, value(successor))
    return v

def exp-value(state):
    v = 0
    for each successor of state:
        p = probability(successor)   # 1 / number of available moves
        v += p * value(successor)
    return v
```

References

Sutton, R., & Barto, A. Reinforcement Learning: An Introduction. Retrieved November 2, 2015, from https://webdocs.cs.ualberta.ca/~sutton/book/ebook/the-book.html

Tic Tac Toe: Understanding The Minimax Algorithm. Retrieved October 2, 2015, from http://neverstopbuilding.com/minimax

UC Berkeley CS188 Intro to AI -- Course Materials. Retrieved September 1, 2015, from http://ai.berkeley.edu/home.html